Hello @srigowri,

Thanks for the question and for using the MS Q&A platform.

Could you please confirm whether you are using a dedicated SQL pool or a serverless SQL pool?

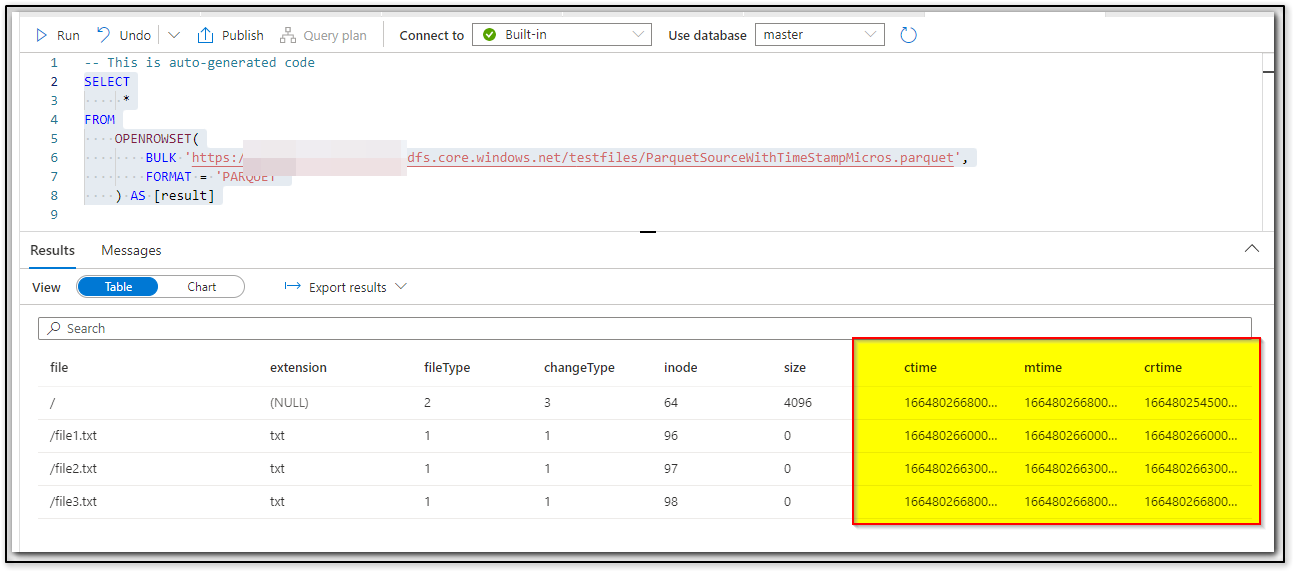

I downloaded the file you provided and tested it with a serverless SQL pool. It works without any issue, and I don't see any errors.

In a dedicated SQL pool, however, the data type does not appear to be supported. As a workaround, would it be possible for you to use the COPY INTO command in a stored procedure? Using COPY INTO appears to avoid the error message.
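To show what such a stored procedure might look like, here is a minimal sketch. It assumes a Parquet source file and a dedicated SQL pool whose managed identity can read the storage account. Python is used only to assemble the T-SQL text. The procedure name, table name, and storage URL are hypothetical placeholders, and you would run the generated script against your dedicated SQL pool with whatever client you already use. If you authenticate with a SAS token or storage key instead, the `CREDENTIAL` clause would need to change.

```python
# Hypothetical helper: builds the T-SQL for a stored procedure that loads a file
# into a dedicated SQL pool table with COPY INTO. All names are placeholders.

# FILE_TYPE values accepted by COPY INTO.
ALLOWED_FILE_TYPES = {"CSV", "PARQUET", "ORC"}


def quote_literal(value: str) -> str:
    """Return a T-SQL string literal, doubling any embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def build_copy_into_procedure(proc_name: str, target_table: str,
                              source_url: str, file_type: str = "PARQUET") -> str:
    """Return CREATE PROCEDURE text that wraps a single COPY INTO statement.

    Assumes the pool's managed identity can read the storage location.
    """
    file_type = file_type.upper()
    if file_type not in ALLOWED_FILE_TYPES:
        raise ValueError(f"unsupported FILE_TYPE: {file_type}")
    return "\n".join([
        f"CREATE PROCEDURE {proc_name}",
        "AS",
        "BEGIN",
        f"    COPY INTO {target_table}",
        f"    FROM {quote_literal(source_url)}",
        "    WITH (",
        f"        FILE_TYPE = {quote_literal(file_type)},",
        "        CREDENTIAL = (IDENTITY = 'Managed Identity')",
        "    );",
        "END;",
    ])


if __name__ == "__main__":
    # Placeholder values; replace them with your own objects and storage path.
    print(build_copy_into_procedure(
        "dbo.usp_LoadMyTable",
        "dbo.MyTable",
        "https://<account>.blob.core.windows.net/<container>/<folder>/*.parquet",
    ))
```

Once the procedure exists in the pool, `EXEC dbo.usp_LoadMyTable;` would run the load. Keeping the COPY INTO statement inside a procedure lets you schedule or re-run the load without retyping it.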

Hope this info helps. Please let us know how it goes.

Thank you