Thanks for the follow-up, Junjie.

I will work on a code sample to share with you, but it is not that easy, as it is a fairly complex 4,500-line program.

I can share more details right now, although I am not sure they are all related to the issue.

The program reads the input file sequentially in 12 KB buffers and, for each buffer, creates a new file holding the input data, compressed or not (that is, compressed only if the data actually compresses).

It also computes a checksum of each input buffer and writes it (actually its hexadecimal representation, encoded in ASCII) to a single global file that holds the checksum list for the whole input file.
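The block-file loop described above can be sketched in portable C as follows. This is a minimal sketch, not the actual 4,500-line program: the function names, the "<prefix>NNNNNN.bin" naming scheme and the FNV-1a checksum are assumptions for illustration (the real checksum algorithm is not stated), and compression is left out.

```c
#include <stdint.h>
#include <stdio.h>

#define BLOCK_SIZE (12 * 1024) /* 12 KiB per block, as in the description */

/* 32-bit FNV-1a: a stand-in checksum (the real algorithm is not stated). */
static uint32_t fnv1a32(const unsigned char *p, size_t n)
{
    uint32_t h = 2166136261u;
    for (size_t i = 0; i < n; i++) {
        h ^= p[i];
        h *= 16777619u;
    }
    return h;
}

/* Hypothetical helper: reads input_path sequentially in BLOCK_SIZE chunks,
   writes each chunk to its own file "<prefix>NNNNNN.bin", and appends the
   chunk's checksum as 8 ASCII hex digits plus a newline to list_path.
   Compression is omitted. Returns the number of blocks written, or -1. */
static long split_file(const char *input_path, const char *prefix,
                       const char *list_path)
{
    unsigned char buf[BLOCK_SIZE];
    char name[512];
    long blocks = 0;
    size_t n;

    FILE *in = fopen(input_path, "rb");
    if (!in) return -1;
    FILE *list = fopen(list_path, "w");
    if (!list) { fclose(in); return -1; }

    while ((n = fread(buf, 1, sizeof buf, in)) > 0) {
        snprintf(name, sizeof name, "%s%06ld.bin", prefix, blocks);
        FILE *out = fopen(name, "wb");
        if (!out) { blocks = -1; break; }
        size_t written = fwrite(buf, 1, n, out);
        /* After fclose, the data may still sit in the OS write cache. */
        if (fclose(out) != 0 || written != n) { blocks = -1; break; }
        fprintf(list, "%08lx\n", (unsigned long)fnv1a32(buf, n));
        blocks++;
    }
    if (blocks >= 0 && ferror(in)) blocks = -1;
    if (fclose(list) != 0) blocks = -1;
    fclose(in);
    return blocks;
}
```

For example, split_file("input.bin", "blk_", "checksums.txt") on a 30,000-byte input produces three block files (two of 12,288 bytes and one of 5,424 bytes) and three checksum lines.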

Regarding your test, the point is that the durations you report are too short to trigger the issue.

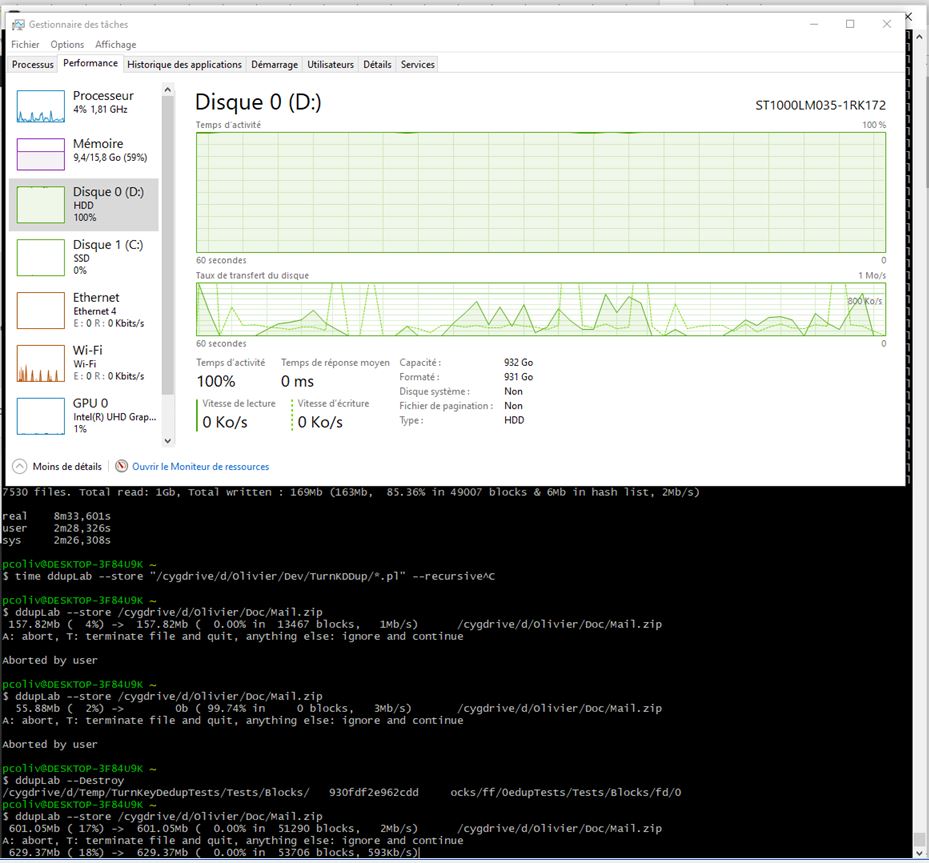

1/ Your test should run (or be repeated) for several minutes, or even hours, to trigger the issue. I suggest keeping the Task Manager disk performance tab open alongside to follow the transfer rate.

2/ Be aware that the issue is not predictable! I can run some tests many times over a day without experiencing it. Then it occurs in the middle of a test for no apparent reason, and it can persist for several hours or days. (Interrupting the program leaves the disk at a high rate for a long time, and once it is back to idle, relaunching the program quickly makes the issue happen again.)

Also, you do not mention the buffer sizes used in your test. May I suggest making sure you use random data, to avoid any potential "no_op" cache optimization.
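To combine both suggestions (long runs and random data), a stress writer could look like the sketch below. It is hypothetical: write_random_blocks, the file naming and the choice of Marsaglia's xorshift64 generator are made up for illustration and are not part of either test. Random bytes make every block distinct and practically incompressible, so compression or deduplication cannot shrink the writes.

```c
#include <stdint.h>
#include <stdio.h>

/* xorshift64 (Marsaglia): fast, non-cryptographic PRNG. State must be nonzero. */
static uint64_t xorshift64(uint64_t *state)
{
    uint64_t x = *state;
    x ^= x << 13;
    x ^= x >> 7;
    x ^= x << 17;
    return *state = x;
}

/* Fills buf with n pseudo-random bytes, advancing *state. */
static void fill_random(unsigned char *buf, size_t n, uint64_t *state)
{
    size_t i = 0;
    while (i < n) {
        uint64_t r = xorshift64(state);
        for (int k = 0; k < 8 && i < n; k++, i++)
            buf[i] = (unsigned char)(r >> (8 * k));
    }
}

/* Hypothetical repro driver: writes `count` files of `size` random bytes
   named "<prefix>NNNNNN.bin". Returns the number written, or -1 on error. */
static long write_random_blocks(const char *prefix, long count, size_t size,
                                uint64_t seed)
{
    unsigned char buf[64 * 1024];
    char name[512];
    uint64_t state = seed ? seed : UINT64_C(88172645463325252);

    if (size > sizeof buf) return -1;
    for (long i = 0; i < count; i++) {
        fill_random(buf, size, &state); /* fresh data for every block */
        snprintf(name, sizeof name, "%s%06ld.bin", prefix, i);
        FILE *f = fopen(name, "wb");
        if (!f) return -1;
        size_t written = fwrite(buf, 1, size, f);
        if (fclose(f) != 0 || written != size) return -1;
    }
    return count;
}
```

For a multi-hour run, the count would simply be made large, e.g. write_random_blocks("blk_", 1000000, 12 * 1024, 42), while watching the Task Manager disk tab.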

Last but not least, I work with a "real" hard disk, with heads and cylinders, not an SSD. I know that many articles suggest using an SSD. This is something I could personally do on my own, but it is not an acceptable answer for a customer who would get the issue with my program running on his computer!

Best regards

The 100% disk usage issue

Hi everybody!

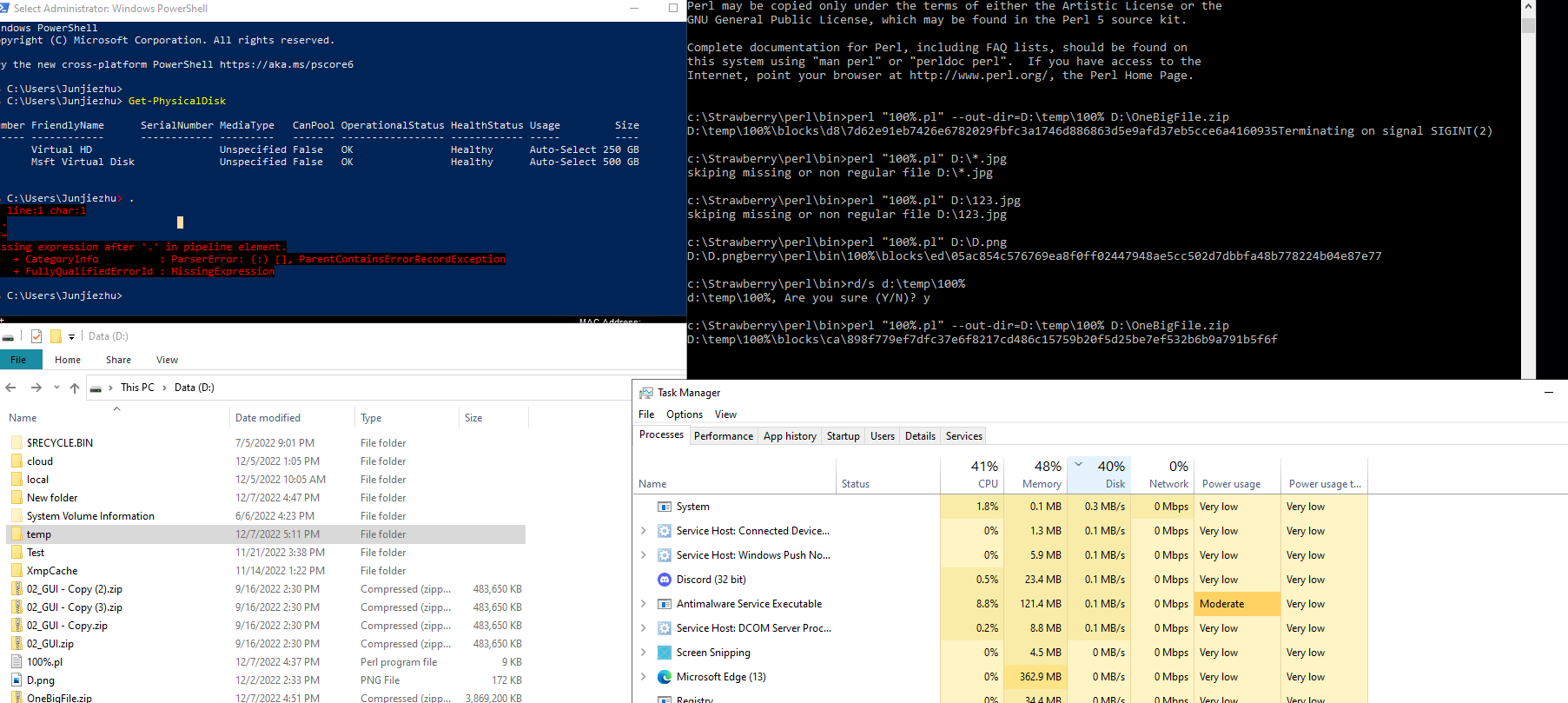

I am writing a "one-to-many files" kind of program (it creates many signed/encrypted block files from a single input file). I regularly run into the 100% disk usage issue, as shown in the Performance tab of Task Manager.

The program stalls and the system becomes unresponsive (unrelated programs running alongside too).

As far as I can see with Performance Monitor, the small block files I created stay "on air" long after I closed them, somehow "delegated" to the 'SYSTEM' process (PID 4), which I assume is the work of the system write cache (?)

The problem is that 'SYSTEM' seems to have no limit on how many of them it accumulates. It apparently does not take into account the free memory still available in the system, whereas one would expect it, at some point, to flush cache entries above a reasonable limit to recover free memory (and to avoid keeping unwritten data in memory for long!) (?)

Based on the report of the typeperf tool ("\LogicalDisk(_Total)\% Disk Time", which can report crazy values such as 2500!), I regularly stop my program's I/O requests and spawn the PowerShell cmdlet Write-VolumeCache to flush the cache, which does not require administrator privileges.
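The stop/resume logic described above can be expressed as a small hysteresis throttle. This is a hypothetical sketch: the two thresholds are made-up values, sampling the counter (for instance by parsing typeperf output) and spawning Write-VolumeCache are left out, and the two-threshold design is a common way to avoid toggling on every sample rather than something taken from the post.

```c
#include <stdbool.h>

/* Hypothetical thresholds for the "% Disk Time" counter. The counter is not
   capped at 100 (readings of 2500 were seen), so the pause level sits above 100. */
#define PAUSE_ABOVE  300.0
#define RESUME_BELOW  80.0

typedef struct {
    bool paused; /* true while the writer should hold back its I/O requests */
} io_throttle;

/* Feeds one counter sample; returns true if the writer may issue I/O now.
   Between the two thresholds the previous state is kept (hysteresis). */
static bool throttle_update(io_throttle *t, double disk_time_pct)
{
    if (!t->paused && disk_time_pct > PAUSE_ABOVE) {
        t->paused = true;  /* stop writing; a cache flush could be requested here */
    } else if (t->paused && disk_time_pct < RESUME_BELOW) {
        t->paused = false; /* the disk has drained enough; resume */
    }
    return !t->paused;
}
```

With these values, a sample of 2500 pauses the writer, a later sample of 150 keeps it paused, and only a sample below 80 lets it resume.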

Sometimes it works as expected: the disk activity drops after a few seconds, and my program gently keeps going. Other times, it does nothing: it returns after a long delay, with the disk still at 100% and the typeperf counter still very high, and it may stay stuck like that for a very long time. In that case, surprisingly, going into deep sleep mode and waking up after a few minutes can solve the problem (??). But not every time (and, anyway, that is not an acceptable workaround!)

Does anyone have suggestions to fix this issue? The small files are created with the Win32 default options. I tried using 'CACHE_THRU' options (that is, FILE_FLAG_NO_BUFFERING|FILE_FLAG_WRITE_THROUGH), and the issue no longer seems to occur, but performance then becomes unacceptable. What I need is a reasonable compromise between good performance, with the help of the disk cache, and responsiveness/reliability.
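One possible middle ground, not tried in the post and untested against this issue, is to keep normal cached writes but force every Nth block file to disk before closing it, which bounds how much unwritten data the program leaves in the cache. The sketch below uses fsync on POSIX and the MSVC CRT's _commit on Windows in place of the CreateFile flags; write_block and flush_every are hypothetical names, and a suitable N would have to be measured.

```c
#define _XOPEN_SOURCE 700
#include <stdio.h>

#ifdef _WIN32
#include <io.h>                 /* _commit */
#define FILE_FD(f)  _fileno(f)
#define SYNC_FD(fd) _commit(fd)
#else
#include <unistd.h>             /* fsync */
#define FILE_FD(f)  fileno(f)
#define SYNC_FD(fd) fsync(fd)
#endif

/* Hypothetical helper: writes one block file with ordinary cached I/O. Every
   flush_every-th file (by index) is forced to the device before closing;
   flush_every == 0 disables forced flushes.
   Returns 1 if the file was synced, 0 if not, -1 on error. */
static int write_block(const char *path, const void *data, size_t n,
                       unsigned long index, unsigned long flush_every)
{
    FILE *f = fopen(path, "wb");
    if (!f) return -1;

    int ok = fwrite(data, 1, n, f) == n;
    int synced = 0;
    if (ok && flush_every != 0 && (index + 1) % flush_every == 0) {
        /* Push stdio's buffer to the OS, then ask the OS to write it out. */
        ok = fflush(f) == 0 && SYNC_FD(FILE_FD(f)) == 0;
        synced = ok;
    }
    if (fclose(f) != 0) ok = 0;
    return ok ? synced : -1;
}
```

With flush_every set to 2, only every second file waits for the disk, so most writes still benefit from the cache; larger values trade more cached data for throughput.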

Thanks in advance - bye

Olivier


Olivier Delouya, 2022-11-21T11:20:05.017+00:00