@Cliff Clive Thanks for the question. Could you please share more details about the sample and the SDK version you are using?

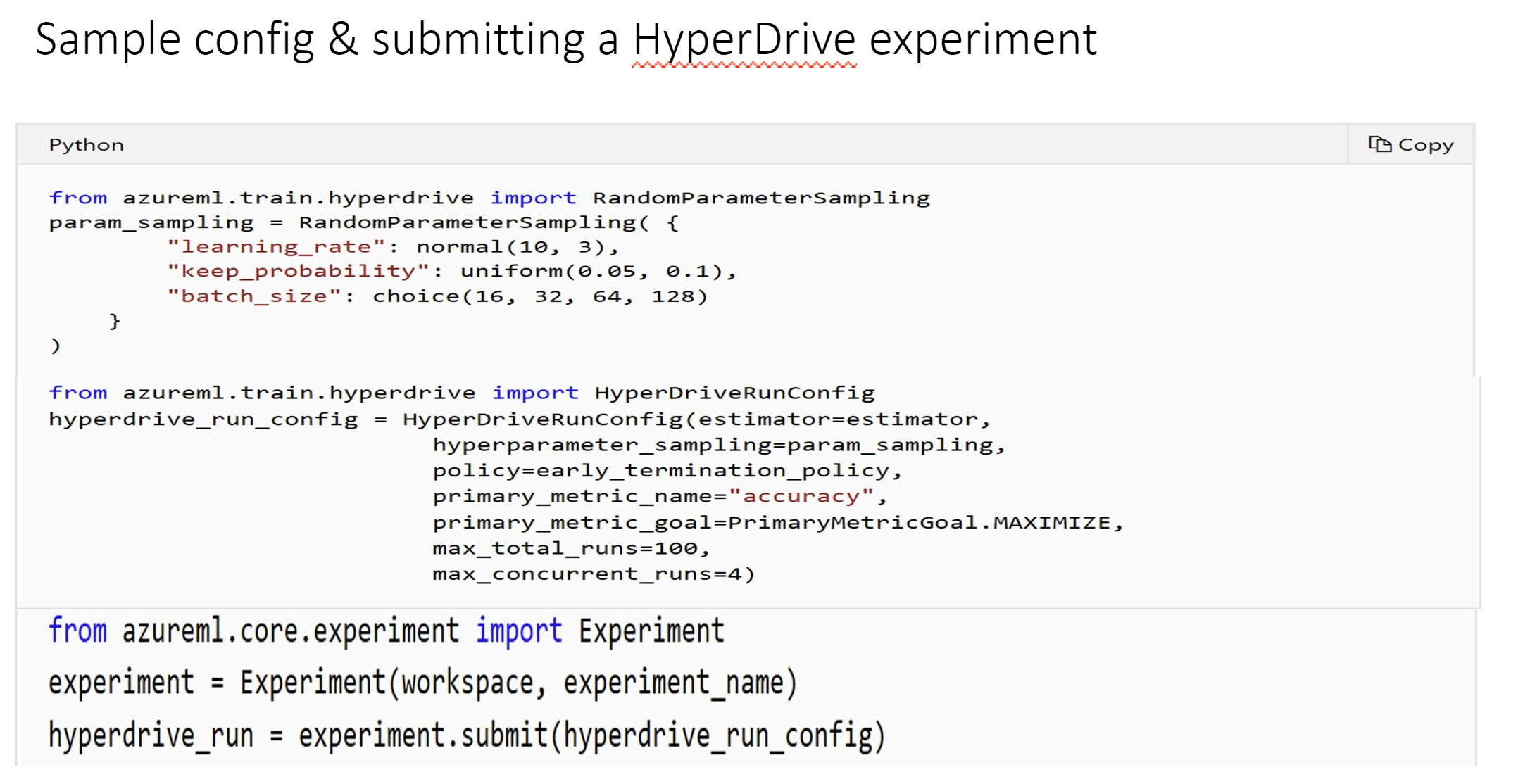

HyperDrive is an automated hyperparameter tuning system that helps users find better results in a cost-effective manner.

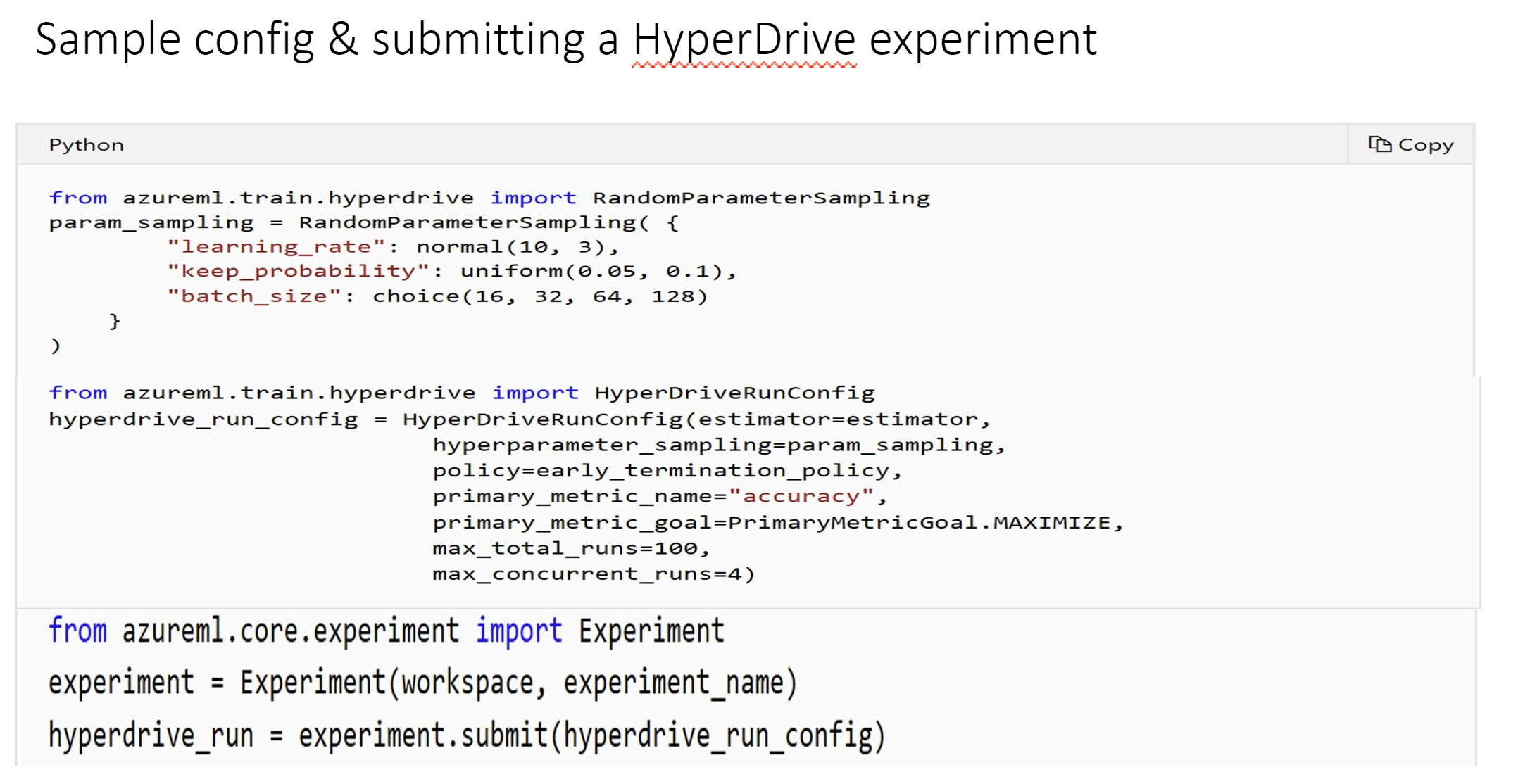

I'm trying to build a regression model pipeline using a HyperDriveStep for parameter tuning.

When I pass in my features dataset and targets dataset as named inputs and run this in a notebook with a fresh kernel, I get the error `ValueError: [features_data] is repeated. Input port names must be unique.` (See first screenshot)

If I comment out the features data input and re-run the cell, the HyperDriveStep builds with no issue. (But obviously I can't run the step and train my model without the features.) (See second screenshot)

If I uncomment the features data input and re-run the cell, the HyperDriveStep now builds with no issue, and I can run my pipeline. (See third screenshot)

Error report is as follows:

```
ValueError                                Traceback (most recent call last)
Input In [7], in <cell line: 25>()
     21 # Risk: need to ensure tha the model_file entered here is the same name
     22 # as what's saved in training/train_{model_algorithm}_regressor.py
     24 hd_step_name=f'hd_training_step_{segment_type}_{model_algorithm}'
---> 25 hd_step = HyperDriveStep(
     26     name=hd_step_name,
     27     hyperdrive_config=hd_config,
     28     inputs=[
     29         training_features.as_named_input('features_data'),
     30         training_targets.as_named_input('targets_data')],
     31     outputs=[metrics_data, saved_model],
     32     allow_reuse=False)

File /anaconda/envs/azureml_py38/lib/python3.8/site-packages/azureml/pipeline/steps/hyper_drive_step.py:249, in HyperDriveStep.__init__(self, name, hyperdrive_config, estimator_entry_script_arguments, inputs, outputs, metrics_output, allow_reuse, version)
    246 self._params[HyperDriveStep._primary_metric_goal] = hyperdrive_config._primary_metric_config['goal'].lower()
    247 self._params[HyperDriveStep._primary_metric_name] = hyperdrive_config._primary_metric_config['name']
--> 249 super(HyperDriveStep, self).__init__(name=name, inputs=inputs, outputs=outputs,
    250                                      arguments=estimator_entry_script_arguments)

File /anaconda/envs/azureml_py38/lib/python3.8/site-packages/azureml/pipeline/core/builder.py:149, in PipelineStep.__init__(self, name, inputs, outputs, arguments, fix_port_name_collisions, resource_inputs)
    146 resource_input_port_names = [PipelineStep._get_input_port_name(input) for input in resource_inputs]
    147 output_port_names = [PipelineStep._get_output_port_name(output) for output in outputs]
--> 149 PipelineStep._assert_valid_port_names(input_port_names + resource_input_port_names,
    150                                       output_port_names, fix_port_name_collisions)
    152 self._inputs = inputs
    153 self._resource_inputs = resource_inputs

File /anaconda/envs/azureml_py38/lib/python3.8/site-packages/azureml/pipeline/core/builder.py:190, in PipelineStep._assert_valid_port_names(input_port_names, output_port_names, fix_port_name_collisions)
    188 if input_port_names is not None:
    189     assert_valid_port_names(input_port_names, 'input')
--> 190     assert_unique_port_names(input_port_names, 'input')
    192 if output_port_names is not None:
    193     assert_valid_port_names(output_port_names, 'output')

File /anaconda/envs/azureml_py38/lib/python3.8/site-packages/azureml/pipeline/core/builder.py:184, in PipelineStep._assert_valid_port_names.<locals>.assert_unique_port_names(port_names, port_type, seen)
    182 for port_name in port_names:
    183     if port_name in seen:
--> 184         raise ValueError("[{port_name}] is repeated. {port_type} port names must be unique."
    185                          .format(port_name=port_name, port_type=port_type.capitalize()))
    186     seen.add(port_name)

ValueError: [features_data] is repeated. Input port names must be unique.
```
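The last two frames of the traceback show where the check fails: `PipelineStep.__init__` concatenates the input and resource-input port names (builder.py line 149), and `assert_unique_port_names` walks that combined list with a `seen` set (lines 182-186). The standalone sketch below reimplements only that visible logic for illustration; it is not the azureml code, and the `seen=None` default is an assumption (the traceback shows `seen` as a parameter but not its default). It suggests the error can only fire if `'features_data'` appears more than once in the combined list the step sees, rather than because of the name itself.

```python
# Illustrative reimplementation of the duplicate-name check visible in the
# traceback (builder.py lines 182-186). NOT the azureml source; the
# `seen=None` default is an assumption made so the sketch is self-contained.


def assert_unique_port_names(port_names, port_type, seen=None):
    """Raise ValueError if any port name appears more than once."""
    if seen is None:
        seen = set()
    for port_name in port_names:
        if port_name in seen:
            raise ValueError(
                "[{port_name}] is repeated. {port_type} port names must be unique."
                .format(port_name=port_name, port_type=port_type.capitalize())
            )
        seen.add(port_name)


# Two distinct names, as in the HyperDriveStep call, pass the check.
assert_unique_port_names(["features_data", "targets_data"], "input")

# The error only appears if the same name ends up in the combined list twice
# (hypothetically, once from `inputs` and once from resource inputs).
try:
    assert_unique_port_names(
        ["features_data", "targets_data", "features_data"], "input"
    )
except ValueError as err:
    print(err)  # [features_data] is repeated. Input port names must be unique.
```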