Hello community!

Reaching out for some help here.

ManagedOnlineDeployment vs KubernetesOnlineDeployment

Goal:

Host a large number of distinct models on Azure ML.

Description:

After a thorough investigation, I found that there are two ways to host a pre-trained model for real-time inference on Azure ML: ManagedOnlineDeployment and KubernetesOnlineDeployment.

Details:

- What I tried

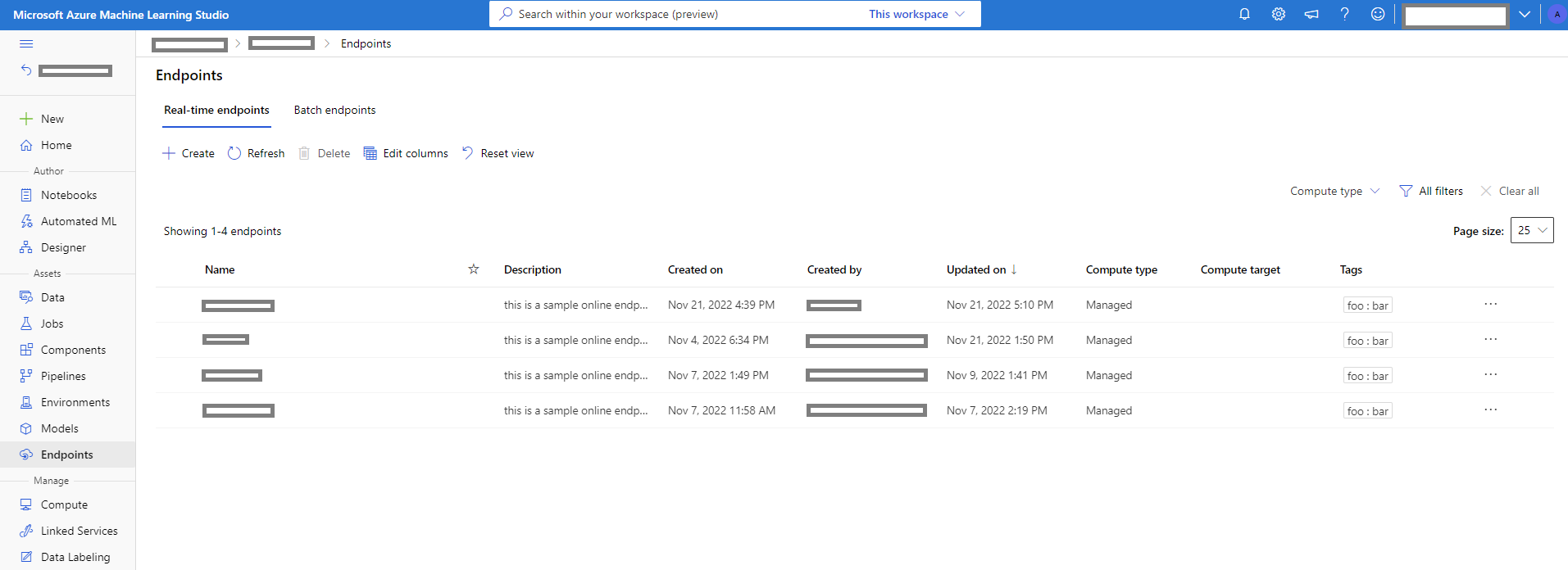

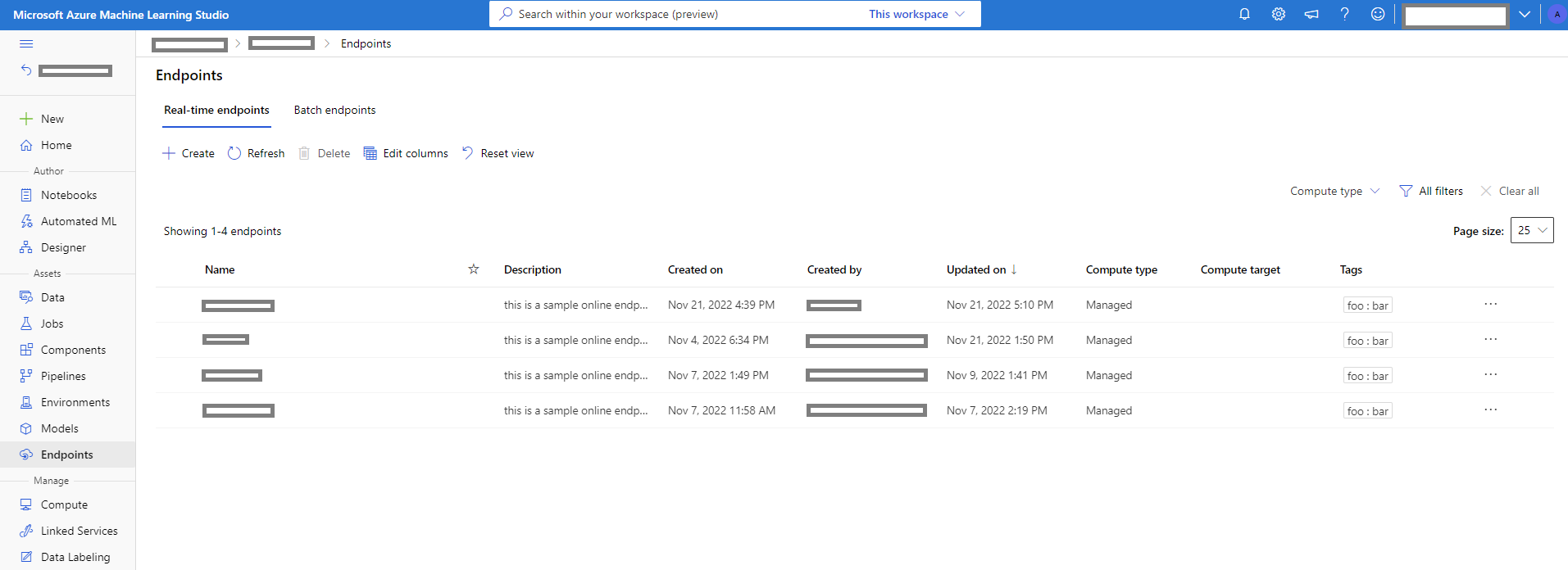

Creating 4 real-time endpoints left me with 4 running VMs. These endpoints use the curated environments provided by Microsoft.

- Issues

- Creating a custom environment from a Dockerfile and then using it as the base image for an endpoint is a long process:

Build Image > Push Image to CR > Create Custom Environment in AzureML > Create and Deploy Endpoint

If something goes wrong, I only find out after the whole pipeline has finished. It just doesn't feel like the correct way to deploy a model.

I need this process whenever I cannot use one of the curated environments because I need a dependency that cannot be installed through the conda.yml file.

For example:

RUN apt-get update -y && apt-get install build-essential cmake pkg-config -y

RUN python setup.py build_ext --inplace

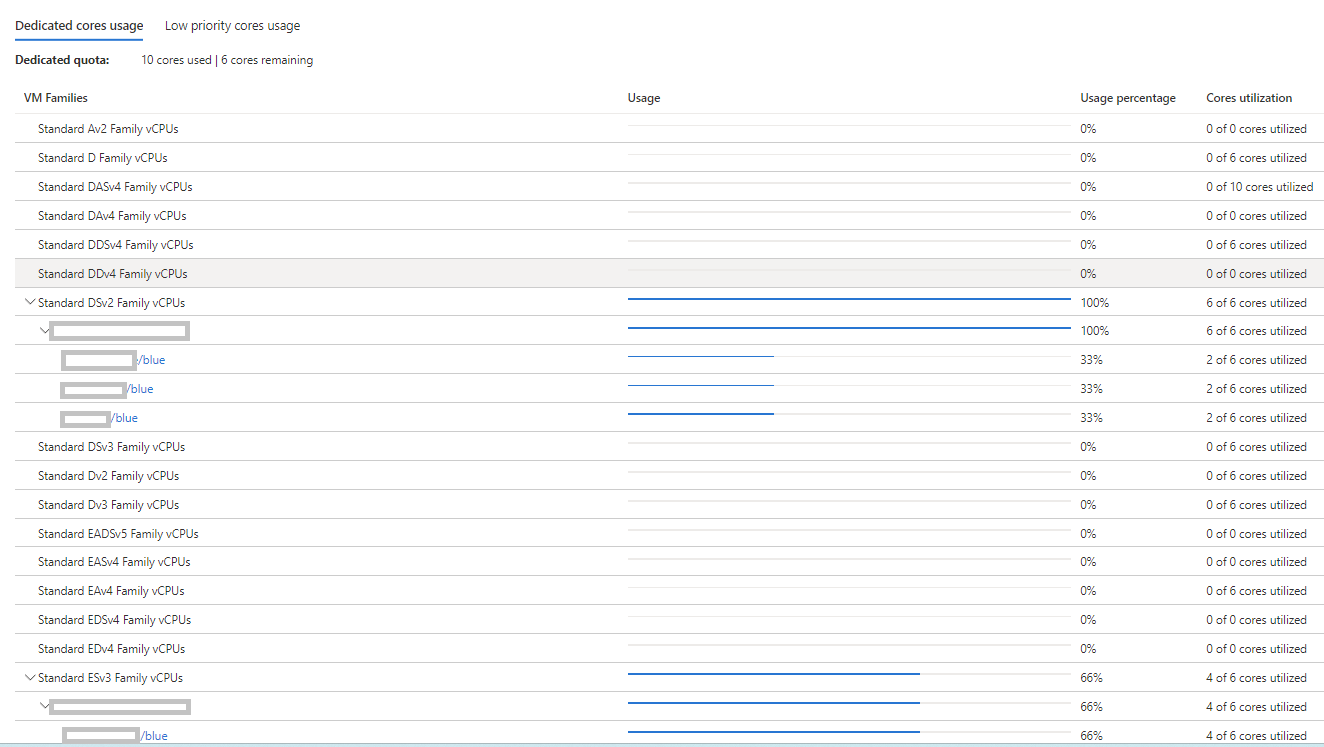

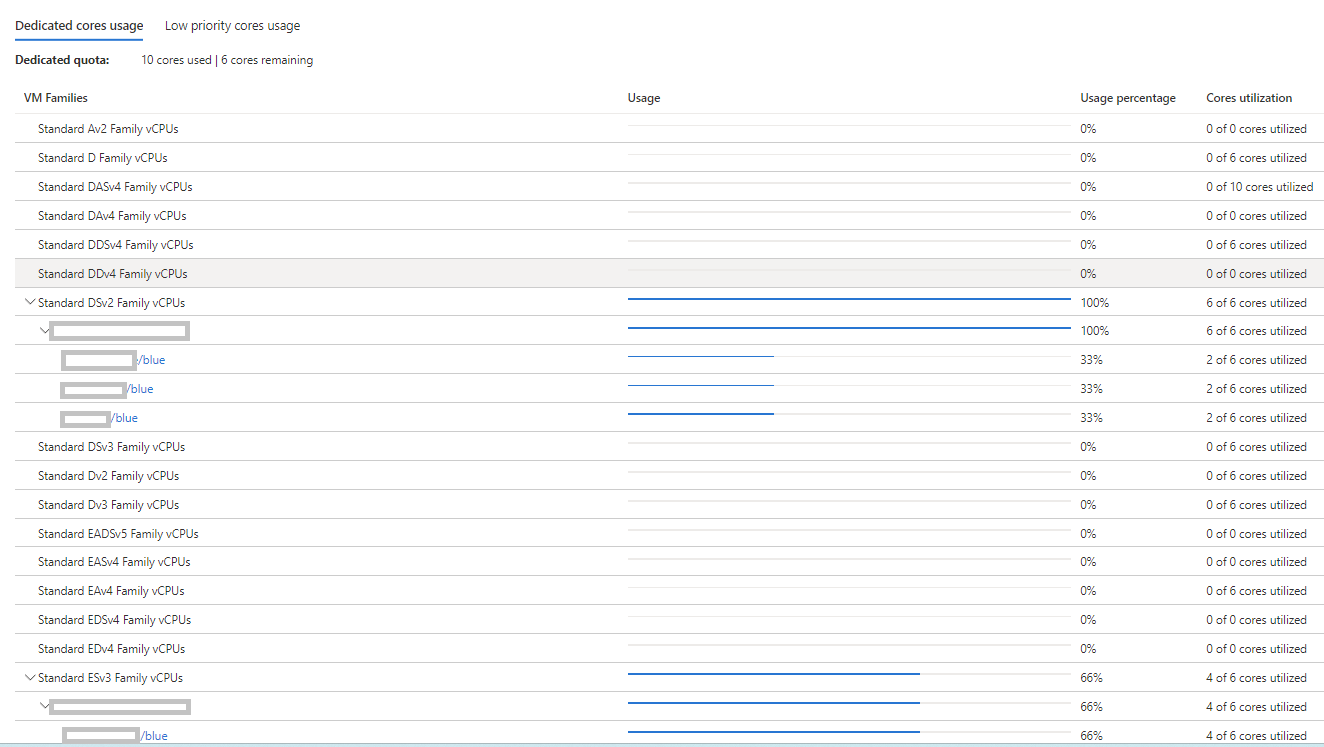

- Although I'm using 1 instance per endpoint (Instance count = 1), each endpoint creates its own dedicated VM, which will cost me a lot in the long run (i.e., when I have lots of endpoints). Right now it is costing me around $20 per day.

- Note: Each endpoint has a distinct set of dependencies/versions...
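To put the cost concern in numbers, here is a rough sketch. It assumes that the roughly $20 per day is the total for my 4 current endpoints and that cost grows linearly with the number of endpoints (one VM each). Both are assumptions on my side, and real pricing depends on the VM size and region. The function name `projected_daily_cost` is only illustrative.

```python
def projected_daily_cost(current_daily_cost: float,
                         current_endpoints: int,
                         target_endpoints: int) -> float:
    """Linearly extrapolate daily cost, assuming one dedicated VM per endpoint."""
    if current_endpoints <= 0:
        raise ValueError("current_endpoints must be positive")
    # Assumption: every endpoint costs the same amount per day.
    per_endpoint = current_daily_cost / current_endpoints
    return per_endpoint * target_endpoints


if __name__ == "__main__":
    # 4 endpoints at ~$20/day is what I observe today; 10 and 50 are hypothetical.
    for n in (4, 10, 50):
        daily = projected_daily_cost(20.0, 4, n)
        print(f"{n} endpoints: ~${daily:.2f}/day, ~${daily * 30:.2f} per 30 days")
```

Under these assumptions each endpoint costs about $5 per day, so the bill grows in direct proportion to the number of distinct models I host.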

- Questions

1- Am I following best practice, or do I need to drastically change my deployment strategy (move from ManagedOnlineDeployment to KubernetesOnlineDeployment, or to another option that I don't know of)?

2- Is there a way to host all the endpoints on a single VM, rather than creating a VM for each endpoint, to make it affordable?

3- Is there a way to host the endpoints and get charged per transaction?

General recommendations and clarification questions are more than welcome.

Thank you!