Hello @Rahul Ahuja,

I have discussed the issue with my internal team, and they have suggested the following options to resolve it.

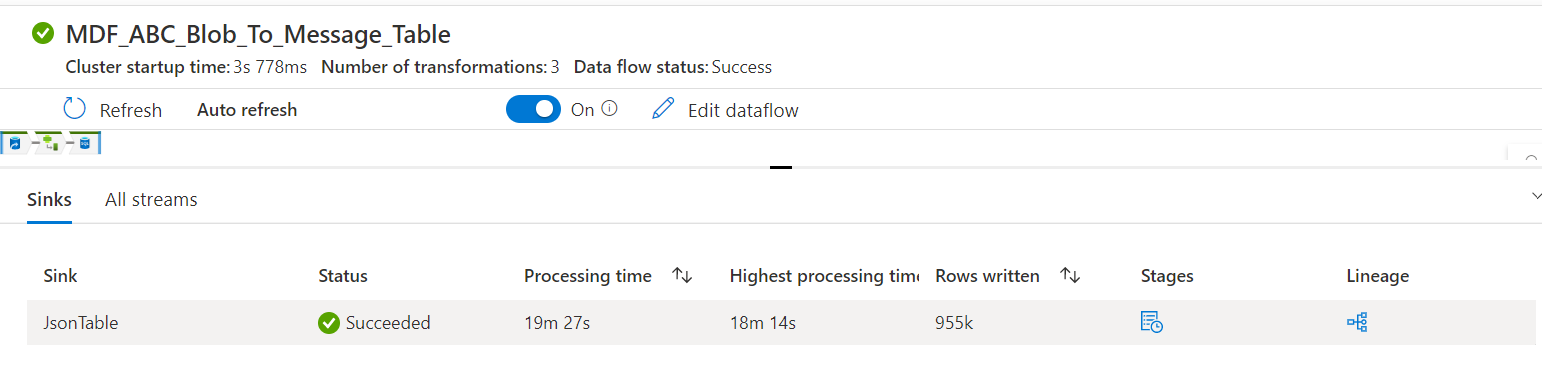

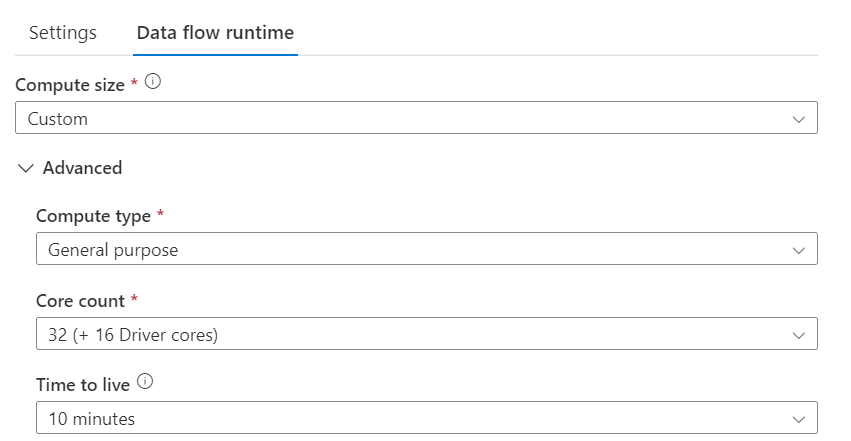

1) The core count seems to be insufficient to process the data. Please increase the core count of the data flow cluster.

(or)

2) Process the data in smaller chunks. One way to do this:

Assume you have one large data file that is read through a Data Factory Source.

You could enable Sampling on that Source and set its Rows limit to a parameter passed into the job, using Add dynamic content.

You would then have to wrap your Data Factory job in an iteration activity (e.g. Until) in the calling pipeline. I would check the output of your Source after each run and, if the number of rows is LESS than the value of the parameter you passed in, break out of the loop.
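The Until-loop exit condition described above can be sketched in Python to sanity-check the logic before building it in the pipeline. This is a minimal sketch, not Data Factory code: `make_fake_data_flow` and `process_in_chunks` are hypothetical names, and the stand-in assumes each run picks up the next batch after the rows already processed.

```python
from typing import Callable, List


def make_fake_data_flow(total_rows: int) -> Callable[[int], int]:
    """Hypothetical stand-in for one data flow run.

    Assumes each run reads up to `rows_limit` rows after the ones
    already processed and returns how many rows it actually read.
    """
    state = {"processed": 0}

    def run(rows_limit: int) -> int:
        remaining = total_rows - state["processed"]
        rows_read = min(rows_limit, remaining)
        state["processed"] += rows_read
        return rows_read

    return run


def process_in_chunks(run_data_flow: Callable[[int], int], rows_limit: int) -> List[int]:
    """Mimics the Until loop: run the job, stop once a batch comes back short."""
    batch_counts: List[int] = []
    while True:
        rows_read = run_data_flow(rows_limit)
        batch_counts.append(rows_read)
        # Break condition: fewer rows than the limit means the data is exhausted
        if rows_read < rows_limit:
            break
    return batch_counts


if __name__ == "__main__":
    print(process_in_chunks(make_fake_data_flow(2500), 1000))  # [1000, 1000, 500]
```

With 2,500 rows and a limit of 1,000, the third run reads only 500 rows, which is less than the limit, so the loop stops there.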

One caveat: this may NOT handle the "no records returned" case well, so you may need to do some edge-case testing. For example, if you process 1,000 rows at a time and the last batch contains exactly 1,000 rows, the NEXT batch processes zero rows. Does the Source then return an output value of zero, or does it return no result at all?
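The edge case above can be made concrete with a small Python sketch. It assumes, hypothetically, that a missing row count is represented as `None` and an explicit zero as `0`; `is_last_batch` and `expected_runs` are illustrative names, not Data Factory functions. Treating both values as "last batch" keeps the loop from running forever, and the arithmetic shows why an exact multiple of the batch size always costs one extra, empty run.

```python
from typing import Optional


def is_last_batch(rows_read: Optional[int], rows_limit: int) -> bool:
    """Loop-exit check that also covers the "no records returned" case.

    None models a run that reports no row count at all (an assumption);
    0 models a run that reports an explicit zero. Both end the loop.
    """
    if rows_read is None:
        return True
    return rows_read < rows_limit


def expected_runs(total_rows: int, rows_limit: int) -> int:
    """Runs needed when the loop stops at the first short (or empty) batch."""
    return total_rows // rows_limit + 1


if __name__ == "__main__":
    print(expected_runs(3000, 1000))   # 4: three full batches plus one empty batch
    print(is_last_batch(0, 1000))      # True
    print(is_last_batch(None, 1000))   # True
    print(is_last_batch(1000, 1000))   # False
```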

A similar thread has been discussed here.

I hope this helps. Please let me know if you have any further questions.