Hello @Robert Riley and welcome to Microsoft Q&A.

There are a few things to check as possible causes. I assume you have an Execute Pipeline activity inside a ForEach loop.

There are at least two places where the restriction could be applied. The first two to check are the pipeline concurrency setting and the ForEach loop settings. Either one alone could cause the throttling, so both need to be set correctly.

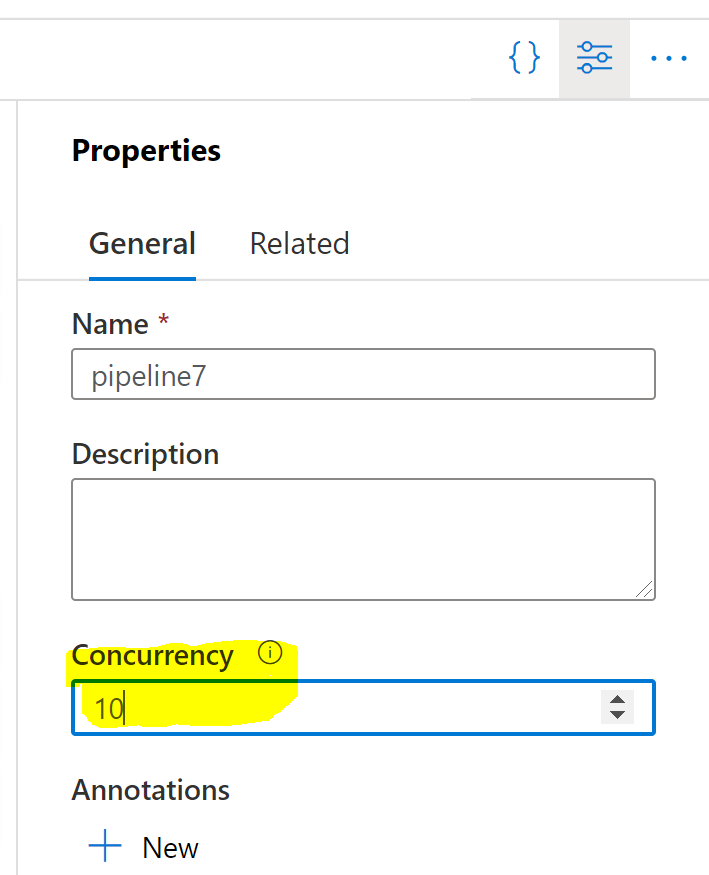

Each pipeline has a concurrency property, which sets how many instances of that pipeline are allowed to run at any given time. This limit also throttles runs started by triggers.

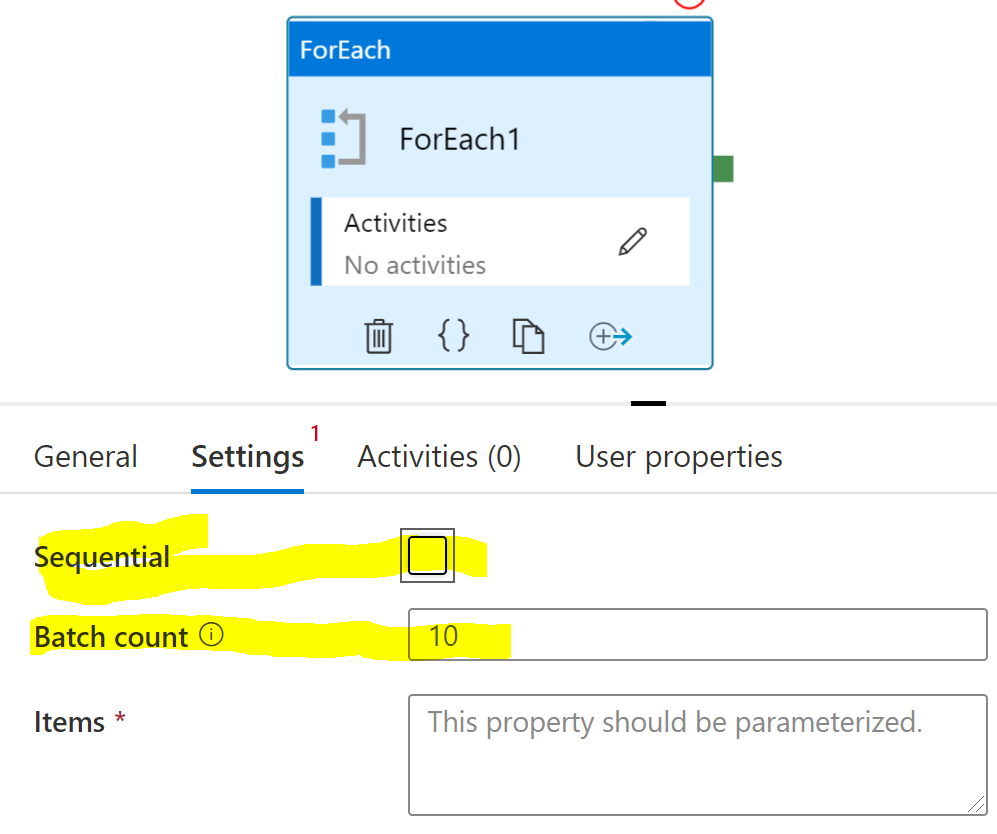

There are also the settings on the loop. When the Sequential option is selected, only one (1) instance is run at a time. There is also the Batch count feature, which determines the maximum number of loop instances run in parallel (assuming Sequential is off).
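
To make the interaction between the two settings concrete, here is a rough Python sketch of how I would reason about the effective parallelism. It is only a mental model, not anything Data Factory runs: the function name `effective_parallelism` is made up for illustration, and it assumes the child pipeline is called only from this one loop, that a pipeline with no concurrency value set is not limited by that property, and that the ForEach batch count defaults to 20 when left blank.

```python
from typing import Optional

# Assumed ForEach default when Batch count is left blank.
DEFAULT_BATCH_COUNT = 20


def effective_parallelism(
    pipeline_concurrency: Optional[int],
    is_sequential: bool,
    batch_count: Optional[int] = None,
) -> int:
    """Estimate how many child pipeline runs can be active at once.

    pipeline_concurrency: the child pipeline's concurrency property,
        or None if it is not set (treated here as "no limit").
    is_sequential: the ForEach Sequential checkbox.
    batch_count: the ForEach Batch count, or None to use the default.
    """
    # Sequential forces one iteration at a time, regardless of batch count.
    if is_sequential:
        loop_limit = 1
    else:
        loop_limit = batch_count if batch_count is not None else DEFAULT_BATCH_COUNT

    # With no pipeline-level limit, only the loop settings matter.
    if pipeline_concurrency is None:
        return loop_limit

    # Otherwise the tighter of the two limits wins.
    return min(loop_limit, pipeline_concurrency)


if __name__ == "__main__":
    # Loop allows 20 in parallel, but the pipeline concurrency of 1 throttles it.
    print(effective_parallelism(pipeline_concurrency=1, is_sequential=False, batch_count=20))
    # Pipeline is unrestricted, but Sequential is checked on the loop.
    print(effective_parallelism(pipeline_concurrency=None, is_sequential=True, batch_count=20))
```

In both example calls the result is 1, which is why fixing only one of the two settings may not remove the throttle.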