Welcome to the Microsoft Q&A platform.

Happy to answer your questions.

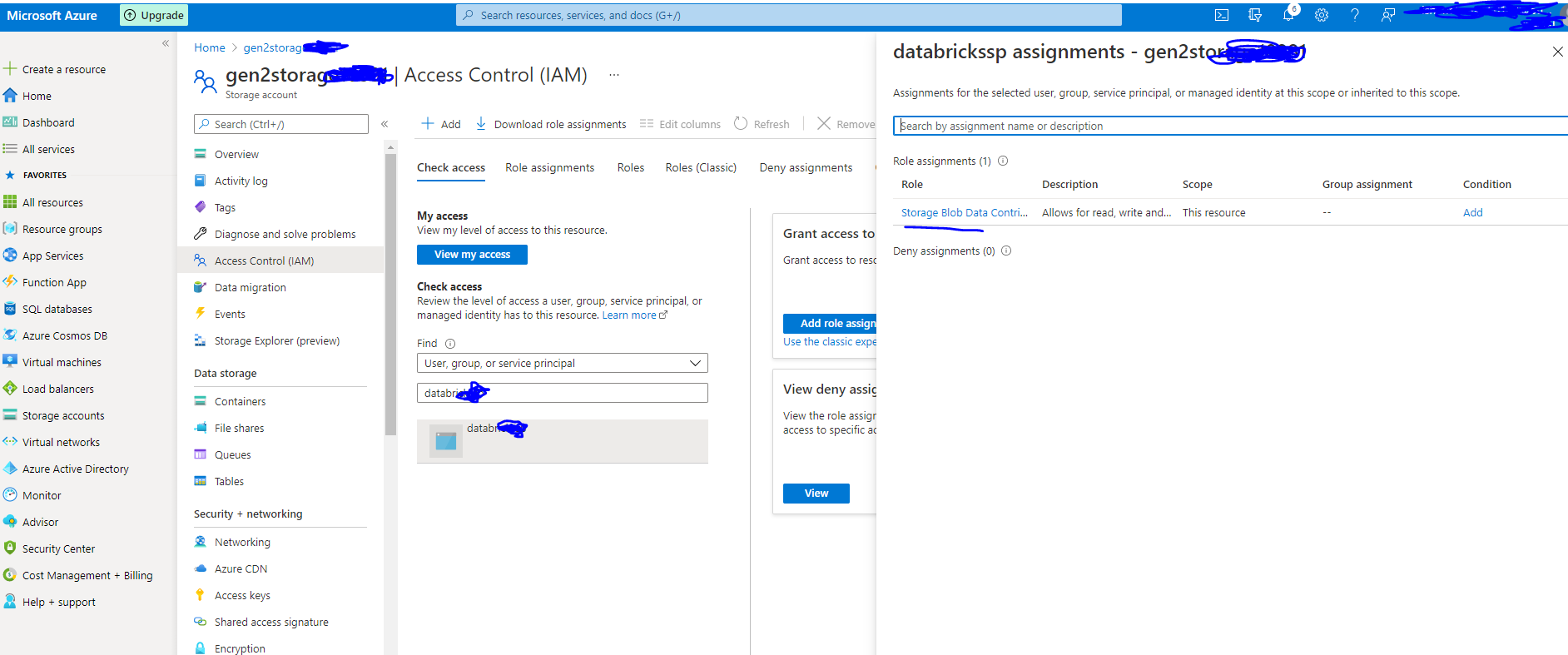

Note: When performing the steps in the “Assign the application to a role” section, make sure to assign the Storage Blob Data Contributor role to the service principal.
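The role has to be assigned at a scope that covers the data, typically the storage account or a single container. Here is a minimal sketch that builds that scope (an Azure resource ID). The subscription, resource group, account, and container names are hypothetical placeholders, and the Azure CLI command in the comment is one possible way to use the result.

```python
from typing import Optional


def storage_role_scope(subscription_id: str, resource_group: str,
                       account_name: str, container: Optional[str] = None) -> str:
    """Build the resource ID used as the scope of a role assignment.

    All arguments are placeholders; replace them with your own values.
    """
    scope = (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{account_name}"
    )
    if container is not None:
        # Narrow the assignment to a single blob container.
        scope += f"/blobServices/default/containers/{container}"
    return scope


# Hypothetical values, for illustration only. The result can be passed to e.g.
#   az role assignment create --assignee <application-id> \
#       --role "Storage Blob Data Contributor" --scope <scope>
print(storage_role_scope("<subscription-id>", "<resource-group>", "<storage-account-name>"))
```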

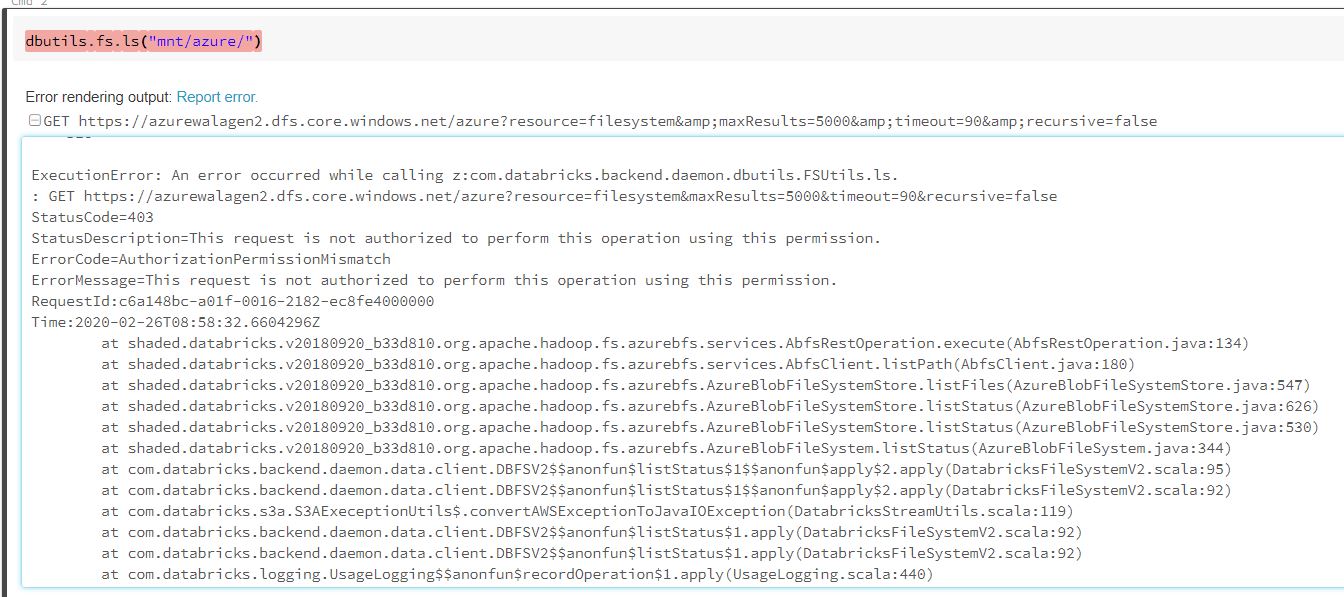

Repro: I granted the Owner role to the service principal and ran `dbutils.fs.ls("mnt/azure/")`; it returned the same error message as above.
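For context, this is a minimal sketch of how such a mount is typically created with service principal (OAuth client credentials) settings, along the lines of the tutorial referenced at the end of this answer. The helper names, container, account, and secret scope are hypothetical. `dbutils` is passed in as an argument because it only exists inside a Databricks notebook.

```python
from typing import Any, Dict


def build_oauth_configs(client_id: str, client_secret: str, tenant_id: str) -> Dict[str, str]:
    """ABFS OAuth settings for authenticating as a service principal."""
    return {
        "fs.azure.account.auth.type": "OAuth",
        "fs.azure.account.oauth.provider.type":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        "fs.azure.account.oauth2.client.id": client_id,
        "fs.azure.account.oauth2.client.secret": client_secret,
        "fs.azure.account.oauth2.client.endpoint":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
    }


def mount_and_list(dbutils: Any, container: str, account: str,
                   mount_point: str, configs: Dict[str, str]) -> Any:
    """Mount an ADLS Gen2 container, then list the mount point."""
    dbutils.fs.mount(
        source=f"abfss://{container}@{account}.dfs.core.windows.net/",
        mount_point=mount_point,
        extra_configs=configs,
    )
    # In the repro, it was this listing that failed while the service
    # principal only had the Owner role.
    return dbutils.fs.ls(mount_point)


# Inside a Databricks notebook (placeholders are hypothetical):
# configs = build_oauth_configs(
#     "<application-id>",
#     dbutils.secrets.get(scope="<scope-name>", key="<key-name>"),
#     "<directory-id>",
# )
# mount_and_list(dbutils, "<container-name>", "<storage-account-name>", "/mnt/azure", configs)
```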

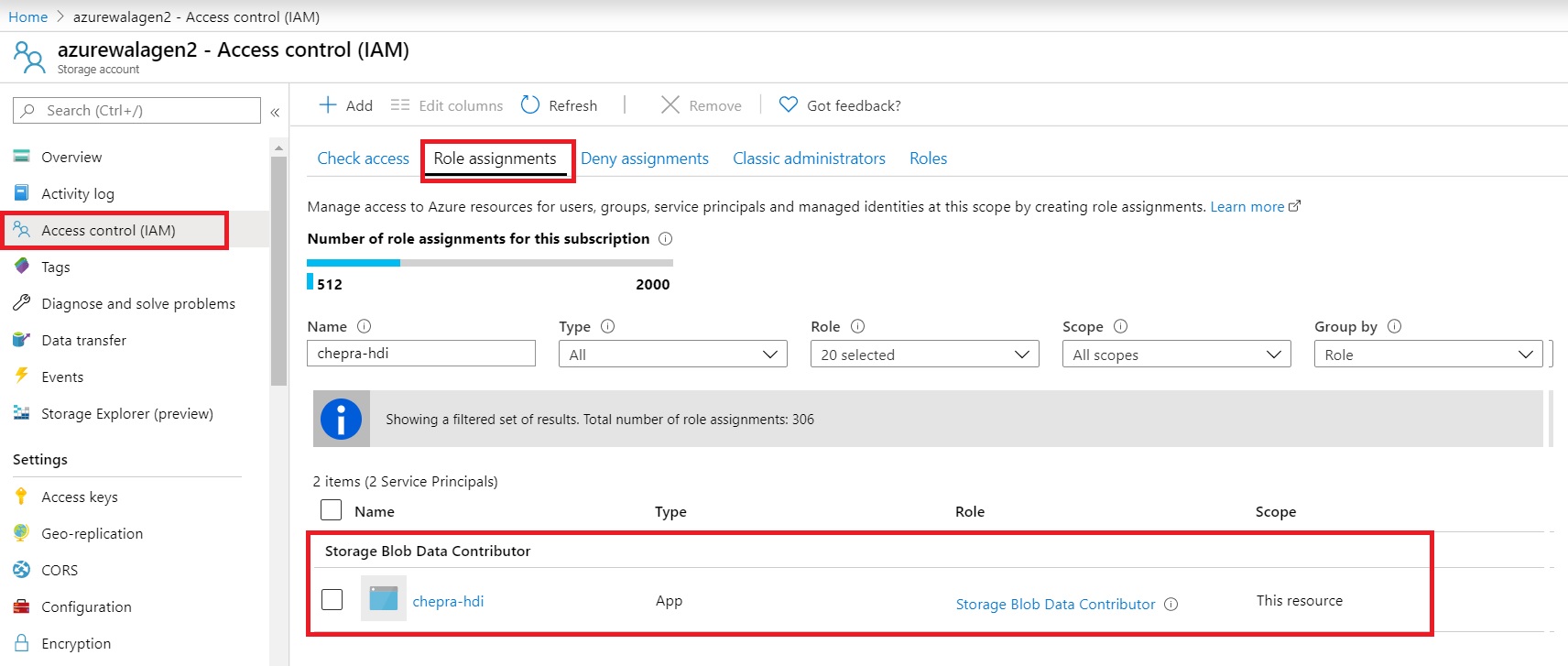

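Solution: I then assigned the Storage Blob Data Contributor role to the service principal. Owner is a management (control-plane) role and does not by itself grant access to blob data, whereas Storage Blob Data Contributor grants read, write, and delete access to containers and blobs.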

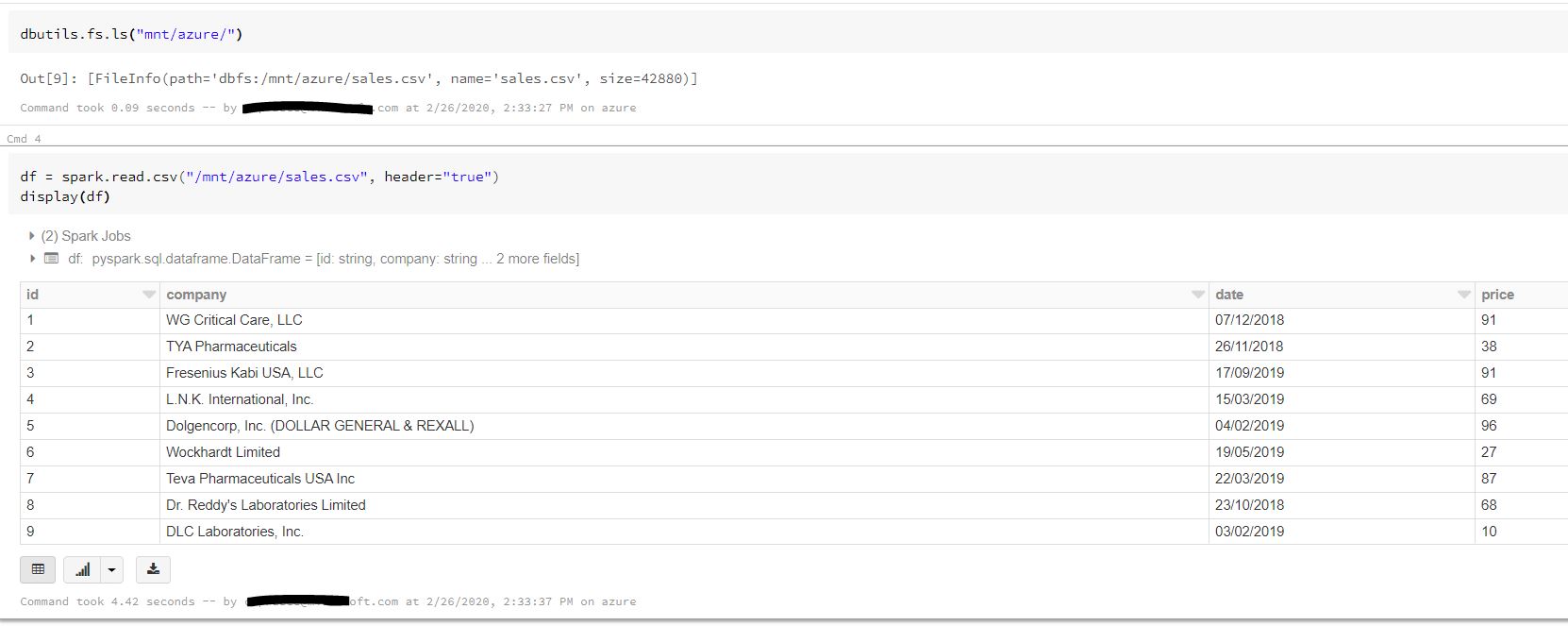

With the Storage Blob Data Contributor role assigned, the same command returned the listing without any error.
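Azure role assignments can take several minutes to take effect, so a listing run immediately after assigning the role may still fail. Here is a minimal sketch that retries with exponential backoff. The helper name, attempt count, and delays are assumptions for illustration, not values from the tutorial.

```python
import time
from typing import Any, Callable, Optional


def list_with_retry(ls: Callable[[str], Any], path: str, attempts: int = 5,
                    delay_seconds: float = 30.0,
                    sleep: Callable[[float], None] = time.sleep) -> Any:
    """Call ls(path), retrying with exponential backoff while it raises."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error: Optional[Exception] = None
    for attempt in range(attempts):
        try:
            return ls(path)
        except Exception as err:  # broad for illustration; narrow to the error you see
            last_error = err
            if attempt < attempts - 1:
                # Wait delay, 2*delay, 4*delay, ... between attempts.
                sleep(delay_seconds * (2 ** attempt))
    raise last_error


# Inside a Databricks notebook:
# list_with_retry(dbutils.fs.ls, "mnt/azure/")
```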

For more details, refer to “Tutorial: Azure Data Lake Storage Gen2, Azure Databricks & Spark”.

Hope this helps.