Today, we have a process that involves the following:

- JIRA

- Google Sheets/tsv files

- c# code

- Python code

- Manual intervention

There is a c# project hosted on bitbukcet that has Azure functions. Amongst the important functions, this one stands out ReadFrameGoogleSheetCols. (Code for it below)

Data is read from the database and then a function uploads the generated worksheet to a JIRA task (via webhooks)

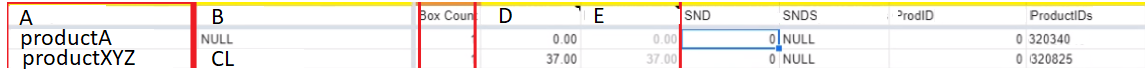

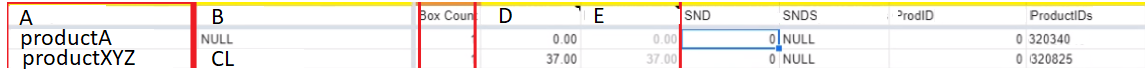

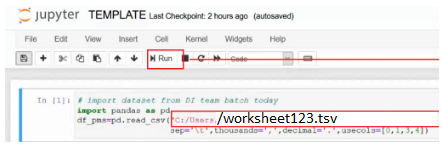

worksheet123.tsv sample file:

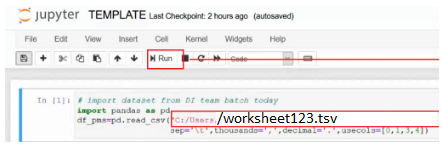

My colleague downloads the sheet attached in the respective JIRA task (e.g. worksheet123.tsv), uses it as input file to run python machine learning code to generate productItemID's from columns A,B, D, and E of the worksheet.

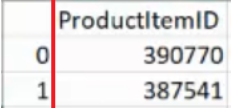

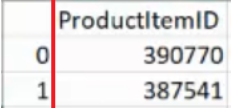

The output of this python code run is a mappings.tsv file that contains columns A, B of the input file as well as the generated ProductItemID column (as column C).

mapping.tsv output file sample:

Then, she copies the ProductItemID column and pastes into the original file (e.g.worksheet123.tsv) on Google Drive. That is the manual part today.

We want to automate this process, and refine the workflow.

As you can probably spot from the images/steps, my colleague hosts all this on her machine today.

We are thinking of utilizing blob storage to host the input file, by maybe creating a webhook in the c# code to generate the file there. and then there would be some form of Azure file watcher that watches whenever theres an input file, inputs it into the python code and generate the mapping.tsv file with productItemID and then copy/paste it into the original worksheet used as input as additional column.

This means that the python code has to run on Azure of course, cause today my colleague runs it locally on her machine. I am not sure if thats possible...

We are also considering a message queue instead of file watcher.

Either way, Im looking for best advise/recommendations about streamlining our current process today. Like what services would be helpful for this on Azure? Is the filewatcher/message queueing method the right/best approaches here? Are there better alternatives? Suggestions are welcome!!

--------------------

Note: I was not sure the right forum to post this, but since i think we may utilize blob storage or maybe a data lake, and there is some Azure functions involved, i tagged it under the respective tags. but there may be other better ways to do this so id appreciate some guidance on this!

ReadFrameGoogleSheetCols()

public static Dictionary<string, List<string>> ReadFrameGoogleSheetCols(string fileId, string sheetCol, int startcol = 1)

{

var dc = new Dictionary<string, List<string>>();

var sheetname = Helper.GetSetting("GoogleSheetName");

var cols = Helper.GetSetting("SheetSPcolumn_Mapping") + "," + sheetCol;

if (!string.IsNullOrEmpty(sheetCol))

{

var col1 = cols.Split(',')[0];

cols = $"{col1},{sheetCol}";

}

String range = $"{sheetname}!A{startcol}:ZZ2";

GoogleAPIService api = new GoogleAPIService();

SpreadsheetsResource.ValuesResource.GetRequest request =

api.GoogleSheetService().Spreadsheets.Values.Get(fileId, range);

ValueRange response = request.Execute();

IList<IList<Object>> values = response.Values;

if (values != null && values.Count > 0)

{

var iWarning = 0;

var iMaxCol = 0;

for (var i = 0; i < values[0].Count; i++)

{

if (values[0][i].ToString().ToLower().Equals(cols.Split(',')[0].ToLower()))

{

iWarning = i;

break;

}

}

List<int> lCols = new List<int>();

for (var i = iWarning + 1; i < values[0].Count; i++)

{

if (cols.ToLower().Contains(values[0][i].ToString().ToLower()))

{

iMaxCol = i;

lCols.Add(i - iWarning);

}

}

var startRow = startcol + 1; // row where to start read data

range = $"{sheetname}!{api.GetColumnName(iWarning)}{startRow}:{api.GetColumnName(iMaxCol)}";

SpreadsheetsResource.ValuesResource.GetRequest reqSP =

api.GoogleSheetService().Spreadsheets.Values.Get(fileId, range);

ValueRange resSP = reqSP.Execute();

IList<IList<Object>> valsp = resSP.Values;

var lstwarnings = new List<string>();

lstwarnings.AddRange(from row in valsp

where !row[0].ToString().ToUpper().StartsWith("OK")

select row[0].ToString());

// return null if All warnings is not OK

if (lstwarnings.Count > 0)

return null;

if (valsp != null && valsp.Count > 0)

{

var colSP = sheetCol.Split(',');

var icol = 0;

foreach (var (i, lstsp) in from i in lCols

let lstsp = new List<string>()

select (i, lstsp))

{

lstsp.AddRange(from row in valsp

where row.Count > i && row[0].ToString().ToUpper().StartsWith("OK") && !row[i].ToString().StartsWith("--")

select row[i].ToString());

dc.Add(colSP[icol], lstsp);

icol++;

}

if (!ValidateHeaders(colSP, dc))

return null;

}

}

return dc;

}