Hi @Imran Mondal,

As per my testing:

Output - I want to load the files into Table storage, and if a new column appears in the source, it should be loaded as well.

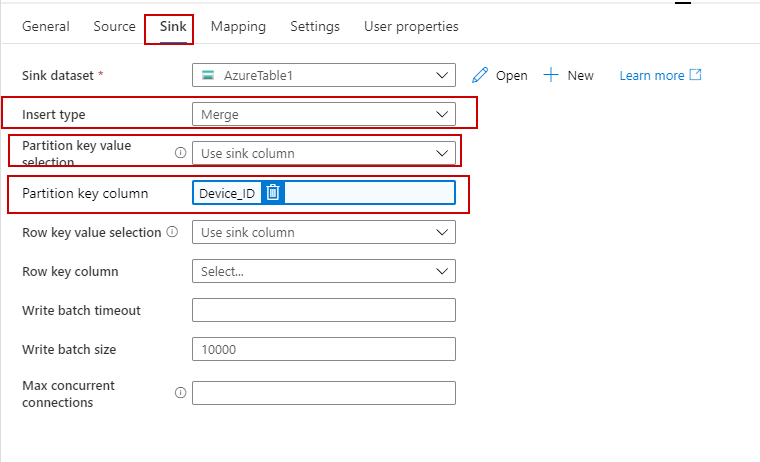

So, I removed both the source schema and the target schema so that everything is loaded. The problem is that the source file has a column named Device_ID. How can I use that column as the partition key while loading into the sink table?

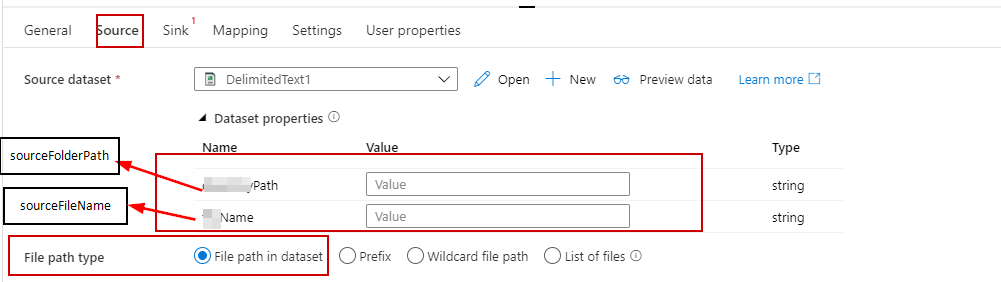

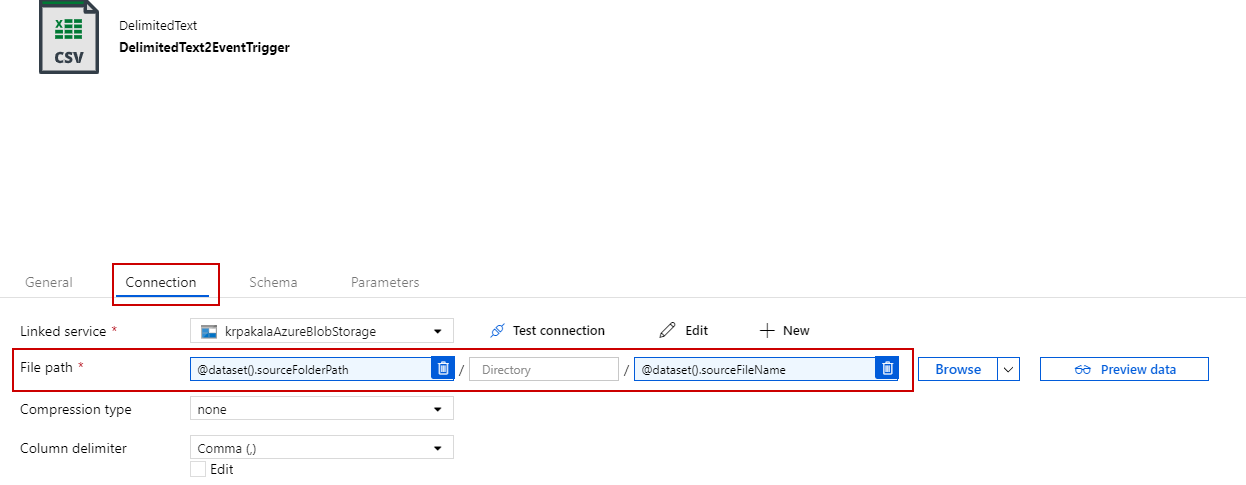

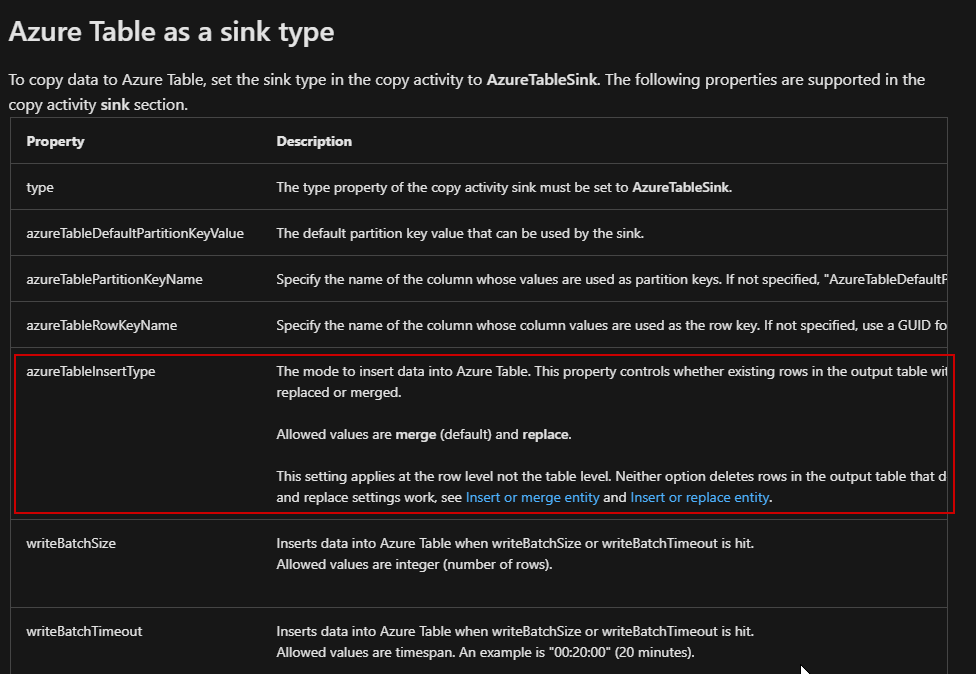

If your source columns change, you can use the setting shown above in your copy activity. It will insert new entities, including any new source columns/properties, with PartitionKey = Device_ID.
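If you edit the pipeline JSON instead of the UI, the "use source column" partition key option should correspond to the `azureTablePartitionKeyName` property of an `AzureTableSink`. The sketch below is a minimal illustration, assuming the sink properties documented for the Azure Table storage connector (`azureTablePartitionKeyName`, `azureTableInsertType`); the helper `azure_table_sink` is hypothetical and only builds the `sink` fragment of a Copy activity.

```python
import json


def azure_table_sink(partition_key_column: str, insert_type: str = "merge") -> dict:
    """Build the (hypothetical) `sink` section of a Copy activity writing to Azure Table storage."""
    # Assumption: the connector accepts only "merge" (default) or "replace" here.
    if insert_type not in ("merge", "replace"):
        raise ValueError("azureTableInsertType must be 'merge' or 'replace'")
    return {
        "type": "AzureTableSink",
        # Take each entity's PartitionKey from this source column.
        "azureTablePartitionKeyName": partition_key_column,
        # No azureTableRowKeyName is set, so each row gets a GUID as its RowKey.
        "azureTableInsertType": insert_type,
    }


sink = azure_table_sink("Device_ID")
print(json.dumps({"sink": sink}, indent=4))
```

Because no column mapping is defined, every source column (including newly added ones) is still copied as an entity property; only the PartitionKey is pinned to Device_ID.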

For the second ask:

Second - All the columns are loaded with the String data type. How can I change the data type of only one column, the date-time column, keeping in mind that the source columns are dynamic?

I don't think this can be done in the same copy activity. By default, a property is created with type String unless you specify a different type. To explicitly type a property, specify its data type by using the appropriate OData data type in an Insert Entity or Update Entity operation. For more information, see Inserting and Updating Entities.
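To illustrate what "specifying the OData data type" means, here is a minimal sketch of a Table service JSON entity payload in which only the date-time column carries an explicit `@odata.type` annotation (`Edm.DateTime`), while every other dynamic column is left untyped and therefore stored as a String. This is an assumption-laden example, not something the copy activity does for you: the helper `to_table_entity`, the column name `Event_Time`, and the sample values are hypothetical, and it assumes naive source timestamps are UTC. Such a payload would have to be sent by a separate step (for example your own code calling Insert Entity).

```python
import json
from datetime import datetime, timezone


def to_table_entity(row: dict, partition_key_column: str, row_key: str,
                    datetime_column: str) -> dict:
    """Build a JSON entity from a source row whose values are all strings (hypothetical helper)."""
    entity = {"PartitionKey": row[partition_key_column], "RowKey": row_key}
    # Copy every column as-is: untyped string values default to Edm.String.
    for name, value in row.items():
        entity[name] = value
    if datetime_column in row:
        parsed = datetime.fromisoformat(row[datetime_column])
        if parsed.tzinfo is None:
            # Assumption: source timestamps without an offset are UTC.
            parsed = parsed.replace(tzinfo=timezone.utc)
        # Re-emit as an ISO 8601 UTC value and annotate only this property's type.
        entity[datetime_column] = parsed.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.%fZ")
        entity[f"{datetime_column}@odata.type"] = "Edm.DateTime"
    return entity


# Hypothetical source row; "New_Column" stands for a column added later.
row = {"Device_ID": "dev-01", "Event_Time": "2021-06-01 10:30:00",
       "Temperature": "21.5", "New_Column": "x"}
print(json.dumps(to_table_entity(row, "Device_ID", "1", "Event_Time"), indent=2))
```

Because the type annotation is added per property name, new source columns pass through unchanged and only the one named date-time column is converted.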

Hope this answers your query.

Note: Since the original query of this thread has been answered, it is recommended to open a new thread for new queries so that it is easier for the community to find the helpful information :)
