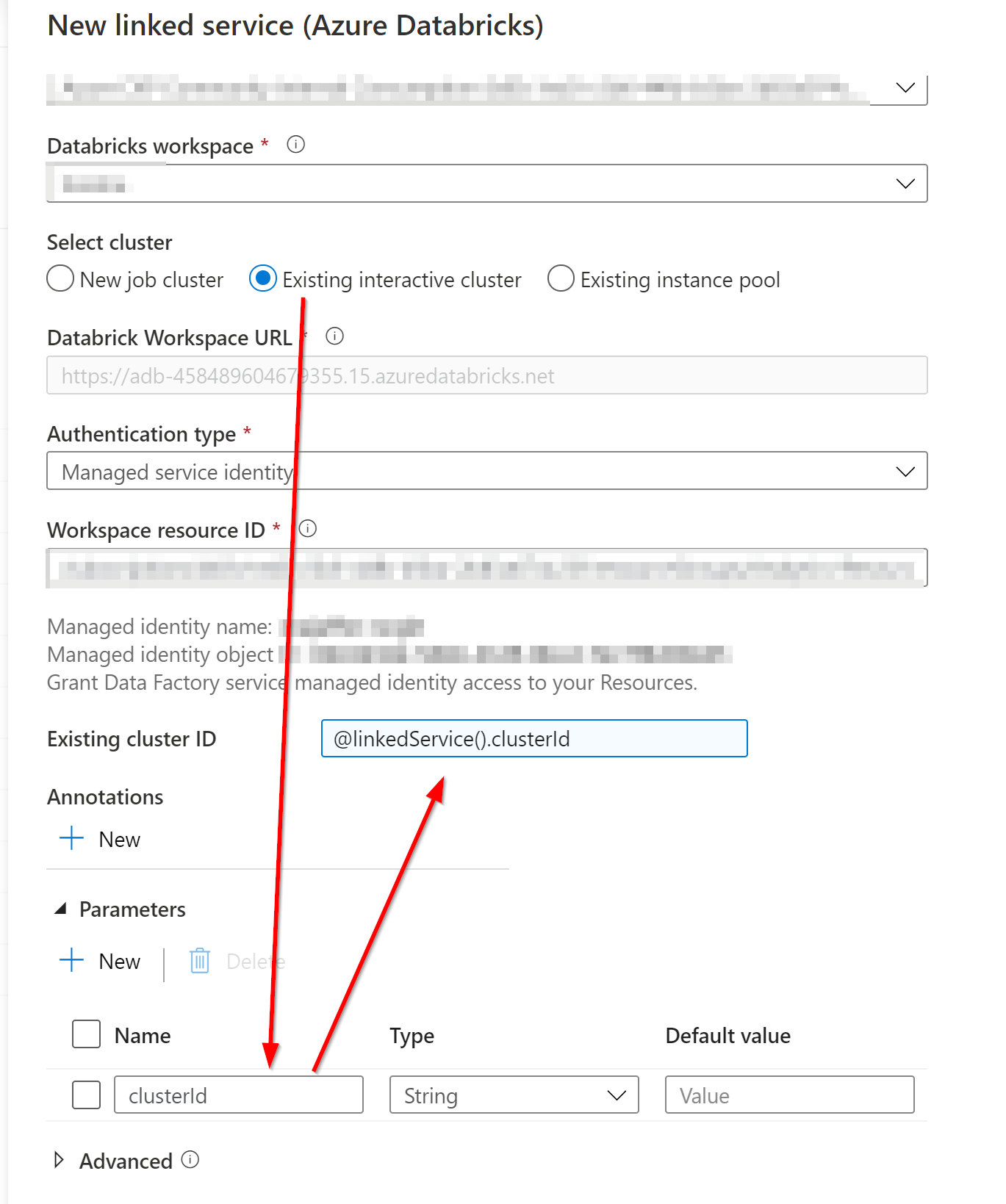

Oh, I see. I was confused before because the New Cluster option creates a new cluster on every pipeline activity run. Or so I thought; I didn't explicitly look into that.

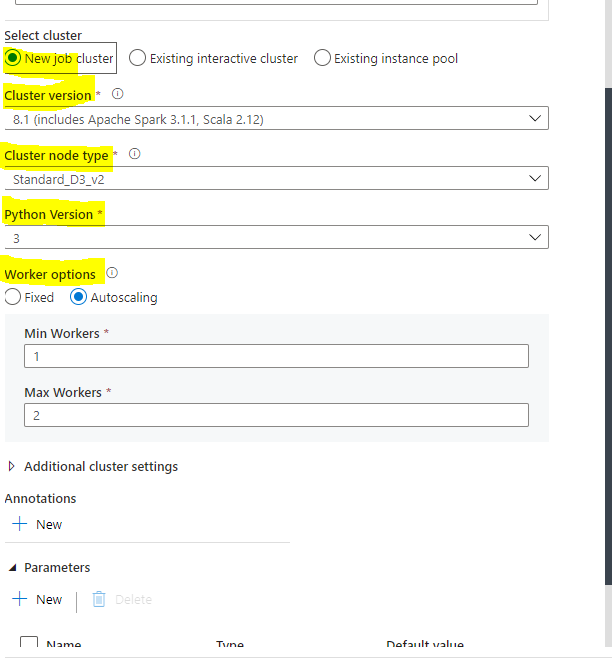

I have been able to parameterize most of the highlighted fields. The Python version seems to be an oddball.

    {
        "name": "AzureDatabricks1",
        "type": "Microsoft.DataFactory/factories/linkedservices",
        "properties": {
            "parameters": {
                "version": {
                    "type": "string"
                },
                "nodeType": {
                    "type": "string"
                },
                "driverType": {
                    "type": "string"
                }
            },
            "annotations": [],
            "type": "AzureDatabricks",
            "typeProperties": {
                "domain": "https://XXXX.azuredatabricks.net",
                "newClusterNodeType": "@linkedService().nodeType",
                "newClusterNumOfWorker": "1",
                "newClusterSparkEnvVars": {
                    "PYSPARK_PYTHON": "/databricks/python3/bin/python3"
                },
                "newClusterVersion": "@linkedService().version",
                "newClusterInitScripts": [],
                "newClusterDriverNodeType": "@linkedService().driverType",
                "encryptedCredential": XXXX
            }
        }
    }

Note that the Python version appears under "newClusterSparkEnvVars" when I choose 3 (above); when I choose 2 (below), that property is not present. Also note, below, the number of workers after I set autoscaling to min 2, max 4: "newClusterNumOfWorker" becomes "2:4". This suggests the dynamic content for scaling should be a string literal of the form min:max.

    {
        "name": "AzureDatabricks1",
        "type": "Microsoft.DataFactory/factories/linkedservices",
        "properties": {
            "parameters": {
                "version": {
                    "type": "string"
                },
                "nodeType": {
                    "type": "string"
                },
                "driverType": {
                    "type": "string"
                }
            },
            "annotations": [],
            "type": "AzureDatabricks",
            "typeProperties": {
                "domain": "https://XXXX.azuredatabricks.net",
                "newClusterNodeType": "@linkedService().nodeType",
                "newClusterNumOfWorker": "2:4",
                "newClusterVersion": "@linkedService().version",
                "newClusterInitScripts": [],
                "newClusterDriverNodeType": "@linkedService().driverType",
                "encryptedCredential": XXXX
            }
        }
    }
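
To make the two observations above concrete, here is a minimal Python sketch of how the values for "newClusterNumOfWorker" and "newClusterSparkEnvVars" could be built before being passed in as dynamic content. The function names `worker_setting` and `spark_env_vars` are hypothetical helpers of mine, not part of Data Factory; the output formats are only what the two JSON exports above show (a plain count such as "1", "min:max" such as "2:4", and the PYSPARK_PYTHON variable appearing only for Python 3).

```python
from typing import Dict, Optional


def worker_setting(min_workers: int, max_workers: Optional[int] = None) -> str:
    """Build the string seen in "newClusterNumOfWorker".

    Fixed size -> "1"; autoscaling -> "min:max", e.g. "2:4".
    (Hypothetical helper; formats taken from the JSON exports above.)
    """
    if min_workers < 0:
        raise ValueError("min_workers must be non-negative")
    if max_workers is None:
        # Fixed-size cluster: just the worker count as a string.
        return str(min_workers)
    if max_workers < min_workers:
        raise ValueError("max_workers must be >= min_workers")
    # Autoscaling cluster: a "min:max" string literal.
    return f"{min_workers}:{max_workers}"


def spark_env_vars(python_major: int) -> Dict[str, str]:
    """Mirror what the exports show for "newClusterSparkEnvVars".

    Python 3 sets PYSPARK_PYTHON; with Python 2 the property was absent,
    represented here as an empty dict. (Hypothetical helper.)
    """
    if python_major == 3:
        return {"PYSPARK_PYTHON": "/databricks/python3/bin/python3"}
    if python_major == 2:
        return {}
    raise ValueError("python_major must be 2 or 3")


if __name__ == "__main__":
    print(worker_setting(1))
    print(worker_setting(2, 4))
    print(spark_env_vars(3))
```

If the worker count were exposed as another string parameter on the linked service, the pipeline would presumably pass the result of `worker_setting(2, 4)` as that parameter's value; I have only confirmed the "2:4" format from the export, not that route end to end.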