Hi @khouloud Belhaj ,

Thank you for posting your query on the Microsoft Q&A platform.

Below are the steps to follow to implement your scenario.

Step 1: Use a Get Metadata activity to get the child items (file names) from your source folder.

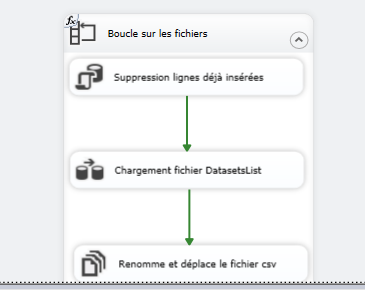

Step 2: Use a ForEach activity. Pass the childItems array from the Get Metadata output to the ForEach activity so that it loops over each file name.

Step 3: Inside the ForEach activity, use a Data Flow activity. The data flow should have a parameter that receives the current file name from the ForEach loop.

Note: see the data flow implementation details below.

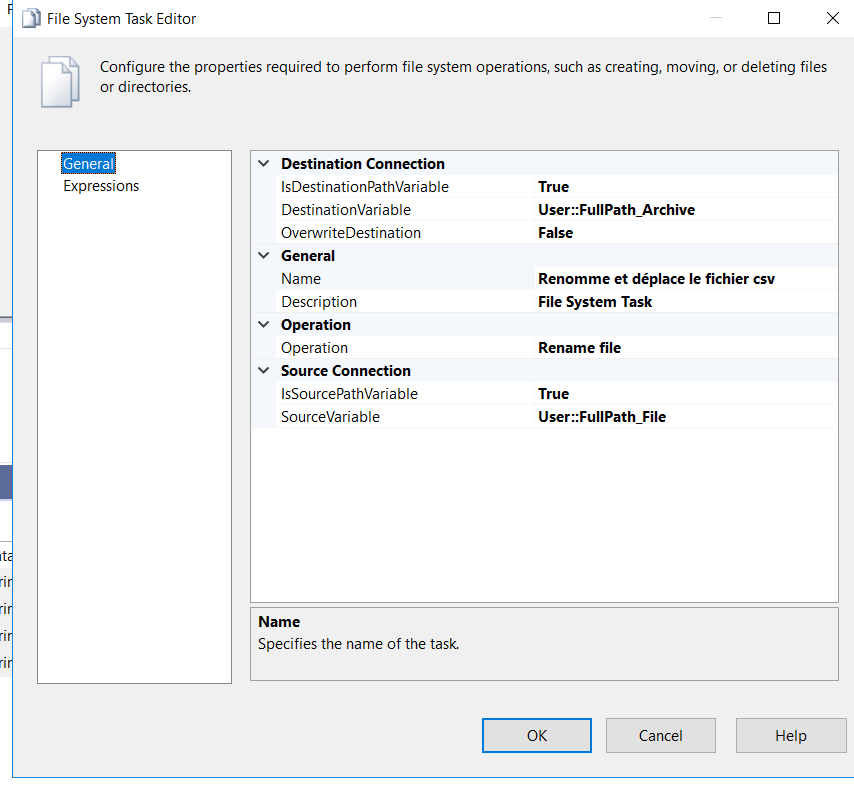

Step 4: Inside the ForEach activity, after the Data Flow activity, use a Copy activity to copy the file to a different folder under a different name.

Step 5: Inside the ForEach activity, after the Data Flow and Copy activities, use a Delete activity to delete the file from the source folder.
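To make the order of operations in the steps above easier to follow, here is a minimal sketch of the same control flow in plain Python on a local file system. It is only an analogy, not Data Factory code: `process_folder`, `load_file`, `rename`, and the `processed_` prefix are hypothetical names chosen for illustration.

```python
import shutil
from pathlib import Path
from typing import Callable


def process_folder(
    source_dir: Path,
    archive_dir: Path,
    load_file: Callable[[Path], None],
    rename: Callable[[str], str] = lambda name: f"processed_{name}",
) -> list[str]:
    """Load every file in source_dir, then archive it under a new name and delete it."""
    archive_dir.mkdir(parents=True, exist_ok=True)

    # Step 1: list the child items (file names), like the Get Metadata activity.
    file_names = sorted(p.name for p in source_dir.iterdir() if p.is_file())

    archived = []
    # Step 2: loop over each file name, like the ForEach activity.
    for name in file_names:
        source_path = source_dir / name

        # Step 3: hand the current file to the loading logic (the data flow).
        load_file(source_path)

        # Step 4: copy the file to a different folder with a different name.
        target_path = archive_dir / rename(name)
        shutil.copy2(source_path, target_path)

        # Step 5: delete the file from the source folder.
        source_path.unlink()
        archived.append(target_path.name)
    return archived
```

Because the copy and delete run only after `load_file` returns, a file whose load raises an error stays in the source folder, which is the same effect you get by chaining the Copy and Delete activities after the Data Flow activity on success.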

Data flow implementation:

Step 1: Create a file name parameter inside the data flow to accept the file name from the ForEach loop.

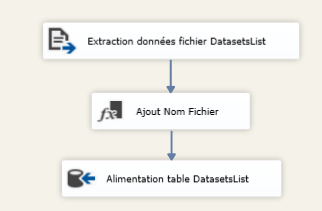

Step 2: Add a source transformation that points to the file in your source folder, using the parameter value to select the file dynamically.

Step 3: Add a second source transformation that reads your target table.

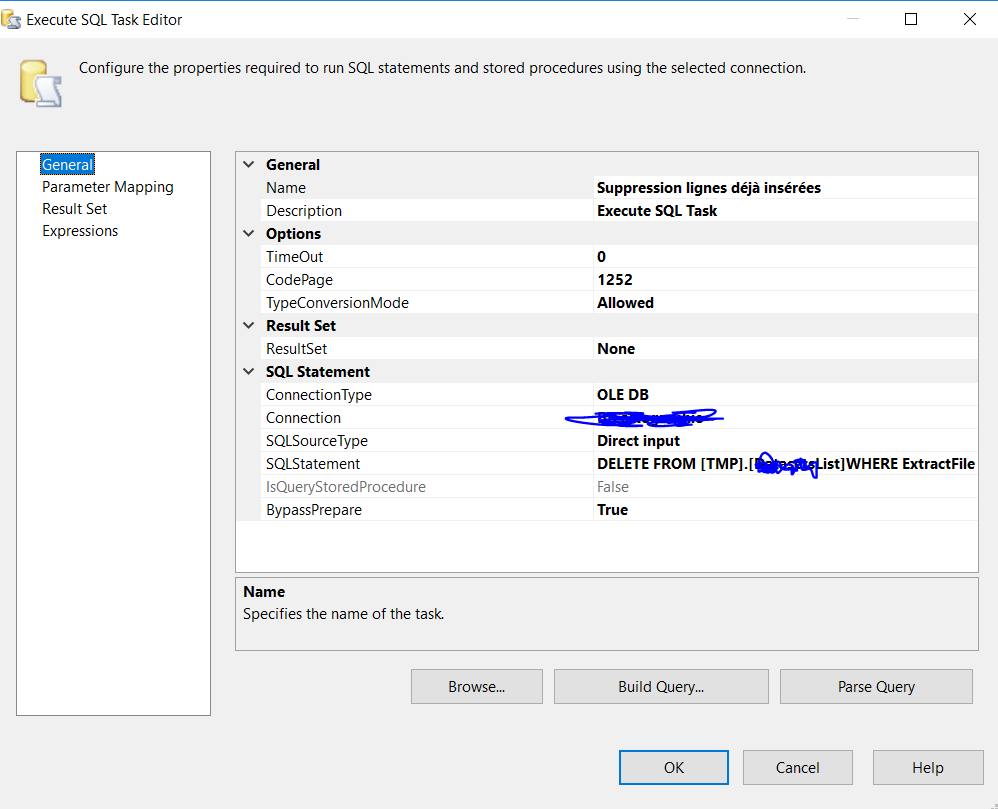

Step 4: Use a lookup transformation to identify which rows in your file have no match in your table.

Step 5: Use a filter transformation to keep only the non-matching rows.

Step 6: Use a sink transformation to load those non-matching rows into your table.
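The lookup, filter, and sink steps above amount to inserting only the file rows that are not already in the table. A minimal sketch of that logic in plain Python follows, assuming rows are dictionaries and that a single column identifies a row. The function names and the `key` argument are hypothetical; this is not data flow syntax.

```python
from typing import Any, Optional

Row = dict[str, Any]


def lookup(file_rows: list[Row], table_rows: list[Row], key: str) -> list[tuple[Row, Optional[Row]]]:
    """Pair each file row with its matching table row, or None if there is no match."""
    table_by_key = {row[key]: row for row in table_rows}
    return [(row, table_by_key.get(row[key])) for row in file_rows]


def keep_non_matching(pairs: list[tuple[Row, Optional[Row]]]) -> list[Row]:
    """Filter: keep only the file rows that found no match in the table."""
    return [row for row, match in pairs if match is None]


def load_new_rows(file_rows: list[Row], table_rows: list[Row], key: str) -> int:
    """Sink: append the non-matching rows to the table and return how many were added."""
    new_rows = keep_non_matching(lookup(file_rows, table_rows, key))
    table_rows.extend(new_rows)
    return len(new_rows)
```

Running `load_new_rows` a second time with the same file adds nothing, because every file row now has a match in the table. This sketch does not deduplicate rows that repeat within the same file.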

Hope this helps. Please let us know if you have any further queries. Thank you.

-----------------------------

- Please accept an answer if correct. Original posters help the community find answers faster by identifying the correct answer. Here is how.
- Want a reminder to come back and check responses? Here is how to subscribe to a notification.
