Hello,

Welcome to the Microsoft Q&A platform.

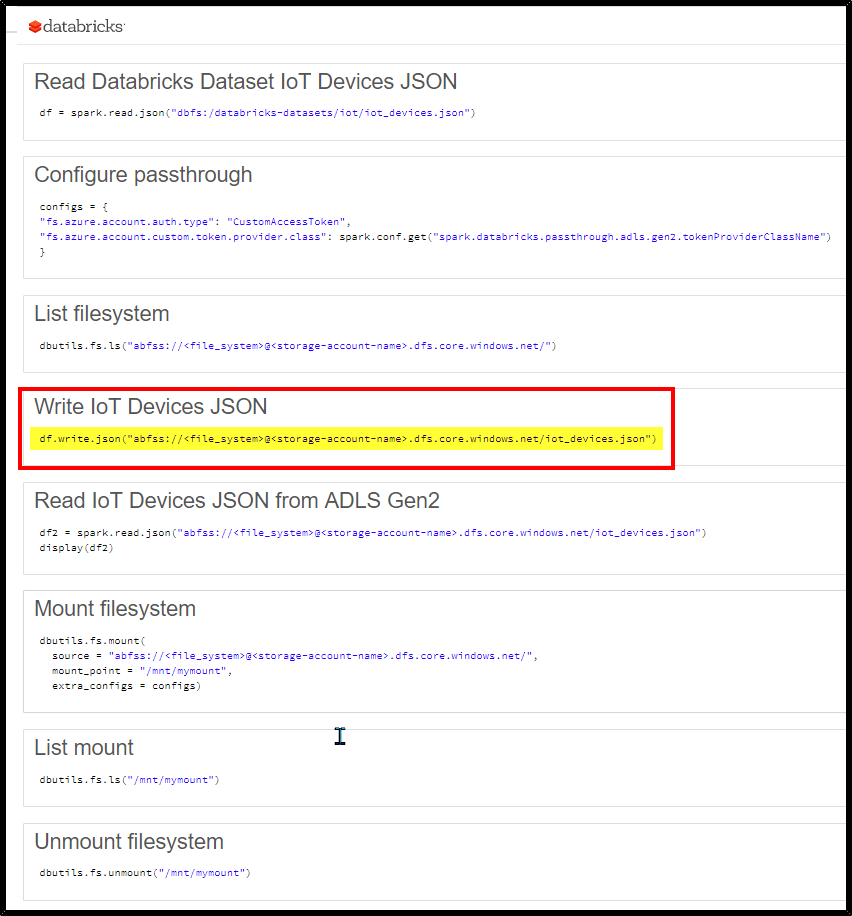

You can use the df.write.json API to write a DataFrame as JSON to any location you need.

Syntax: df.write.json('location where you want to save the json file')

Example: df.write.json("abfss://<file_system>@<storage-account-name>.dfs.core.windows.net/iot_devices.json")
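If it helps, here is a minimal sketch of the same call wrapped in two small helpers. The names abfss_path and write_json are hypothetical (they are not part of Spark or Databricks), and the sketch assumes df is an existing Spark DataFrame and that the cluster already has access to the storage account.

```python
def abfss_path(file_system: str, storage_account: str, relative_path: str) -> str:
    """Build an abfss:// URI for a path inside an ADLS Gen2 file system."""
    return (
        f"abfss://{file_system}@{storage_account}.dfs.core.windows.net/"
        f"{relative_path.lstrip('/')}"
    )


def write_json(df, target: str, mode: str = "overwrite") -> None:
    """Write a Spark DataFrame as JSON to `target`.

    `mode` is passed to DataFrameWriter.mode; "overwrite" is an assumption
    here, use "append" or "error" if that suits your case better.
    """
    df.write.mode(mode).json(target)


# Usage in a notebook (placeholders left as in the example above):
# write_json(df, abfss_path("<file_system>", "<storage-account-name>", "iot_devices.json"))
```

Note that Spark writes a directory at the target location containing one or more part files, not a single iot_devices.json file.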

Here are the steps to save JSON documents to Azure Data Lake Storage Gen2 using Azure Databricks.

Step1: Use the spark.read.json API to read the JSON file and create a DataFrame.

Step2: Mount the storage location to a Databricks DBFS directory by following the instructions in the doc below:

https://learn.microsoft.com/en-us/azure/databricks/data/data-sources/azure/azure-datalake-gen2

Step3: Use the df.write.json API to write to the mount point, which writes the data to the underlying storage.
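As a rough sketch, Step1 and Step3 can be combined as below. This assumes the mount from Step2 already exists; copy_json is a hypothetical helper name, and the /mnt/<mount-name>/... paths in the usage comment are placeholders, not real locations. spark is the SparkSession that Databricks notebooks provide.

```python
def copy_json(spark, source_path: str, target_path: str, mode: str = "overwrite"):
    """Read JSON from source_path and write it as JSON under target_path.

    Returns the DataFrame so it can be inspected or reused afterwards.
    """
    # Step1: read the JSON file(s) into a DataFrame
    df = spark.read.json(source_path)
    # Step3: write the DataFrame as JSON to the mount point
    df.write.mode(mode).json(target_path)
    return df


# Usage in a notebook (hypothetical paths under the mount point from Step2):
# df = copy_json(spark, "/mnt/<mount-name>/input/iot_devices.json",
#                "/mnt/<mount-name>/output/iot_devices")
```

The "overwrite" default is only an assumption for convenience; pick the save mode that matches how you want existing output to be handled.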

For more details, refer to the article below:

Sample notebook: https://learn.microsoft.com/en-us/azure/databricks/_static/notebooks/adls-passthrough-gen2.html

Hope this helps. Do let us know if you have any further queries.
