Hello there,

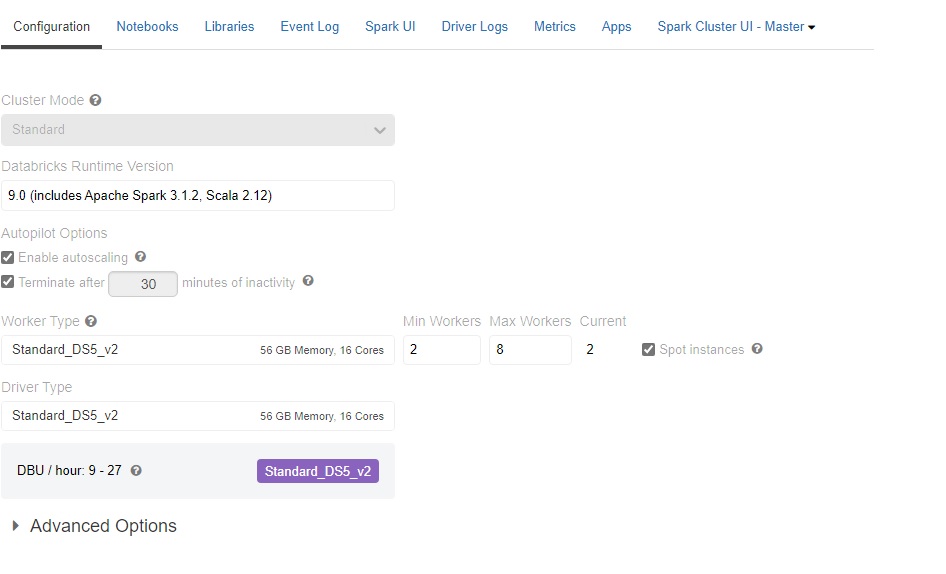

I am trying to upgrade my cluster from Databricks Runtime 6.4 to 9.0 or 10.0. The upgrade itself succeeds, but I have a notebook that is supposed to read data from Cosmos DB (Cassandra API) and load it into Databricks. The job runs fine on 6.4 but fails on 9.0 and 10.0.

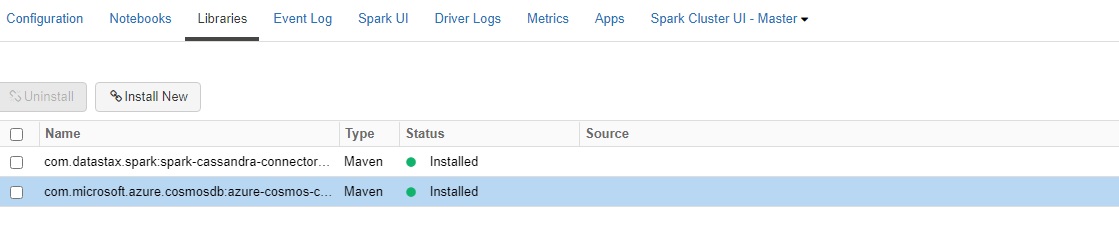

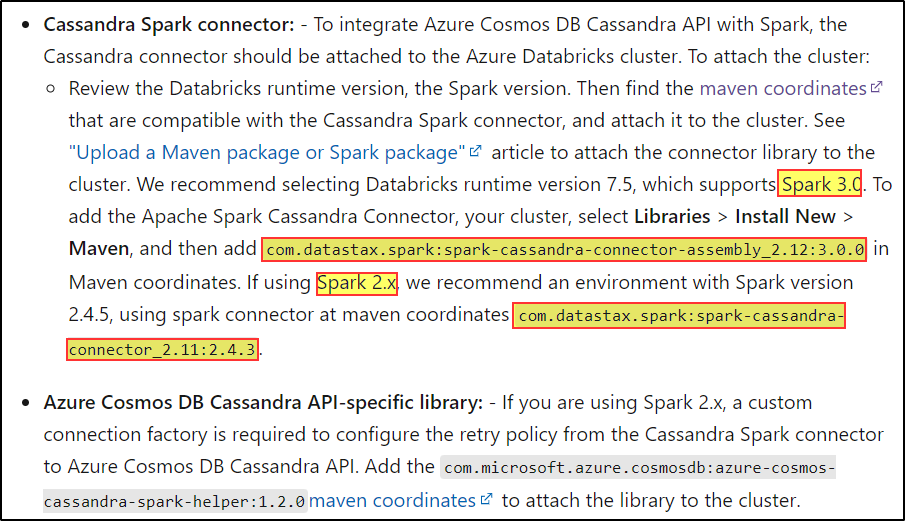

Since upgrading requires new driver and connector versions, I tried installing the libraries from Maven and also tried downloading JAR files from third-party sites, but I believe I couldn't find the right connector/driver. I need guidance on getting the right libraries for the 9.0 or 10.0 runtime.
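For reference, here is a minimal sketch of how the connector's Maven coordinate might be matched to the runtime. Databricks Runtime 9.0 ships Spark 3.1.2 and 10.0 ships Spark 3.2.0, both on Scala 2.12, while 6.4 is on Spark 2.4.5 with Scala 2.11, so Scala-suffixed libraries built for 6.4 cannot be reused. The sketch assumes (verify against the connector's compatibility table) that the 3.x DataStax Spark Cassandra Connector follows Spark's major.minor version and is published as `spark-cassandra-connector-assembly`. `ConnectorCoordinate` and `forSpark` are hypothetical names, and the `.0` patch level is only a placeholder.

```scala
// Sketch: choose a DataStax Spark Cassandra Connector coordinate whose
// major.minor matches the cluster's Spark version.
// Assumption (verify against the connector README): Spark 3.1 -> connector 3.1.x,
// Spark 3.2 -> connector 3.2.x, published as "spark-cassandra-connector-assembly".
// The ".0" patch level is a placeholder; a later patch release may be preferable.
object ConnectorCoordinate {
  // Spark 3 minor versions this sketch assumes have a matching connector line.
  private val supportedSpark3Minors = Set("1", "2")

  def forSpark(sparkVersion: String, scalaBinaryVersion: String): Option[String] =
    sparkVersion.split('.').toList match {
      case "3" :: minor :: _ if supportedSpark3Minors.contains(minor) =>
        Some(s"com.datastax.spark:spark-cassandra-connector-assembly_$scalaBinaryVersion:3.$minor.0")
      case _ =>
        None // unknown combination: look it up instead of guessing
    }

  def main(args: Array[String]): Unit = {
    // Runtime 9.0 (Spark 3.1.2) and 10.0 (Spark 3.2.0), both on Scala 2.12.
    println(forSpark("3.1.2", "2.12"))
    println(forSpark("3.2.0", "2.12"))
    // Runtime 6.4 (Spark 2.4.5, Scala 2.11) is outside this sketch.
    println(forSpark("2.4.5", "2.11"))
  }
}
```

In a notebook, `spark.version` returns the cluster's Spark version string that could be passed in.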

Below is the code that I am using:

```scala
// To import all the cassandra tables
import org.apache.spark.sql.cassandra._

// Spark connector
import com.datastax.spark.connector._
//import com.datastax.spark.connector._
import com.datastax.spark.connector.cql.CassandraConnector
//import com.datastax.oss.driver.api.core._

// CosmosDB library for multiple retry
import com.microsoft.azure.cosmosdb.cassandra

import org.apache.spark.sql.functions._
import spark.sqlContext.implicits._
import org.apache.spark.storage.StorageLevel

spark.conf.set("spark.cassandra.connection.host","<test>")
spark.conf.set("spark.cassandra.connection.port","<test>")
spark.conf.set("spark.cassandra.connection.ssl.enabled","true")
spark.conf.set("spark.cassandra.auth.username","<test>")
spark.conf.set("spark.cassandra.auth.password","<test>")
spark.conf.set("spark.cassandra.connection.factory", "com.microsoft.azure.cosmosdb.cassandra.CosmosDbConnectionFactory")
spark.conf.set("spark.cassandra.output.batch.size.rows", "1")
spark.conf.set("spark.cassandra.connection.connections_per_executor_max", "10")
spark.conf.set("spark.cassandra.output.concurrent.writes", "1000")
spark.conf.set("spark.cassandra.concurrent.reads", "512")
spark.conf.set("spark.cassandra.output.batch.grouping.buffer.size", "1000")
spark.conf.set("spark.cassandra.connection.keep_alive_ms", "600000000")
spark.conf.set("spark.sql.legacy.allowCreatingManagedTableUsingNonemptyLocation","true")

val paymentdetailsDF = sqlContext
  .read
  .format("org.apache.spark.sql.cassandra")
  .options(Map( "table" -> "test_table", "keyspace" -> "test_keyspace"))
  .load
```
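A minimal sketch of how the settings above might be adapted for connector 3.x, under two assumptions worth checking against the connector's 3.x configuration reference and the Azure Cosmos DB Cassandra API documentation: `connections_per_executor_max` was replaced by `remoteConnectionsPerExecutor`, and the `CosmosDbConnectionFactory` helper was built for connector 2.x, so the `connection.factory` override is dropped rather than carried over. `CassandraConfMigration` and `migrate` are hypothetical names; all other keys are passed through unchanged.

```scala
// Sketch: translate 2.x-era connector settings to a 3.x-style map.
// Assumptions (verify against the connector 3.x reference):
//   * connections_per_executor_max -> remoteConnectionsPerExecutor
//   * the Cosmos DB helper's connection factory targets connector 2.x only,
//     so that key is dropped instead of being renamed.
object CassandraConfMigration {
  val renamed: Map[String, String] = Map(
    "spark.cassandra.connection.connections_per_executor_max" ->
      "spark.cassandra.connection.remoteConnectionsPerExecutor"
  )

  val dropped: Set[String] = Set("spark.cassandra.connection.factory")

  // Drop unsupported keys, rename known ones, keep everything else as-is.
  def migrate(conf: Map[String, String]): Map[String, String] =
    conf.collect {
      case (key, value) if !dropped.contains(key) =>
        renamed.getOrElse(key, key) -> value
    }

  def main(args: Array[String]): Unit = {
    val legacy = Map(
      "spark.cassandra.connection.ssl.enabled" -> "true",
      "spark.cassandra.connection.factory" ->
        "com.microsoft.azure.cosmosdb.cassandra.CosmosDbConnectionFactory",
      "spark.cassandra.connection.connections_per_executor_max" -> "10",
      "spark.cassandra.concurrent.reads" -> "512"
    )
    // Print in key order so the result is easy to compare.
    migrate(legacy).toSeq.sortBy(_._1).foreach { case (k, v) => println(s"$k=$v") }
  }
}
```

On the cluster, the result could then be applied with `migrate(settings).foreach { case (k, v) => spark.conf.set(k, v) }`.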
