Hi @chodkows,

Sorry you are experiencing this.

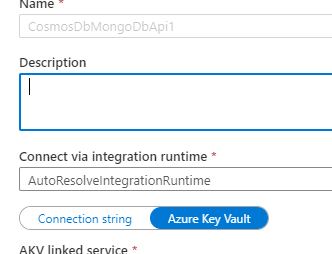

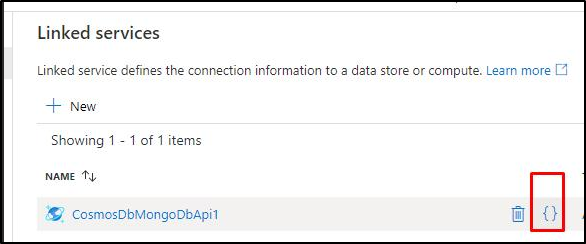

After checking with the internal team, we can confirm that the ADF engineering team has identified a bug related to this issue. To work around it, please open the linked service JSON payload and check whether the connection string contains the property "tls=true".

If so, change "tls=true" to "ssl=true" in the connection string and rerun the failed pipelines. A fix for this issue is currently being deployed; until it goes live, existing linked services unfortunately need to be corrected manually in this way.

If you already specified "ssl=true" when creating the linked service, please open the linked service code and double-check it after running Test connection and Preview data, but before running the pipeline: the "ssl=true" property may have been automatically changed to "tls=true". If so, change it back to "ssl=true".
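If you have many linked services to check (for example, JSON files exported from the linked service code view or stored in a Git-backed repo), the manual edit above can be scripted. The following is a minimal, hypothetical sketch, not an ADF tool or API. It assumes the connection string is stored as a plain string at `properties.typeProperties.connectionString`, and it uses a MongoDB-style linked service only as an example connector. It rewrites only a standalone `tls=true` query option and leaves other options, such as `tlsAllowInvalidCertificates`, untouched. A connection string kept in Azure Key Vault or as a secure string would need to be edited at its source instead.

```python
import json
import re

# Matches a standalone "tls=true" option in the connection string's query part:
# preceded by "?", "&" or ";" and followed by "&", ";" or the end of the string.
_TLS_OPTION = re.compile(r"(?<=[?&;])tls=true(?=[&;]|$)", re.IGNORECASE)


def fix_tls_option(linked_service: dict) -> bool:
    """Hypothetical helper: replace tls=true with ssl=true in a linked service.

    Assumes the connection string is a plain string at
    properties.typeProperties.connectionString. Returns True if it was changed.
    """
    type_props = linked_service.get("properties", {}).get("typeProperties", {})
    conn = type_props.get("connectionString")
    if not isinstance(conn, str):
        # Missing, or not a plain string (e.g. a Key Vault reference): nothing to do here.
        return False
    fixed = _TLS_OPTION.sub("ssl=true", conn)
    if fixed == conn:
        return False
    type_props["connectionString"] = fixed
    return True


if __name__ == "__main__":
    # Hypothetical payload, trimmed to the properties relevant to this workaround.
    payload = json.loads("""
    {
      "name": "MyMongoLinkedService",
      "properties": {
        "type": "MongoDbV2",
        "typeProperties": {
          "connectionString": "mongodb://host.example.com:27017/?tls=true&authSource=admin",
          "database": "mydb"
        }
      }
    }
    """)
    print(fix_tls_option(payload))
    print(payload["properties"]["typeProperties"]["connectionString"])
```

After updating the JSON this way, the linked service still has to be saved or published as usual before rerunning the failed pipelines.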

Please let us know how it goes. If this workaround doesn't resolve your issue, please share the details Himanshu requested so that we can escalate this to the internal team for deeper analysis.

Thank you for your patience.