Unable to copy Delta Lake data to Synapse Dedicated SQL Pool with Azure Data Factory

When trying to use Azure Data Factory to copy data to a table in a Synapse Dedicated SQL Pool, I received the following error:

> ErrorCode=AzureDatabricksCommandError,Hit an error when running the command in Azure Databricks. Error details: shaded.databricks.org.apache.hadoop.fs.azure.AzureException: hadoop_azure_shaded.com.microsoft.azure.storage.StorageException: This request is not authorized to perform this operation.
>
> Caused by: hadoop_azure_shaded.com.microsoft.azure.storage.StorageException: This request is not authorized to perform this operation.

I created the source dataset using the Delta Lake connector with no issues: I can test the connection and preview data, and everything looks good.

I also created the sink dataset and its linked service. The linked service connects to the sink table without problems.

On the sink side I am using PolyBase with staging enabled, with all the necessary settings and a linked service pointing to the storage account. That linked service tests successfully and lets me browse to the storage container I want to use as the PolyBase staging location.
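For reference, the copy activity settings described above (Delta Lake source, Synapse sink with PolyBase, staged copy through a storage linked service) correspond roughly to the `typeProperties` built by this Python sketch. The linked service name `StagingBlobStorage` and the path `polybase-staging` are hypothetical placeholders, and the JSON that ADF actually generates for a pipeline may contain additional properties.

```python
import json


def copy_type_properties(staging_linked_service: str, staging_path: str) -> dict:
    """Sketch of copy activity typeProperties: Delta Lake -> Synapse via PolyBase.

    The linked service name and staging path passed in are hypothetical.
    """
    return {
        "source": {"type": "AzureDatabricksDeltaLakeSource"},
        "sink": {"type": "SqlDWSink", "allowPolyBase": True},
        # Staged copy: data is written to this storage location first,
        # then loaded from there into the sink.
        "enableStaging": True,
        "stagingSettings": {
            "linkedServiceName": {
                "referenceName": staging_linked_service,
                "type": "LinkedServiceReference",
            },
            "path": staging_path,
        },
    }


if __name__ == "__main__":
    props = copy_type_properties("StagingBlobStorage", "polybase-staging")
    print(json.dumps(props, indent=2))
```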

On the Databricks cluster that serves the source Delta table, I added the following lines to the cluster's Spark config:

`spark.sql.shuffle.partitions 8`

`spark.hadoop.fs.azure.account.key.xxxxxxxxx.blob.core.windows.net <storage account access key value>`

`spark.databricks.delta.preview.enabled true`

`spark.databricks.delta.autoCompact.enabled true`

`spark.databricks.delta.optimizeWrite.enabled true`
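The cluster Spark config box takes one `key value` pair per line, and Hadoop filesystem properties such as the storage account key are passed to the Hadoop configuration only when prefixed with `spark.hadoop.`. This Python sketch renders lines like the ones above from two dicts to make that prefix explicit. The account name `mystorageacct` and the key placeholder are hypothetical.

```python
def render_cluster_spark_conf(settings: dict, hadoop: dict) -> str:
    """Render settings as cluster Spark config text, one "key value" per line.

    Plain Spark settings are emitted as-is; Hadoop properties get the
    "spark.hadoop." prefix so they reach the Hadoop configuration.
    """
    lines = [f"{key} {value}" for key, value in settings.items()]
    lines += [f"spark.hadoop.{key} {value}" for key, value in hadoop.items()]
    return "\n".join(lines)


if __name__ == "__main__":
    account = "mystorageacct"  # hypothetical storage account name
    print(
        render_cluster_spark_conf(
            settings={
                "spark.sql.shuffle.partitions": "8",
                "spark.databricks.delta.preview.enabled": "true",
                "spark.databricks.delta.autoCompact.enabled": "true",
                "spark.databricks.delta.optimizeWrite.enabled": "true",
            },
            hadoop={
                # WASB (blob endpoint) account key property for this account
                f"fs.azure.account.key.{account}.blob.core.windows.net": "<storage account access key value>",
            },
        )
    )
```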

Note: I have tried both the storage account key and a container-level SAS token with full permissions; both methods give exactly the same error message.
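When switching between the two methods, note that the WASB (`blob.core.windows.net`) driver reads an account key and a container SAS token from different property names, so a SAS token placed under the account-key property would not be picked up. This Python sketch builds both property names and the corresponding cluster config lines. It assumes the blob endpoint shown in the config above; the account name `mystorageacct` and container name `staging` are hypothetical.

```python
def account_key_property(account: str) -> str:
    # Account key: applies to every container in the storage account.
    return f"fs.azure.account.key.{account}.blob.core.windows.net"


def container_sas_property(account: str, container: str) -> str:
    # SAS token: scoped to a single container; the value is the SAS query string.
    return f"fs.azure.sas.{container}.{account}.blob.core.windows.net"


def as_cluster_conf_line(prop: str, value: str) -> str:
    # In the cluster Spark config, Hadoop properties need the "spark.hadoop." prefix.
    return f"spark.hadoop.{prop} {value}"


if __name__ == "__main__":
    account, container = "mystorageacct", "staging"  # hypothetical names
    print(as_cluster_conf_line(account_key_property(account), "<storage account access key value>"))
    print(as_cluster_conf_line(container_sas_property(account, container), "<container SAS token>"))
```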

Also note that before I found the documentation on modifying the cluster's Spark config, I was getting an error message saying that anonymous connections were not allowed. Adding the `spark.hadoop` config option did change the error message, but the error above still remains.

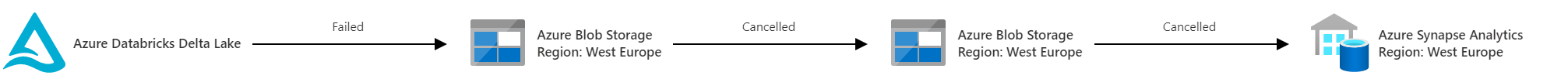

I have tried everything I can think of over the course of several days. At this point I have looked at it so long that I am afraid I cannot see the forest for the trees. Does anyone have any comments, questions, or suggestions?

I did notice that when you execute the ADF pipeline in Debug mode, the usual activity run view shows:

It appears to be using the storage account to do some work before the actual PolyBase staging step. I would love to understand that portion better as well.