Hello @Summer ,

Thanks for the question and for using the MS Q&A platform.

Your HDInsight cluster's ability to access files in Data Lake Storage Gen2 is controlled through managed identities. A managed identity is an identity registered in Azure Active Directory (Azure AD) whose credentials are managed by Azure. With managed identities, you don't need to register service principals in Azure AD or maintain credentials such as certificates.

Case1: Access the default storage account created while creating the HDInsight cluster.

There are several ways you can access the files in Data Lake Storage Gen2 from an HDInsight cluster.

- Using the fully qualified name. With this approach, you provide the full path to the file that you want to access:

  abfs://<containername>@<accountname>.dfs.core.windows.net/<file.path>/

- Using the shortened path format. With this approach, you replace the path up to the cluster root with:

  abfs:///<file.path>/

- Using the relative path. With this approach, you only provide the relative path to the file that you want to access:

  /<file.path>/
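To make the three path formats concrete, here is a minimal Python sketch that builds each form from its parts. The function names and the sample container, account, and file path are hypothetical and only for illustration; the sketch does plain string formatting and does not contact any storage account.

```python
def fully_qualified(container: str, account: str, file_path: str) -> str:
    """Fully qualified name: container and storage account are spelled out."""
    return f"abfs://{container}@{account}.dfs.core.windows.net/{file_path.lstrip('/')}"


def shortened(file_path: str) -> str:
    """Shortened format: the path up to the cluster root is replaced by abfs:///."""
    return f"abfs:///{file_path.lstrip('/')}"


def relative(file_path: str) -> str:
    """Relative path: only the path to the file, starting from the root."""
    return "/" + file_path.lstrip("/")


if __name__ == "__main__":
    # Hypothetical values, not taken from any real cluster.
    path = "example/data/sample.log"
    print(fully_qualified("mycontainer", "myaccount", path))
    print(shortened(path))
    print(relative(path))
```

On a cluster whose default storage is that same container and account, all three strings would be expected to refer to the same file.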

Case2: Access the additional storage account

If you want to access data residing on external storage, you will have to add that storage as additional storage in the HDInsight cluster.

Steps to add storage accounts to an existing cluster via the Ambari UI:

Step 1: From a web browser, navigate to https://CLUSTERNAME.azurehdinsight.net, where CLUSTERNAME is the name of your cluster.

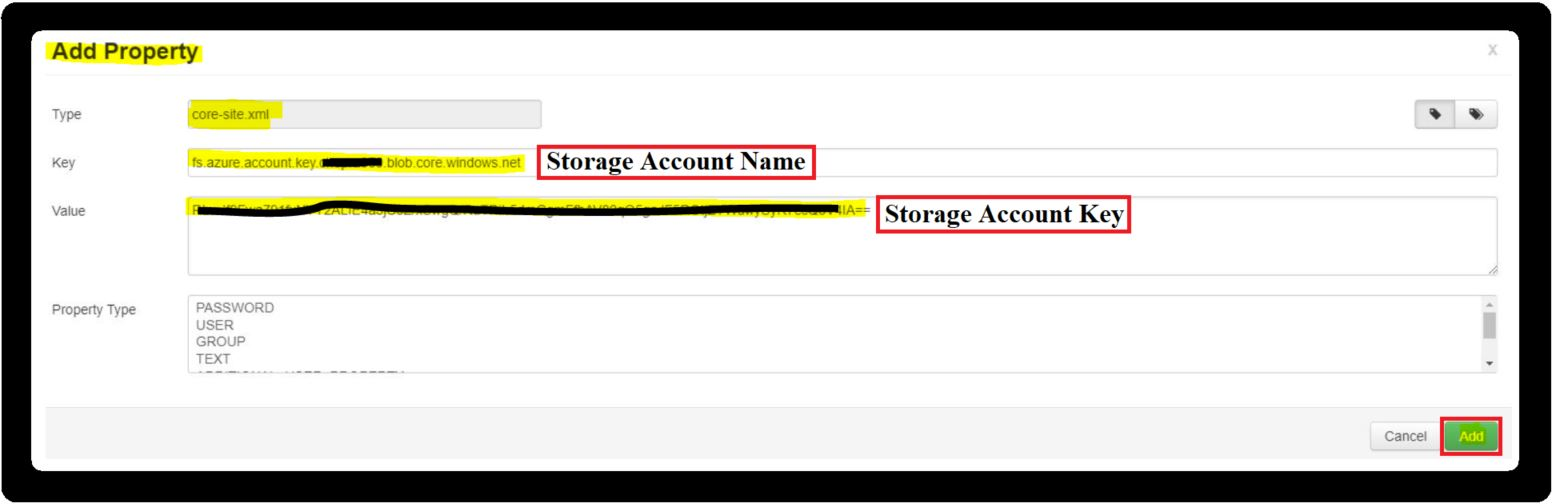

Step 2: Navigate to HDFS --> Configs --> Advanced, then scroll down to Custom core-site.

Step 3: Select Add Property and enter your storage account name and key in the following manner:

Key => fs.azure.account.key.(storage_account).blob.core.windows.net

Value => (Storage Access Key)

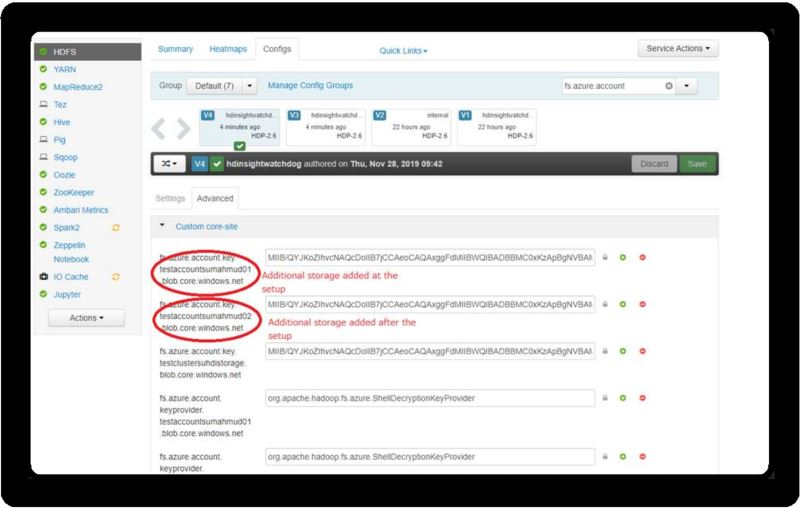

Step 4: Observe the keys that begin with fs.azure.account.key. The account name will be a part of the key as seen in this sample image:

For more details, refer to "Use Azure Data Lake Storage Gen2 with Azure HDInsight clusters" and "Add additional storage accounts to HDInsight".
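As a rough illustration of Steps 3 and 4, the Python sketch below builds the Custom core-site property in the Key/Value form shown above and then recovers account names from keys that begin with fs.azure.account.key. The helper names and sample values are hypothetical, and the code only manipulates strings; it does not talk to Ambari or change any cluster configuration.

```python
KEY_PREFIX = "fs.azure.account.key."
KEY_SUFFIX = ".blob.core.windows.net"


def account_key_property(storage_account: str, access_key: str) -> dict:
    """Return the core-site property (name -> value) for one storage account."""
    return {f"{KEY_PREFIX}{storage_account}{KEY_SUFFIX}": access_key}


def configured_accounts(core_site: dict) -> list:
    """List the storage account names embedded in the account-key property names."""
    return [
        name[len(KEY_PREFIX):-len(KEY_SUFFIX)]
        for name in core_site
        if name.startswith(KEY_PREFIX) and name.endswith(KEY_SUFFIX)
    ]


if __name__ == "__main__":
    # Placeholder account name and key, for illustration only.
    core_site = {"fs.defaultFS": "placeholder"}
    core_site.update(account_key_property("mystorage", "PLACEHOLDER_ACCESS_KEY"))
    print(configured_accounts(core_site))
```

The access key is a secret, so in practice it should not be hard-coded or printed.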

Hope this helps. Please let us know if you have any further queries.

------------------------------

- Please don't forget to click on the accept-answer or upvote button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer.

- Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and helping shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators
