Tutorial: Use Custom Vision with an IoT device to report visual states

This sample app illustrates how to use Custom Vision to train a device with a camera to detect visual states. You can run this detection scenario on an IoT device using an exported ONNX model.

A visual state describes the content of an image: an empty room or a room with people, an empty driveway or a driveway with a truck, and so on. In the image below, you can see the app detect when a banana or an apple is placed in front of the camera.

This tutorial will show you how to:

- Configure the sample app to use your own Custom Vision and IoT Hub resources.

- Use the app to train your Custom Vision project.

- Use the app to score new images in real time and send the results to Azure.

If you don't have an Azure subscription, create a free account before you begin.

Prerequisites

- To use the Custom Vision Service, you need to create Custom Vision Training and Prediction resources in Azure. In the Azure portal, fill out the dialog on the Create Custom Vision page to create both a Training and a Prediction resource.

Important

The Custom Vision project you use with this app must be a Compact image classification project, because the app exports the trained model to ONNX later.

- You'll also need to create an IoT Hub resource on Azure.

- Visual Studio 2015 or later

- Optionally, an IoT device running Windows 10 IoT Core version 17763 or higher. You can also run the app directly from your PC.

- For Raspberry Pi 2 and 3, you can set up Windows 10 directly from the IoT Dashboard app. For other devices, such as DragonBoard, you'll need to flash the device using the eMMC method. If you need help setting up a new device, see Setting up your device in the Windows IoT documentation.

About the Visual Alerts app

The IoT Visual Alerts app runs in a continuous loop, switching between four different states as appropriate:

- No Model: A no-op state. The app will continually sleep for one second and check the camera.

- Capturing Training Images: In this state, the app captures a picture and uploads it as a training image to the target Custom Vision project. The app then sleeps for 500 ms and repeats the operation until it has captured the target number of images. Then it triggers the training of the Custom Vision model.

- Waiting For Trained Model: In this state, the app calls the Custom Vision API every second to check whether the target project contains a trained iteration. When it finds one, it downloads the corresponding ONNX model to a local file and switches to the Scoring state.

- Scoring: In this state, the app uses Windows ML to evaluate a single frame from the camera against the local ONNX model. The resulting image classification is displayed on the screen and sent as a message to the IoT Hub. The app then sleeps for one second before scoring a new image.
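The four states above form a simple state machine. The following Python sketch models the transitions and sleep intervals described in this section; it is not the sample's C# code, and the `AppState` enum, the `next_state` function, and its keyword flags are hypothetical stand-ins for the UI button, the IoT Hub methods, and the Custom Vision API checks.

```python
from enum import Enum, auto


class AppState(Enum):
    NO_MODEL = auto()
    CAPTURING_TRAINING_IMAGES = auto()
    WAITING_FOR_TRAINED_MODEL = auto()
    SCORING = auto()


# Seconds the loop sleeps before running a state again (from the list above).
SLEEP_SECONDS = {
    AppState.NO_MODEL: 1.0,
    AppState.CAPTURING_TRAINING_IMAGES: 0.5,
    AppState.WAITING_FOR_TRAINED_MODEL: 1.0,
    AppState.SCORING: 1.0,
}


def next_state(state, *, learning_requested=False, delete_requested=False,
               images_captured=0, target_images=30,
               trained_iteration_available=False):
    """Decide which state the loop runs next.

    All keyword flags are hypothetical inputs standing in for the UI button,
    the IoT Hub methods, and the Custom Vision API checks.
    """
    if delete_requested:
        return AppState.NO_MODEL  # reset, as DeleteCurrentModel does
    if learning_requested:
        return AppState.CAPTURING_TRAINING_IMAGES  # as EnterLearningMode does
    if (state is AppState.CAPTURING_TRAINING_IMAGES
            and images_captured >= target_images):
        return AppState.WAITING_FOR_TRAINED_MODEL
    if (state is AppState.WAITING_FOR_TRAINED_MODEL
            and trained_iteration_available):
        # A trained iteration was found; the app downloads the ONNX model
        # and moves on to scoring.
        return AppState.SCORING
    return state  # otherwise stay in the same state for another pass
```

Keeping the transition logic in a pure function like this makes it testable without a camera or an Azure connection; a real loop would call it after each pass and then sleep for `SLEEP_SECONDS[state]`.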

Examine the code structure

The following files handle the main functionality of the app.

| File | Description |
|---|---|
| MainPage.xaml | This file defines the XAML user interface. It hosts the web camera control and contains the labels used for status updates. |
| MainPage.xaml.cs | This code controls the behavior of the XAML UI. It contains the state machine processing code. |
| CustomVision\CustomVisionServiceWrapper.cs | This class is a wrapper that handles integration with the Custom Vision Service. |
| CustomVision\CustomVisionONNXModel.cs | This class is a wrapper that handles integration with Windows ML for loading the ONNX model and scoring images against it. |
| IoTHub\IotHubWrapper.cs | This class is a wrapper that handles integration with IoT Hub for uploading scoring results to Azure. |

Set up the Visual Alerts app

Follow these steps to get the IoT Visual Alerts app running on your PC or IoT device.

- Clone or download the IoTVisualAlerts sample on GitHub.

- Open the solution IoTVisualAlerts.sln in Visual Studio.

- Integrate your Custom Vision project:

  - In the CustomVision\CustomVisionServiceWrapper.cs script, update the ApiKey variable with your training key.

  - Then update the Endpoint variable with the endpoint URL associated with your key.

  - Update the targetCVSProjectGuid variable with the corresponding ID of the Custom Vision project that you want to use.

- Set up the IoT Hub resource:

  - In the IoTHub\IotHubWrapper.cs script, update the s_connectionString variable with the proper connection string for your device.

  - On the Azure portal, load your IoT Hub instance, select IoT devices under Explorers, select your target device (or create one if needed), and find the connection string under Primary Connection String. The string contains your IoT Hub name, device ID, and shared access key, in the following format:

    HostName={your iot hub name}.azure-devices.net;DeviceId={your device id};SharedAccessKey={your access key}
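Before pasting the connection string into s_connectionString, it can help to confirm that it contains all three expected parts. The Python sketch below is a hypothetical helper, not part of the sample; it only checks the string's shape, not whether the key is valid.

```python
REQUIRED_KEYS = ("HostName", "DeviceId", "SharedAccessKey")


def parse_device_connection_string(conn_str: str) -> dict:
    """Split an IoT Hub device connection string into its key/value parts.

    Hypothetical helper for checking the string's shape only.
    """
    parts = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue  # tolerate a trailing semicolon
        # partition() splits on the first "=" only, so a Base64 access key
        # ending in "=" padding is kept intact.
        key, sep, value = segment.partition("=")
        if not sep or not value:
            raise ValueError(f"malformed segment: {segment!r}")
        parts[key] = value
    missing = [key for key in REQUIRED_KEYS if key not in parts]
    if missing:
        raise ValueError("missing " + ", ".join(missing))
    return parts
```

A string copied without its HostName= prefix, for example, fails the check because its first segment has no key.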

Run the app

If you're running the app on your PC, select Local Machine for the target device in Visual Studio, and select x64 or x86 for the target platform. Then press F5 to run the program. The app should start and display the live feed from the camera and a status message.

If you're deploying to an IoT device with an ARM processor, select ARM as the target platform and Remote Machine as the target device. Provide the IP address of your device when prompted (it must be on the same network as your PC). You can get the IP address from the Windows IoT default app once you boot the device and connect it to the network. Press F5 to run the program.

When you run the app for the first time, it won't have any knowledge of visual states. It will display a status message that no model is available.

Capture training images

To set up a model, you need to put the app in the Capturing Training Images state. Take one of the following steps:

- If you're running the app on a PC, use the button in the top-right corner of the UI.

- If you're running the app on an IoT device, call the EnterLearningMode method on the device through the IoT Hub. You can call it through the device entry in the IoT Hub menu on the Azure portal, or with a tool such as IoT Hub Device Explorer.

When the app enters the Capturing Training Images state, it will capture about two images every second until it has reached the target number of images. By default, the target is 30 images, but you can set this parameter by passing the desired number as an argument to the EnterLearningMode IoT Hub method.

While the app is capturing images, you must expose the camera to the types of visual states that you want to detect (for example, an empty room, a room with people, an empty desk, a desk with a toy truck, and so on).
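The EnterLearningMode argument and the capture rate described above can be sketched as follows. This Python sketch assumes the method payload is either empty or a bare JSON number such as `45`; the sample's actual payload format and the function names here are assumptions. It also estimates the minimum capture time from the 500 ms sleep between captures.

```python
import json
from typing import Optional

DEFAULT_TARGET_IMAGES = 30      # default target described above
CAPTURE_INTERVAL_SECONDS = 0.5  # the app sleeps 500 ms between captures


def target_images_from_payload(payload: Optional[str]) -> int:
    """Read the requested image count from a direct-method payload.

    Assumption: the payload is empty or a bare JSON number such as "45".
    """
    if payload is None or not payload.strip():
        return DEFAULT_TARGET_IMAGES
    value = json.loads(payload)
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(value, bool) or not isinstance(value, int) or value <= 0:
        raise ValueError(f"expected a positive integer, got {payload!r}")
    return value


def estimated_capture_seconds(target_images: int) -> float:
    """Lower bound on capture time: sleep time only, ignoring upload latency."""
    return target_images * CAPTURE_INTERVAL_SECONDS
```

With the default of 30 images, the sleeps alone take 15 seconds, so plan to spend at least that long showing each visual state to the camera; capture and upload time add to it.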

Train the Custom Vision model

Once the app has finished capturing images, it will upload them and then switch to the Waiting For Trained Model state. At this point, you need to go to the Custom Vision website and build a model based on the new training images. The following animation shows an example of this process.

To repeat this process with your own scenario:

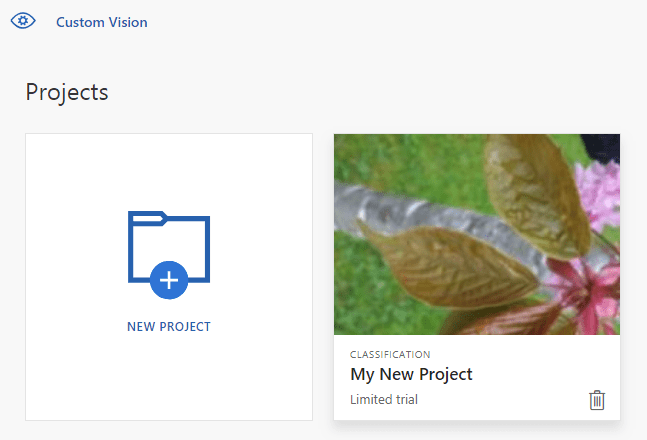

- Sign in to the Custom Vision website.

- Find your target project, which should now have all the training images that the app uploaded.

- For each visual state that you want to identify, select the appropriate images and manually apply the tag.

  - For example, if your goal is to distinguish between an empty room and a room with people in it, we recommend tagging five or more images with people as a new class, People, and tagging five or more images without people as the Negative tag. This will help the model differentiate between the two states.

  - As another example, if your goal is to approximate how full a shelf is, then you might use tags such as EmptyShelf, PartiallyFullShelf, and FullShelf.

- When you're finished, select the Train button.

- Once training is complete, the app will detect that a trained iteration is available. It will start the process of exporting the trained model to ONNX and downloading it to the device.
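Before selecting Train, you can check that every tag meets the "five or more images" recommendation above. In this Python sketch the per-tag counts are a plain dictionary you would fill in from the Custom Vision website; the function name is hypothetical.

```python
from typing import Dict, List

MIN_IMAGES_PER_TAG = 5  # the "five or more" recommendation above


def tags_needing_images(tag_counts: Dict[str, int],
                        minimum: int = MIN_IMAGES_PER_TAG) -> List[str]:
    """Return, sorted by name, the tags with fewer than `minimum` images."""
    return sorted(tag for tag, count in tag_counts.items() if count < minimum)
```

For the shelf example, `tags_needing_images({"EmptyShelf": 5, "PartiallyFullShelf": 4, "FullShelf": 6})` flags only PartiallyFullShelf, so you'd capture or tag more images of that state before training.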

Use the trained model

Once the app downloads the trained model, it will switch to the Scoring state and start scoring images from the camera in a continuous loop.

For each captured image, the app displays the top tag on the screen. If it doesn't recognize the visual state, it displays No Matches. The app also sends these results to the IoT Hub. When a class is detected, the message includes the label, the confidence score, and a property called detectedClassAlert, which IoT Hub clients can use for fast message routing based on properties.
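The following Python sketch shows one way to turn a frame's tag scores into the displayed result and an outgoing message. It is not the sample's code: the 0.5 confidence threshold that decides No Matches, the JSON field names, and the use of the label as the detectedClassAlert value are all assumptions.

```python
import json
from typing import Dict, Optional, Tuple

NO_MATCHES = "No Matches"


def top_tag(scores: Dict[str, float],
            threshold: float) -> Optional[Tuple[str, float]]:
    """Return (label, confidence) for the best tag, or None below the threshold."""
    if not scores:
        return None
    label, confidence = max(scores.items(), key=lambda item: item[1])
    return (label, confidence) if confidence >= threshold else None


def build_message(scores: Dict[str, float],
                  threshold: float = 0.5) -> Tuple[str, Dict[str, str]]:
    """Build a JSON body plus application properties for one scored frame."""
    result = top_tag(scores, threshold)
    if result is None:
        # Nothing recognized: report "No Matches" and set no alert property.
        return json.dumps({"label": NO_MATCHES}), {}
    label, confidence = result
    body = json.dumps({"label": label, "confidence": confidence})
    # Assumption: the alert property carries the detected label.
    return body, {"detectedClassAlert": label}
```

Putting the alert in a message property rather than only in the body lets a routing rule match detections without parsing the JSON body.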

In addition, the sample uses a Sense HAT library to detect whether it's running on a Raspberry Pi with a Sense HAT attached. If so, it uses the Sense HAT's LED matrix as an output display: all lights turn red whenever the app detects a class and go blank when it doesn't detect anything.
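The display behavior described above amounts to choosing one of two full-matrix frames. This Python sketch builds the 64-pixel frame for the Sense HAT's 8x8 LED matrix; the names are hypothetical, and the C# sample uses its own Sense HAT library rather than this code.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]

RED: Pixel = (255, 0, 0)
OFF: Pixel = (0, 0, 0)
LED_COUNT = 8 * 8  # the Sense HAT has an 8x8 RGB LED matrix


def led_frame(class_detected: bool) -> List[Pixel]:
    """Return all 64 pixels red when a class is detected, otherwise all off."""
    color = RED if class_detected else OFF
    return [color] * LED_COUNT
```

On Raspberry Pi OS, the Python sense-hat package's `SenseHat.set_pixels()` expects a list of 64 RGB pixel values, so a frame in this shape could be adapted to it if you port the idea to Python.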

Reuse the app

If you'd like to reset the app to its original state, select the button in the top-right corner of the UI, or invoke the DeleteCurrentModel method through the IoT Hub.

At any point, you can repeat the step of uploading training images by selecting the top-right UI button or calling the EnterLearningMode method again.

If you're running the app on a device and need to retrieve the IP address again (to establish a remote connection through the Windows IoT Remote Client, for example), you can call the GetIpAddress method through IoT Hub.
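The app exposes several IoT Hub methods (EnterLearningMode, DeleteCurrentModel, and GetIpAddress). IoT Hub direct methods return a status code and a payload, so a device needs a way to map each method name to a handler. This Python sketch shows that dispatch logic only; the `DirectMethodRouter` class, the 404 status for unknown names, and the stand-in handlers are hypothetical, and the sample's C# code wires these methods up through the Azure IoT device SDK instead.

```python
import json
from typing import Callable, Dict, Tuple

# A handler takes the raw JSON payload and returns (status code, JSON payload).
Handler = Callable[[str], Tuple[int, str]]


class DirectMethodRouter:
    """Map IoT Hub direct-method names to local handler functions."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, name: str, handler: Handler) -> None:
        self._handlers[name] = handler

    def invoke(self, name: str, payload: str = "") -> Tuple[int, str]:
        handler = self._handlers.get(name)
        if handler is None:
            # Returning 404 for unknown method names is a convention chosen here.
            return 404, json.dumps({"error": f"unknown method {name}"})
        return handler(payload)


# Example wiring with stand-in handlers; 192.0.2.10 is a documentation address.
router = DirectMethodRouter()
router.register("GetIpAddress", lambda _: (200, json.dumps("192.0.2.10")))
router.register("DeleteCurrentModel", lambda _: (200, json.dumps("model deleted")))
```

With this wiring, `router.invoke("GetIpAddress")` returns status 200 with the placeholder address as a JSON string, and an unregistered name returns 404.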

Clean up resources

Delete your Custom Vision project if you no longer want to maintain it. On the Custom Vision website, navigate to Projects and select the trash can icon under your new project.

Next steps

In this tutorial, you set up and ran an application that detects visual state information on an IoT device and sends the results to the IoT Hub. Next, explore the source code further or make one of the suggested modifications below.

- Add an IoT Hub method to switch the app directly to the Waiting For Trained Model state. This way, you can train the model with images that aren't captured by the device itself and then push the new model to the device on command.

- Follow the Visualize real-time sensor data tutorial to create a Power BI Dashboard to visualize the IoT Hub alerts sent by the sample.

- Follow the IoT remote monitoring tutorial to create a Logic App that responds to the IoT Hub alerts when visual states are detected.
