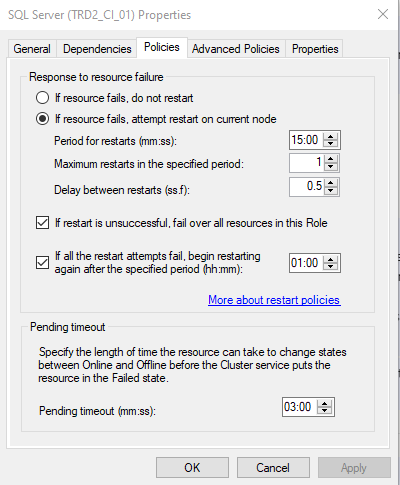

The cluster service, however, tried to restart the SQL role a couple of times on the same node it crashed on.

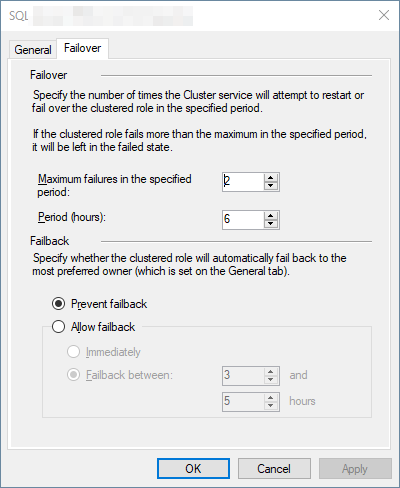

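Unless you've changed the cluster settings, this is the default behavior: the cluster first tries to restart the failed resource on its current node, and it only fails the role over to another node once those restart attempts are used up.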
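To make the restart-then-failover sequence concrete, here is a toy Python model. It is not the cluster service's actual algorithm. `RestartPolicy`, `simulate`, and the numbers used below are hypothetical, loosely modeled on a resource's restart threshold and restart period settings. The model assumes every start attempt fails and takes a fixed `attempt_s` seconds to be declared failed.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class RestartPolicy:
    """Hypothetical restart policy (toy model, not the real cluster service).

    threshold: restarts allowed on the current node inside the window
    period_s:  length of the sliding restart window, in seconds
    """
    threshold: int
    period_s: float


def simulate(policy: RestartPolicy, attempt_s: float,
             max_attempts: int = 1000) -> Tuple[int, Optional[float]]:
    """Simulate a resource that fails every time it is started.

    Returns (restarts on the original node, seconds from the first failure
    until the role is failed over). The second value is None if the model
    never fails over within max_attempts restarts.
    """
    failures: List[float] = []
    now = 0.0  # time of the initial failure
    for restarts in range(max_attempts):
        failures.append(now)
        # Count only failures that fall inside the sliding restart window.
        recent = [t for t in failures if now - t < policy.period_s]
        if len(recent) > policy.threshold:
            return restarts, now  # threshold exceeded: fail the role over
        # Otherwise restart on the same node; the attempt fails again later.
        now += attempt_s
    return max_attempts, None
```

For example, `simulate(RestartPolicy(threshold=3, period_s=900), attempt_s=180)` returns `(3, 540.0)`: three restarts on the original node, then failover nine minutes after the first failure. If each attempt takes as long as the whole window, the window never fills up and this model keeps restarting on the same node, which is why slow start attempts can stretch out the time before failover.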

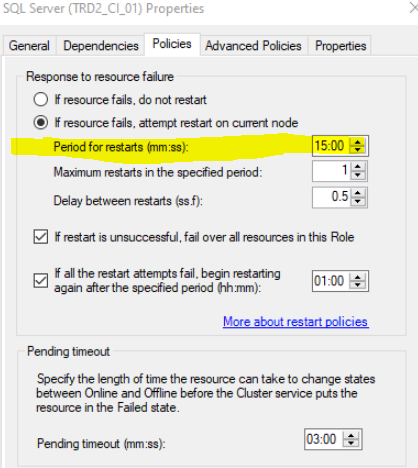

Then, after about 15 minutes, it finally failed over to node B. Why did it take so long for node B to take over the role?

You'll have to look at the cluster log to determine exactly why, but it's most likely a matter of timing: your cluster configuration combined with the restart and failover values configured (most likely left at their defaults) for the resource and the role.
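To see where those 15 minutes actually went, you can generate the cluster log (for example with the `Get-ClusterLog` PowerShell cmdlet) and measure the gap between the first failure and the move to node B. The Python sketch below assumes each log line carries a timestamp in the form `<pid>.<tid>::YYYY/MM/DD-HH:MM:SS.mmm`. The function names and the marker strings you pass in are hypothetical, so pick substrings that match the actual entries for your resource.

```python
import re
from datetime import datetime, timedelta
from typing import Iterable, Optional

# Assumed timestamp layout: "<pid>.<tid>::YYYY/MM/DD-HH:MM:SS.mmm ..."
TIMESTAMP = re.compile(r"::(\d{4}/\d{2}/\d{2}-\d{2}:\d{2}:\d{2}\.\d{3})")


def parse_ts(line: str) -> Optional[datetime]:
    """Return the timestamp of a log line, or None if it has none."""
    m = TIMESTAMP.search(line)
    if m is None:
        return None
    return datetime.strptime(m.group(1), "%Y/%m/%d-%H:%M:%S.%f")


def elapsed_between(lines: Iterable[str], start_marker: str,
                    end_marker: str) -> Optional[timedelta]:
    """Time from the first line containing start_marker to the first
    later line containing end_marker (None if either is missing)."""
    start: Optional[datetime] = None
    for line in lines:
        ts = parse_ts(line)
        if ts is None:
            continue  # skip lines without a recognizable timestamp
        if start is None:
            if start_marker in line:
                start = ts
        elif end_marker in line:
            return ts - start
    return None
```

Usage: read the log file with `open(...)` and pass the file object as `lines`; the result is a `timedelta` you can compare against the restart and failover settings of the resource and role.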