Hello @Tobias Himmighöfer ,

Welcome to the Microsoft Q&A platform.

I tried to reproduce this, and when I executed the above command for the first time I ran into the same problem.

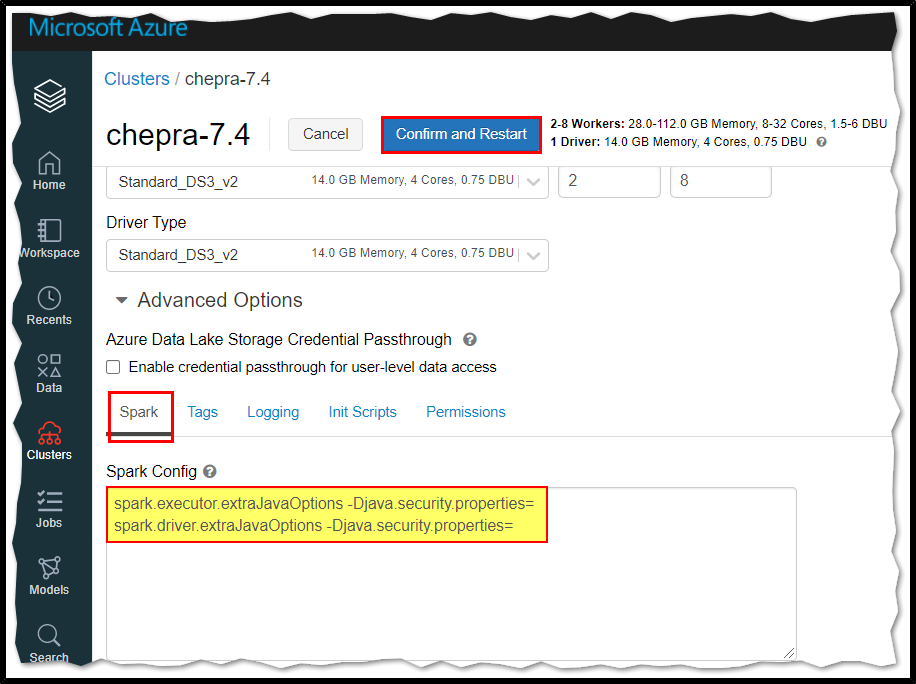

Solution: It started working after I overrode the Java security properties for the driver and the executors with an empty value:

spark.driver.extraJavaOptions -Djava.security.properties=

spark.executor.extraJavaOptions -Djava.security.properties=

Reason:

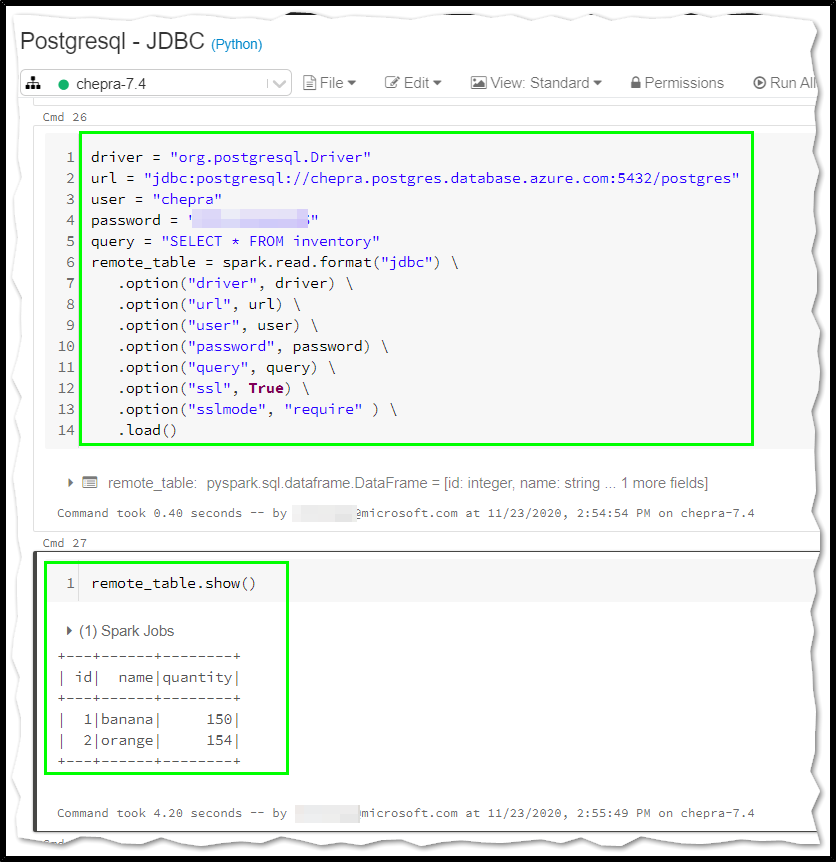

What is actually happening is that the JVM's "security" variable reads the file /databricks/spark/dbconf/java/extra.security by default, and this file disables some TLS algorithms. That means that if I edit this file and replace the disabled TLS ciphers that Postgres flexible server needs with an empty string, that should also work.
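If you want to see which algorithms that file disables before overriding it, a small sketch like the one below could help. It is only an illustration: it assumes the file uses the standard JDK security property `jdk.tls.disabledAlgorithms` and the usual `key=value` format with backslash line continuations. The helper names `parse_security_properties` and `disabled_tls_algorithms` are my own and not part of any Databricks or Spark API.

```python
from pathlib import Path

# Path taken from the answer above; it exists only on a Databricks cluster node.
EXTRA_SECURITY = Path("/databricks/spark/dbconf/java/extra.security")


def parse_security_properties(text: str) -> dict[str, str]:
    """Parse java.security-style `key=value` text, joining backslash-continued lines."""
    props = {}
    pending = ""  # accumulates a value that continues over several lines
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and comments unless we are in the middle of a continuation.
        if not pending and (not line or line.startswith("#")):
            continue
        if line.endswith("\\"):
            pending += line[:-1].strip() + " "
            continue
        entry = pending + line
        pending = ""
        key, sep, value = entry.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def disabled_tls_algorithms(text: str) -> list[str]:
    """Return the comma-separated entries of jdk.tls.disabledAlgorithms (assumed key)."""
    value = parse_security_properties(text).get("jdk.tls.disabledAlgorithms", "")
    return [item.strip() for item in value.split(",") if item.strip()]


if __name__ == "__main__":
    if EXTRA_SECURITY.exists():
        print(disabled_tls_algorithms(EXTRA_SECURITY.read_text()))
    else:
        print(f"{EXTRA_SECURITY} not found; run this on a cluster node")
```

Running it in a notebook cell on the cluster should list the disabled entries, which you can then compare with the ciphers your Postgres flexible server expects.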

When I set this variable for the executors (spark.executor.extraJavaOptions), it does not change the JVM's default values. The driver behaves differently: setting it there overrides the defaults, and so the connection starts to work.

Hope this helps. Do let us know if you have any further queries.
