Hello @Vinny Paluch ,

Thanks for the question and for using the forum.

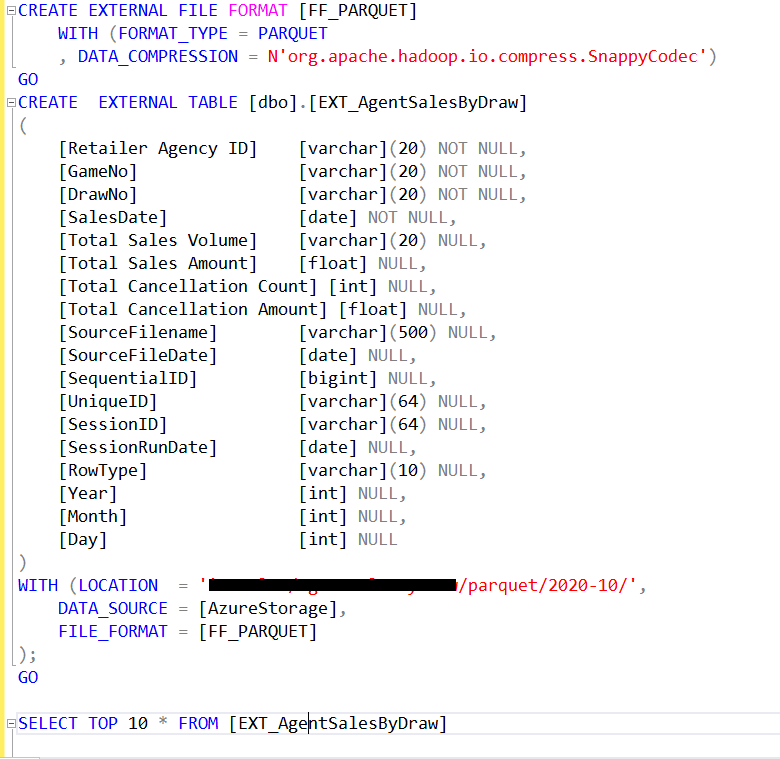

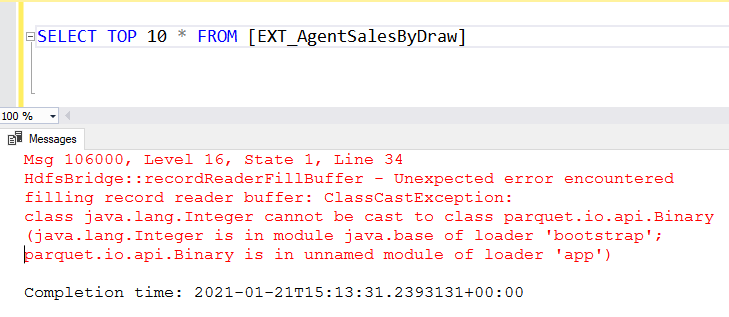

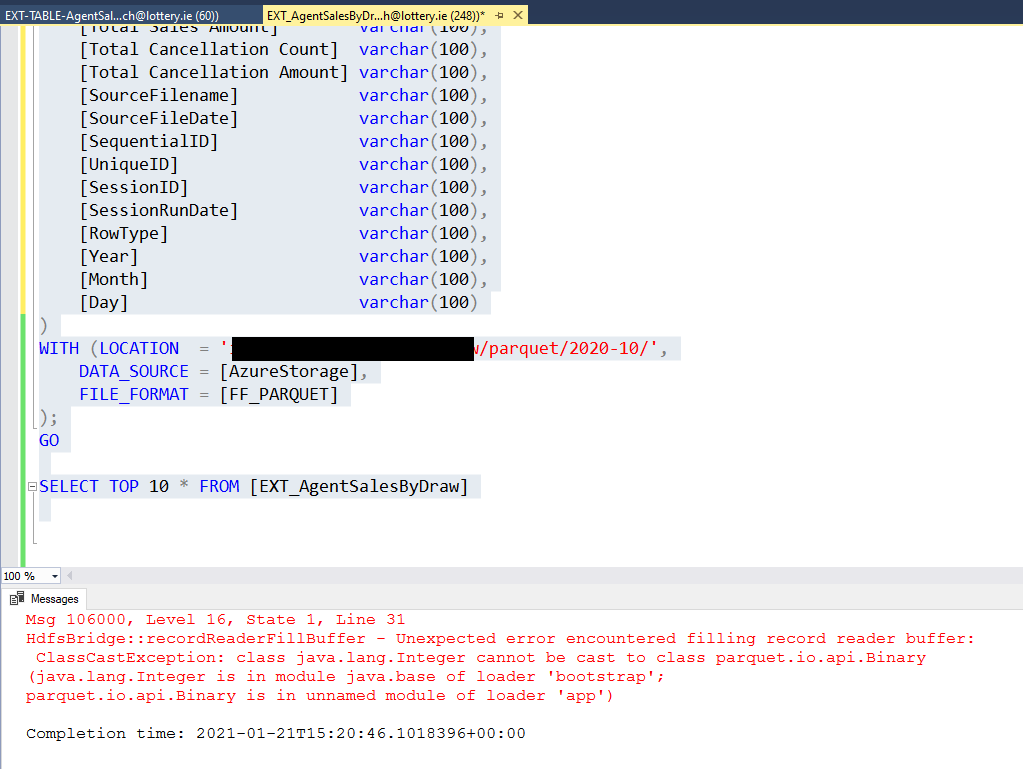

I am confident that the data types are not mapped correctly. I am not sure if you have already gone through this doc: https://learn.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/design-elt-data-loading#define-the-tables

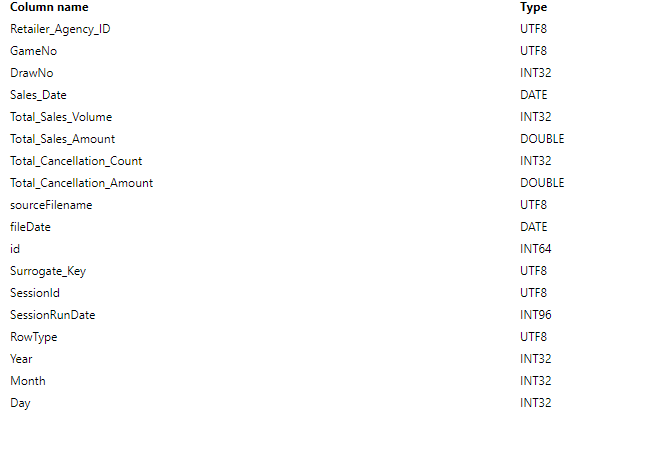

On a quick look, I see that drawno and Sessionrundate are probably not mapped to the correct data types.
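If it helps to pinpoint which rows break the mapping, you could run a small check like the one below against a sample of the source file before loading. This is only a minimal sketch: it assumes (not confirmed in this thread) that drawno should hold integers and Sessionrundate should hold dates in `yyyy-MM-dd` format, and `CHECKS`, `is_int`, `is_date` and `find_bad_values` are hypothetical helpers, not part of Synapse.

```python
from datetime import datetime


def is_int(value: str) -> bool:
    """True if the text converts cleanly to an integer."""
    try:
        int(value)
        return True
    except ValueError:
        return False


def is_date(value: str, fmt: str = "%Y-%m-%d") -> bool:
    """True if the text parses as a date in the assumed format."""
    try:
        datetime.strptime(value, fmt)
        return True
    except ValueError:
        return False


# Hypothetical target types for the two columns called out above;
# change these to match the actual table definition.
CHECKS = {"drawno": is_int, "Sessionrundate": is_date}


def find_bad_values(rows):
    """Return (row_index, column, value) for every value that fails its check."""
    bad = []
    for i, row in enumerate(rows):
        for column, check in CHECKS.items():
            value = row.get(column) or ""  # missing fields count as empty
            if not check(value.strip()):
                bad.append((i, column, value))
    return bad


rows = [
    {"drawno": "101", "Sessionrundate": "2023-01-15"},
    {"drawno": "10A", "Sessionrundate": "15/01/2023"},
]
print(find_bad_values(rows))
```

With a real file, the rows can come from `csv.DictReader`. Any value this reports would likely also fail the conversion when loading into a table with those column types.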

Thanks

Himanshu