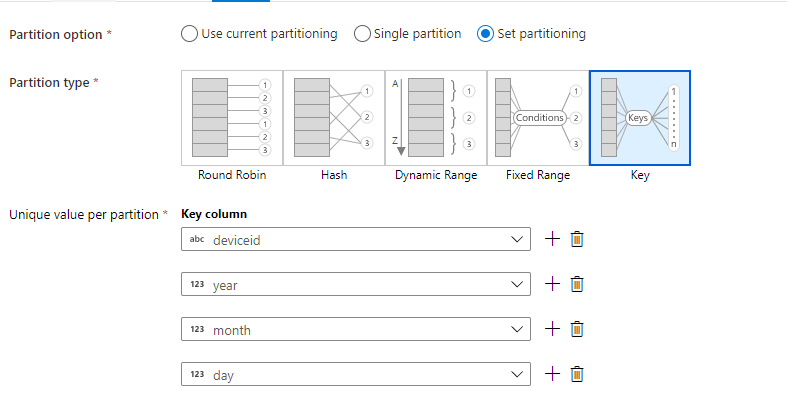

In data flows, you can generate hierarchical folder structures from your data by using Key partitioning in the Sink transformation when writing to ADLS or Blob storage. Open the Sink's Optimize tab, select Key partitioning, and pick the data fields that define your folder structure: https://youtu.be/7Q-db4Qgc4M?t=400
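To illustrate the idea behind key partitioning, here is a minimal Python sketch. It is not ADF code and does not claim to show how the service works internally; it only groups rows by a list of key fields and maps each distinct combination to one folder path. The function name `partition_by_keys`, the sample rows, the `year` and `month` columns, and the plain `value/value` folder naming are all assumptions made for illustration. The actual folder names are determined by the Sink settings.

```python
from collections import defaultdict


def partition_by_keys(rows, key_fields):
    """Group rows by the values of key_fields (hypothetical helper).

    Returns a dict mapping a folder path such as 'abc123/2020/12'
    to the list of rows that share those key values.
    """
    folders = defaultdict(list)
    for row in rows:
        # Build one path segment per key field, in the order given.
        path = "/".join(str(row[field]) for field in key_fields)
        folders[path].append(row)
    return dict(folders)


# Illustrative rows only; year and month are assumed to exist as columns.
rows = [
    {"deviceid": "abc123", "year": 2020, "month": 12, "waterusage": 0.707},
    {"deviceid": "abc111", "year": 2020, "month": 12, "waterusage": 0.470},
    {"deviceid": "abc111", "year": 2020, "month": 12, "waterusage": 0.302},
]

folders = partition_by_keys(rows, ["deviceid", "year", "month"])
for path, members in sorted(folders.items()):
    print(path, len(members))
```

The order of the key fields determines the nesting order of the folders, so listing the device ID first puts it at the top level.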

How to make a folder pattern while transferring data to ADLS Gen 2 using ADF mapping dataflows

I have a blob container in an Azure storage account and I want to migrate it to a hierarchical storage account, i.e. ADLS Gen 2, using a folder structure on the following basis.

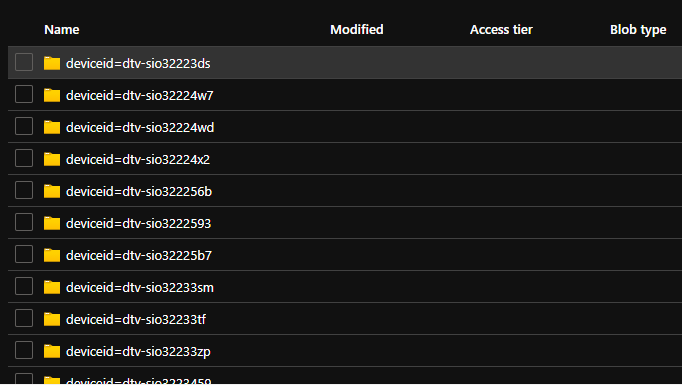

{deviceid}/{date}/{time}. This would lay out the data in a folder structure like the one below:

deviceid

|--> year

|----> month

|------> day

|--------> hour

Sample from my blob file is as below.

{"messageid":"38E3D1A3-E498-AD44-8A8A-A06DA7BAA15F","sysid":"SEN-CH33F7YZ","deviceid":"abc123","ver":"1.0","protocol":"MQTT","ttl":"5000","durable":"true","correlationid":"00000000-0000-0000-0000-000000000000","type":"TLM","timestamp":"1607485875","simulated":"false","data":{"type":"Telemetry","code":"SENSATE_TLM","attributes":[{"watertemperature":"33.540001","waterusage":"0.707000","usageduration":"15.000000"}]},"EventProcessedUtcTime":"2020-12-09T03:54:39.4274990Z","PartitionId":2,"EventEnqueuedUtcTime":"2020-12-09T03:51:13.6160000Z","IoTHub":{"MessageId":null,"CorrelationId":null,"ConnectionDeviceId":"abc123","ConnectionDeviceGenerationId":"637365983999778069","EnqueuedTime":"2020-12-09T03:51:13.5100000Z","StreamId":null}}

{"messageid":"CFE8A8BE-3234-1ED4-8377-37C16654DFF2","sysid":"SEN-CH33F7YZ","deviceid":"abc111","ver":"1.0","protocol":"MQTT","ttl":"5000","durable":"true","correlationid":"00000000-0000-0000-0000-000000000000","type":"TLM","timestamp":"1607485879","simulated":"false","data":{"type":"Telemetry","code":"SENSATE_TLM","attributes":[{"watertemperature":"19.270000","waterusage":"0.470000","usageduration":"5.000000"}]},"EventProcessedUtcTime":"2020-12-09T03:54:39.9119082Z","PartitionId":8,"EventEnqueuedUtcTime":"2020-12-09T03:51:15.6010000Z","IoTHub":{"MessageId":null,"CorrelationId":null,"ConnectionDeviceId":"set-sio32236kh","ConnectionDeviceGenerationId":"637427342913260815","EnqueuedTime":"2020-12-09T03:51:15.4130000Z","StreamId":null}}

{"messageid":"F1AC3AA6-80E6-72B4-8F84-4DC033D33CDB","sysid":"SEN-CH33F7YZ","deviceid":"abc111","ver":"1.0","protocol":"MQTT","ttl":"5000","durable":"true","correlationid":"00000000-0000-0000-0000-000000000000","type":"TLM","timestamp":"1607485877","simulated":"false","data":{"type":"Telemetry","code":"SENSATE_TLM","attributes":[{"watertemperature":"19.360001","waterusage":"0.302000","usageduration":"4.000000"}]},"EventProcessedUtcTime":"2020-12-09T03:54:39.4118700Z","PartitionId":1,"EventEnqueuedUtcTime":"2020-12-09T03:51:17.2890000Z","IoTHub":{"MessageId":null,"CorrelationId":null,"ConnectionDeviceId":"set-sio32229fm","ConnectionDeviceGenerationId":"637117926528097261","EnqueuedTime":"2020-12-09T03:51:17.2290000Z","StreamId":null}}

I am not able to find the exact sink property or setting that would produce this folder layout.

Using "column name" would split my data on the basis of device ID, but I am not sure how to create the further folder levels on the basis of the timestamp. Please suggest an approach if possible.
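For reference, the timestamp-based levels can be derived from the `timestamp` field of each record. The following is a minimal Python sketch, run outside ADF, of the folder path targeted for the first sample record. It assumes that `timestamp` holds epoch seconds in UTC and that levels are zero-padded; the helper name `folder_path` is hypothetical.

```python
from datetime import datetime, timezone


def folder_path(record):
    """Return 'deviceid/year/month/day/hour' for one record (hypothetical helper)."""
    # Assumption: `timestamp` is a string of epoch seconds, interpreted as UTC.
    ts = datetime.fromtimestamp(int(record["timestamp"]), tz=timezone.utc)
    return f"{record['deviceid']}/{ts:%Y}/{ts:%m}/{ts:%d}/{ts:%H}"


# Fields taken from the first sample record above.
sample = {"deviceid": "abc123", "timestamp": "1607485875"}
print(folder_path(sample))  # abc123/2020/12/09/03
```

In a data flow, the equivalent step would be to compute the year, month, day and hour as separate columns before the Sink, so that they can be selected as folder fields alongside `deviceid`.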

1 answer


MarkKromer-MSFT 5,186 Reputation points Microsoft Employee 2021-03-19T19:18:46.473+00:00