Hello @Palash Aich, and welcome to Microsoft Q&A.

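The main difficulty in your request is that the CDM connector runs in Mapping Data Flows, but Data Flows cannot connect to on-premises data stores: they run on an Azure integration runtime, not a self-hosted one. Copy Activity, by contrast, does work with on-premises stores through a self-hosted integration runtime.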

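This leads me to recommend doing it in two steps. First, use a Data Flow to copy from the CDM source to a staging location (such as delimited text files in Data Lake Storage). Then use a Copy Activity, running on a self-hosted integration runtime, to read from the staging location and load the data into your on-premises SQL Server. (The Copy Activity's PolyBase option targets Azure Synapse Analytics sinks, so for an on-premises SQL Server the standard SQL Server sink is the usual choice.)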

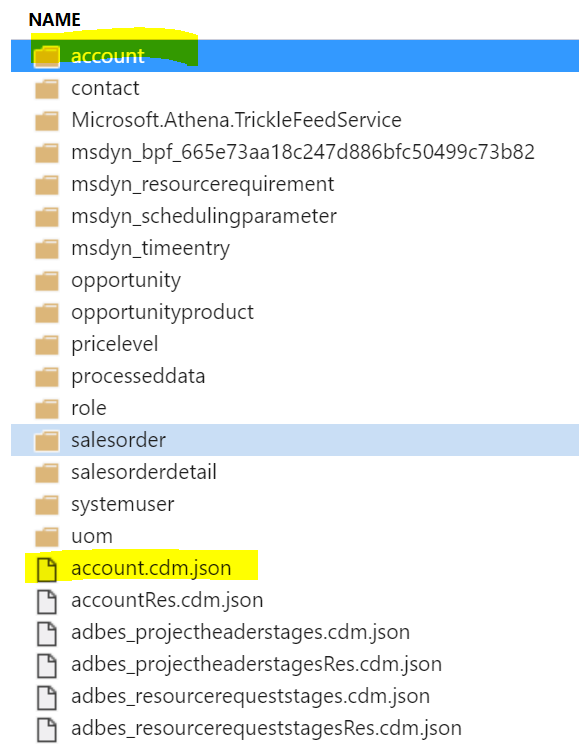
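The two-step pipeline above could be expressed as a pipeline definition roughly like the one this Python script builds. It is a sketch, not a tested pipeline: the pipeline and activity names, the data flow name `CdmToStagingFlow`, and the dataset names `StagingCsv` and `OnPremSqlTable` are hypothetical placeholders. The self-hosted integration runtime is assumed to be configured on the SQL Server linked service behind `OnPremSqlTable` (via `connectVia`), not in the pipeline itself.

```python
import json


def build_pipeline(dataflow_name: str, staging_dataset: str, sql_dataset: str) -> dict:
    """Build a pipeline: run a data flow to staging, then copy staging into SQL Server."""
    # Step 1: the data flow reads the CDM source and writes delimited text to staging.
    stage = {
        "name": "CdmToStaging",
        "type": "ExecuteDataFlow",
        "typeProperties": {
            "dataflow": {"referenceName": dataflow_name, "type": "DataFlowReference"},
        },
    }
    # Step 2: the Copy Activity moves the staged files into the on-premises table.
    copy = {
        "name": "StagingToOnPremSql",
        "type": "Copy",
        # Run only after the data flow has finished writing the staging files.
        "dependsOn": [{"activity": "CdmToStaging", "dependencyConditions": ["Succeeded"]}],
        "inputs": [{"referenceName": staging_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sql_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            "source": {
                "type": "DelimitedTextSource",
                "storeSettings": {"type": "AzureBlobFSReadSettings", "recursive": True},
            },
            "sink": {"type": "SqlServerSink"},
        },
    }
    return {"name": "CdmToOnPremSql", "properties": {"activities": [stage, copy]}}


if __name__ == "__main__":
    pipeline = build_pipeline("CdmToStagingFlow", "StagingCsv", "OnPremSqlTable")
    print(json.dumps(pipeline, indent=2))
```

The `dependsOn` entry is what enforces the ordering: the copy only starts once the data flow has succeeded, so it never reads a half-written staging folder.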

If you know exactly where the CDM data files are in the Data Lake, it may be possible to skip the Data Flow and point a Copy Activity directly at those files, without going through the CDM connector. I'm not very experienced with CDM, so treat this as a 'maybe'.
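For that 'maybe' route, one way to find exactly where the files are is to read the CDM folder's `model.json`, whose local entities list their data partitions with a `location` URL. The sketch below assumes the older `model.json` format (the newer `manifest.cdm.json` format is organized differently), and the entity name, partition name, and storage URL in the sample are made up.

```python
import json
from typing import Dict, List


def partition_locations(model_json_text: str) -> Dict[str, List[str]]:
    """Map each local entity in a CDM model.json to the URLs of its data partitions."""
    model = json.loads(model_json_text)
    result: Dict[str, List[str]] = {}
    for entity in model.get("entities", []):
        # Only local entities carry their own partitions; referenced entities are skipped.
        if entity.get("$type") != "LocalEntity":
            continue
        result[entity["name"]] = [
            p["location"] for p in entity.get("partitions", []) if "location" in p
        ]
    return result


# Hypothetical model.json content, trimmed to the fields used above.
sample = json.dumps({
    "name": "ExampleModel",
    "version": "1.0",
    "entities": [
        {
            "$type": "LocalEntity",
            "name": "Account",
            "partitions": [
                {
                    "name": "Account-part1",
                    "location": "https://exampleaccount.dfs.core.windows.net/cdm/Account/part1.csv",
                }
            ],
        }
    ],
})

print(partition_locations(sample))
```

Each URL found this way could then serve as the file path of a delimited text dataset used as the Copy Activity's source.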