Our team has suggested a few things for you to test:

"There is no “team responsible for the data migration tool”. It is provided as a free, open-source example with community contributions and no intrinsic support provision. Customers with a Premier contract can lean on their Premier support contacts for paid assistance with custom code (including this).

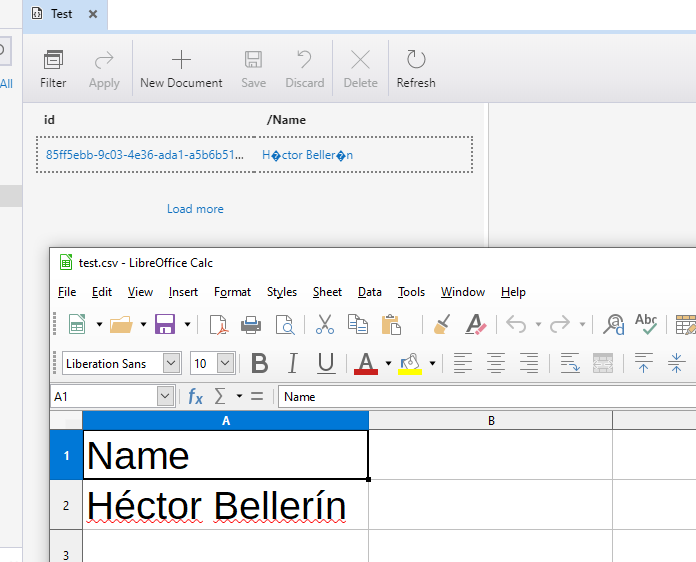

A note to pass on to the customer: it doesn’t seem to have come through quite how complicated this is at the byte level if they are relying on screenshots. LibreOffice has its own encoding habits, expectations, and bugs. A screenshot doesn’t tell us what bytes are landing on disk to then be interpreted by the migration tool. Instead, you can use this short PowerShell command: Get-Content -Encoding Byte -Path "path:\to\file.csv" (in PowerShell 6 and later, use -AsByteStream in place of -Encoding Byte).
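If PowerShell is not to hand (for example, on a non-Windows machine running LibreOffice), the same check can be sketched in Python. This is only an illustrative equivalent, not part of the migration tool; the function name hex_dump is made up for this example.

```python
from pathlib import Path


def hex_dump(path):
    """Return the raw bytes of the file at `path` as an uppercase hex string.

    No decoding is attempted, so the result shows exactly what is on disk,
    including any byte-order mark at the start of the file.
    """
    return Path(path).read_bytes().hex().upper()


# Hypothetical usage (the path is a placeholder, as in the PowerShell command):
# print(hex_dump(r"path:\to\file.csv"))
```

Comparing this hex string for the file LibreOffice saved against the examples in this message should show which encoding was actually written.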

To put some concrete examples to this, I can produce four files with different bytes on disk that all open in Notepad appearing to show “Héctor”.

In the simplest case (new file, copy-paste the string, default save encoding), Notepad writes UTF-8 with no header: it encodes the é (e with acute accent) as the two bytes 0xC3A9 and the other five letters as their single-byte ASCII values, such that the full file is 0x48C3A963746F72.

The same string, saved with the ANSI encoding setting: still no header, but the é is stored as its single ANSI byte 233 / 0xE9, with a full file of 0x48E963746F72.

The same string, saved as UTF-8 with BOM: header of 0xEFBBBF, the é encoded as 0xC3A9, and the five other characters encoded as their ASCII bytes, resulting in: 0xEFBBBF48C3A963746F72

The same string pasted into Windows PowerShell and written using Out-File, which defaults to UTF-16 little endian there: header of 0xFFFE, then each of the six letters stored as a 2-byte little-endian value, resulting in (ignoring the line break that Out-File appends by default): 0xFFFE4800E900630074006F007200
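For anyone who wants to reproduce these four byte sequences without Notepad, here is a minimal Python sketch. It assumes that "ANSI" means Windows-1252, which is the usual case on Western European and US Windows installs but depends on the system locale; the variable names are illustrative only.

```python
import codecs

# "Héctor", written with an escape so this script's own encoding cannot matter.
text = "H\u00e9ctor"

# Each entry mirrors one of the four files described above.
variants = {
    "UTF-8, no BOM": text.encode("utf-8"),
    # Assumption: ANSI == Windows-1252 (cp1252) on the machine in question.
    "ANSI (Windows-1252)": text.encode("cp1252"),
    "UTF-8 with BOM": text.encode("utf-8-sig"),
    # BOM added explicitly so the result does not depend on host byte order.
    "UTF-16 LE with BOM": codecs.BOM_UTF16_LE + text.encode("utf-16-le"),
}

for label, data in variants.items():
    print(f"{label:22} 0x{data.hex().upper()}")
```

All four byte strings decode back to the same six characters when read with the matching encoding, which is why the files look identical in a GUI editor.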

I will reiterate that all four files look identical when opened in a GUI like Notepad. The only step forward here is to use a tool like the PowerShell command I mentioned above to extract the raw bytes, which may be able to pin down where the tools are disagreeing."

Please check this and get back to us with any questions.

Regards

Navtej S