Hi @Kedi Peng , welcome to Microsoft QnA forum.

As the error message says, this is a request timeout issue. We are trying to upload 10,000 documents, and the default timeout for a request is 10 seconds. Loading 10,000 documents can take longer than that, depending on factors such as the distance between the Azure Cosmos DB region and the machine running the code, network latency, etc.

Increasing the timeout to a larger value fixes this. I tried one minute, and it worked fine. Please check the code below:

public static async Task Main(string[] args)

{

CosmosClientOptions ops = new CosmosClientOptions();

ops.RequestTimeout = new TimeSpan(0,1,0);

using (CosmosClient client = new CosmosClient(_endpointUri, _primaryKey, ops))

{

Database database = client.GetDatabase(_databaseId);

Container container = database.GetContainer(_containerId);

List<Food> foods = new Bogus.Faker<Food>()

.RuleFor(p => p.Id, f => (-1 - f.IndexGlobal).ToString())

.RuleFor(p => p.Description, f => f.Commerce.ProductName())

.RuleFor(p => p.ManufacturerName, f => f.Company.CompanyName())

.RuleFor(p => p.FoodGroup, f => "Energy Bars")

.Generate(10000);

int pointer = 0;

while (pointer < foods.Count)

{

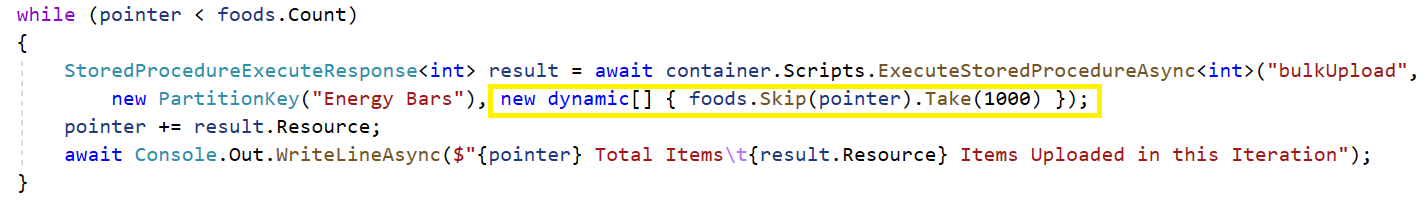

StoredProcedureExecuteResponse<int> result = await container.Scripts.ExecuteStoredProcedureAsync<int>("bulkUpload", new PartitionKey("Energy Bars"), new dynamic[] { foods.Skip(pointer) });

pointer += result.Resource;

await Console.Out.WriteLineAsync($"{pointer} Total Items\t{result.Resource} Items Uploaded in this Iteration");

}

}

}
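The `while` loop works because each stored procedure call reports how many documents it inserted (`result.Resource`), and the next call starts from that position. The sketch below simulates that contract without Cosmos DB so the loop logic can be checked locally. `FakeBulkUpload`, `UploadAll`, and the cap of 3,000 items per call are hypothetical stand-ins for illustration only; they are not part of the Cosmos DB SDK or of the `bulkUpload` stored procedure. The sketch also adds a guard the original loop does not have: if a call inserts nothing, it throws instead of looping forever.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for the "bulkUpload" stored procedure: it "inserts" at most
// maxPerCall items from the batch and returns how many it actually inserted.
// The cap is an illustrative assumption, not Cosmos DB's real server-side limit.
int FakeBulkUpload(IEnumerable<int> batch, List<int> store, int maxPerCall)
{
    int inserted = 0;
    foreach (int item in batch.Take(maxPerCall))
    {
        store.Add(item);
        inserted++;
    }
    return inserted;
}

// Same resume-from-pointer loop as in Main: keep calling the upload with the
// remaining items until every item has been accepted. Returns the number of calls.
int UploadAll(List<int> items, List<int> store, int maxPerCall)
{
    int pointer = 0;
    int calls = 0;
    while (pointer < items.Count)
    {
        int inserted = FakeBulkUpload(items.Skip(pointer), store, maxPerCall);
        if (inserted == 0)
        {
            // Guard against an infinite loop when a call makes no progress.
            throw new InvalidOperationException($"No items inserted at position {pointer}.");
        }
        pointer += inserted;
        calls++;
        Console.WriteLine($"{pointer} Total Items\t{inserted} Items Uploaded in this Iteration");
    }
    return calls;
}

List<int> allItems = Enumerable.Range(0, 10000).ToList();
List<int> uploaded = new List<int>();
int callCount = UploadAll(allItems, uploaded, 3000);
Console.WriteLine($"Finished in {callCount} calls");
```

With 10,000 items and a cap of 3,000 per call, the loop finishes after four calls (3,000 + 3,000 + 3,000 + 1,000).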

Please let me know if this works or if you still face any issues.

----------

If this answer helps, please mark it as 'Accept Answer'.