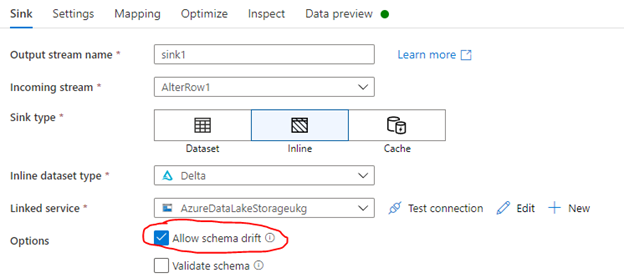

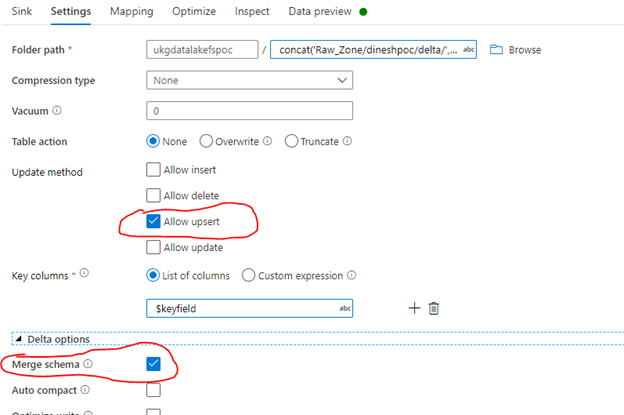

We are trying to apply schema drift to Databricks Delta tables, and it is not working through the Delta Lake connector in ADF.

If we use the Delta Lake API (i.e. the merge API) from PySpark, it works as expected, but the same operation does not work through the ADF Delta connector.
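For context, here is a minimal sketch of the kind of PySpark merge that works for us. The names `merge_with_schema_evolution`, `build_merge_condition`, `source_df`, and the table name and key passed in are illustrative placeholders, not our actual job. It assumes a Databricks or Delta Lake runtime where `delta.tables` is available, and it relies on the `spark.databricks.delta.schema.autoMerge.enabled` setting so that columns present only in the source are added to the target during the merge.

```python
from typing import Iterable


def build_merge_condition(keys: Iterable[str]) -> str:
    """Build the join condition between the aliased target and source."""
    return " AND ".join(f"target.{k} = source.{k}" for k in keys)


def merge_with_schema_evolution(spark, source_df, target_table: str, keys) -> None:
    """Upsert source_df into a Delta table, letting new source columns evolve the schema.

    `spark` is an active SparkSession; `target_table` and `keys` are
    hypothetical placeholders supplied by the caller.
    """
    # Imported here so the helper above can be used without Delta installed.
    from delta.tables import DeltaTable

    # Allow merge to add columns that exist in the source but not in the target.
    spark.conf.set("spark.databricks.delta.schema.autoMerge.enabled", "true")

    target = DeltaTable.forName(spark, target_table)
    (
        target.alias("target")
        .merge(source_df.alias("source"), build_merge_condition(keys))
        .whenMatchedUpdateAll()      # schema evolution applies to the *All() clauses
        .whenNotMatchedInsertAll()
        .execute()
    )
```

A call would look like `merge_with_schema_evolution(spark, source_df, "my_db.my_table", ["Id"])`, where the table name and key are again placeholders.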

Below is the error we are getting from the ADF job. Has anyone experienced this problem and found a resolution?

Error:

StatusCode":"DFExecutorUserError","Message":"Job failed due to reason: at Sink 'sink1': org.apache.spark.sql.AnalysisException: cannot resolve target.UserId in UPDATE clause given columns target.Id, target.IsActive, target.CreatedById, target.Name, target.LastModifiedById, target.Type, target.CreatedDate, target.SystemModstamp, target.LastModifiedDate;
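Reading the message, the UPDATE clause refers to `target.UserId`, but `UserId` is not among the target columns it lists. The sketch below shows a quick way to confirm which incoming columns are missing from the target. The target column list is copied from the error above; the source column list is an assumption (the same columns plus `UserId`), and the helper name `columns_missing_in_target` is made up for illustration.

```python
# Target columns as listed in the error message.
target_columns = [
    "Id", "IsActive", "CreatedById", "Name", "LastModifiedById",
    "Type", "CreatedDate", "SystemModstamp", "LastModifiedDate",
]

# Assumption: the incoming data has the same columns plus the drifted one.
source_columns = target_columns + ["UserId"]


def columns_missing_in_target(source_cols, target_cols):
    """Return source columns that have no match in the target.

    The comparison ignores case, since Spark resolves column names
    case-insensitively by default.
    """
    target_lower = {c.lower() for c in target_cols}
    return [c for c in source_cols if c.lower() not in target_lower]


missing = columns_missing_in_target(source_columns, target_columns)
print(missing)  # ['UserId']
```

In a real notebook the two lists could come from `source_df.columns` and `spark.table(...).columns` instead of being hard-coded.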