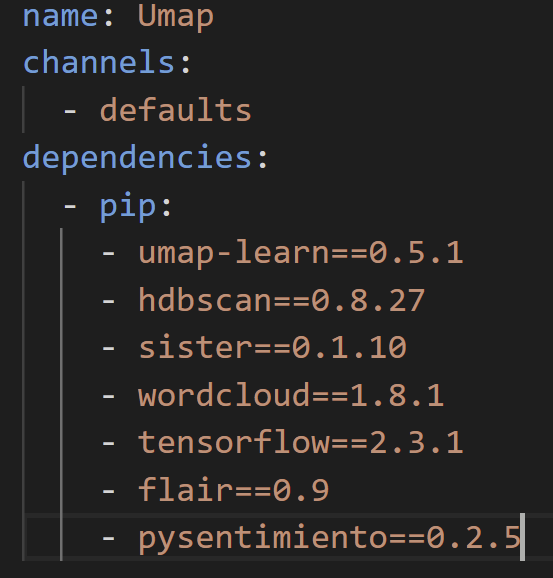

Hey! Sorry for the late reply. I was able to solve this issue by cutting the .yml file down to only the packages I actually wanted installed. That raises the question of why the suggestion is to upload a full .yml clone of an Anaconda environment rather than a simple requirements.txt file, the way you add packages to a Spark pool (not knocking it, just curious). Here is a picture of what I uploaded as the .yml file instead of the one I initially tried to upload above:
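For anyone hitting the same problem, here's a rough Python sketch of the trimming step described above. It assumes the usual layout produced by `conda env export` (top-level `name`, `channels`, `dependencies` and `prefix` keys, with pip packages in a nested `- pip:` list). The function names `package_name` and `trim_conda_export` and the sample environment are hypothetical, made up for illustration. It's a line-based filter, not a real YAML parser, so something like PyYAML would be sturdier for unusual files.

```python
import re

# Matches the leading package name in specs like "numpy=1.21.2=py38h..." or "requests==2.26.0".
_NAME_RE = re.compile(r"[A-Za-z0-9_.\-]+")


def package_name(spec: str) -> str:
    """Return the lowercase package name at the start of a conda/pip spec."""
    m = _NAME_RE.match(spec)
    return m.group(0).lower() if m else ""


def trim_conda_export(text: str, keep: set) -> str:
    """Keep only the dependencies named in `keep` from a `conda env export` file.

    Hypothetical helper: assumes top-level keys start at column 0 and list
    items start with "- ". It is not a general YAML parser.
    """
    keep = {k.lower() for k in keep}
    out = []
    section = ""
    pip_header = None  # the "- pip:" line, emitted only if some pip package survives
    pip_items = []
    pip_indent = -1

    def flush_pip():
        nonlocal pip_header, pip_items, pip_indent
        if pip_header is not None and pip_items:
            out.append(pip_header)
            out.extend(pip_items)
        pip_header, pip_items, pip_indent = None, [], -1

    for line in text.splitlines():
        stripped = line.strip()
        if not stripped:
            continue
        indent = len(line) - len(line.lstrip())
        if indent == 0 and not stripped.startswith("-"):
            # New top-level key such as "name:", "channels:", "dependencies:", "prefix:".
            flush_pip()
            section = stripped.split(":", 1)[0]
            if section != "prefix":  # prefix is a machine-specific path, so drop it
                out.append(line)
            continue
        if section == "prefix":
            continue
        if section != "dependencies":
            out.append(line)  # keep channels and other sections unchanged
            continue
        item = stripped[1:].strip() if stripped.startswith("-") else stripped
        if pip_header is not None and indent > pip_indent:
            # Entry inside the nested "- pip:" list.
            if package_name(item) in keep:
                pip_items.append(line)
            continue
        flush_pip()  # we have left the pip sub-list, if we were in one
        if item == "pip:":
            pip_header, pip_indent = line, indent
        elif package_name(item) in keep:
            out.append(line)
    flush_pip()
    return "\n".join(out) + "\n"


# Hypothetical full export, standing in for a cloned Anaconda environment.
example_export = """name: myenv
channels:
  - defaults
dependencies:
  - numpy=1.21.2=py38h20f2e39_0
  - pandas=1.3.4=py38h8c16a72_0
  - pip=21.2.4=py38h06a4308_0
  - pip:
    - azure-storage-blob==12.9.0
    - tqdm==4.62.3
prefix: /home/me/anaconda3/envs/myenv
"""

print(trim_conda_export(example_export, {"pandas", "pip", "azure-storage-blob"}))
```

If you keep any pip packages, it's worth keeping `pip` itself in the set too, since conda typically warns when a `pip:` section is present without pip listed as a dependency. The `- pip:` header is only written out when at least one pip package survives the filter.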


). This can be beneficial to other community members. Thank you.