Hi @PeterSh,

Welcome to the Microsoft Q&A forum, and thanks for posting your query.

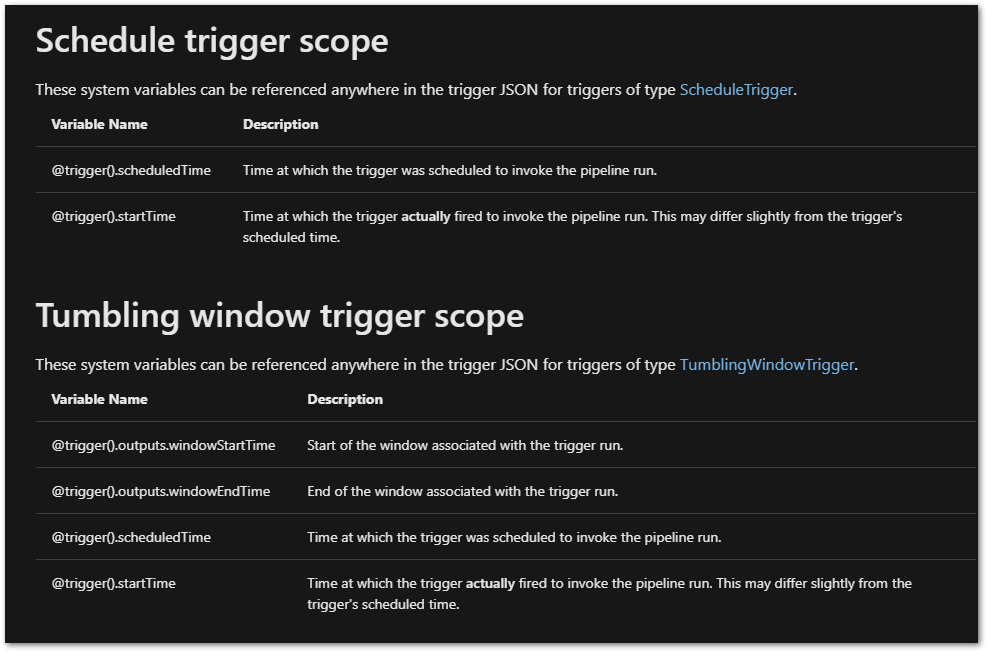

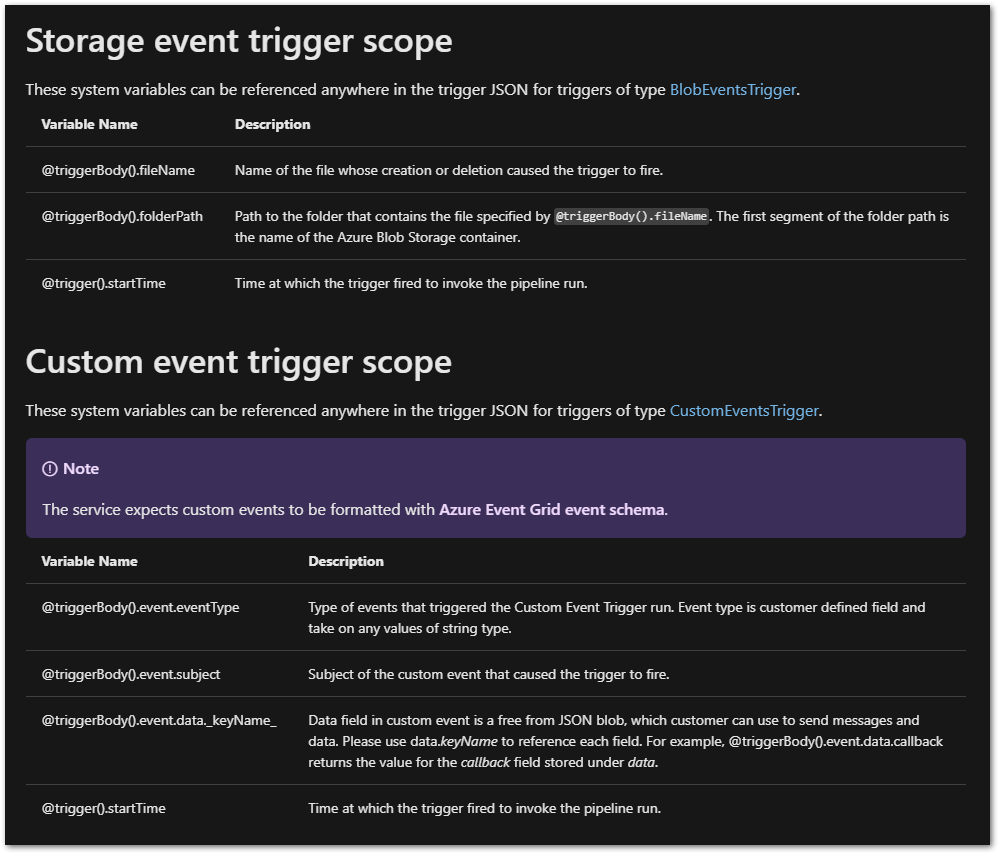

There is a public document that lists the system variables supported by Azure Data Factory and Azure Synapse. You can use these variables in expressions when defining entities within either service.

Here is the reference document: System variables supported by Azure Data Factory and Azure Synapse Analytics

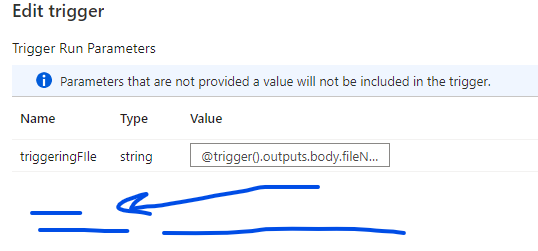

Please note that for the storage event trigger scope, if you are creating your pipeline and trigger in Azure Synapse Analytics, you must use @trigger().outputs.body.fileName and @trigger().outputs.body.folderPath as parameters. Those two properties capture the blob information. Use them instead of @triggerBody().fileName and @triggerBody().folderPath.
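To make the difference concrete, here is a minimal Python sketch that picks the right expressions for each service. The function name `storage_event_parameters` and the pipeline parameter names `sourceFile` and `sourceFolder` are hypothetical and only for illustration; the expression strings themselves are the ones mentioned above.

```python
from typing import Dict

# Hypothetical service labels, used only within this sketch.
ADF = "adf"
SYNAPSE = "synapse"


def storage_event_parameters(service: str) -> Dict[str, str]:
    """Return expressions to map onto pipeline parameters for a
    storage event trigger, depending on where the trigger is created."""
    if service == SYNAPSE:
        # Synapse: blob information is read from the trigger outputs body.
        prefix = "@trigger().outputs.body"
    elif service == ADF:
        # Data Factory: the triggerBody() form.
        prefix = "@triggerBody()"
    else:
        raise ValueError(f"unknown service: {service!r}")

    # 'sourceFile' and 'sourceFolder' are assumed parameter names;
    # use whatever names your own pipeline defines.
    return {
        "sourceFile": f"{prefix}.fileName",
        "sourceFolder": f"{prefix}.folderPath",
    }


if __name__ == "__main__":
    for service in (ADF, SYNAPSE):
        print(service, storage_event_parameters(service))
```

You would then paste the resulting expressions as the parameter values when attaching the trigger to the pipeline.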

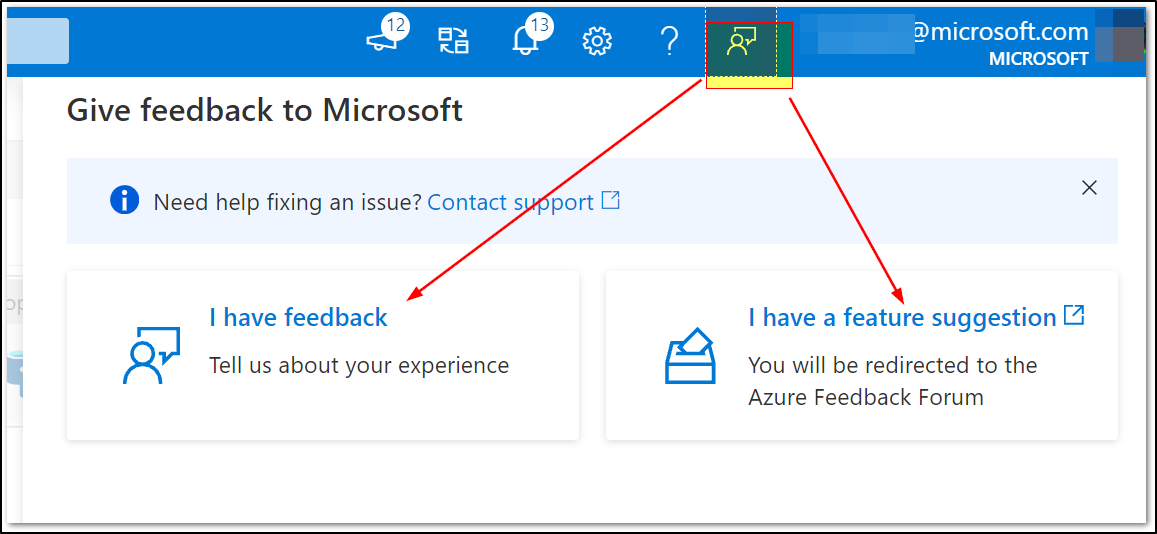

If you have any feedback or a product feature request, we encourage you to log it directly from the ADF UI.

Hope this info helps. Do let us know if you have any further queries.
