Hi @Birajdar, Sujata,

Thanks for your query.

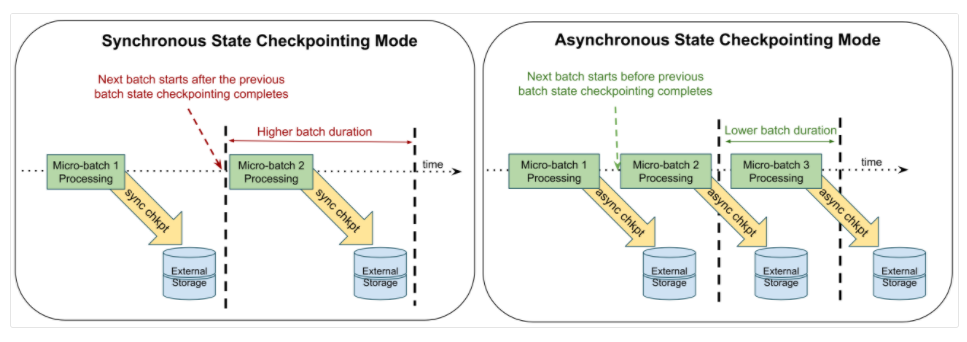

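You could try Spark Structured Streaming with checkpointing enabled. When a failed query is restarted with the same checkpoint location, it resumes from its last committed progress instead of starting over from the beginning.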
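To show why the checkpoint lets a restarted job pick up where it failed, here is a small stdlib-only toy model in Python. It is an illustration under assumptions, not Spark's implementation: `process_stream`, the JSON checkpoint file and the list used as a sink are hypothetical stand-ins. In Spark itself you enable this by setting the `checkpointLocation` option on the streaming writer (`writeStream`) and reusing the same path when you restart the query.

```python
import json
import os
import tempfile


def process_stream(records, checkpoint_path, sink, fail_at=None):
    """Toy model of checkpointed stream processing (hypothetical, not Spark's API).

    `records` stands in for an append-only source, `sink` for the output table.
    If `fail_at` is set, an error is raised before that offset to simulate a crash.
    """
    # Resume from the last committed offset, or start at 0 on the first run.
    start = 0
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            start = json.load(f)["next_offset"]

    for offset in range(start, len(records)):
        if offset == fail_at:
            raise RuntimeError(f"simulated failure at offset {offset}")
        sink.append(records[offset].upper())
        # Commit progress: write a temp file, then atomically replace the checkpoint.
        # Output is written before the commit, so a crash between these two steps
        # would replay one record; real engines pair checkpoints with idempotent sinks.
        tmp_path = checkpoint_path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump({"next_offset": offset + 1}, f)
        os.replace(tmp_path, checkpoint_path)
    return sink


records = ["a", "b", "c", "d", "e"]
with tempfile.TemporaryDirectory() as d:
    ckpt = os.path.join(d, "checkpoint.json")
    sink = []
    try:
        process_stream(records, ckpt, sink, fail_at=3)
    except RuntimeError as e:
        print(e)  # simulated failure at offset 3
    # Restart with the same checkpoint: offsets 0-2 are not reprocessed.
    process_stream(records, ckpt, sink)
    print(sink)  # ['A', 'B', 'C', 'D', 'E']
```

Without the checkpoint file the second run would start again at offset 0 and the sink would contain `A`, `B` and `C` twice, which is the behaviour the checkpoint avoids.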

Hope this info helps.
