Hello @Victor Seifert,

Thanks for the question and for using the MS Q&A platform.

(UPDATE: 14/3/2022): After discussing this with the internal team, we found that the I/O error you are facing (logging to a stream that has already been closed) is caused by these lines:

```python
# Excerpt from the question's code: binds a handler to the current sys.stdout object
stdout_handler = logging.StreamHandler(sys.stdout)
logger.addHandler(stdout_handler)
```

By default, StreamHandler() writes to sys.stderr (see https://docs.python.org/3/library/logging.handlers.html#logging.StreamHandler), but your code passes sys.stdout explicitly. Either way, the handler keeps a reference to the exact stream object it was given when it was created.
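Both points can be checked in plain Python with a minimal sketch. Reassigning sys.stdout here only simulates a REPL installing a fresh stream for the next cell; it is an assumption for illustration, not Livy's actual code.

```python
import io
import logging
import sys


def default_stream_is_stderr() -> bool:
    """StreamHandler() with no argument falls back to sys.stderr."""
    handler = logging.StreamHandler()
    return handler.stream is sys.stderr


def handler_keeps_original_stdout() -> bool:
    """A handler built with sys.stdout keeps that exact object, even after sys.stdout is reassigned."""
    original = sys.stdout
    handler = logging.StreamHandler(sys.stdout)
    sys.stdout = io.StringIO()  # simulate a REPL installing a fresh stdout for the next cell
    try:
        return handler.stream is original and handler.stream is not sys.stdout
    finally:
        sys.stdout = original  # always restore the real stdout


print(default_stream_is_stderr())       # True
print(handler_keeps_original_stdout())  # True
```

The handler never looks up sys.stdout again after construction, which is why replacing sys.stdout between cells does not redirect it.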

- Synapse uses Livy as its job submitter, and Livy's fake_shell overrides sys.stdout and sys.stderr for every cell. So when you run the cell again, the stream your handler writes to has already been closed. That is why you get errors like this: https://github.com/apache/incubator-livy/blob/master/repl/src/main/resources/fake_shell.py#L537
- Therefore, we add another handler so that Python logs work end to end (E2E) for PySpark.
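The failure can be reproduced outside Synapse with a simplified simulation. It assumes, as described above, that each cell gets a fresh sys.stdout which is closed once the cell finishes; the per-cell swap is a hypothetical stand-in, not Livy's fake_shell implementation. The RecordingStreamHandler subclass is also hypothetical: it records the write error instead of printing logging's usual "--- Logging error ---" traceback.

```python
import io
import logging
import sys


class RecordingStreamHandler(logging.StreamHandler):
    """Hypothetical StreamHandler that records write errors instead of printing a traceback."""

    def __init__(self, stream=None):
        super().__init__(stream)
        self.errors = []

    def handleError(self, record):
        # emit() calls this from inside its except block, so exc_info is available.
        self.errors.append(sys.exc_info()[1])


def simulate_two_cells():
    """Simplified stand-in for a REPL that gives each cell its own sys.stdout (not Livy's code)."""
    real_stdout = sys.stdout
    logger = logging.getLogger("closed_stream_demo")
    logger.propagate = False  # keep the demo isolated from other handlers
    handler = None
    try:
        # Cell 1: a fresh stdout is installed and user code binds a handler to it.
        sys.stdout = io.StringIO()
        handler = RecordingStreamHandler(sys.stdout)
        logger.addHandler(handler)
        logger.warning("cell 1")  # succeeds
        sys.stdout.close()        # the cell ends and its stream is closed

        # Cell 2: another fresh stdout, but the handler still holds the closed one.
        sys.stdout = io.StringIO()
        logger.warning("cell 2")  # writing to the closed stream raises ValueError
    finally:
        sys.stdout = real_stdout
        if handler is not None:
            logger.removeHandler(handler)
    return handler.errors


errors = simulate_two_cells()
print([type(e).__name__ for e in errors])  # ['ValueError']
```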

In summary, avoid code like logging.StreamHandler(sys.stdout) or logging.StreamHandler(sys.stderr): because of Livy's original design, such handlers end up writing to a stream that has already been closed.
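If earlier cells already attached such handlers to one of your own loggers, a small cleanup helper can detach them before you switch to the approach below. This is a hypothetical helper, not part of the logging API or of Synapse, and "my_app" is a made-up logger name. It is intended for your own named loggers; the root logger's handlers may be set up by the runtime, so leave those alone.

```python
import logging
import sys


def detach_stream_handlers(logger: logging.Logger) -> int:
    """Remove plain StreamHandlers from `logger` and return how many were removed.

    Hypothetical helper. FileHandler is a subclass of StreamHandler,
    so it is explicitly kept.
    """
    removed = 0
    for handler in list(logger.handlers):  # iterate over a copy while mutating
        if isinstance(handler, logging.StreamHandler) and not isinstance(
            handler, logging.FileHandler
        ):
            logger.removeHandler(handler)
            handler.close()  # StreamHandler.close() does not close the underlying stream
            removed += 1
    return removed


# Usage: the handler added here stands in for one left over from an earlier cell.
app_logger = logging.getLogger("my_app")
app_logger.addHandler(logging.StreamHandler(sys.stdout))
print(detach_stream_handlers(app_logger))  # 1
print(app_logger.handlers)                 # []
```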

You should use the logging approach shown in the example below directly. The log messages then appear in both the cell output and the driver log.

--------------------------------------------------------

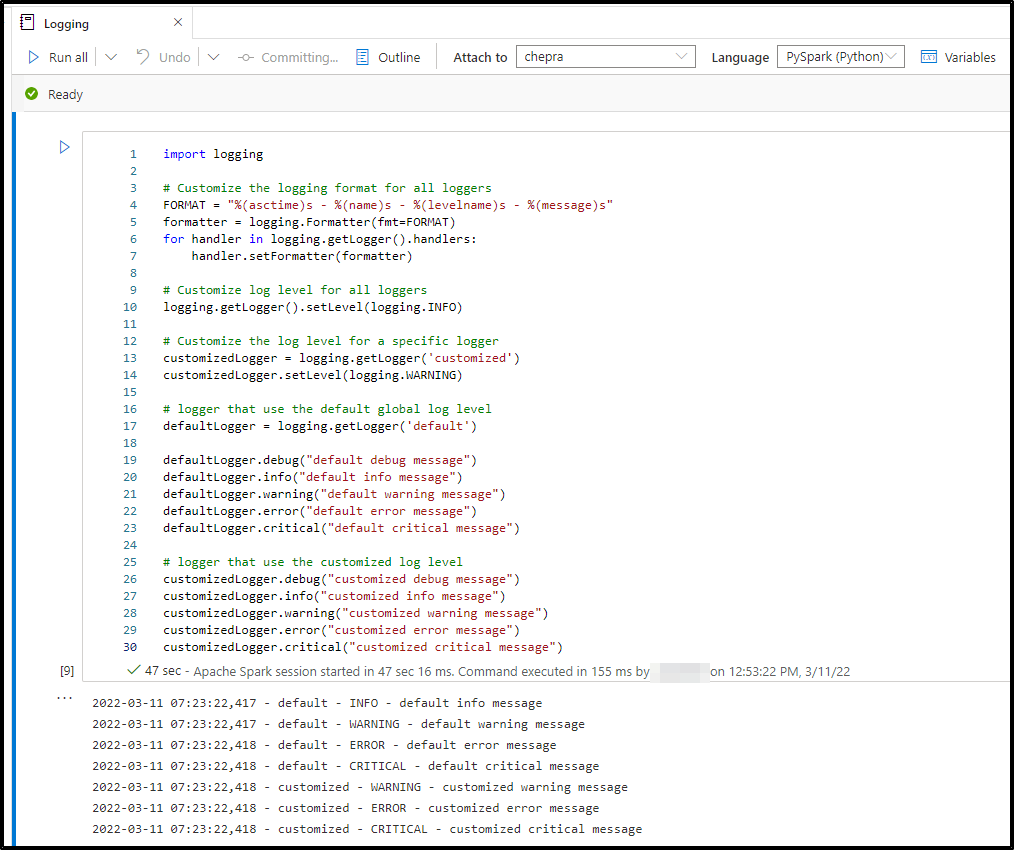

You can try the sample code below for Python logging with a custom format and customized log levels.

```python
import logging

# Customize the logging format for all loggers
FORMAT = "%(asctime)s - %(name)s - %(levelname)s - %(message)s"
formatter = logging.Formatter(fmt=FORMAT)
for handler in logging.getLogger().handlers:
    handler.setFormatter(formatter)

# Customize the log level for all loggers
logging.getLogger().setLevel(logging.INFO)

# Customize the log level for a specific logger
customizedLogger = logging.getLogger('customized')
customizedLogger.setLevel(logging.WARNING)

# Logger that uses the default global log level
defaultLogger = logging.getLogger('default')
defaultLogger.debug("default debug message")
defaultLogger.info("default info message")
defaultLogger.warning("default warning message")
defaultLogger.error("default error message")
defaultLogger.critical("default critical message")

# Logger that uses the customized log level
customizedLogger.debug("customized debug message")
customizedLogger.info("customized info message")
customizedLogger.warning("customized warning message")
customizedLogger.error("customized error message")
customizedLogger.critical("customized critical message")
```

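Given these settings, the default logger should print its info, warning, error, and critical messages (its debug message falls below the global INFO level), while the customized logger should print only its warning, error, and critical messages. Each line follows the format above: timestamp - logger name - level - message.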
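To check this filtering without running the whole sample, you can ask each logger which levels it would pass on. The sketch below reuses the level settings and the logger names 'default' and 'customized' from the sample; enabled_levels is a hypothetical helper. Note that handler levels can filter messages further, so this only reflects the logger side.

```python
import logging

# Same level settings as the sample above.
logging.getLogger().setLevel(logging.INFO)
customizedLogger = logging.getLogger('customized')
customizedLogger.setLevel(logging.WARNING)
defaultLogger = logging.getLogger('default')  # no own level, so it inherits INFO from the root

LEVELS = [logging.DEBUG, logging.INFO, logging.WARNING, logging.ERROR, logging.CRITICAL]


def enabled_levels(logger: logging.Logger) -> list:
    """Names of the levels this logger would pass on to its handlers."""
    return [logging.getLevelName(level) for level in LEVELS if logger.isEnabledFor(level)]


print(enabled_levels(defaultLogger))     # ['INFO', 'WARNING', 'ERROR', 'CRITICAL']
print(enabled_levels(customizedLogger))  # ['WARNING', 'ERROR', 'CRITICAL']
```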

Hope this helps. Please let us know if you have any further queries.
