To access the attributes that are inside of arrays (i.e. results.id), use the Flatten transformation first. For the attributes that are properties of a struct (like users.full_name), you do not need to unroll first. After unrolling the array "results" with a Flatten transformation, add a Select transformation. Inside of the Select, you will now see those columns that you can pick from the Input columns list.

How to Flatten A Complex Nested JSON Structure in ADF

I'm trying to flatten the following JSON structure in Azure Data Factory so that the users details roll up to the results.id. I have tried using an ADF Data Flow & the flatten transformation but results[] is the only selection available for 'unroll by' and 'unroll root' in the transformation settings. New to Azure ADF and JSON so any help or suggestions would be much appreciated.

Desired flattened result is:

results.id,users.full_name,users.photo_path,users.email_address,users.headline,users.generic,users.disabled,update_whitelist.full_name,update_whitelist.headline,update_whitelist.email_address,update_whitelist.phone

Sample JSON File Structure is below.

{

"count": 2,

"results": [

{

"key": "users",

"id": "1000000"

},

{

"key": "users",

"id": "2000000"

}

],

"users": {

"1000000": {

"full_name": "Jane Doe",

"photo_path": "https://www.google.com",

"email_address": "janedoe@Stuff .com",

"headline": "Senior Engineer",

"generic": false,

"disabled": false,

"update_whitelist": [

"full_name",

"headline",

"email_address",

"phone"

],

"account_id": "7777777",

"id": "1000000"

},

"2000000": {

"full_name": "John Doe,

"photo_path": "https://www.yahoo.com",

"email_address": "johndoe@réalisations .com",

"headline": "Senior Project Manager",

"generic": false,

"disabled": false,

"update_whitelist": [

"full_name",

"headline",

"email_address",

"phone"

],

"account_id": "7777777",

"id": "2000000"

}

},

"meta": {

"count": 2,

"page_count": 1,

"page_number": 1,

"page_size": 20

}

}

Azure Data Factory

4 answers

Sort by: Most helpful

-

MarkKromer-MSFT 5,231 Reputation points Microsoft Employee Moderator

MarkKromer-MSFT 5,231 Reputation points Microsoft Employee Moderator2022-04-18T16:13:49.493+00:00 -

AnnuKumari-MSFT 34,566 Reputation points Microsoft Employee Moderator

AnnuKumari-MSFT 34,566 Reputation points Microsoft Employee Moderator2022-05-02T08:54:48.813+00:00 Hi @dmstech72 ,

Welcome to Microsoft Q&A platform and thanks for posting your query.

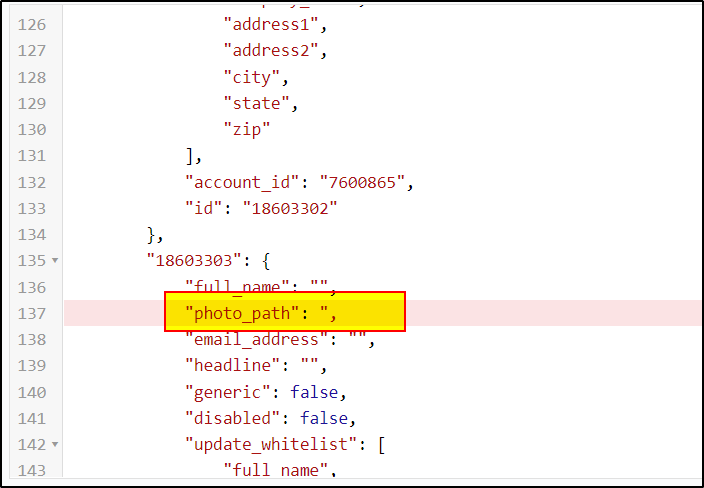

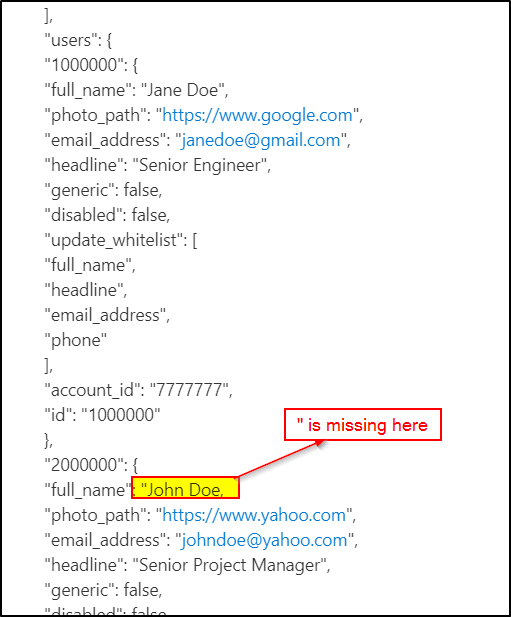

As I understand your issue, you want to flatten the provided JSON data . However, you are able to flatten the results[] but users property is not coming as an option in flatten transformation. Please let me know if my understanding about your query is incorrect.First of all, the JSON content which you have provided is invalid.

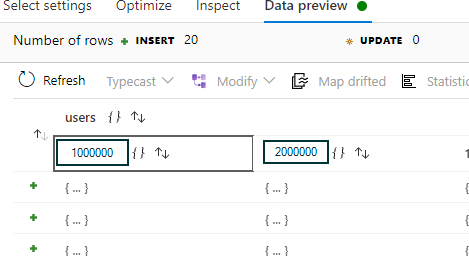

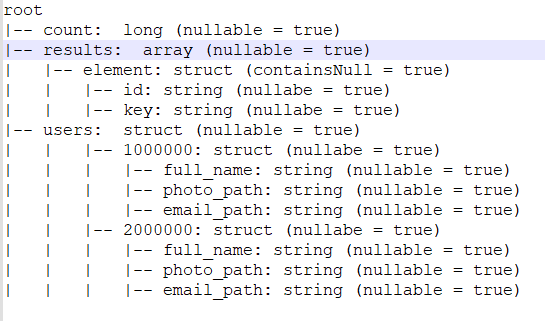

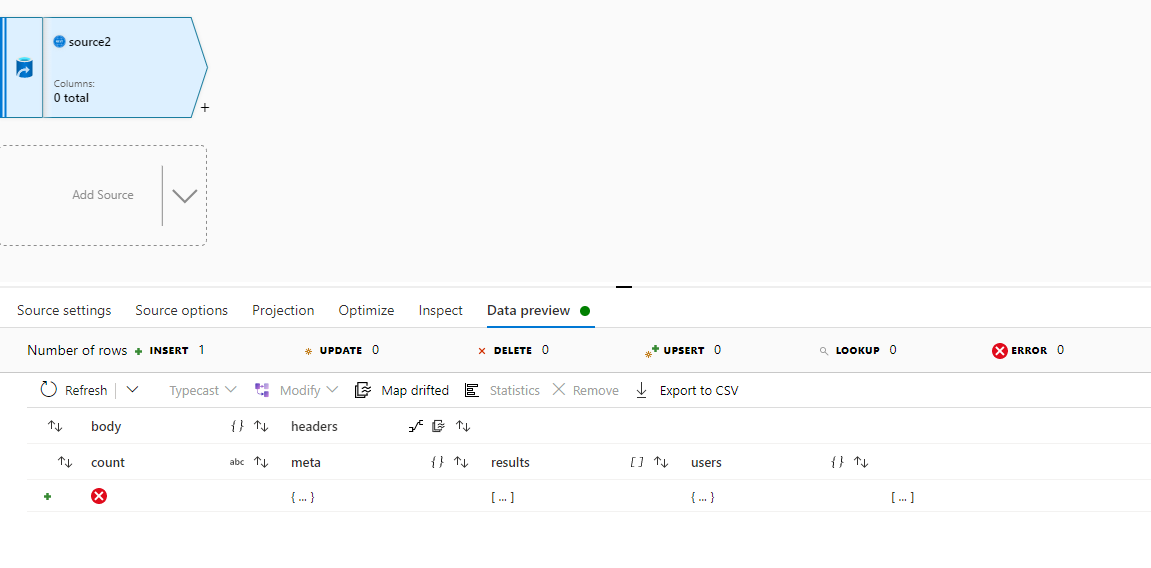

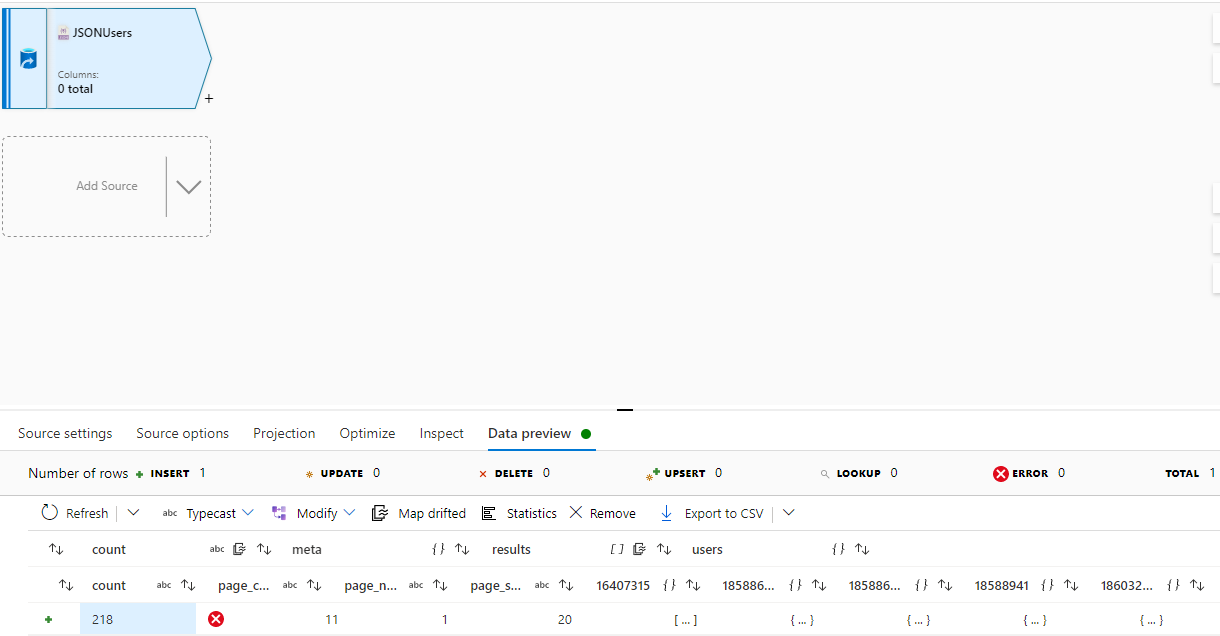

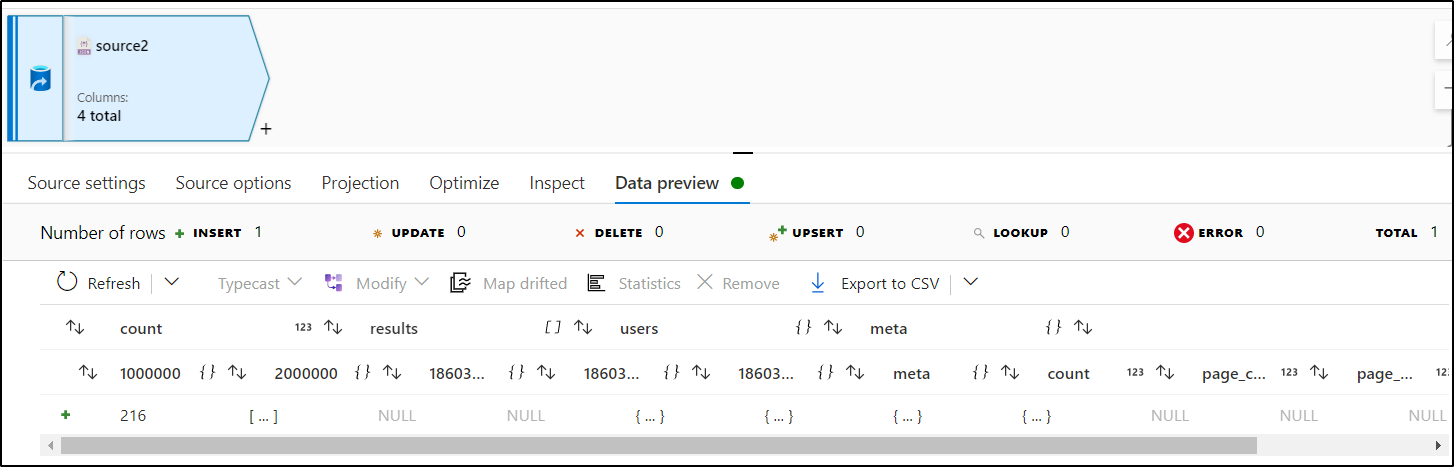

1. In the source transformation option, select Document form as 'Array of documents' . This is how your source data looks like: Results[] is an array but users is a JSON. So, we need to convert users Json to array in order to flatten the data within users property.

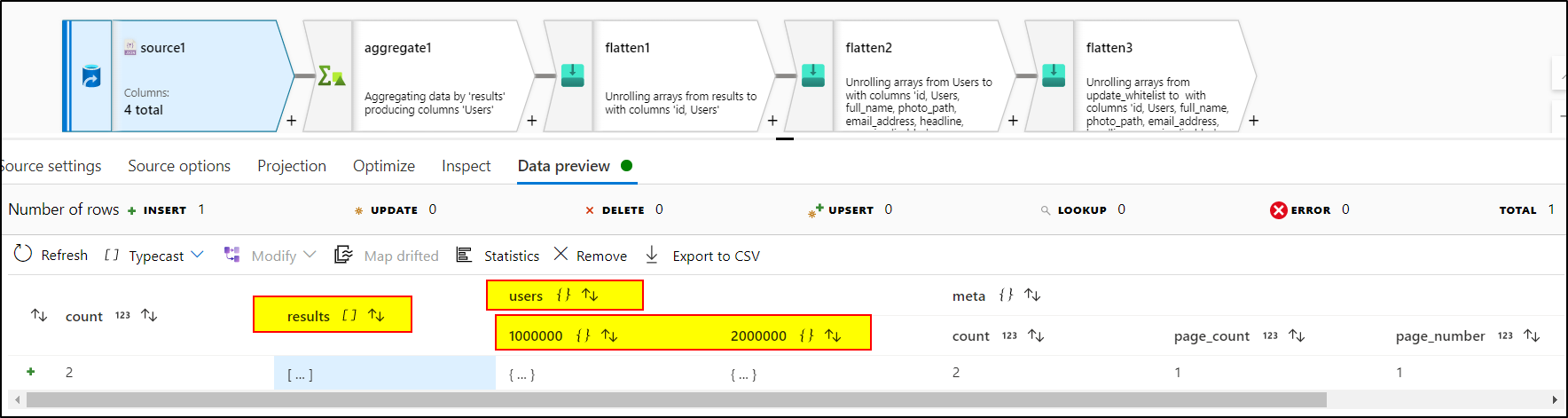

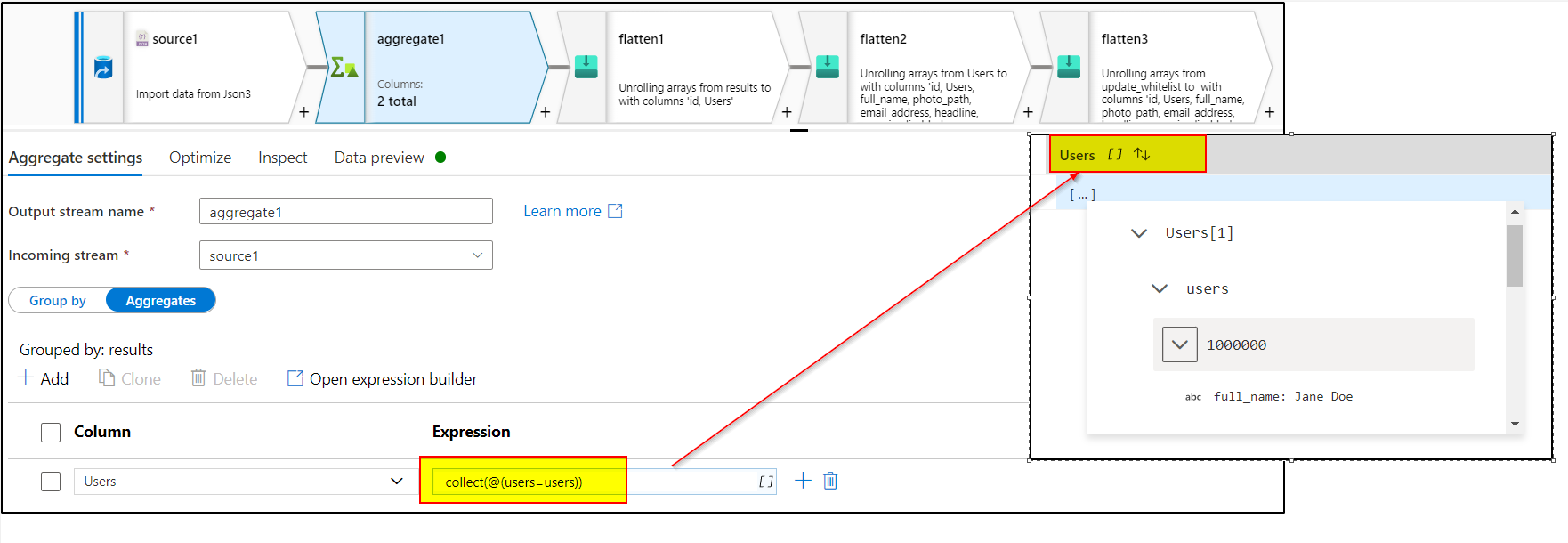

2. Use collect function inside aggregate transformation convert json object into array.

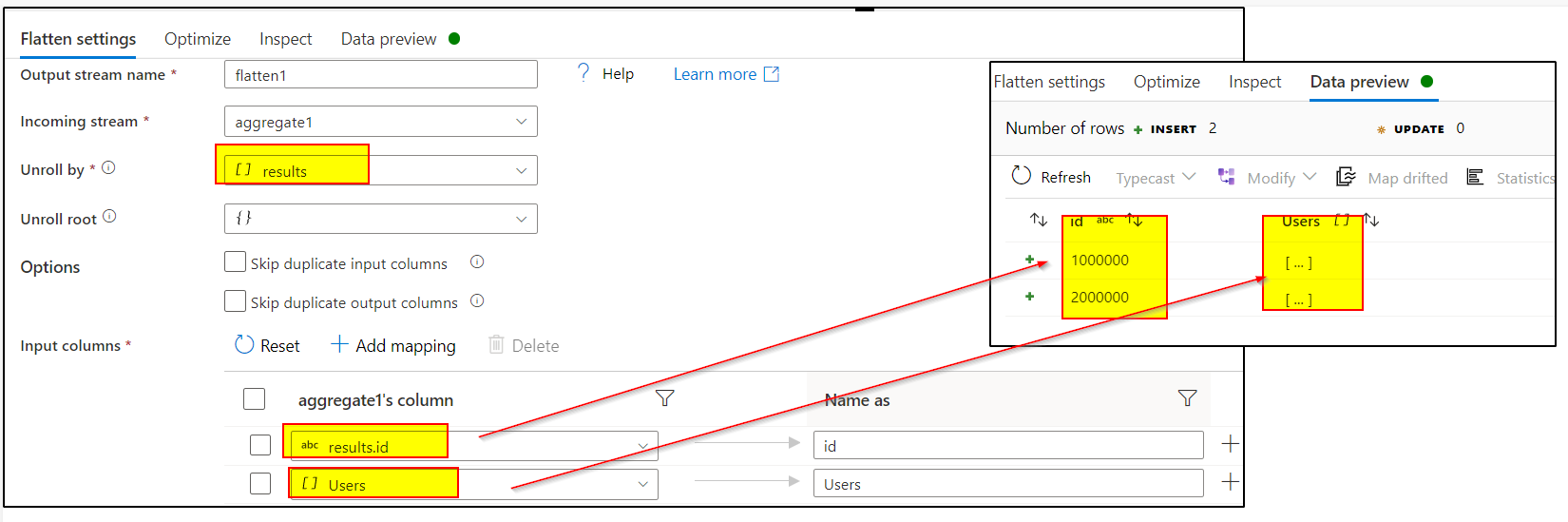

3. Unroll by results[] in first Flatten transformation

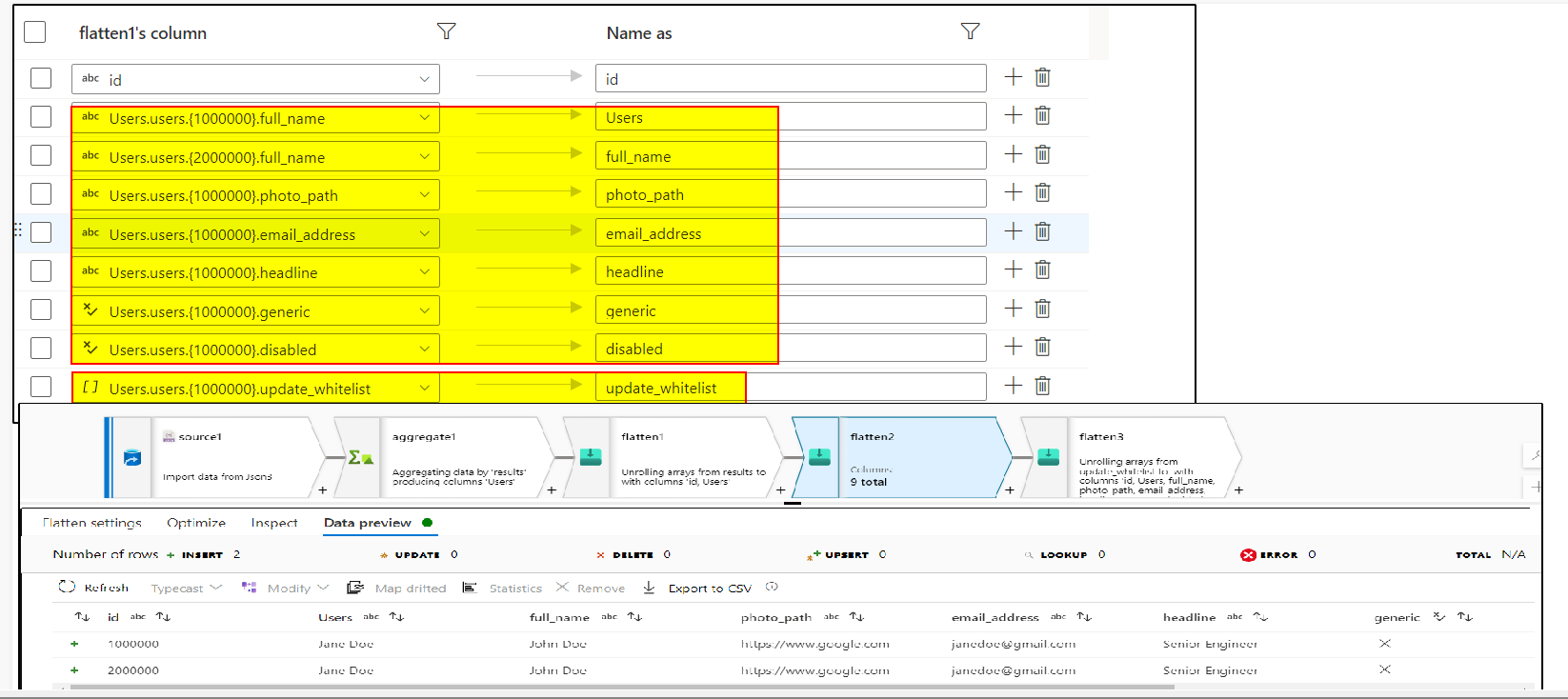

4. Use Flatten transformation again and unroll by users[] and select all the fields needed

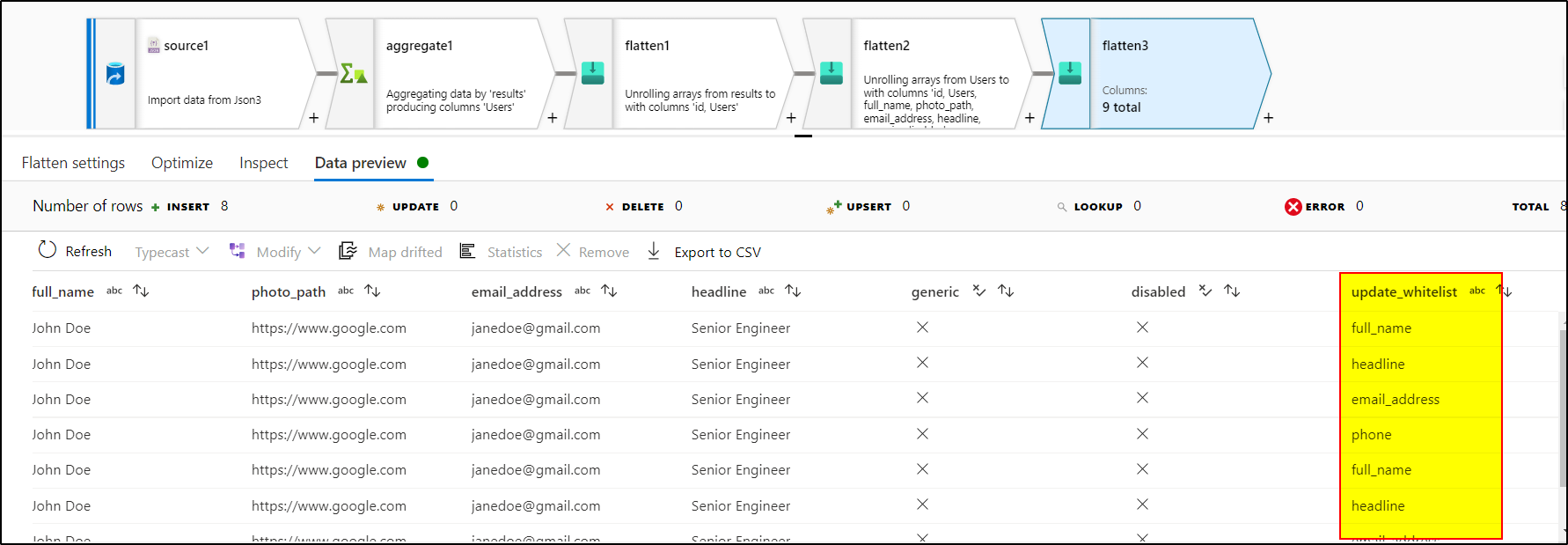

5. As the update_Whitelist[] array doesn't contain key-value pair , if we unroll it using another flatten transformation, it's gonna give us result like this, duplicating other columns :

Hope this will help. Please let us know if any further queries.

------------------------------

- Please don't forget to click on

or upvote

or upvote  button whenever the information provided helps you.

button whenever the information provided helps you.

Original posters help the community find answers faster by identifying the correct answer. Here is how - Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and help shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators

- Please don't forget to click on

-

Saddaqat Haji Ahmad Jan 1 Reputation point

2022-12-03T23:52:55.577+00:00 All the existing queries are about how we can deal a single list inside a json. How to deal multiple lists within a json.

Here is my payload.

Opening this payload inside flatten gives me the first list data only. The second list name and its column are not available in flatten.This is my Payload

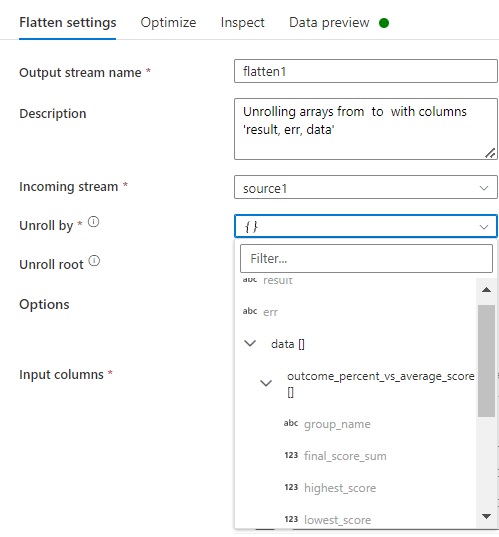

{ "result": "success", "err": None, "data": [ { "outcome_percent_vs_average_score": [ { "name":"sadaqat", "id":12 }, { "name":"sadaqat1", "id":123 } ] }, { "average_criteria_score": [ { "avg_id":123, "avg_name":"hello" }, { "avg_id":1234, "avg_name":"hello 1" } ] } ] }You can see the first list is being shown in the flatten activity not the others are there.

-

dmstech72 21 Reputation points

2023-08-02T11:55:36.4766667+00:00 Thank you for this feedback, it was helpful even though I was never able to get ADF to unroll all the way...same issue as James Klein where I would have had to map every id. I ended up creating Notebook (Python) scripts to flatten each json object, write to parquet files, then load to Synapse...used outer pipeline to copy Metadata (for bulk copy) with a For Each that executed an inner pipeline that did the Rest API call and copied the json files to blob storage where they were flattened & loaded to Synapse.