Hi @KarthikShanth,

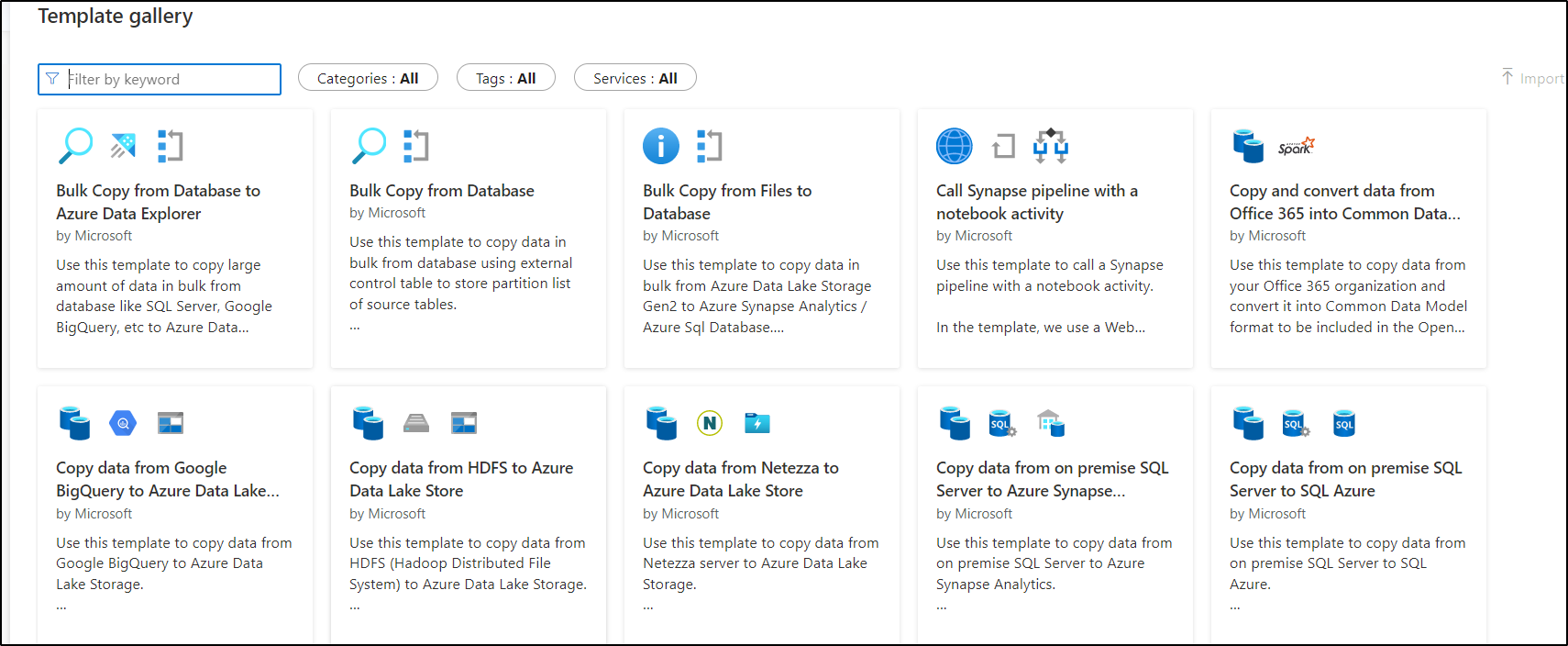

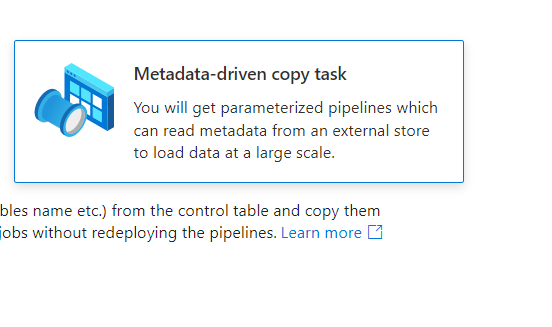

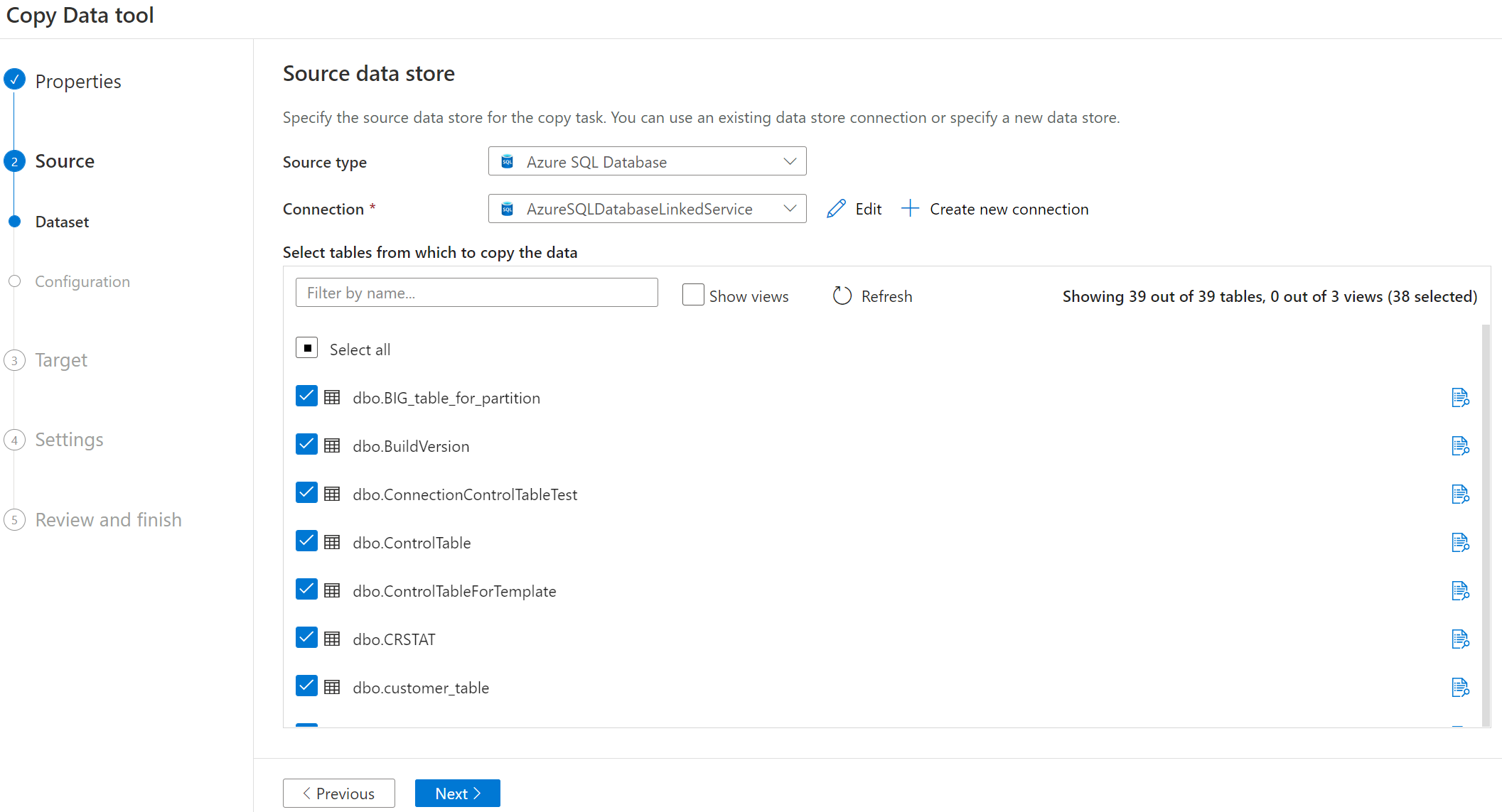

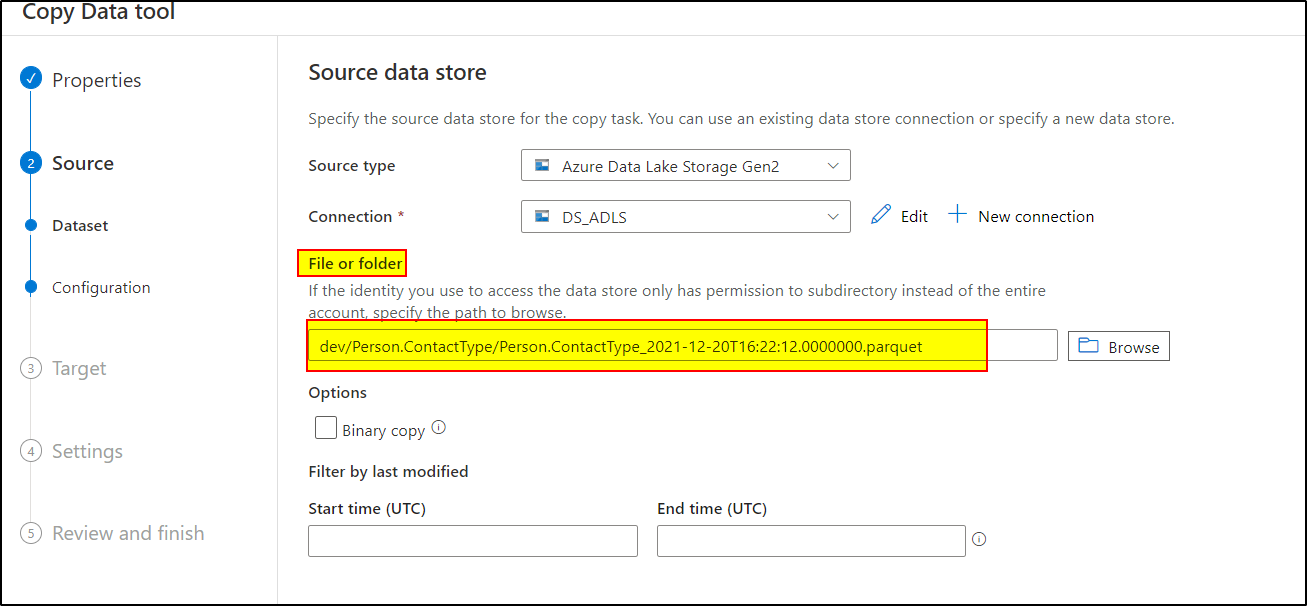

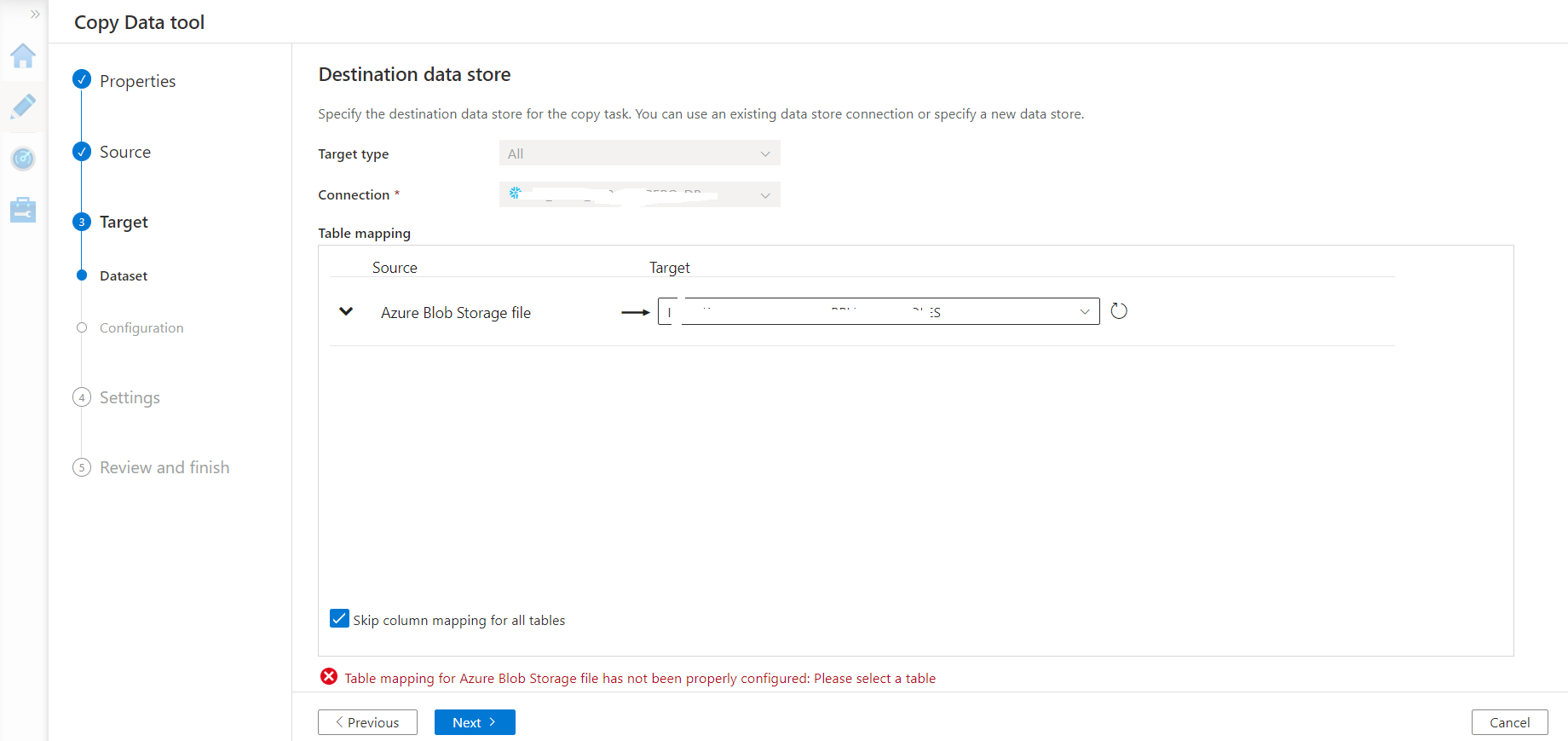

Thanks for sharing the details and screenshots. With the metadata-driven copy task, if your source is a tabular data store such as Azure SQL Server or an Oracle database, you can select multiple tables and perform a full or incremental load to another data store such as ADLS. However, if your source is files, there is no option to select multiple files or folders and copy them dynamically.

When the source is a tabular data store, you can select multiple tables and use the parameterized pipeline to copy them dynamically.

When the source is files in ADLS, you can't select multiple files.

For more details on the limitations of the metadata-driven copy task, see: https://learn.microsoft.com/en-us/azure/data-factory/copy-data-tool-metadata-driven#known-limitations

To achieve your requirement, you can build a custom pipeline using the steps described in the answer above. Reiterating those steps here:

1. Use a Get Metadata activity, point its dataset to the folder containing the 20 files, and add childItems to the fieldList to fetch all the file names.

2. Use a ForEach activity to iterate over the file names and process them one by one. Use this expression for Items in the ForEach: @activity('Get Metadata1').output.childItems (note that it is written without the @{...} string-interpolation braces, because Items expects an array rather than a string).

3. Inside the ForEach, add a Copy activity. In the source settings, choose the wildcard file path option and set the file name to @item().name. In the sink dataset, create a parameter to make the table name dynamic, and pass @item().name as its value.
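To make the three steps above more concrete, here is a rough sketch of what the resulting pipeline definition could look like, written as a small Python script that builds and prints the JSON. Please treat it as an illustration only: the dataset names, the `tableName` parameter, the pipeline name, and the choice of a delimited-text source with an Azure SQL sink are all assumptions on my side and are not taken from your setup, so adjust them to match your datasets before using any of it.

```python
import json

# Hypothetical names: these datasets and the "tableName" parameter are
# placeholders for illustration, not taken from the original question.
SOURCE_FOLDER_DATASET = "SourceFolderDataset"  # points at the folder with the files
SOURCE_FILE_DATASET = "SourceFileDataset"      # dataset used by the Copy source
SINK_TABLE_DATASET = "SinkTableDataset"        # sink dataset with a tableName parameter


def expression(value):
    """Wrap a string as a dynamic-content expression object."""
    return {"value": value, "type": "Expression"}


def dataset_ref(name, parameters=None):
    """Build a dataset reference, optionally passing dataset parameters."""
    ref = {"referenceName": name, "type": "DatasetReference"}
    if parameters:
        ref["parameters"] = parameters
    return ref


def build_pipeline():
    # Step 1: list the files in the folder via childItems.
    get_metadata = {
        "name": "Get Metadata1",
        "type": "GetMetadata",
        "typeProperties": {
            "dataset": dataset_ref(SOURCE_FOLDER_DATASET),
            "fieldList": ["childItems"],
        },
    }
    # Step 3: copy one file per iteration; the file name drives both the
    # source wildcard and the (assumed) tableName parameter on the sink.
    copy_file = {
        "name": "Copy file to table",
        "type": "Copy",
        "inputs": [dataset_ref(SOURCE_FILE_DATASET)],
        "outputs": [
            dataset_ref(SINK_TABLE_DATASET, {"tableName": expression("@item().name")})
        ],
        "typeProperties": {
            # Assumed formats: delimited text in ADLS Gen2, Azure SQL sink.
            "source": {
                "type": "DelimitedTextSource",
                "storeSettings": {
                    "type": "AzureBlobFSReadSettings",
                    "wildcardFileName": expression("@item().name"),
                },
            },
            "sink": {"type": "AzureSqlSink"},
        },
    }
    # Step 2: iterate over the array returned by Get Metadata.
    for_each = {
        "name": "ForEach1",
        "type": "ForEach",
        "dependsOn": [
            {"activity": "Get Metadata1", "dependencyConditions": ["Succeeded"]}
        ],
        "typeProperties": {
            "items": expression("@activity('Get Metadata1').output.childItems"),
            "isSequential": True,
            "activities": [copy_file],
        },
    }
    return {
        "name": "CopyFilesFromFolder",
        "properties": {"activities": [get_metadata, for_each]},
    }


if __name__ == "__main__":
    print(json.dumps(build_pipeline(), indent=2))
```

One thing to keep in mind: `@item().name` includes the file extension, so if the table names should not carry it, the value passed to the sink parameter would need an extra expression to strip it.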

--------------------------------------------------------------------------

If the above answer helped, please consider clicking Accept Answer and Up-Vote, as accepted answers help the community as well. If you have any further questions, do let us know.
