Thank you for using the Microsoft Q&A platform and for posting your question.

As I understand it, you want to optimize data migration from ADLS to SQL Server, for which you are using a Copy activity in an Azure Data Factory pipeline. Please let me know if my understanding is incorrect.

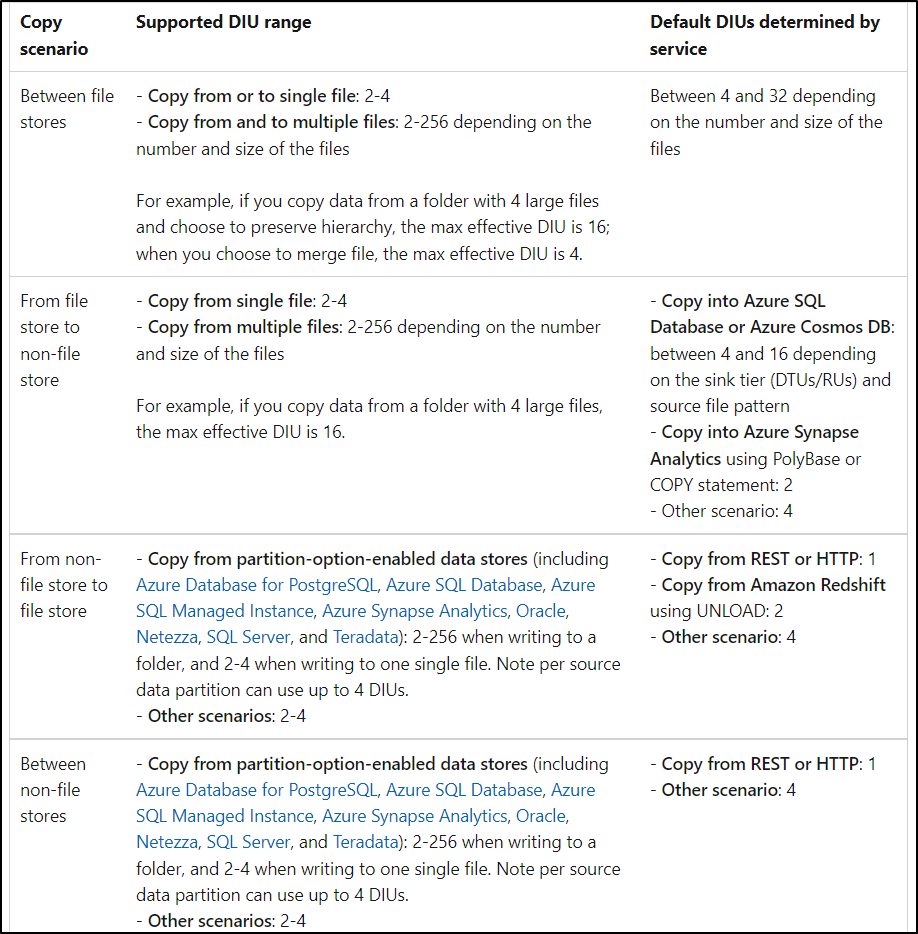

The allowed number of Data Integration Units (DIUs) for a copy activity run is between 2 and 256. However, if you do not specify a value, ADF dynamically applies the optimal DIU setting based on your source-sink pair and data pattern.

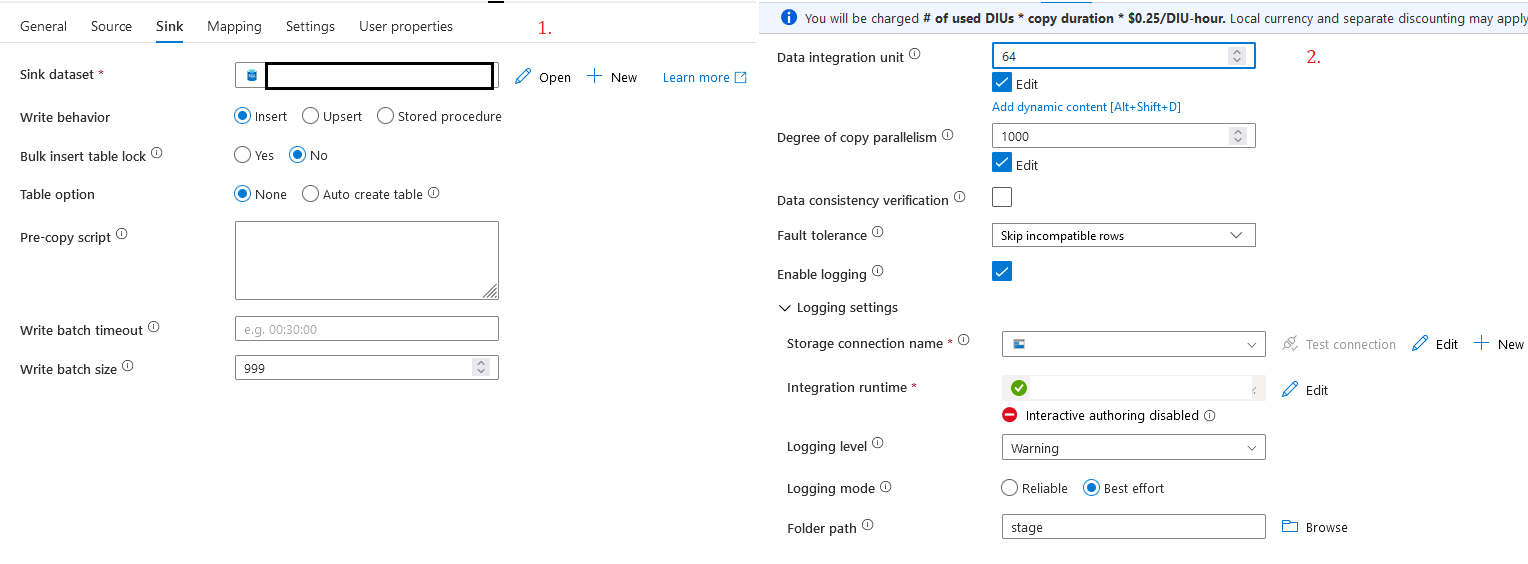

You can consider increasing the DIU setting to 256. However, the DIUs actually used are decided at runtime based on the data stores involved. This depends on factors such as whether the copy is for a single file or multiple files, and whether it runs from a file store to a non-file store. So, depending on all these factors, the DIUs will be assigned.
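As a rough illustration of where this setting lives, here is a minimal Python sketch that builds the `typeProperties` fragment of a Copy activity and sets `dataIntegrationUnits`. The 2 to 256 bounds are the ones quoted above. The source and sink types (`DelimitedTextSource`, `SqlServerSink`) and the helper name `copy_type_properties` are assumptions for illustration only, so adjust them to your actual datasets.

```python
import json

# Bounds quoted in this answer for a copy activity run; adjust if they differ for you.
MIN_DIU, MAX_DIU = 2, 256


def copy_type_properties(diu=None):
    """Build a Copy activity typeProperties fragment (hypothetical helper).

    diu=None leaves the property out, so the service chooses the value itself.
    """
    props = {
        # Assumption: delimited text files in ADLS as the source.
        "source": {"type": "DelimitedTextSource"},
        # Assumption: a SQL Server table as the sink.
        "sink": {"type": "SqlServerSink"},
    }
    if diu is not None:
        if not MIN_DIU <= diu <= MAX_DIU:
            raise ValueError(f"DIU must be between {MIN_DIU} and {MAX_DIU}, got {diu}")
        props["dataIntegrationUnits"] = diu
    return props


if __name__ == "__main__":
    # Print the fragment you would paste into the activity definition.
    print(json.dumps(copy_type_properties(256), indent=2))
```

Even with the value set explicitly, compare it with the DIUs reported in the copy activity run details, since the number actually used is decided at runtime.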

For more details, check Data Integration Units

Coming to the degree of parallelism: when you specify a value for the parallelCopies property, take the load increase on your source and sink data stores into account. Also consider the load increase on the integration runtime if the copy activity is empowered by it.

This load increase happens especially when you have multiple activities or concurrent runs of the same activities that run against the same data store.

If you notice that either the data store or the self-hosted integration runtime is overwhelmed with the load, decrease the parallelCopies value to relieve the load.
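The step-down idea can be sketched in Python as follows. This is only an illustration: `parallelCopies` is the real Copy activity property, but the halving policy, the `overloaded` flag (which you would derive from your own monitoring), and both helper names are assumptions, not ADF behavior.

```python
def next_parallel_copies(current, overloaded, floor=1):
    """Suggest the next parallelCopies value (hypothetical policy).

    Halves the value when the data store or self-hosted integration runtime
    is overloaded, never going below `floor`; otherwise keeps it unchanged.
    """
    if current < floor:
        raise ValueError("current must be at least floor")
    if not overloaded:
        return current
    return max(floor, current // 2)


def with_parallel_copies(type_properties, parallel_copies):
    """Return a copy of a typeProperties dict with parallelCopies set."""
    updated = dict(type_properties)
    updated["parallelCopies"] = parallel_copies
    return updated


if __name__ == "__main__":
    value = 16
    # Simulate two overloaded runs followed by a healthy one.
    for overloaded in (True, True, False):
        value = next_parallel_copies(value, overloaded)
    print(with_parallel_copies({"source": {}, "sink": {}}, value))
```

Change one setting at a time and rerun the copy, so that you can tell which adjustment actually relieved the load.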

For more information, please check: Performance tuning steps

Hope this helps. Please let us know if you have any further queries.
