New Breakthrough in Big Data Technologies: The NullSQL Paradigm Shift

Mammoth, the NullSQL Tool

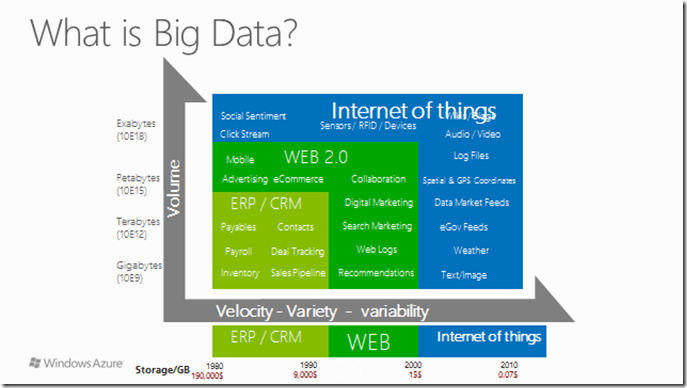

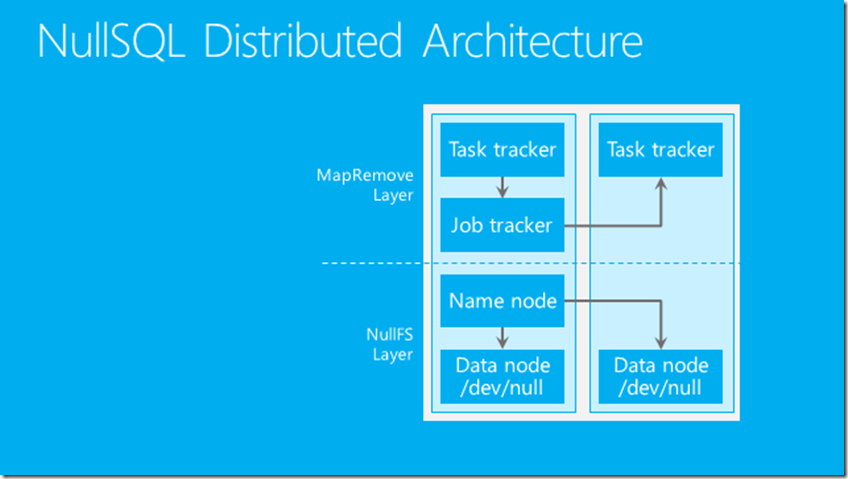

Most of us by now understand the properties of big data, and many of us already work with big data tools such as Hadoop or with NoSQL databases. Over the last two months I've spent some of my spare time prototyping a new set of tools that can help the big data industry accelerate its progress in handling large amounts of data. The goal of the first version, code-named Mammoth, is to get the new distributed file system fully implemented and tested. NullFS, the Null File System, consists of a name node that keeps track of a set of data nodes arranged in a cluster.

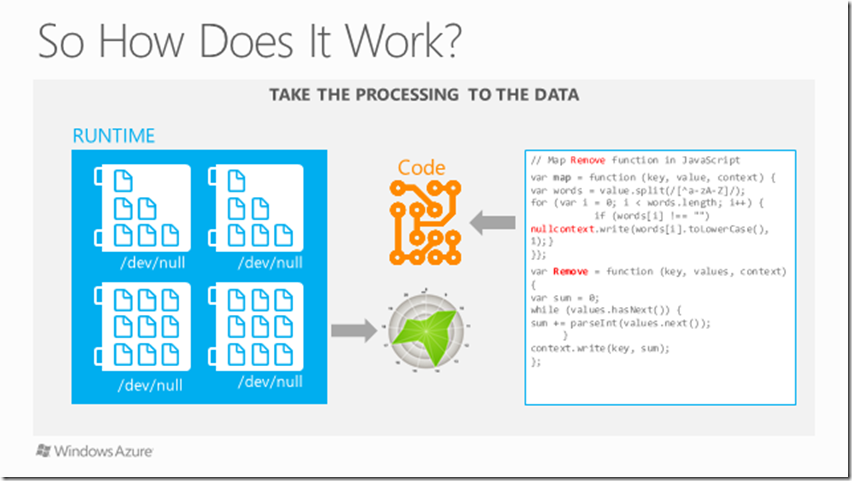

Each of these data nodes contains a write-only storage device, which has the advantage of being able to hold any amount of data you write to it. This device is the big compute equivalent of achieving quantum computing, and it is the underlying breakthrough behind the NullSQL paradigm. I have tested my implementation with over 1 exabyte of real data, including live data from my inbox. The results are promising: on a cluster of 16 nodes, I was able to process more than 1 exabyte of data in minutes.

The architecture of Mammoth is similar to Hadoop's: it is portable, distributed, fault-tolerant, and scalable. More importantly, it satisfies all three properties of the CAP theorem: consistency, availability, and partition tolerance. It has two layers, the NullFS layer and the MapRemove layer. The MapRemove layer optimally copies data to whichever device is free. We've fully implemented the device drivers for both Unix and Windows: /dev/null (Unix) and NUL (Windows). The job tracker and task tracker can optimally handle batch processing jobs as well as jobs submitted by different users.
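To make the NullFS layer a little more concrete, here is a minimal Python sketch, assuming a name node that places writes round-robin across 16 data nodes, each backed by the platform's null device. The `DataNode` and `NameNode` classes and the round-robin placement policy are hypothetical illustrations, not Mammoth's actual code; the only real API used is Python's `os.devnull`, which resolves to `/dev/null` on Unix and `nul` on Windows.

```python
import os


class DataNode:
    """Hypothetical write-only data node backed by the platform's null device."""

    def __init__(self, node_id: int) -> None:
        self.node_id = node_id
        # os.devnull is "/dev/null" on Unix and "nul" on Windows.
        self._device = open(os.devnull, "wb")

    def write(self, data: bytes) -> int:
        # The device accepts any amount of data and reports it all as written.
        return self._device.write(data)

    def read(self) -> bytes:
        # Write-only by design: nothing can be read back.
        raise PermissionError(f"DataNode {self.node_id} is write-only")

    def close(self) -> None:
        self._device.close()


class NameNode:
    """Hypothetical name node that keeps track of a fixed set of data nodes."""

    def __init__(self, num_nodes: int = 16) -> None:
        self.data_nodes = [DataNode(i) for i in range(num_nodes)]
        self._next = 0

    def store(self, data: bytes) -> int:
        # Round-robin placement: the next node in line is always "free".
        node = self.data_nodes[self._next]
        self._next = (self._next + 1) % len(self.data_nodes)
        return node.write(data)

    def shutdown(self) -> None:
        for node in self.data_nodes:
            node.close()


if __name__ == "__main__":
    cluster = NameNode()
    print(cluster.store(b"live data from my inbox"))  # prints 23
    cluster.shutdown()
```

Every `store` call reports the full number of bytes as written, which is the "hold any amount of data" property of the write-only device; any attempt to read the data back fails by design.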

Here’s an example of a simple MapRemove job: each data node runs the Map process, writes its results to /dev/null, and sends any leftover data to the global NullContext; the Remove process then simply checks that there are no more bits left to be processed. This simple programming pattern allows constant scaling, O(16). With constant scaling you will never need a cluster larger than 16 nodes, because the processing time stays the same regardless of how many nodes you use beyond 16 or how much data you need to process.
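The MapRemove job described above can be sketched in Python as follows, assuming each Map step writes its chunk to the null device and forwards any bytes the device did not accept to a shared context. `NullContext`, `map_phase`, `remove_phase`, and `run_map_remove` are hypothetical names chosen for illustration; the chunks run sequentially here, whereas in a real cluster each data node would run its own Map step.

```python
import os
from typing import Iterable, List


class NullContext:
    """Hypothetical global context that collects leftover data from Map steps."""

    def __init__(self) -> None:
        self.leftover: List[bytes] = []


def map_phase(chunk: bytes, context: NullContext) -> None:
    # Map: write this chunk's results to the null device.
    with open(os.devnull, "wb") as sink:
        written = sink.write(chunk)
    # Send any bytes the device did not accept to the global context.
    context.leftover.append(chunk[written:])


def remove_phase(context: NullContext) -> bool:
    # Remove: check that there are no more bits left to be processed.
    return all(len(bits) == 0 for bits in context.leftover)


def run_map_remove(chunks: Iterable[bytes]) -> bool:
    context = NullContext()
    # Sequential stand-in for data nodes running Map in parallel.
    for chunk in chunks:
        map_phase(chunk, context)
    return remove_phase(context)


if __name__ == "__main__":
    print(run_map_remove([b"inbox" * 1000, b"more data"]))  # prints True
```

Because the null device accepts every byte, the leftover list only ever contains empty byte strings, so the Remove check passes no matter how much data goes in.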

NullSQL

Mammoth will be available on 2/29 of next year, but pre-alpha versions are already available. The NullSQL paradigm, with NullFS and MapRemove, allows users to store any amount of data, write-only, at constant speed on a cluster of fewer than 16 compute nodes. I believe it will soon be a valuable tool for the many organizations that need to store write-only data economically. It also opens up new opportunities for cloud companies to offer this as a secure storage service, since write-only storage is inherently secure.

Conclusion

Happy 4/1. @wenmingye