Open Source Private Traffic Manager and Regional Failover

Many web applications cannot tolerate downtime in the event of a regional data center failure. Failover to a secondary region can be orchestrated with solutions such as Azure Traffic Manager, which monitors multiple end-points and, based on their availability and performance, directs users to a region that is available or close to them.

Azure Traffic Manager uses a public end-point (e.g., https://myweb.trafficmanager.net). There are, however, many mission-critical, line-of-business web applications that are private. They are hosted in the cloud on private virtual networks that are peered with on-premises networks. Since they are accessed through a private end-point (e.g., https://myweb.contoso.local), Azure Traffic Manager cannot be used to orchestrate failover for such applications. One solution is to use commercial appliances such as BIG-IP from F5. These commercial solutions are sophisticated but can be hard to configure, and they can be expensive to operate, so for development and testing scenarios, or when budgets are tight, it is worth looking at open source alternatives.

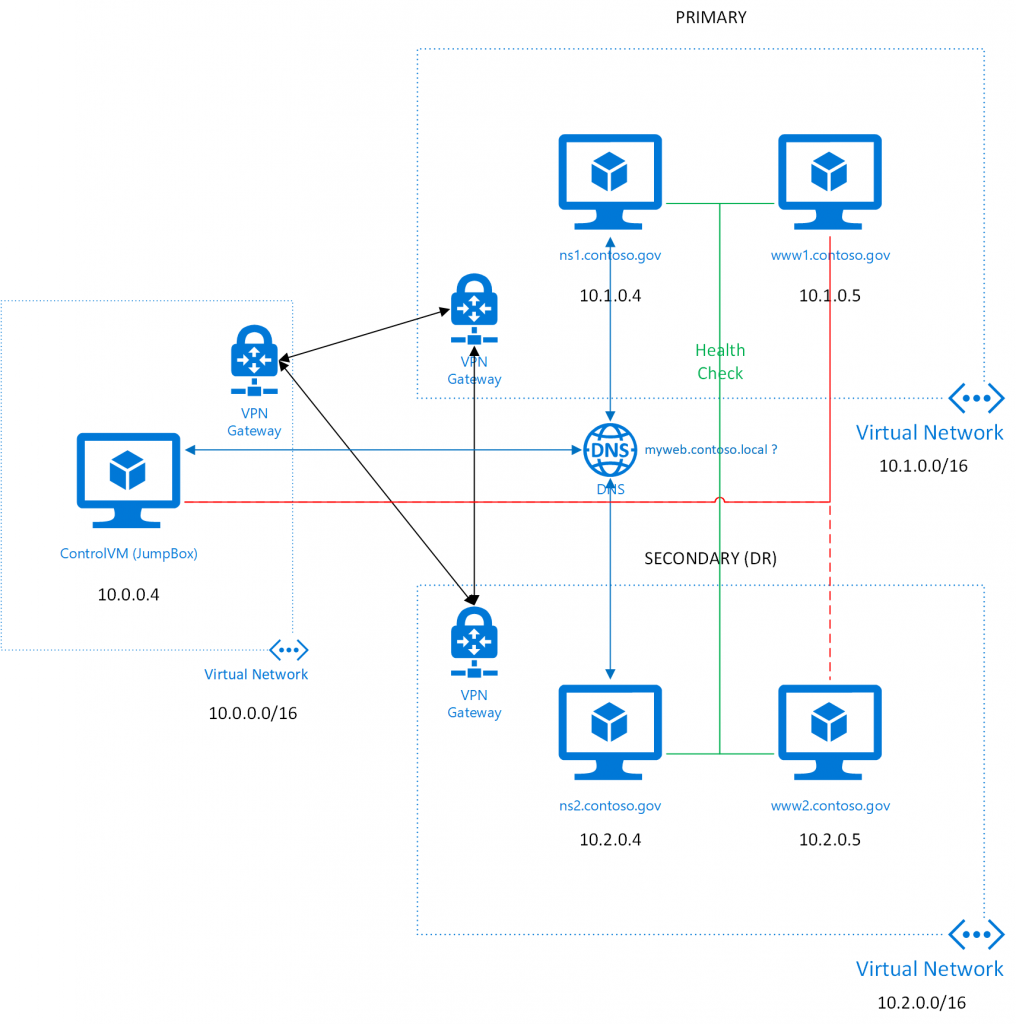

In this blog post, I describe how to use the Polaris Global Server Load Balancer (GSLB) to orchestrate regional failover on a private network consisting of three virtual networks in three different regions. In this hypothetical scenario, the "Contoso" organization has a private network extended into the cloud and would like to deploy a web application at the URL https://myweb.contoso.local. Under normal circumstances, entering that URL into a browser should take users to a cloud deployment in a private virtual network (e.g., 10.1.0.5), but if that entire region is down, users should be directed to another private network in a different region (e.g., 10.2.0.5). Here we simulate the on-premises network that is peered with the cloud regions by deploying a jump box with a public IP address (for easy access) in a third region. The topology looks something like this:

This entire topology, with name servers, web servers, control VM, etc., can be deployed using the PrivateTrafficManager Azure Resource Manager template, which I have uploaded to GitHub.com. I have deployed this template successfully in both Azure Commercial Cloud and Azure US Government Cloud. Be aware that the deployment is slow: it uses three (!) Virtual Network Gateways, which can take around 45 minutes to deploy. Also be aware that the VM SKUs must be available in the regions you choose, so your deployment may fail depending on your regional choices. In my testing, I have used the regions eastus, westus2, and westcentralus in the commercial cloud and usgovvirginia, usgovtexas, and usgovarizona in the government cloud, but you can experiment.
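Because a deployment can fail late if a VM size is not offered in one of the chosen regions, it may be worth checking availability up front. The following is a minimal sketch, assuming the Azure CLI is installed and you have already run `az login`; the function name `check_size_in_regions` and the example size `Standard_DS1_v2` are hypothetical, so substitute the size your copy of the template actually uses.

```bash
#!/usr/bin/env bash
# Sketch: check that a VM size is offered in each candidate region before
# deploying. Requires the Azure CLI ("az") and a prior "az login".
# The size passed in (e.g. Standard_DS1_v2) is a hypothetical example.

# check_size_in_regions SIZE REGION...
# Prints "REGION: ok" or "REGION: MISSING" for each region and returns
# non-zero if the size is unavailable in any of them.
check_size_in_regions() {
    local size=$1; shift
    local region names missing=0
    for region in "$@"; do
        # --size filters by (partial) size name; without --all, sizes that are
        # not available to the current subscription are left out.
        names=$(az vm list-skus --location "$region" --size "$size" \
                    --resource-type virtualMachines --query "[].name" --output tsv)
        # Require an exact, whole-line match on the size name
        if grep -qxF "$size" <<< "$names"; then
            echo "$region: ok"
        else
            echo "$region: MISSING"
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `check_size_in_regions Standard_DS1_v2 eastus westus2 westcentralus` prints one line per region and exits non-zero if any region lacks the size.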

After deployment, you should have three virtual networks (10.0.0.0/16, 10.1.0.0/16, 10.2.0.0/16) connected via the VPN gateways. In the future, one would probably consider Global VNet Peering, which is currently in public preview in Azure Commercial Cloud. The first network hosts a jump box (or ControlVM), which we simply use here to test the configuration. It is accessible via RDP using a public IP address. A simple modification would be to eliminate the public IP address and use a Point-to-Site VPN to connect to the first virtual network. Each of the other two networks contains a name server and a web server. The set in 10.1.0.0/16 is considered the primary one.

When the user enters https://myweb.contoso.local into their browser, traffic should go to the first web server, www1.contoso.local (10.1.0.5), unless it is unreachable. In that case, traffic goes to the second web server, www2.contoso.local (10.2.0.5). Both name servers (10.1.0.4 and 10.2.0.4) are listed as DNS servers on all networks, and both run Polaris GSLB, so if one of them is down, name resolution requests go to the other.
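The failover decision itself can be summarized as "answer with the first healthy web server in priority order". The bash sketch below illustrates only that idea; it is not how Polaris is implemented, and the function `pick_member` and its health-check argument are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch of failover-group selection: return the first member, in priority
# order, whose health check passes. Illustration only; Polaris has its own
# implementation and its own "fallback" setting for the all-down case.

# pick_member CHECK_CMD IP...
# CHECK_CMD is a command or function that takes an IP and exits 0 if healthy.
# If no member is healthy, this sketch (by its own choice) prints the first
# member and returns 1.
pick_member() {
    local check=$1; shift
    local ip
    for ip in "$@"; do
        if "$check" "$ip"; then
            echo "$ip"
            return 0
        fi
    done
    # After the shift, $1 is the first (primary) member
    echo "$1"
    return 1
}
```

With a health check that fails for 10.1.0.5, `pick_member my_check 10.1.0.5 10.2.0.5` prints 10.2.0.5, which matches the failover behavior tested later in this post.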

The Polaris wiki has sufficient information to set up the configuration; the main configuration file is polaris-lb.yaml:

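[plain]
pools:
    www-example:
        # HTTP health check against each member
        monitor: http
        monitor_params:
            use_ssl: false
            hostname: myweb.contoso.local
            url_path: /
        # failover group: answer with a single healthy member
        lb_method: fogroup
        # what to answer when no member is healthy (see the Polaris wiki)
        fallback: any
        members:
        - ip: 10.1.0.5
          name: www1
          weight: 1
        - ip: 10.2.0.5
          name: www2
          weight: 1

globalnames:
    # the name clients resolve (note the trailing dot)
    myweb.contoso.local.:
        pool: www-example
        # 1-second TTL so clients re-resolve quickly after a failover
        ttl: 1
[/plain]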

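This defines a pool with one endpoint in each of the two regions, the health monitor used to check those endpoints, and the global name myweb.contoso.local that is answered from the pool. In the example, we also install a polaris-topology.yaml file: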

[plain]
datacenter1:
    - 10.1.0.5/32
datacenter2:
    - 10.2.0.5/32
[/plain]
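Each entry in the topology file maps a region name to a list of CIDR blocks. The sketch below shows how an IPv4 address would be matched against those blocks; the region names and CIDRs are copied from the file above, while the helper functions `ip_to_int`, `in_cidr`, and `region_of` are hypothetical illustrations, not Polaris code.

```bash
#!/usr/bin/env bash
# Sketch: find which region of polaris-topology.yaml an IPv4 address falls
# into. Region names/CIDRs mirror the file above; the lookup is illustrative.

# ip_to_int A.B.C.D -> 32-bit integer
ip_to_int() {
    local IFS=. a b c d
    read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_cidr IP NET/PREFIX -> exit 0 if IP lies inside the network
in_cidr() {
    local ip net prefix mask
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    prefix=${2#*/}
    mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    (( (ip & mask) == (net & mask) ))
}

# Region -> space-separated CIDR list, mirroring polaris-topology.yaml
declare -A TOPOLOGY=(
    [datacenter1]="10.1.0.5/32"
    [datacenter2]="10.2.0.5/32"
)

# region_of IP -> prints the matching region; returns 1 if none matches
region_of() {
    local region cidr
    for region in "${!TOPOLOGY[@]}"; do
        # Unquoted on purpose: split the CIDR list on spaces
        for cidr in ${TOPOLOGY[$region]}; do
            if in_cidr "$1" "$cidr"; then
                echo "$region"
                return 0
            fi
        done
    done
    return 1
}
```

With the /32 entries above, only the two web server addresses match; `region_of 10.2.0.5` prints `datacenter2`.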

The name servers are configured with the setup_ns.sh script:

[bash]
#!/bin/bash
# Usage: setup_ns.sh <mysql-root-password>
sql_server_password=$1

# Pre-seed debconf answers so MySQL and the PowerDNS MySQL backend install unattended
debconf-set-selections <<< "mysql-server mysql-server/root_password password $sql_server_password"
debconf-set-selections <<< "mysql-server mysql-server/root_password_again password $sql_server_password"
debconf-set-selections <<< "pdns-backend-mysql pdns-backend-mysql/password-confirm password $sql_server_password"
debconf-set-selections <<< "pdns-backend-mysql pdns-backend-mysql/app-password-confirm password $sql_server_password"
debconf-set-selections <<< "pdns-backend-mysql pdns-backend-mysql/mysql/app-pass password $sql_server_password"
debconf-set-selections <<< "pdns-backend-mysql pdns-backend-mysql/mysql/admin-pass password $sql_server_password"
debconf-set-selections <<< "pdns-backend-mysql pdns-backend-mysql/dbconfig-remove select true"
debconf-set-selections <<< "pdns-backend-mysql pdns-backend-mysql/dbconfig-reinstall select false"
debconf-set-selections <<< "pdns-backend-mysql pdns-backend-mysql/dbconfig-upgrade select true"
debconf-set-selections <<< "pdns-backend-mysql pdns-backend-mysql/dbconfig-install select true"

apt-get update
apt-get -y install mysql-server mysql-client pdns-server pdns-backend-mysql pdns-backend-remote memcached python3-pip

# Add Azure DNS (168.63.129.16) as recursor for names outside our zones
sudo sed -i.bak 's/# recursor=no/recursor=168.63.129.16/' /etc/powerdns/pdns.conf

# Install Polaris
git clone https://github.com/polaris-gslb/polaris-gslb.git
cd polaris-gslb
sudo python3 setup.py install

# Download the PowerDNS remote backend config, Polaris configs, and systemd unit
wget https://raw.githubusercontent.com/hansenms/PrivateTrafficManager/master/PrivateTrafficManager/scripts/pdns/pdns.local.remote.conf
wget https://raw.githubusercontent.com/hansenms/PrivateTrafficManager/master/PrivateTrafficManager/scripts/polaris/polaris-health.yaml
wget https://raw.githubusercontent.com/hansenms/PrivateTrafficManager/master/PrivateTrafficManager/scripts/polaris/polaris-lb.yaml
wget https://raw.githubusercontent.com/hansenms/PrivateTrafficManager/master/PrivateTrafficManager/scripts/polaris/polaris-pdns.yaml
wget https://raw.githubusercontent.com/hansenms/PrivateTrafficManager/master/PrivateTrafficManager/scripts/polaris/polaris-topology.yaml
wget https://raw.githubusercontent.com/hansenms/PrivateTrafficManager/master/PrivateTrafficManager/scripts/polaris.service

cp pdns.local.remote.conf /etc/powerdns/pdns.d/
cp *.yaml /opt/polaris/etc/
systemctl restart pdns.service

# Install the systemd unit so Polaris starts again after a reboot
cp polaris.service /etc/systemd/system
systemctl daemon-reload
systemctl enable polaris.service
systemctl start polaris.service

# Create the contoso.local zone with A records for the name and web servers
pdnsutil create-zone contoso.local
pdnsutil add-record contoso.local ns1 A 10.1.0.4
pdnsutil add-record contoso.local ns2 A 10.2.0.4
pdnsutil add-record contoso.local www1 A 10.1.0.5
pdnsutil add-record contoso.local www2 A 10.2.0.5

exit 0
[/bash]

A few different things are achieved in that script:

- PowerDNS with a MySQL backend is installed. This involves installing MySQL server and setting its root password. For the script to run unattended during template deployment, we pre-seed the answers needed during installation.

- Polaris GSLB is installed.

- The contoso.local zone is established.

- A records are created for the name servers and the web servers.

- We ensure that Polaris GSLB runs on reboot of the name servers.
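Once the name servers are up, a quick sanity check is to ask each of them directly for the same name and confirm that they agree. This is a minimal sketch assuming `dig` is available (package `dnsutils` on Ubuntu); `answers` is a hypothetical helper, and 10.1.0.4 and 10.2.0.4 are the ns1 and ns2 addresses created by the script.

```bash
#!/usr/bin/env bash
# Sketch: query each name server directly and check that they agree.
# Requires dig (dnsutils). Function name is hypothetical.

# answers NAME SERVER...
# Prints "SERVER ANSWER" per server; returns non-zero if a server does not
# answer or the servers disagree.
answers() {
    local name=$1; shift
    local server ans first="" rc=0
    for server in "$@"; do
        # +short prints only record data; dig still prints ";;" error lines
        # on timeout, so drop comment/blank lines and keep the first answer.
        ans=$(dig +short +time=2 +tries=1 "@$server" "$name" A \
                | awk '!/^;/ && NF { print; exit }')
        echo "$server ${ans:-<no answer>}"
        if [[ -z $ans ]]; then
            rc=1
        elif [[ -z $first ]]; then
            first=$ans
        elif [[ $ans != "$first" ]]; then
            rc=1
        fi
    done
    return "$rc"
}
```

While www1 is healthy, `answers myweb.contoso.local 10.1.0.4 10.2.0.4` should print 10.1.0.5 for both servers.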

For the web servers, we use the setup_www.sh script:

[bash]
#!/bin/bash
machine_message="<html><h1>Machine: $(hostname)</h1></html>"

# Install Docker CE from Docker's apt repository
apt-get update
apt-get -y install apt-transport-https ca-certificates curl software-properties-common
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | apt-key add -
add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"
apt-get -y update
apt-get -y install docker-ce

# Write a page that identifies this machine
mkdir -p /var/www
echo "$machine_message" > /var/www/index.html

# Serve /var/www with nginx on port 80; restart the container after reboots
docker run --detach --restart unless-stopped -p 80:80 --name nginx1 -v /var/www:/usr/share/nginx/html:ro nginx
[/bash]

This script installs Docker (Community Edition), creates an HTML page displaying the machine name, and runs nginx in a Docker container to serve this page. This only serves to show whether the page comes from one region or the other in this example; in a real scenario, users should not experience any difference between the two regions.
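If you prefer the command line to a browser, the machine name can be pulled out of the page. This sketch assumes a Linux machine on the private network with `curl` installed (the post itself uses a Windows jump box); `machine_from_html` and `served_by` are hypothetical helpers that rely on the exact `<h1>Machine: ...</h1>` markup written by setup_www.sh.

```bash
#!/usr/bin/env bash
# Sketch: report which web server answered by extracting the host name from
# the "<h1>Machine: ...</h1>" page written by setup_www.sh.
# Helper names are hypothetical.

# machine_from_html: reads HTML on stdin, prints the machine name (if any)
machine_from_html() {
    sed -n 's|.*<h1>Machine: \([^<]*\)</h1>.*|\1|p'
}

# served_by URL: fetch the page and print which machine served it
served_by() {
    curl --silent --max-time 5 "$1" | machine_from_html
}
```

For example, `served_by http://myweb.contoso.local/` prints the host name of whichever web server answered (nginx listens on plain HTTP, port 80, in this setup).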

Note that we are not using the Azure Docker Extension here. It is not yet available in Azure Government, and the approach above ensures that the template deploys in either Azure Commercial Cloud or Azure US Government Cloud.
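To check a web server by hand in roughly the way the `http` monitor in polaris-lb.yaml does, you can send a similar request from one of the name servers. This sketch assumes the monitor issues a plain HTTP GET of `url_path` with `hostname` as the Host header, and it treats any 2xx/3xx status as healthy; both are assumptions, and Polaris's exact criteria may differ. `probe_member` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: approximate the http monitor by hand (use_ssl: false, url_path "/",
# Host header myweb.contoso.local). The 2xx/3xx "healthy" rule is an
# assumption of this sketch. Function name is hypothetical.

# probe_member IP [HOSTNAME] [PATH] -> prints "IP STATUS", exit 0 if healthy
probe_member() {
    local ip=$1 host=${2:-myweb.contoso.local} path=${3:-/}
    local code
    # --max-time keeps the probe from hanging on an unreachable member;
    # curl reports 000 as the status when no HTTP response arrives.
    code=$(curl --silent --output /dev/null --max-time 5 \
                --header "Host: $host" --write-out '%{http_code}' \
                "http://$ip$path")
    echo "$ip $code"
    [[ $code =~ ^[23][0-9][0-9]$ ]]
}
```

For example, `probe_member 10.1.0.5` should print `10.1.0.5 200` while www1 is running.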

After deploying the template, you can log into the jump box using RDP; a first test is to ping myweb.contoso.local:

[powershell]
Windows PowerShell
Copyright (C) 2016 Microsoft Corporation. All rights reserved.

PS C:\Users\mihansen> ping myweb.contoso.local

Pinging myweb.contoso.local [10.1.0.5] with 32 bytes of data:
Reply from 10.1.0.5: bytes=32 time=28ms TTL=62
Reply from 10.1.0.5: bytes=32 time=28ms TTL=62
Reply from 10.1.0.5: bytes=32 time=29ms TTL=62
Reply from 10.1.0.5: bytes=32 time=29ms TTL=62

Ping statistics for 10.1.0.5:
    Packets: Sent = 4, Received = 4, Lost = 0 (0% loss),
Approximate round trip times in milli-seconds:
    Minimum = 28ms, Maximum = 29ms, Average = 28ms
PS C:\Users\mihansen>
[/powershell]

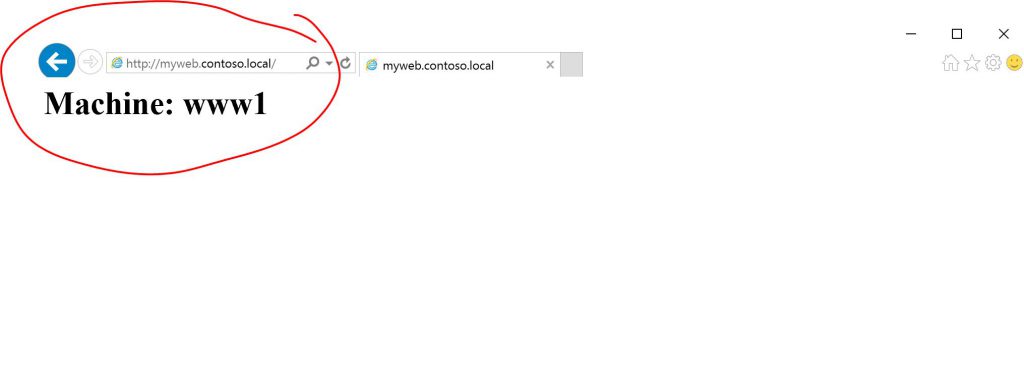

We can also open a browser and verify that we are getting the page from the first web server (www1):

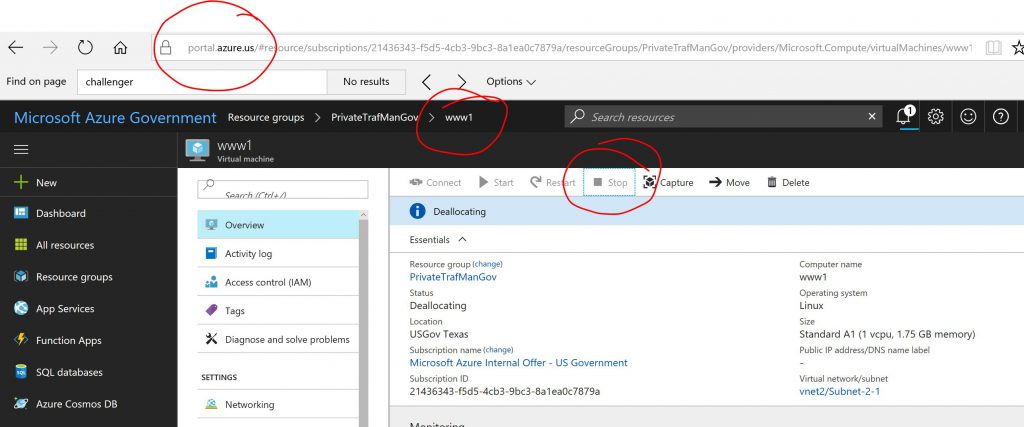

Now we can go to the Azure Portal (https://portal.azure.us for Azure Government, used here, or https://portal.azure.com for Azure Commercial) and cause the primary web server to fail. In this case, we simply stop the primary web server VM.
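The same step can be scripted with the Azure CLI. This is a sketch under assumptions: the resource group and VM names are placeholders for whatever your deployment created, and `set_vm_state` is a hypothetical wrapper around the real `az vm deallocate` and `az vm start` commands (deallocating matches what the portal's Stop button does).

```bash
#!/usr/bin/env bash
# Sketch: fail and restore the primary web server from the Azure CLI.
# Resource group / VM names are placeholders; the wrapper is hypothetical.

# set_vm_state RESOURCE_GROUP VM_NAME stop|start
set_vm_state() {
    local rg=$1 vm=$2 action=$3
    case "$action" in
        # deallocate mirrors the portal's Stop button
        stop)  az vm deallocate --resource-group "$rg" --name "$vm" ;;
        start) az vm start --resource-group "$rg" --name "$vm" ;;
        *)     echo "usage: set_vm_state RG VM stop|start" >&2; return 2 ;;
    esac
}
```

For Azure Government, run `az cloud set --name AzureUSGovernment` before `az login`. Then, for example, `set_vm_state my-rg my-www1-vm stop` fails the primary web server and `set_vm_state my-rg my-www1-vm start` brings it back.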

After stopping it, wait a few seconds and repeat the ping test:

[powershell]
PS C:\Users\mihansen> ping myweb.contoso.local

Pinging myweb.contoso.local [10.2.0.5] with 32 bytes of data:
Reply from 10.2.0.5: bytes=32 time=49ms TTL=62
Reply from 10.2.0.5: bytes=32 time=46ms TTL=62
Reply from 10.2.0.5: bytes=32 time=53ms TTL=62
Reply from 10.2.0.5: bytes=32 time=48ms TTL=62

Ping statistics for 10.2.0.5:
    Packets: Sent = 4, Received = 4, Lost = 0 (0% loss),
Approximate round trip times in milli-seconds:
    Minimum = 46ms, Maximum = 53ms, Average = 49ms
PS C:\Users\mihansen>
[/powershell]

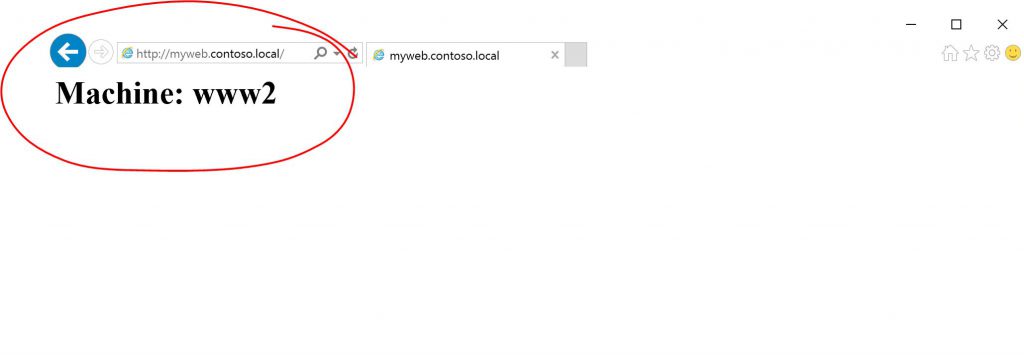

As we can see, clients are now sent to the secondary region. If we go to the browser (you may have to close it and force a refresh to clear the cache), the page should now come from the secondary region as well.

Of course, if you restart www1, clients will be directed back to the primary region.
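To measure how quickly the DNS answer changes after stopping or restarting www1, you can poll one of the name servers. A minimal sketch, assuming `dig` is available on a Linux machine in the network; `wait_for_answer`, the 1-second polling interval, and the default 120-second timeout are illustrative choices, not values taken from Polaris.

```bash
#!/usr/bin/env bash
# Sketch: poll a name server until it returns an expected address and report
# how long that took. Requires dig. Function name, interval, and default
# timeout are illustrative assumptions.

# wait_for_answer NAME EXPECTED_IP SERVER [TIMEOUT_SECONDS]
wait_for_answer() {
    local name=$1 expected=$2 server=$3 timeout=${4:-120}
    local start=$SECONDS ans=""
    while (( SECONDS - start < timeout )); do
        # Query the server directly (bypassing local caches); skip ";;" lines
        ans=$(dig +short +time=2 +tries=1 "@$server" "$name" A \
                | awk '!/^;/ && NF { print; exit }')
        if [[ $ans == "$expected" ]]; then
            echo "$name -> $ans after $(( SECONDS - start ))s"
            return 0
        fi
        sleep 1
    done
    echo "timed out after ${timeout}s (last answer: ${ans:-none})" >&2
    return 1
}
```

For example, after stopping www1, `wait_for_answer myweb.contoso.local 10.2.0.5 10.1.0.4` reports roughly how many seconds passed before ns1 started answering with the secondary address. Because the name server is queried directly, the measurement excludes client-side DNS caching.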

In summary, we have established a private network with a private DNS zone. A private traffic manager has been built from open source components, and we have demonstrated failing over to a secondary region. All of this was done without any public end-points for the application itself, so the setup could be entirely private between an on-premises network and Azure.

Let me know if you have comments/suggestions/experiences to share.