Hooray for Buggy Software???

No, this is not a piece on job security for Software QA Professionals. I was recently at a Test Team sprint-end demo. For at least two of the projects shown, the metric of interest to Dev management was “how many bugs did you find?”, and when the answer was either a high number or a high severity (“Three hi-Sev ones!”), the response was applause.

This seemed wrong to me.

My first reaction is, OK, let’s re-phrase this….

“How many high severity defects did we just design and implement into our product?”....“Three?”....“That’s great! (applause)”

or

"Oh Test team, did you catch our graven mistakes?"...."Three of them?"....."Thank you test team! (applause)"

graven: past tense of grave

grave: serious, fraught with danger or peril

IOW...very dangerous, but no longer our problem... :-)

This would indicate that asking "how many bugs?" is the wrong approach, and I dutifully chastised the assembled accordingly. But then later I thought...I too have fallen prey to the siren song of this particular metric...not just long ago in my unenlightened days, but even more recently...why? And what does this mean for the validity of "how many bugs?" Here is my conclusion....

Three Stages

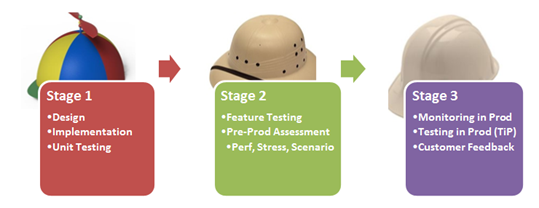

The software development life cycle can be sliced and diced many different ways. Here, to make my point, I will delineate three stages.

The bulleted items are the quality-centric activities we engage in at each stage.

When we ask "how many bugs did we find?", we are focusing on Stage 2. So asking the question is not wrong in itself, but it is an error of omission, in that we neglect the contribution of Stages 1 and 3 to quality.

Stage 1 Quality

When I made the titular quips earlier in this blog entry, I was saying that high bug counts in stage 2 may mean we have a breakdown of quality in stage 1. While developers often lead the design, a solid quality design will be the result of contributions from Test (system suitability and testability) and Program Management (customer focus). Implementation quality is not only dependent on good development practices, but can be integrally tied to unit testing when Test-Driven Development (TDD) is used. Even when TDD is not used, the unit tests can still provide foundational insight that what we asked for is what we got.

Quality Measurements for Stage 1

- Design reviewed with Test and PM (a binary measurement)

- Percent of code that was peer-reviewed. My opinion...should be 100%. This is not only to catch mistakes, but is also a great way for senior developers to guide more junior ones in good development practices like using design patterns and code reuse.

- Unit Test Code Coverage. Yipes, did I say that? Some would fancy this a controversial assertion. But just as bug count is not the be-all and end-all but one piece of the puzzle, so is code coverage.

- Code Coverage Gap Analysis: Of the code not covered, are we missing anything?

- Static Code Analysis [added 10/7]
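To make the measurements above a bit more concrete, here is a minimal sketch of how a team might roll a few of them up for one release. Everything in it is an assumption for illustration: the `Stage1Metrics` class, its field names, and the sample numbers are hypothetical, not taken from any real tool or project.

```python
from dataclasses import dataclass


@dataclass
class Stage1Metrics:
    """Hypothetical Stage 1 numbers for one release (illustrative names only)."""
    design_reviewed_with_test_and_pm: bool  # the binary measurement
    changes_total: int                      # code changes checked in
    changes_peer_reviewed: int              # of those, how many were reviewed
    lines_total: int                        # executable lines in the product
    lines_covered_by_unit_tests: int        # lines exercised by unit tests

    def percent_peer_reviewed(self) -> float:
        if self.changes_total == 0:
            return 0.0
        return 100.0 * self.changes_peer_reviewed / self.changes_total

    def unit_test_coverage(self) -> float:
        if self.lines_total == 0:
            return 0.0
        return 100.0 * self.lines_covered_by_unit_tests / self.lines_total

    def uncovered_lines(self) -> int:
        # Input to the gap analysis: a person still has to decide whether
        # anything important is hiding in these lines.
        return self.lines_total - self.lines_covered_by_unit_tests


# Made-up sample numbers, purely for illustration.
metrics = Stage1Metrics(
    design_reviewed_with_test_and_pm=True,
    changes_total=40,
    changes_peer_reviewed=34,
    lines_total=2000,
    lines_covered_by_unit_tests=1500,
)
print(metrics.percent_peer_reviewed())  # 85.0
print(metrics.unit_test_coverage())     # 75.0
print(metrics.uncovered_lines())        # 500
```

Note that the sketch only counts. The gap analysis itself, deciding whether the uncovered code matters, remains a human judgment.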

Stage 2 Quality

A primary point I am trying to make is that this stage is where many software professionals' minds go when they think of software quality. The error of this perception is best summed up by the adage, "you cannot test quality into your system". [if anyone knows who to credit for this, please let me know]

This is but one stage in the process, and a lot of the quality measurements in stage 1 have their counterparts here:

- Test Plan Reviewed by Dev and PM?

- Percent Test Code peer reviewed

- Integration Test Code Coverage (same caveats as always)

Then there are the ones specific to this stage:

- Documented test results: Especially important for perf and stress as these provide the benchmarks for what we know we can take in production.

- Number and Severity of bugs found. There it is. Yes it can be a valid quality/risk indicator. But it cannot be taken in a vacuum... it must be interpreted along with Stage 1 and Stage 3 results.

- Does a high and severe bug count mean our super-effective stage 2 is going to wring all the risk out of this product, or does it mean our Stage 1 quality is terrible, and we can expect more terribleness to plague our customers?

- Does a low bug count mean our stage 2 tools are wastes of time, or does it mean that stage 1 kicked butt and resulted in high quality code?

Stage 3 Quality

I have spoken before about Testing in Production (TiP) as a valid approach to software services. The acceptance of this approach (if not the approach itself) is somewhat new. Monitoring in Production, however, is not new. Amazon.com is a good example of how well-developed and well-supported monitoring tools, plus other tools that enable rapid response to production issues (i.e. hot fixes), can be part of a quality regimen for services. But you may ask, is allowing defects into production, even if you can find and fix them fast, "quality"? Yes...just ask most Amazon.com users if they have experienced a problem...or see Amazon's customer satisfaction scores.

Even if we accept production as a legitimate quality stage, why not just catch all the defects in stage 2 and not worry about stage 3? The answer is cost. If we attempt to find everything with our Big Up-Front Testing in stage 2, we hit the point of diminishing returns while costs keep increasing. Employing stage 3 along with stages 1 and 2 is a legitimate, cost-effective way to maximize quality for services. And what does this mean for bug counts in stage 2? It means that we should also be keenly aware of the price we are paying to find those bugs, and make sure it is cost effective.
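The cost argument can be illustrated with a little arithmetic. The sketch below is only that, a sketch: the function names, the idea of measuring cost in hours per test pass, and all of the numbers are assumptions made up for this example. It computes what each successive stage 2 test pass costs per bug found, and flags the first pass where that price exceeds a hypothetical cost of finding and fixing a bug in production.

```python
import math


def marginal_cost_per_bug(test_passes):
    """Return hours spent per bug found, for each successive test pass.

    test_passes is a list of (hours_spent, bugs_found) tuples.
    A pass that finds nothing has an infinite cost per bug.
    """
    costs = []
    for hours, bugs_found in test_passes:
        costs.append(hours / bugs_found if bugs_found else math.inf)
    return costs


def first_pass_not_worth_it(test_passes, production_hours_per_bug):
    """Index of the first pass whose cost per bug exceeds the (assumed)
    cost of catching and fixing a bug in production, or None."""
    for index, cost in enumerate(marginal_cost_per_bug(test_passes)):
        if cost > production_hours_per_bug:
            return index
    return None


# Made-up data: four equally expensive passes that find fewer and fewer bugs.
test_passes = [(40, 20), (40, 8), (40, 2), (40, 0)]

print(marginal_cost_per_bug(test_passes))
# [2.0, 5.0, 20.0, inf]
print(first_pass_not_worth_it(test_passes, production_hours_per_bug=10))
# 2
```

In this made-up case the third pass (index 2) is where stage 2 stops paying for itself. A real comparison would also have to price in customer impact for bugs that escape, which a simple hours figure does not capture.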

In Conclusion…

….hey guys who were in that meeting where I railed against your interest in bug counts, I too have been known to engage in a bug count or two. As long as we are on the same page with respect to where bug counts fit within the SDLC universe (and all signs thus far indicate that we are), then I am cool with it.