Important

Some of the features described in this article might only be available in preview. This preview is provided without a service-level agreement, and we don't recommend it for production workloads. Certain features might not be supported or might have constrained capabilities. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

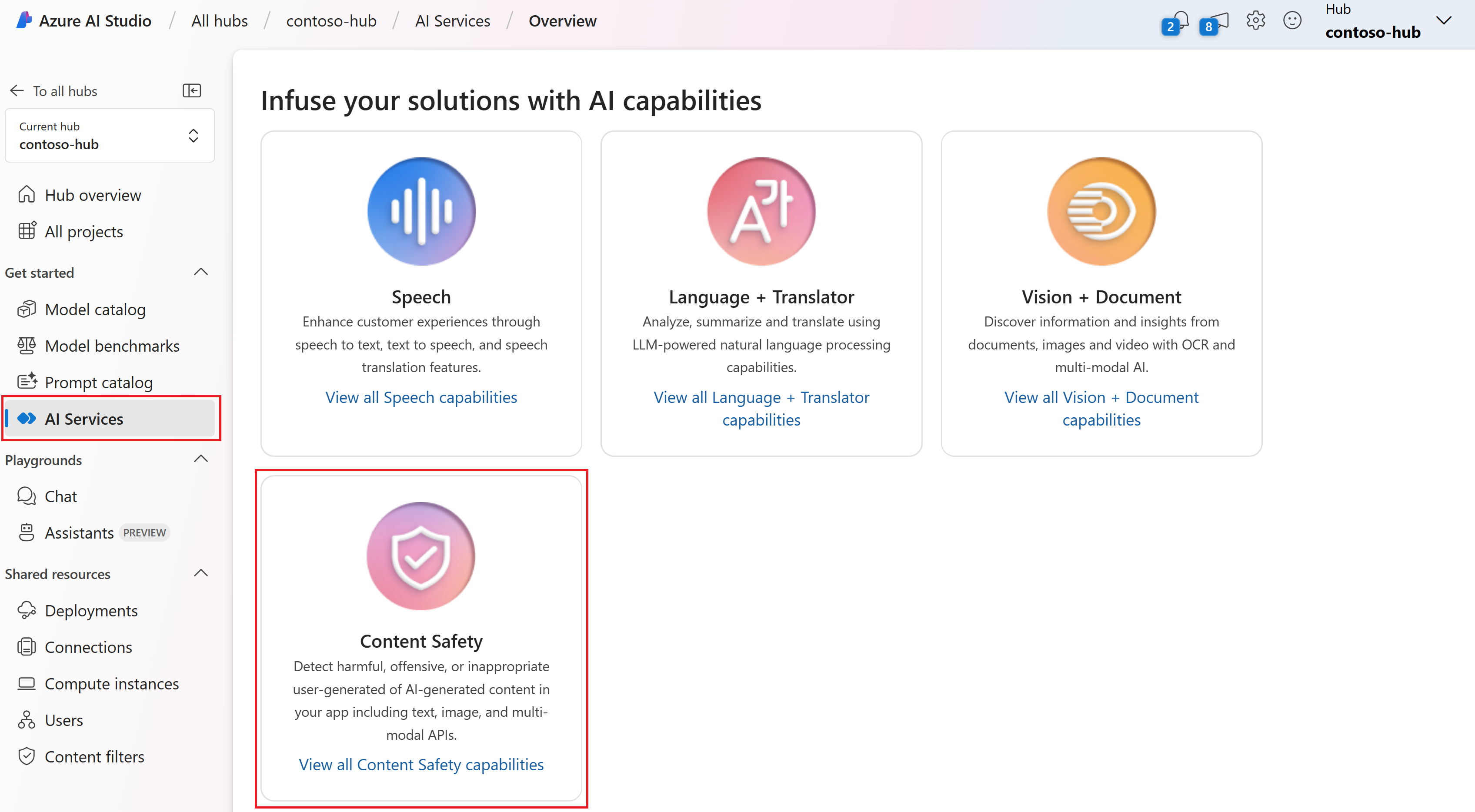

Learn how to use Azure AI Content Safety Prompt Shields to check large language model (LLM) inputs for both User Prompt attacks and Document attacks.

Either select a sample scenario or write your own inputs in the text boxes provided. Prompt Shields analyzes both the user prompt and any documents included with the prompt for potential attacks.

Select Run test to get the result.
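The same check can also be run outside the studio. The following is a minimal sketch, not an official sample: it assumes the Content Safety REST operation `text:shieldPrompt`, the `2024-09-01` API version, and the response fields `userPromptAnalysis` and `documentsAnalysis`, so verify these against the current REST reference before relying on them. The helper names (`build_shield_request`, `summarize_result`, `shield_prompt`) are hypothetical and exist only for illustration.

```python
import json
import urllib.request

# Assumption: API version string; check the current REST reference.
API_VERSION = "2024-09-01"


def build_shield_request(endpoint, key, user_prompt, documents):
    """Build (but do not send) a Prompt Shields request.

    The URL path and body field names are assumptions based on the
    public REST reference, not taken from this article.
    """
    url = (
        f"{endpoint.rstrip('/')}/contentsafety/text:shieldPrompt"
        f"?api-version={API_VERSION}"
    )
    body = json.dumps(
        {"userPrompt": user_prompt, "documents": list(documents)}
    ).encode("utf-8")
    headers = {
        "Ocp-Apim-Subscription-Key": key,
        "Content-Type": "application/json",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def summarize_result(result):
    """Reduce a response dict to per-input attack flags.

    Missing fields are treated as "no attack detected".
    """
    user_attack = bool(
        result.get("userPromptAnalysis", {}).get("attackDetected", False)
    )
    doc_attacks = [
        bool(doc.get("attackDetected", False))
        for doc in result.get("documentsAnalysis", [])
    ]
    return {
        "user_prompt_attack": user_attack,
        "document_attacks": doc_attacks,
        "any_attack": user_attack or any(doc_attacks),
    }


def shield_prompt(endpoint, key, user_prompt, documents):
    """Send the request and return the summarized flags (needs network access)."""
    request = build_shield_request(endpoint, key, user_prompt, documents)
    with urllib.request.urlopen(request) as response:
        return summarize_result(json.load(response))
```

Calling `shield_prompt` with your resource endpoint and key, a user prompt, and a list of document strings would return one flag for the user prompt and one flag per document, mirroring the two kinds of attack the studio test reports.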

Configure content filters for each provided category to match your use case.
