Get started with Document Intelligence

Important

- Azure Cognitive Services Form Recognizer is now Azure AI Document Intelligence.

- Some platforms are still awaiting the renaming update.

- All mention of Form Recognizer or Document Intelligence in our documentation refers to the same Azure service.

This content applies to: v4.0 (preview). Earlier versions: v3.1 (GA), v3.0 (GA).

- Get started with Azure AI Document Intelligence latest preview version (2024-02-29-preview).

This content applies to: v3.1 (GA). Earlier versions: v3.0, v2.1.

- Get started with Azure Form Recognizer latest GA version (2023-07-31).

This content applies to: v3.0 (GA). Newer version: v3.1. Earlier version: v2.1.

- Get started with Azure Form Recognizer legacy GA version (2022-08-31).

Azure AI Document Intelligence / Form Recognizer is a cloud-based Azure AI service that uses machine learning to extract key-value pairs, text, tables, and key data from your documents.

You can easily integrate document processing models into your workflows and applications by using a programming language SDK or calling the REST API.

For this quickstart, we recommend that you use the free service while you're learning the technology. Remember that the number of free pages is limited to 500 per month.

To learn more about the API features and development options, visit our Overview page.

Client library | SDK reference | API reference | Package (NuGet) | Samples | Supported REST API version

In this quickstart, use the following features to analyze and extract data and values from forms and documents:

Layout model—Analyze and extract tables, lines, words, and selection marks like radio buttons and check boxes in documents, without the need to train a model.

Prebuilt model—Analyze and extract common fields from specific document types using a prebuilt model.

Prerequisites

Azure subscription - Create one for free.

The current version of Visual Studio IDE.

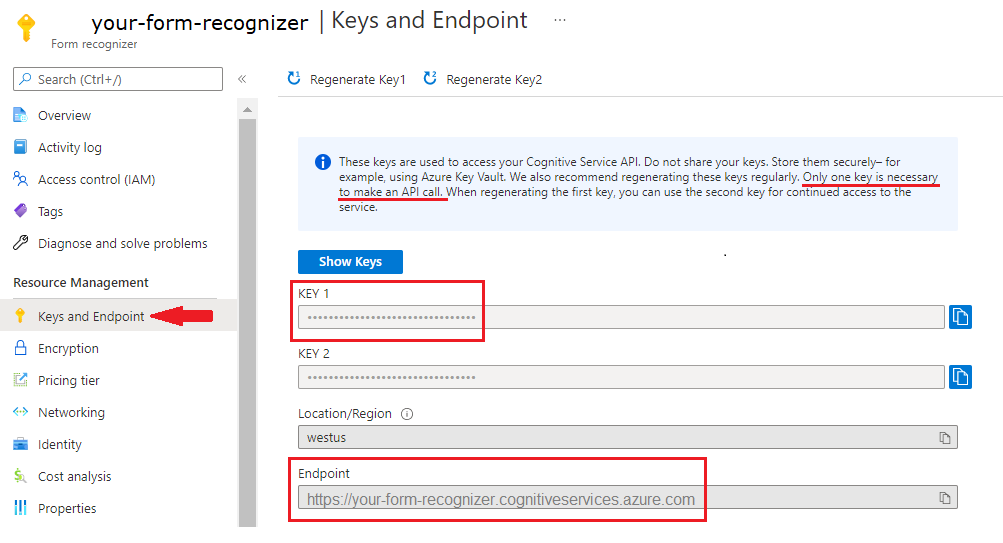

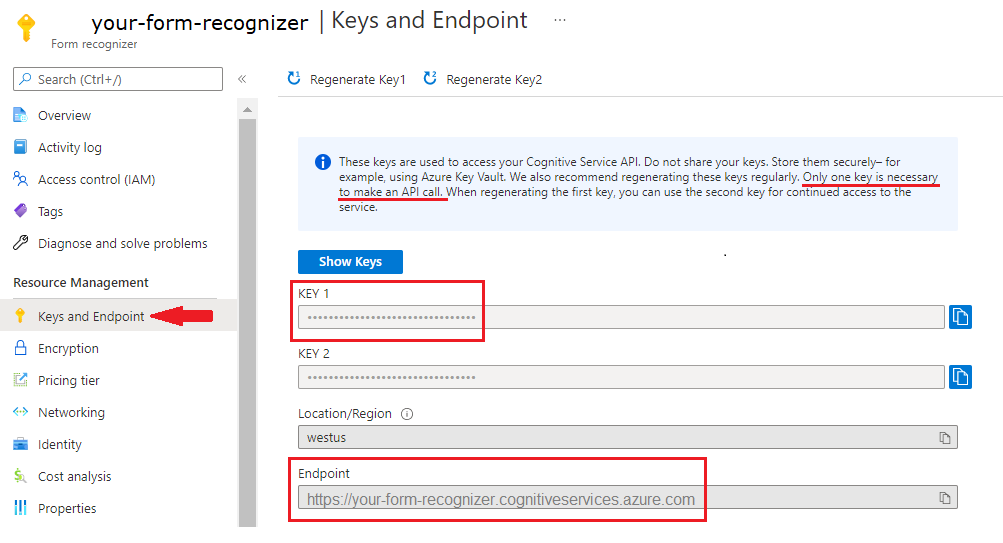

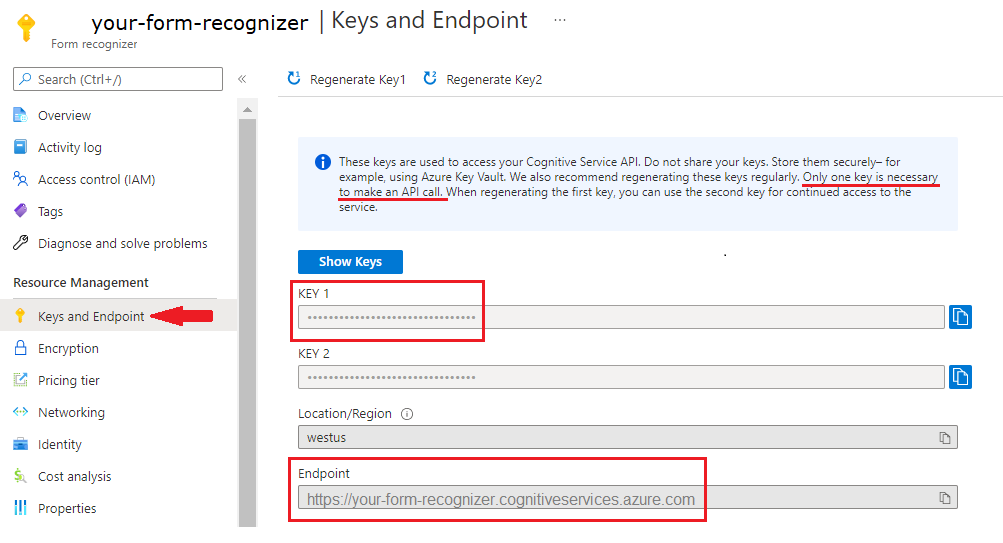

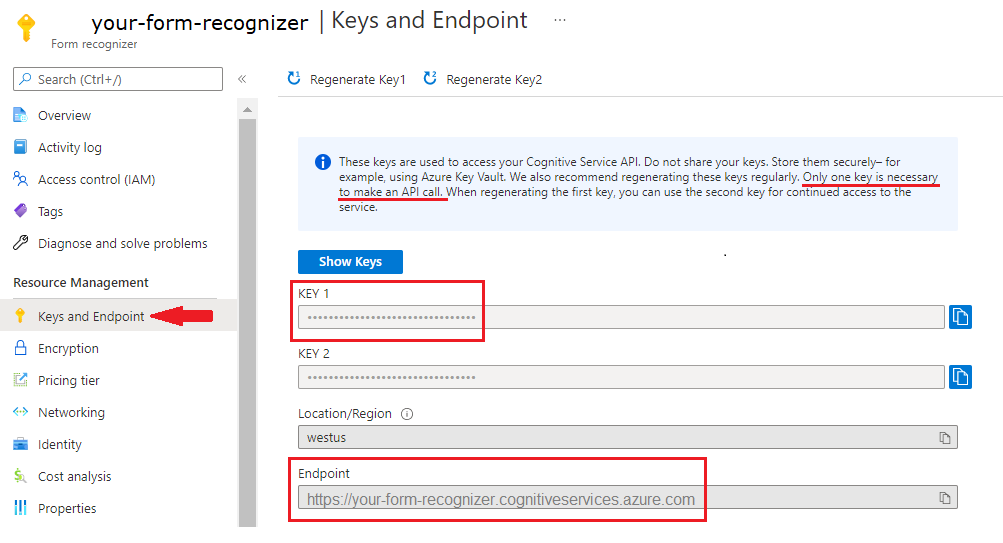

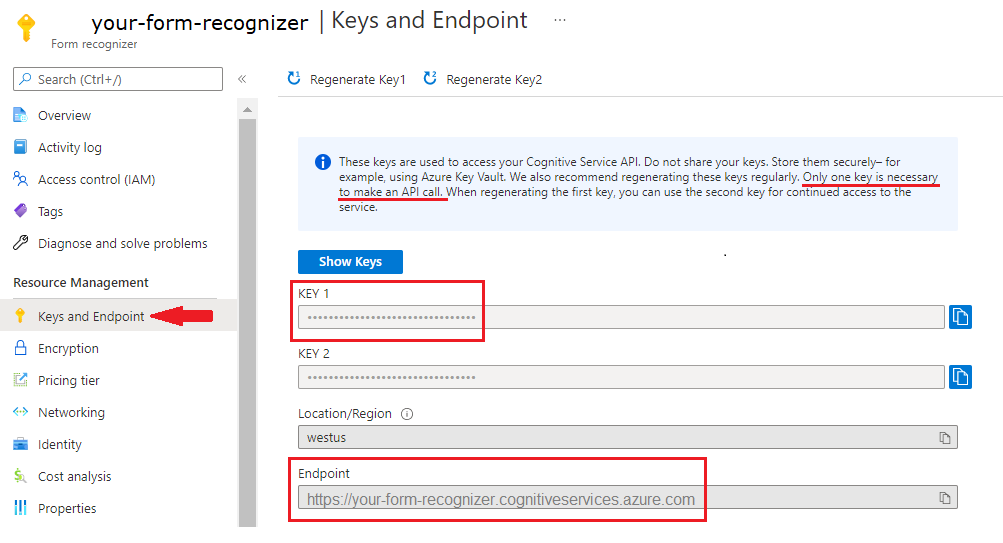

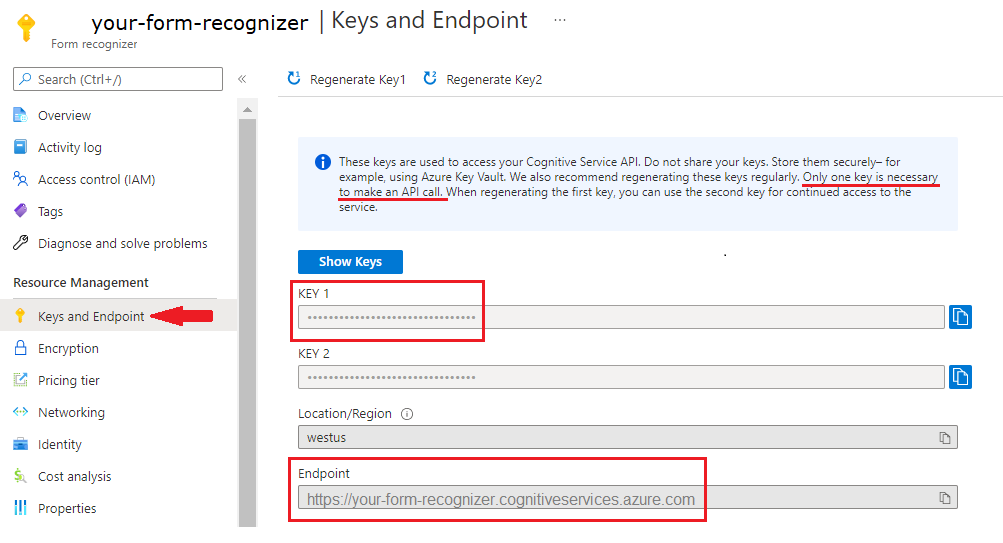

An Azure AI services or Document Intelligence resource. Once you have your Azure subscription, create a single-service or Azure AI multi-service resource, in the Azure portal, to get your key and endpoint.

You can use the free pricing tier (F0) to try the service, and upgrade later to a paid tier for production.

Tip

Create an Azure AI services resource if you plan to access multiple Azure AI services under a single endpoint/key. For Document Intelligence access only, create a Document Intelligence resource. Please note that you'll need a single-service resource if you intend to use Microsoft Entra authentication.

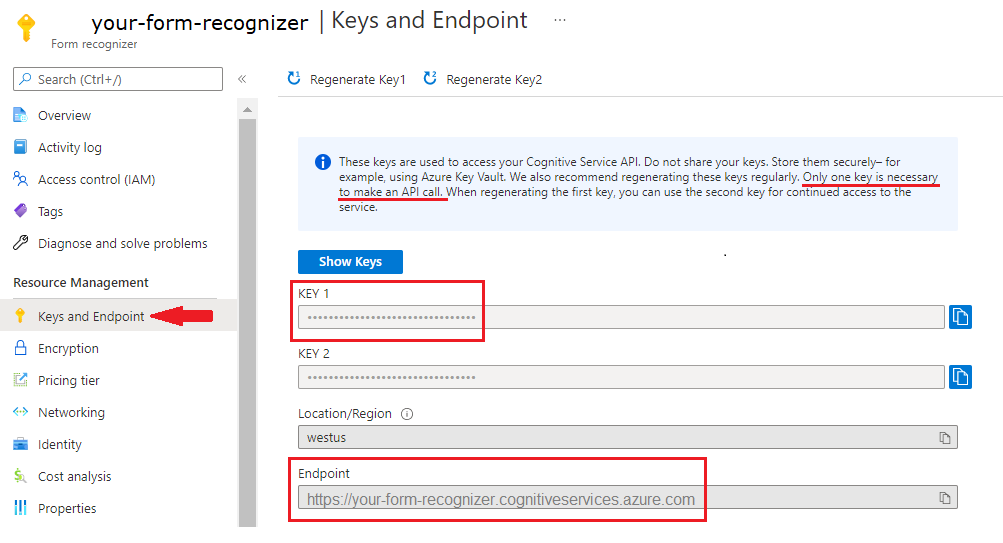

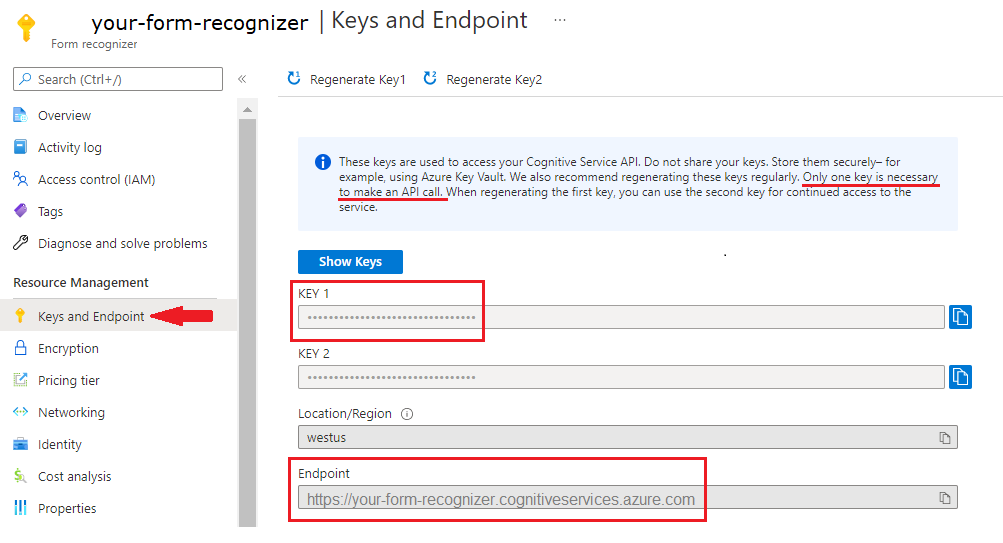

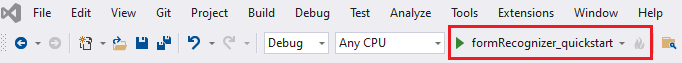

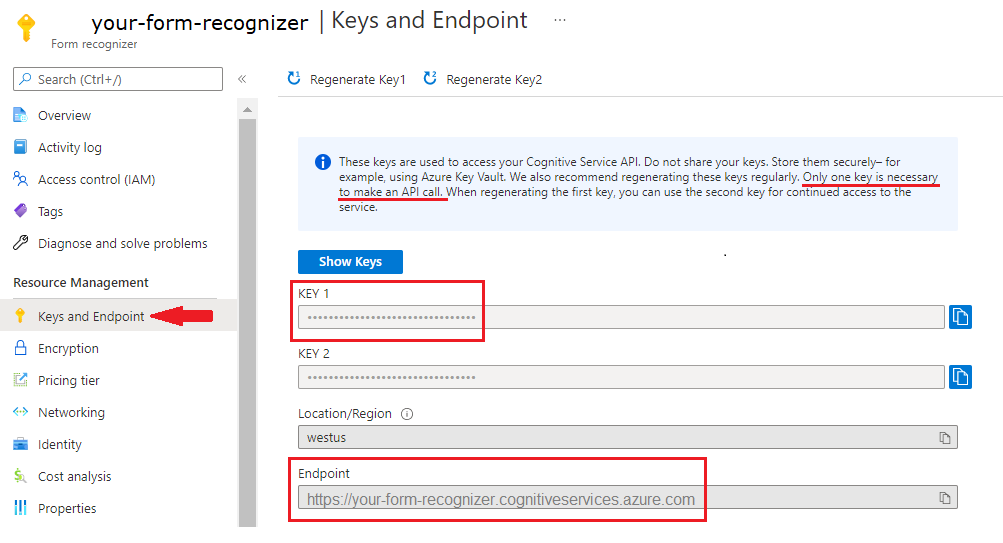

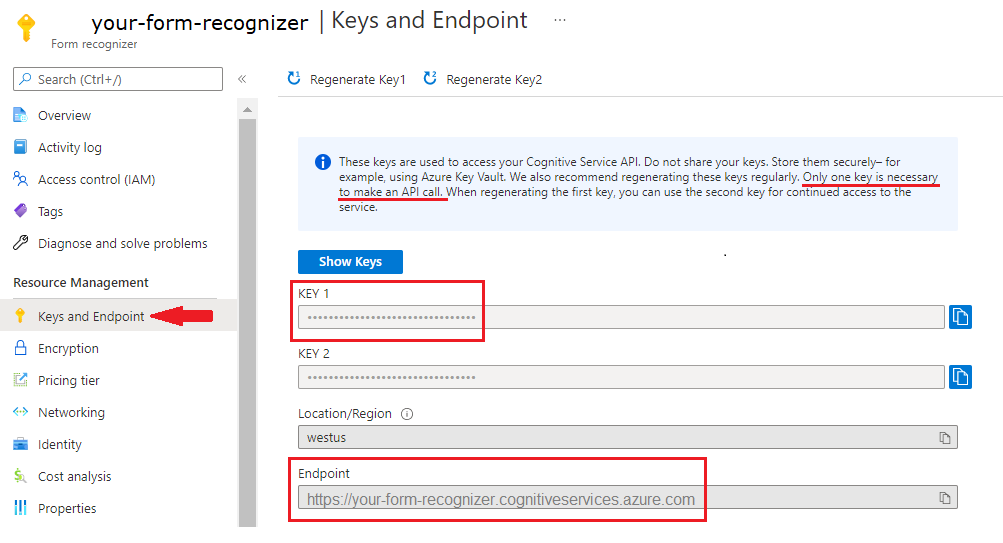

After your resource deploys, select Go to resource. You need the key and endpoint from the resource you create to connect your application to the Document Intelligence API. You paste your key and endpoint into the code later in the quickstart:

An Azure AI services or Form Recognizer resource. Once you have your Azure subscription, create a single-service or Azure AI multi-service resource, in the Azure portal, to get your key and endpoint.

You can use the free pricing tier (F0) to try the service, and upgrade later to a paid tier for production.

Tip

Create an Azure AI services resource if you plan to access multiple Azure AI services under a single endpoint/key. For Form Recognizer access only, create a Form Recognizer resource. Please note that you'll need a single-service resource if you intend to use Microsoft Entra authentication.

After your resource deploys, select Go to resource. You need the key and endpoint from the resource you create to connect your application to the Form Recognizer API. You paste your key and endpoint into the code later in the quickstart:

Set up

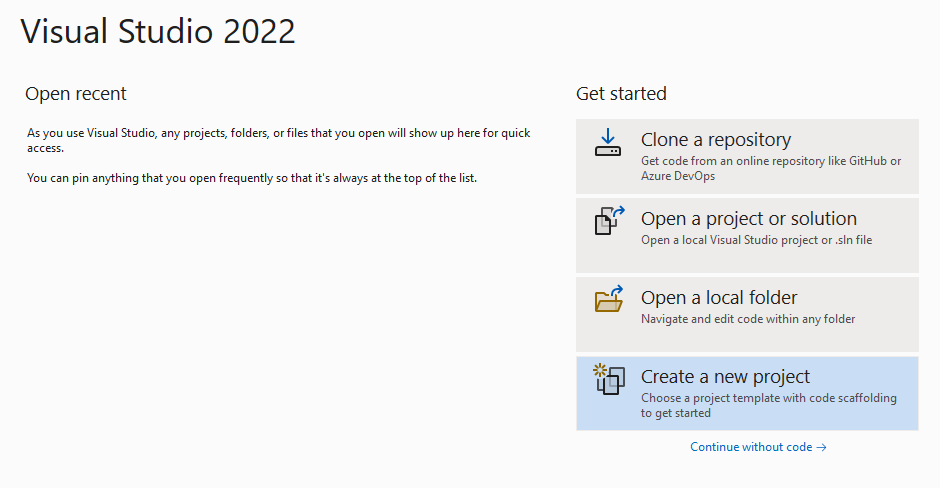

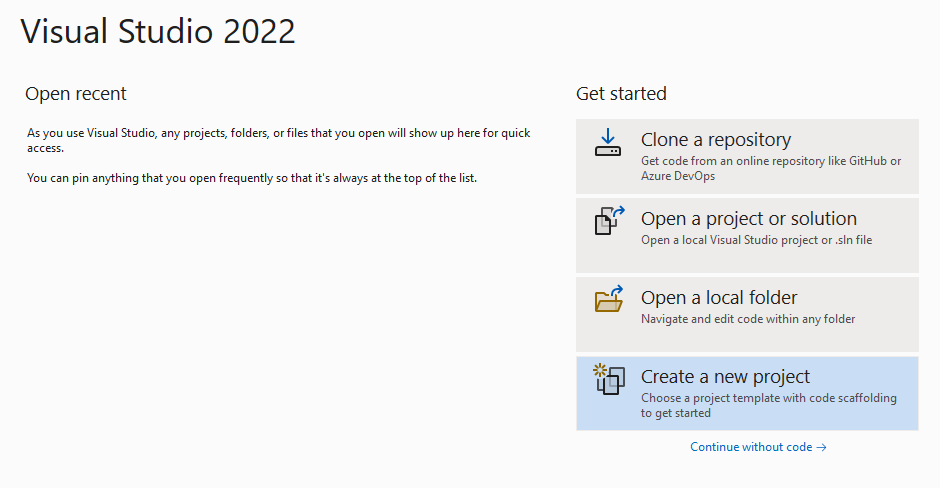

Start Visual Studio.

On the start page, choose Create a new project.

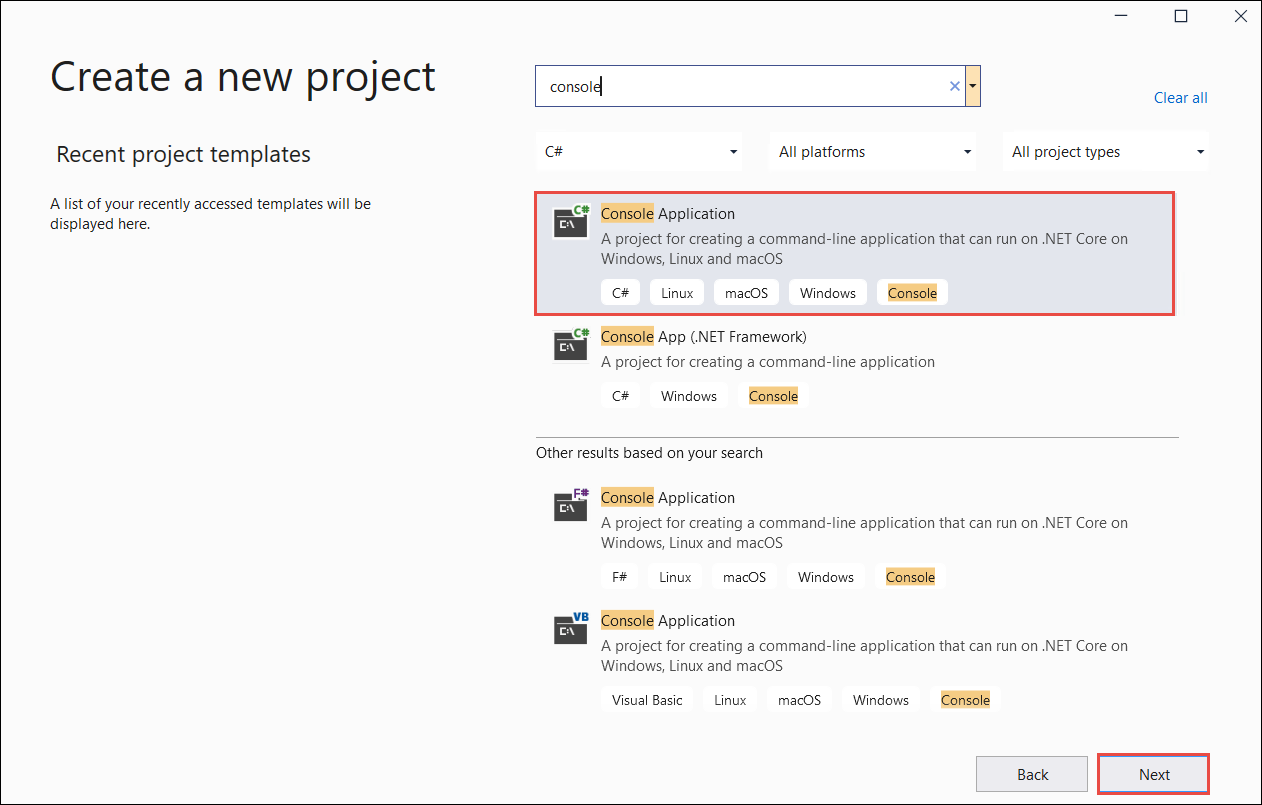

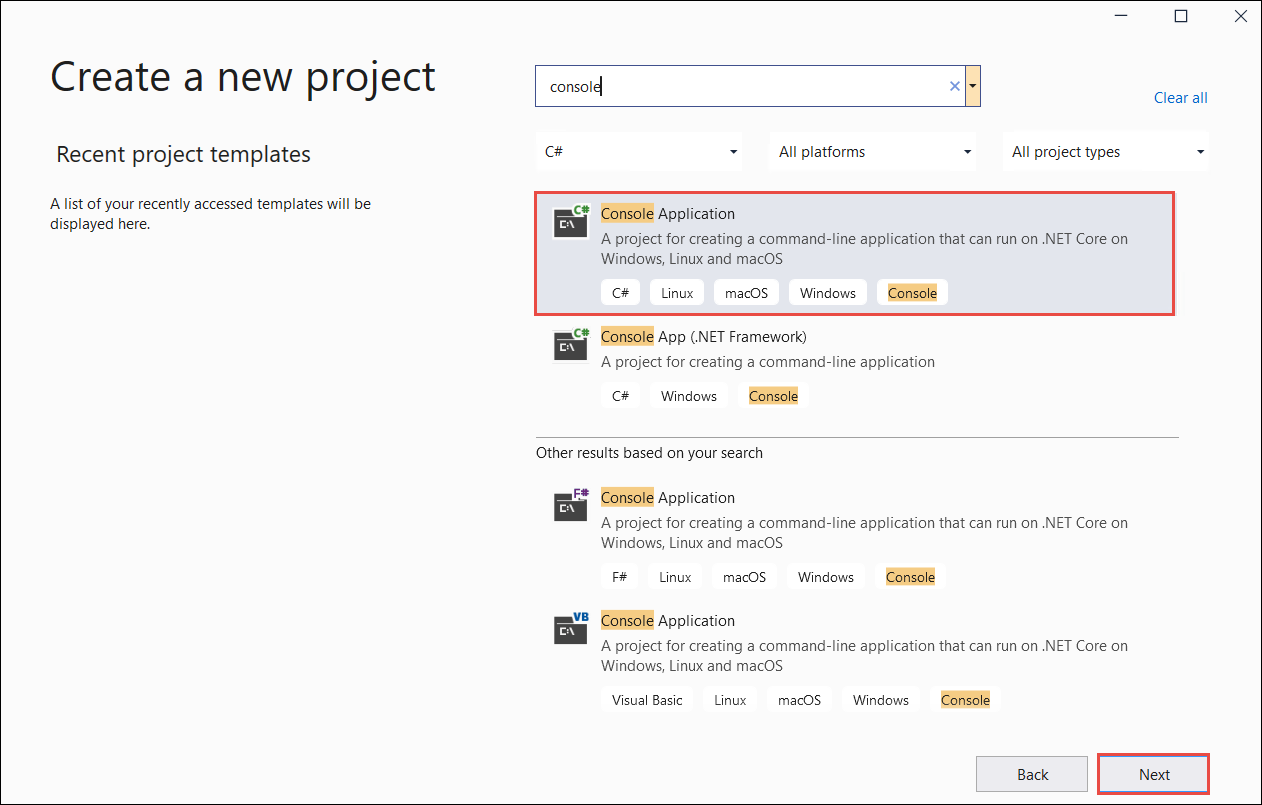

On the Create a new project page, enter console in the search box. Choose the Console Application template, then choose Next.

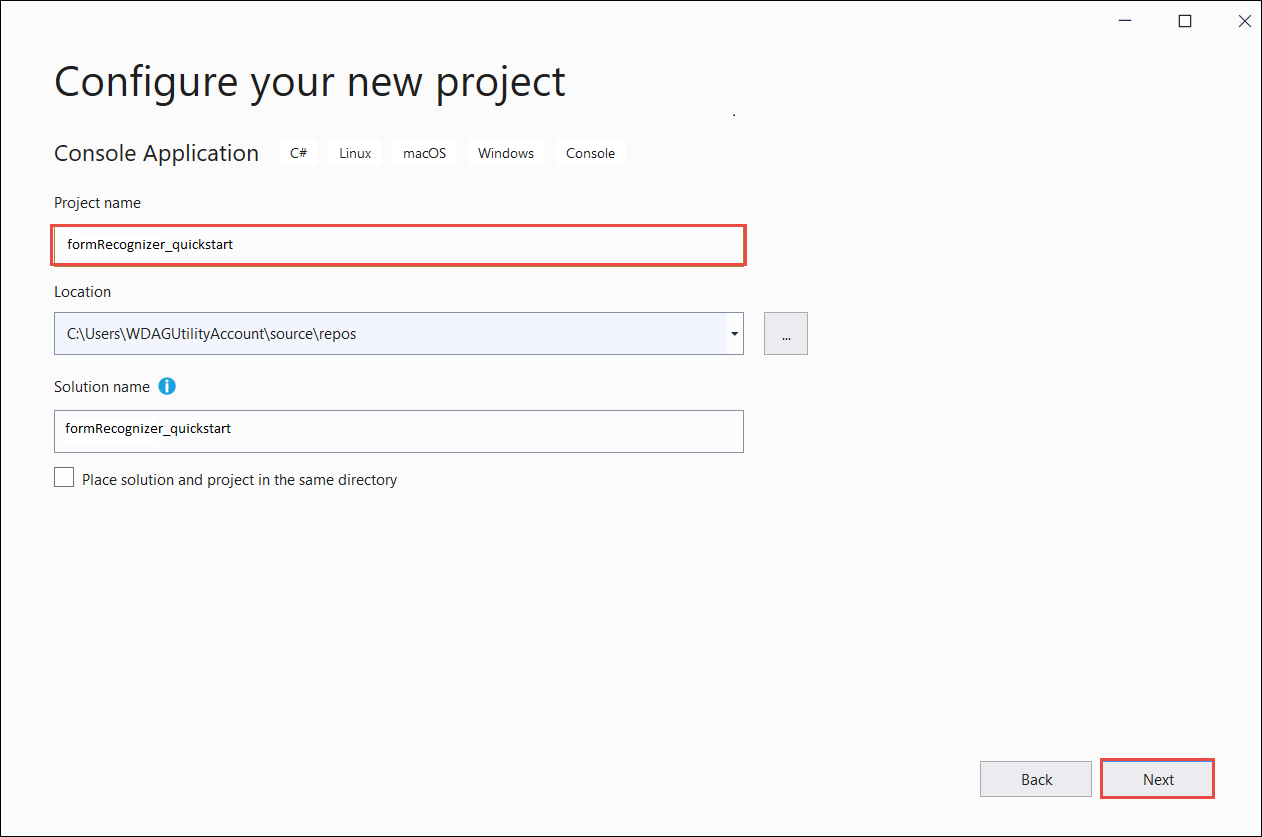

- In the Configure your new project dialog window, enter doc_intel_quickstart in the Project name box. Then choose Next.

- In the Configure your new project dialog window, enter form_recognizer_quickstart in the Project name box. Then choose Next.

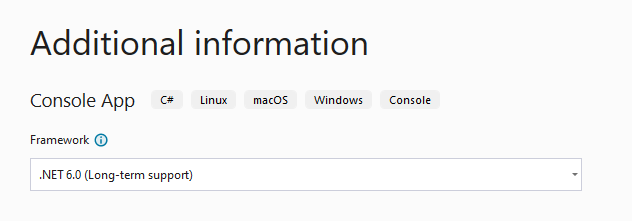

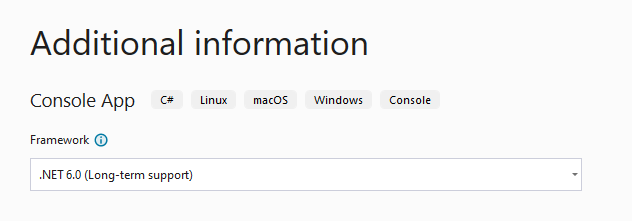

In the Additional information dialog window, select .NET 6.0 (Long-term support), and then select Create.

Install the client library with NuGet

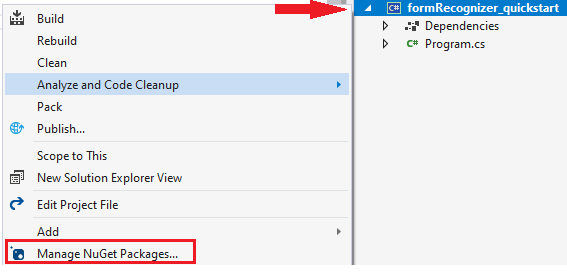

Right-click on your doc_intel_quickstart project and select Manage NuGet Packages...

Select the Browse tab and type Azure.AI.DocumentIntelligence. Select the Include prerelease checkbox and select version 1.0.0-beta.1 from the dropdown menu.

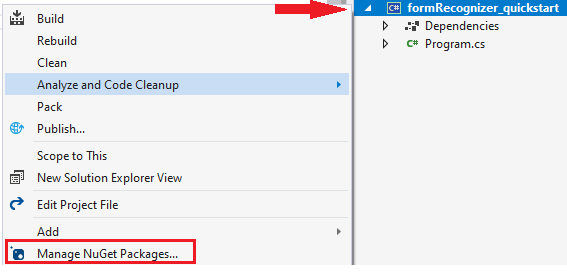

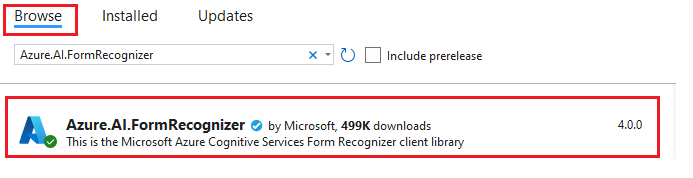

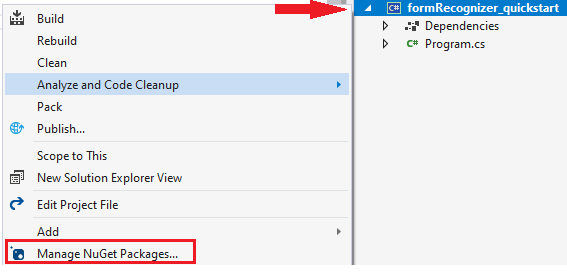

Right-click on your form_recognizer_quickstart project and select Manage NuGet Packages...

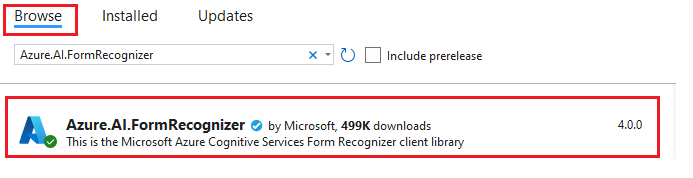

Select the Browse tab and type Azure.AI.FormRecognizer. Select version 4.1.0 from the dropdown menu.

Right-click on your form_recognizer_quickstart project and select Manage NuGet Packages...

Select the Browse tab and type Azure.AI.FormRecognizer. Select version 4.0.0 from the dropdown menu.

Build your application

To interact with the Document Intelligence service, you need to create an instance of the DocumentIntelligenceClient class. To do so, you create an AzureKeyCredential with your key from the Azure portal and a DocumentIntelligenceClient instance with the AzureKeyCredential and your Document Intelligence endpoint.

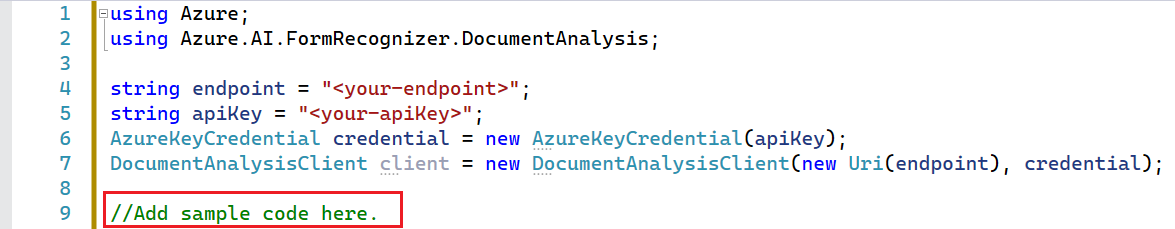

To interact with the Form Recognizer service, you need to create an instance of the DocumentAnalysisClient class. To do so, you create an AzureKeyCredential with your key from the Azure portal and a DocumentAnalysisClient instance with the AzureKeyCredential and your Form Recognizer endpoint.

Note

- Starting with .NET 6, new projects using the console template generate a new program style that differs from previous versions.

- The new output uses recent C# features that simplify the code you need to write.

- When you use the newer version, you only need to write the body of the Main method. You don't need to include top-level statements, global using directives, or implicit using directives.

- For more information, see New C# templates generate top-level statements.

Open the Program.cs file.

Delete the pre-existing code, including the line Console.WriteLine("Hello World!"), and select one of the following code samples to copy and paste into your application's Program.cs file:

Important

Remember to remove the key from your code when you're done, and never post it publicly. For production, use a secure way of storing and accessing your credentials like Azure Key Vault. For more information, see Azure AI services security.
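One way to keep the key out of your source file while you work through the samples is to read it from environment variables. The following is a minimal sketch, not part of the official samples. The variable names `DOCUMENTINTELLIGENCE_ENDPOINT` and `DOCUMENTINTELLIGENCE_API_KEY` and the `ReadSetting` helper are assumptions made for illustration; use whatever names fit your environment.

```csharp
using System;

// Hypothetical variable names, chosen for this sketch only.
const string EndpointVariable = "DOCUMENTINTELLIGENCE_ENDPOINT";
const string KeyVariable = "DOCUMENTINTELLIGENCE_API_KEY";

// Returns the trimmed value of an environment variable, or null if it is unset or blank.
static string? ReadSetting(string name)
{
    string? value = Environment.GetEnvironmentVariable(name);
    return string.IsNullOrWhiteSpace(value) ? null : value.Trim();
}

string? endpoint = ReadSetting(EndpointVariable);
string? key = ReadSetting(KeyVariable);

if (endpoint is null || key is null)
{
    Console.WriteLine($"Set {EndpointVariable} and {KeyVariable} before running the samples.");
}
else
{
    // Never print the key itself; its length is enough to confirm it was read.
    Console.WriteLine($"Endpoint: {endpoint}");
    Console.WriteLine($"Key loaded ({key.Length} characters).");
}
```

If both values are present, you can pass `endpoint` and `key` to the client constructor in place of the hard-coded placeholder strings. For production, a managed secret store such as Azure Key Vault remains the recommended option.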

Layout model

Extract text, selection marks, text styles, table structures, and bounding region coordinates from documents.

- For this example, you'll need a document file from a URI. You can use our sample document for this quickstart.

- We've added the file URI value to the Uri fileUri variable at the top of the script.

- To extract the layout from a given file at a URI, use the AnalyzeDocumentAsync method (AnalyzeDocumentFromUriAsync in the Form Recognizer samples) and pass prebuilt-layout as the model ID. The returned value is an AnalyzeResult object containing data from the submitted document.

Add the following code sample to the Program.cs file. Make sure you update the key and endpoint variables with values from your Azure portal Document Intelligence instance:

using Azure;

using Azure.AI.DocumentIntelligence;

//set `<your-endpoint>` and `<your-key>` variables with the values from the Azure portal to create your `AzureKeyCredential` and `DocumentIntelligenceClient` instance

string endpoint = "<your-endpoint>";

string key = "<your-key>";

AzureKeyCredential credential = new AzureKeyCredential(key);

DocumentIntelligenceClient client = new DocumentIntelligenceClient(new Uri(endpoint), credential);

//sample document

Uri fileUri = new Uri ("https://raw.githubusercontent.com/Azure-Samples/cognitive-services-REST-api-samples/master/curl/form-recognizer/sample-layout.pdf");

Operation<AnalyzeResult> operation = await client.AnalyzeDocumentAsync(WaitUntil.Completed, "prebuilt-layout", fileUri);

AnalyzeResult result = operation.Value;

foreach (DocumentPage page in result.Pages)

{

Console.WriteLine($"Document Page {page.PageNumber} has {page.Lines.Count} line(s), {page.Words.Count} word(s)," +

$" and {page.SelectionMarks.Count} selection mark(s).");

for (int i = 0; i < page.Lines.Count; i++)

{

DocumentLine line = page.Lines[i];

Console.WriteLine($" Line {i}:");

Console.WriteLine($" Content: '{line.Content}'");

Console.Write(" Bounding polygon, with points ordered clockwise:");

for (int j = 0; j < line.Polygon.Count; j += 2)

{

Console.Write($" ({line.Polygon[j]}, {line.Polygon[j + 1]})");

}

Console.WriteLine();

}

for (int i = 0; i < page.SelectionMarks.Count; i++)

{

DocumentSelectionMark selectionMark = page.SelectionMarks[i];

Console.WriteLine($" Selection Mark {i} is {selectionMark.State}.");

Console.WriteLine($" State: {selectionMark.State}");

Console.Write(" Bounding polygon, with points ordered clockwise:");

for (int j = 0; j < selectionMark.Polygon.Count; j += 2)

{

Console.Write($" ({selectionMark.Polygon[j]}, {selectionMark.Polygon[j + 1]})");

}

Console.WriteLine();

}

}

for (int i = 0; i < result.Paragraphs.Count; i++)

{

DocumentParagraph paragraph = result.Paragraphs[i];

Console.WriteLine($"Paragraph {i}:");

Console.WriteLine($" Content: {paragraph.Content}");

if (paragraph.Role != null)

{

Console.WriteLine($" Role: {paragraph.Role}");

}

}

foreach (DocumentStyle style in result.Styles)

{

// Check the style and style confidence to see if text is handwritten.

// Note that value '0.8' is used as an example.

bool isHandwritten = style.IsHandwritten.HasValue && style.IsHandwritten == true;

if (isHandwritten && style.Confidence > 0.8)

{

Console.WriteLine($"Handwritten content found:");

foreach (DocumentSpan span in style.Spans)

{

var handwrittenContent = result.Content.Substring(span.Offset, span.Length);

Console.WriteLine($" {handwrittenContent}");

}

}

}

for (int i = 0; i < result.Tables.Count; i++)

{

DocumentTable table = result.Tables[i];

Console.WriteLine($"Table {i} has {table.RowCount} rows and {table.ColumnCount} columns.");

foreach (DocumentTableCell cell in table.Cells)

{

Console.WriteLine($" Cell ({cell.RowIndex}, {cell.ColumnIndex}) is a '{cell.Kind}' with content: {cell.Content}");

}

}
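The line loop in the sample above reads `Polygon` two values at a time, which suggests a flat list of alternating x and y coordinates. The following sketch shows that pairing in isolation, without calling the service. The `ToPoints` helper and the coordinate values are hypothetical and only illustrate the indexing.

```csharp
using System;
using System.Collections.Generic;

// Pairs a flat coordinate list [x0, y0, x1, y1, ...] into (X, Y) points.
static List<(float X, float Y)> ToPoints(IReadOnlyList<float> polygon)
{
    if (polygon.Count % 2 != 0)
    {
        throw new ArgumentException("Expected an even number of coordinates.", nameof(polygon));
    }

    var points = new List<(float X, float Y)>(polygon.Count / 2);
    for (int j = 0; j < polygon.Count; j += 2)
    {
        // Index j holds x and index j + 1 holds y, so step by two.
        points.Add((polygon[j], polygon[j + 1]));
    }
    return points;
}

// Illustrative values only, not taken from a real analysis result.
float[] sample = { 1.0f, 2.0f, 3.0f, 2.0f, 3.0f, 4.0f, 1.0f, 4.0f };
foreach (var (x, y) in ToPoints(sample))
{
    Console.WriteLine($"({x}, {y})");
}
```

Stepping by one instead of two while reading index `j + 1` would run past the end of the list on the final iteration, so any loop over a flat polygon needs the same stride.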

Run your application

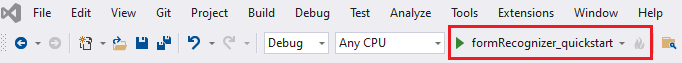

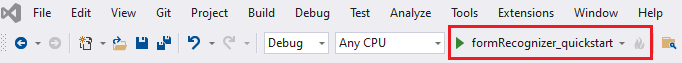

Once you add a code sample to your application, choose the green Start button next to doc_intel_quickstart to build and run your program, or press F5.

Add the following code sample to the Program.cs file. Make sure you update the key and endpoint variables with values from your Azure portal Form Recognizer instance:

using Azure;

using Azure.AI.FormRecognizer.DocumentAnalysis;

//set `<your-endpoint>` and `<your-key>` variables with the values from the Azure portal to create your `AzureKeyCredential` and `DocumentAnalysisClient` instance

string endpoint = "<your-endpoint>";

string key = "<your-key>";

AzureKeyCredential credential = new AzureKeyCredential(key);

DocumentAnalysisClient client = new DocumentAnalysisClient(new Uri(endpoint), credential);

//sample document

Uri fileUri = new Uri ("https://raw.githubusercontent.com/Azure-Samples/cognitive-services-REST-api-samples/master/curl/form-recognizer/sample-layout.pdf");

AnalyzeDocumentOperation operation = await client.AnalyzeDocumentFromUriAsync(WaitUntil.Completed, "prebuilt-layout", fileUri);

AnalyzeResult result = operation.Value;

foreach (DocumentPage page in result.Pages)

{

Console.WriteLine($"Document Page {page.PageNumber} has {page.Lines.Count} line(s), {page.Words.Count} word(s),");

Console.WriteLine($"and {page.SelectionMarks.Count} selection mark(s).");

for (int i = 0; i < page.Lines.Count; i++)

{

DocumentLine line = page.Lines[i];

Console.WriteLine($" Line {i} has content: '{line.Content}'.");

Console.WriteLine($" Its bounding box is:");

Console.WriteLine($" Upper left => X: {line.BoundingPolygon[0].X}, Y= {line.BoundingPolygon[0].Y}");

Console.WriteLine($" Upper right => X: {line.BoundingPolygon[1].X}, Y= {line.BoundingPolygon[1].Y}");

Console.WriteLine($" Lower right => X: {line.BoundingPolygon[2].X}, Y= {line.BoundingPolygon[2].Y}");

Console.WriteLine($" Lower left => X: {line.BoundingPolygon[3].X}, Y= {line.BoundingPolygon[3].Y}");

}

for (int i = 0; i < page.SelectionMarks.Count; i++)

{

DocumentSelectionMark selectionMark = page.SelectionMarks[i];

Console.WriteLine($" Selection Mark {i} is {selectionMark.State}.");

Console.WriteLine($" Its bounding box is:");

Console.WriteLine($" Upper left => X: {selectionMark.BoundingPolygon[0].X}, Y= {selectionMark.BoundingPolygon[0].Y}");

Console.WriteLine($" Upper right => X: {selectionMark.BoundingPolygon[1].X}, Y= {selectionMark.BoundingPolygon[1].Y}");

Console.WriteLine($" Lower right => X: {selectionMark.BoundingPolygon[2].X}, Y= {selectionMark.BoundingPolygon[2].Y}");

Console.WriteLine($" Lower left => X: {selectionMark.BoundingPolygon[3].X}, Y= {selectionMark.BoundingPolygon[3].Y}");

}

}

foreach (DocumentStyle style in result.Styles)

{

// Check the style and style confidence to see if text is handwritten.

// Note that value '0.8' is used as an example.

bool isHandwritten = style.IsHandwritten.HasValue && style.IsHandwritten == true;

if (isHandwritten && style.Confidence > 0.8)

{

Console.WriteLine($"Handwritten content found:");

foreach (DocumentSpan span in style.Spans)

{

Console.WriteLine($" Content: {result.Content.Substring(span.Index, span.Length)}");

}

}

}

Console.WriteLine("The following tables were extracted:");

for (int i = 0; i < result.Tables.Count; i++)

{

DocumentTable table = result.Tables[i];

Console.WriteLine($" Table {i} has {table.RowCount} rows and {table.ColumnCount} columns.");

foreach (DocumentTableCell cell in table.Cells)

{

Console.WriteLine($" Cell ({cell.RowIndex}, {cell.ColumnIndex}) has kind '{cell.Kind}' and content: '{cell.Content}'.");

}

}

Run your application

Once you add a code sample to your application, choose the green Start button next to form_recognizer_quickstart to build and run your program, or press F5.

Layout model output

Here's a snippet of the expected output:

Document Page 1 has 69 line(s), 425 word(s), and 15 selection mark(s).

Line 0 has content: 'UNITED STATES'.

Its bounding box is:

Upper left => X: 3.4915, Y= 0.6828

Upper right => X: 5.0116, Y= 0.6828

Lower right => X: 5.0116, Y= 0.8265

Lower left => X: 3.4915, Y= 0.8265

Line 1 has content: 'SECURITIES AND EXCHANGE COMMISSION'.

Its bounding box is:

Upper left => X: 2.1937, Y= 0.9061

Upper right => X: 6.297, Y= 0.9061

Lower right => X: 6.297, Y= 1.0498

Lower left => X: 2.1937, Y= 1.0498

To view the entire output, visit the Azure samples repository on GitHub to view the layout model output.
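The handwritten-content check in the Form Recognizer samples slices `result.Content` using each span's `Index` and `Length`. The following sketch shows that slicing on a plain string, with a bounds check added. The `SliceSpan` helper is hypothetical, and the content string is assembled from the two lines in the output snippet purely for illustration.

```csharp
using System;

// Returns the part of content described by a start index and length,
// or an empty string if the span does not fit inside the content.
static string SliceSpan(string content, int index, int length)
{
    if (index < 0 || length < 0 || index > content.Length - length)
    {
        return string.Empty;
    }
    return content.Substring(index, length);
}

// Illustrative content; a real result.Content holds the full document text.
string content = "UNITED STATES SECURITIES AND EXCHANGE COMMISSION";

Console.WriteLine(SliceSpan(content, 0, 13));  // prints "UNITED STATES"
```

Calling `Substring` directly, as the samples do, throws an `ArgumentOutOfRangeException` when a span falls outside the string, so a guard like this can be useful if you combine spans with text from another source.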

Add the following code sample to the Program.cs file. Make sure you update the key and endpoint variables with values from your Azure portal Form Recognizer instance:

using Azure;

using Azure.AI.FormRecognizer.DocumentAnalysis;

//set `<your-endpoint>` and `<your-key>` variables with the values from the Azure portal to create your `AzureKeyCredential` and `DocumentAnalysisClient` instance

string endpoint = "<your-endpoint>";

string key = "<your-key>";

AzureKeyCredential credential = new AzureKeyCredential(key);

DocumentAnalysisClient client = new DocumentAnalysisClient(new Uri(endpoint), credential);

//sample document

Uri fileUri = new Uri ("https://raw.githubusercontent.com/Azure-Samples/cognitive-services-REST-api-samples/master/curl/form-recognizer/sample-layout.pdf");

AnalyzeDocumentOperation operation = await client.AnalyzeDocumentFromUriAsync(WaitUntil.Completed, "prebuilt-layout", fileUri);

AnalyzeResult result = operation.Value;

foreach (DocumentPage page in result.Pages)

{

Console.WriteLine($"Document Page {page.PageNumber} has {page.Lines.Count} line(s), {page.Words.Count} word(s),");

Console.WriteLine($"and {page.SelectionMarks.Count} selection mark(s).");

for (int i = 0; i < page.Lines.Count; i++)

{

DocumentLine line = page.Lines[i];

Console.WriteLine($" Line {i} has content: '{line.Content}'.");

Console.WriteLine($" Its bounding polygon (points ordered clockwise):");

for (int j = 0; j < line.BoundingPolygon.Count; j++)

{

Console.WriteLine($" Point {j} => X: {line.BoundingPolygon[j].X}, Y: {line.BoundingPolygon[j].Y}");

}

}

for (int i = 0; i < page.SelectionMarks.Count; i++)

{

DocumentSelectionMark selectionMark = page.SelectionMarks[i];

Console.WriteLine($" Selection Mark {i} is {selectionMark.State}.");

Console.WriteLine($" Its bounding polygon (points ordered clockwise):");

for (int j = 0; j < selectionMark.BoundingPolygon.Count; j++)

{

Console.WriteLine($" Point {j} => X: {selectionMark.BoundingPolygon[j].X}, Y: {selectionMark.BoundingPolygon[j].Y}");

}

}

}

Console.WriteLine("Paragraphs:");

foreach (DocumentParagraph paragraph in result.Paragraphs)

{

Console.WriteLine($" Paragraph content: {paragraph.Content}");

if (paragraph.Role != null)

{

Console.WriteLine($" Role: {paragraph.Role}");

}

}

foreach (DocumentStyle style in result.Styles)

{

// Check the style and style confidence to see if text is handwritten.

// Note that value '0.8' is used as an example.

bool isHandwritten = style.IsHandwritten.HasValue && style.IsHandwritten == true;

if (isHandwritten && style.Confidence > 0.8)

{

Console.WriteLine($"Handwritten content found:");

foreach (DocumentSpan span in style.Spans)

{

Console.WriteLine($" Content: {result.Content.Substring(span.Index, span.Length)}");

}

}

}

Console.WriteLine("The following tables were extracted:");

for (int i = 0; i < result.Tables.Count; i++)

{

DocumentTable table = result.Tables[i];

Console.WriteLine($" Table {i} has {table.RowCount} rows and {table.ColumnCount} columns.");

foreach (DocumentTableCell cell in table.Cells)

{

Console.WriteLine($" Cell ({cell.RowIndex}, {cell.ColumnIndex}) has kind '{cell.Kind}' and content: '{cell.Content}'.");

}

}

Extract the layout of a document from a file stream

To extract the layout from a file stream, use the AnalyzeDocumentAsync method and pass prebuilt-layout as the model ID. The returned value is an AnalyzeResult object containing data about the submitted document.

string filePath = "<filePath>";

using var stream = new FileStream(filePath, FileMode.Open);

AnalyzeDocumentOperation operation = await client.AnalyzeDocumentAsync(WaitUntil.Completed, "prebuilt-layout", stream);

AnalyzeResult result = operation.Value;

foreach (DocumentPage page in result.Pages)

{

Console.WriteLine($"Document Page {page.PageNumber} has {page.Lines.Count} line(s), {page.Words.Count} word(s),");

Console.WriteLine($"and {page.SelectionMarks.Count} selection mark(s).");

for (int i = 0; i < page.Lines.Count; i++)

{

DocumentLine line = page.Lines[i];

Console.WriteLine($" Line {i} has content: '{line.Content}'.");

Console.WriteLine($" Its bounding polygon (points ordered clockwise):");

for (int j = 0; j < line.BoundingPolygon.Count; j++)

{

Console.WriteLine($" Point {j} => X: {line.BoundingPolygon[j].X}, Y: {line.BoundingPolygon[j].Y}");

}

}

for (int i = 0; i < page.SelectionMarks.Count; i++)

{

DocumentSelectionMark selectionMark = page.SelectionMarks[i];

Console.WriteLine($" Selection Mark {i} is {selectionMark.State}.");

Console.WriteLine($" Its bounding polygon (points ordered clockwise):");

for (int j = 0; j < selectionMark.BoundingPolygon.Count; j++)

{

Console.WriteLine($" Point {j} => X: {selectionMark.BoundingPolygon[j].X}, Y: {selectionMark.BoundingPolygon[j].Y}");

}

}

}

Console.WriteLine("Paragraphs:");

foreach (DocumentParagraph paragraph in result.Paragraphs)

{

Console.WriteLine($" Paragraph content: {paragraph.Content}");

if (paragraph.Role != null)

{

Console.WriteLine($" Role: {paragraph.Role}");

}

}

foreach (DocumentStyle style in result.Styles)

{

// Check the style and style confidence to see if text is handwritten.

// Note that value '0.8' is used as an example.

bool isHandwritten = style.IsHandwritten.HasValue && style.IsHandwritten == true;

if (isHandwritten && style.Confidence > 0.8)

{

Console.WriteLine($"Handwritten content found:");

foreach (DocumentSpan span in style.Spans)

{

Console.WriteLine($" Content: {result.Content.Substring(span.Index, span.Length)}");

}

}

}

Console.WriteLine("The following tables were extracted:");

for (int i = 0; i < result.Tables.Count; i++)

{

DocumentTable table = result.Tables[i];

Console.WriteLine($" Table {i} has {table.RowCount} rows and {table.ColumnCount} columns.");

foreach (DocumentTableCell cell in table.Cells)

{

Console.WriteLine($" Cell ({cell.RowIndex}, {cell.ColumnIndex}) has kind '{cell.Kind}' and content: '{cell.Content}'.");

}

}

Run your application

Once you add a code sample to your application, choose the green Start button next to form_recognizer_quickstart to build and run your program, or press F5.
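The file stream sample opens the document with `new FileStream(filePath, FileMode.Open)`, which requests read/write access by default. Because analysis only needs to read the file, you might prefer a read-only stream. The following is a minimal sketch of that alternative. The `OpenDocument` helper is hypothetical, and a temporary file stands in for a real document.

```csharp
using System;
using System.IO;

// Opens a document for reading only, with a clear error if the path is wrong.
static Stream OpenDocument(string filePath)
{
    if (!File.Exists(filePath))
    {
        throw new FileNotFoundException("Document not found.", filePath);
    }
    return File.OpenRead(filePath);
}

// Demo with a temporary file standing in for a real document.
string tempPath = Path.GetTempFileName();
File.WriteAllBytes(tempPath, new byte[] { 0x25, 0x50, 0x44, 0x46 }); // the bytes of "%PDF"

using (Stream stream = OpenDocument(tempPath))
{
    Console.WriteLine($"Opened {stream.Length} bytes; CanWrite = {stream.CanWrite}");
}

File.Delete(tempPath);
```

The resulting stream can be passed to `AnalyzeDocumentAsync` in place of the `FileStream` from the sample. A read-only stream also works for files that your process isn't allowed to modify.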

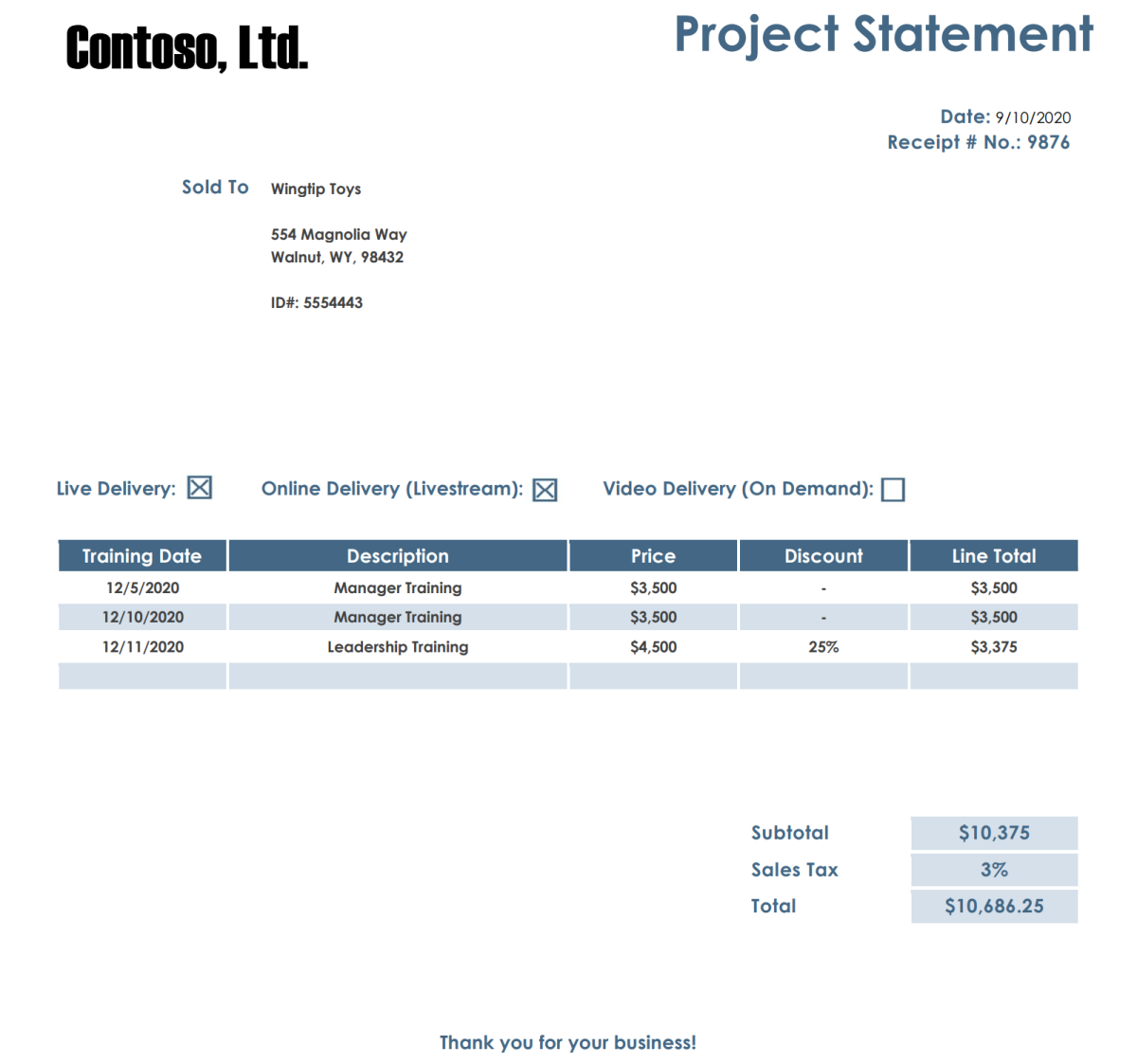

Prebuilt model

Analyze and extract common fields from specific document types using a prebuilt model. In this example, we analyze an invoice using the prebuilt-invoice model.

Tip

You aren't limited to invoices—there are several prebuilt models to choose from, each of which has its own set of supported fields. The model to use for the analyze operation depends on the type of document to be analyzed. See model data extraction.

- Analyze an invoice using the prebuilt-invoice model. You can use our sample invoice document for this quickstart.

- We've added the file URI value to the Uri invoiceUri variable at the top of the Program.cs file.

- To analyze a given file at a URI, use the AnalyzeDocumentAsync method (AnalyzeDocumentFromUriAsync in the Form Recognizer samples) and pass prebuilt-invoice as the model ID. The returned value is an AnalyzeResult object containing data from the submitted document.

- For simplicity, not all the key-value pairs that the service returns are shown here. To see the list of all supported fields and corresponding types, see our Invoice concept page.

Add the following code sample to your Program.cs file. Make sure you update the key and endpoint variables with values from your Azure portal Document Intelligence instance:

using Azure;

using Azure.AI.DocumentIntelligence;

//set `<your-endpoint>` and `<your-key>` variables with the values from the Azure portal to create your `AzureKeyCredential` and `DocumentIntelligenceClient` instance

string endpoint = "<your-endpoint>";

string key = "<your-key>";

AzureKeyCredential credential = new AzureKeyCredential(key);

DocumentIntelligenceClient client = new DocumentIntelligenceClient(new Uri(endpoint), credential);

//sample invoice document

Uri invoiceUri = new Uri ("https://raw.githubusercontent.com/Azure-Samples/cognitive-services-REST-api-samples/master/curl/form-recognizer/sample-invoice.pdf");

Operation<AnalyzeResult> operation = await client.AnalyzeDocumentAsync(WaitUntil.Completed, "prebuilt-invoice", invoiceUri);

AnalyzeResult result = operation.Value;

for (int i = 0; i < result.Documents.Count; i++)

{

Console.WriteLine($"Document {i}:");

AnalyzedDocument document = result.Documents[i];

if (document.Fields.TryGetValue("VendorName", out DocumentField vendorNameField)

&& vendorNameField.Type == DocumentFieldType.String)

{

string vendorName = vendorNameField.ValueString;

Console.WriteLine($"Vendor Name: '{vendorName}', with confidence {vendorNameField.Confidence}");

}

if (document.Fields.TryGetValue("CustomerName", out DocumentField customerNameField)

&& customerNameField.Type == DocumentFieldType.String)

{

string customerName = customerNameField.ValueString;

Console.WriteLine($"Customer Name: '{customerName}', with confidence {customerNameField.Confidence}");

}

if (document.Fields.TryGetValue("Items", out DocumentField itemsField)

&& itemsField.Type == DocumentFieldType.Array)

{

foreach (DocumentField itemField in itemsField.ValueArray)

{

Console.WriteLine("Item:");

if (itemField.Type == DocumentFieldType.Object)

{

IReadOnlyDictionary<string, DocumentField> itemFields = itemField.ValueObject;

if (itemFields.TryGetValue("Description", out DocumentField itemDescriptionField)

&& itemDescriptionField.Type == DocumentFieldType.String)

{

string itemDescription = itemDescriptionField.ValueString;

Console.WriteLine($" Description: '{itemDescription}', with confidence {itemDescriptionField.Confidence}");

}

if (itemFields.TryGetValue("Amount", out DocumentField itemAmountField)

&& itemAmountField.Type == DocumentFieldType.Currency)

{

CurrencyValue itemAmount = itemAmountField.ValueCurrency;

Console.WriteLine($" Amount: '{itemAmount.CurrencySymbol}{itemAmount.Amount}', with confidence {itemAmountField.Confidence}");

}

}

}

}

if (document.Fields.TryGetValue("SubTotal", out DocumentField subTotalField)

&& subTotalField.Type == DocumentFieldType.Currency)

{

CurrencyValue subTotal = subTotalField.ValueCurrency;

Console.WriteLine($"Sub Total: '{subTotal.CurrencySymbol}{subTotal.Amount}', with confidence {subTotalField.Confidence}");

}

if (document.Fields.TryGetValue("TotalTax", out DocumentField totalTaxField)

&& totalTaxField.Type == DocumentFieldType.Currency)

{

CurrencyValue totalTax = totalTaxField.ValueCurrency;

Console.WriteLine($"Total Tax: '{totalTax.CurrencySymbol}{totalTax.Amount}', with confidence {totalTaxField.Confidence}");

}

if (document.Fields.TryGetValue("InvoiceTotal", out DocumentField invoiceTotalField)

&& invoiceTotalField.Type == DocumentFieldType.Currency)

{

CurrencyValue invoiceTotal = invoiceTotalField.ValueCurrency;

Console.WriteLine($"Invoice Total: '{invoiceTotal.CurrencySymbol}{invoiceTotal.Amount}', with confidence {invoiceTotalField.Confidence}");

}

}

Run your application

Once you add a code sample to your application, choose the green Start button next to doc_intel_quickstart to build and run your program, or press F5.

Add the following code sample to your Program.cs file. Make sure you update the key and endpoint variables with values from your Azure portal Form Recognizer instance:

using Azure;

using Azure.AI.FormRecognizer.DocumentAnalysis;

//set `<your-endpoint>` and `<your-key>` variables with the values from the Azure portal to create your `AzureKeyCredential` and `DocumentAnalysisClient` instance

string endpoint = "<your-endpoint>";

string key = "<your-key>";

AzureKeyCredential credential = new AzureKeyCredential(key);

DocumentAnalysisClient client = new DocumentAnalysisClient(new Uri(endpoint), credential);

//sample invoice document

Uri invoiceUri = new Uri ("https://raw.githubusercontent.com/Azure-Samples/cognitive-services-REST-api-samples/master/curl/form-recognizer/sample-invoice.pdf");

AnalyzeDocumentOperation operation = await client.AnalyzeDocumentFromUriAsync(WaitUntil.Completed, "prebuilt-invoice", invoiceUri);

AnalyzeResult result = operation.Value;

for (int i = 0; i < result.Documents.Count; i++)

{

Console.WriteLine($"Document {i}:");

AnalyzedDocument document = result.Documents[i];

if (document.Fields.TryGetValue("VendorName", out DocumentField vendorNameField))

{

if (vendorNameField.FieldType == DocumentFieldType.String)

{

string vendorName = vendorNameField.Value.AsString();

Console.WriteLine($"Vendor Name: '{vendorName}', with confidence {vendorNameField.Confidence}");

}

}

if (document.Fields.TryGetValue("CustomerName", out DocumentField customerNameField))

{

if (customerNameField.FieldType == DocumentFieldType.String)

{

string customerName = customerNameField.Value.AsString();

Console.WriteLine($"Customer Name: '{customerName}', with confidence {customerNameField.Confidence}");

}

}

if (document.Fields.TryGetValue("Items", out DocumentField itemsField))

{

if (itemsField.FieldType == DocumentFieldType.List)

{

foreach (DocumentField itemField in itemsField.Value.AsList())

{

Console.WriteLine("Item:");

if (itemField.FieldType == DocumentFieldType.Dictionary)

{

IReadOnlyDictionary<string, DocumentField> itemFields = itemField.Value.AsDictionary();

if (itemFields.TryGetValue("Description", out DocumentField itemDescriptionField))

{

if (itemDescriptionField.FieldType == DocumentFieldType.String)

{

string itemDescription = itemDescriptionField.Value.AsString();

Console.WriteLine($" Description: '{itemDescription}', with confidence {itemDescriptionField.Confidence}");

}

}

if (itemFields.TryGetValue("Amount", out DocumentField itemAmountField))

{

if (itemAmountField.FieldType == DocumentFieldType.Currency)

{

CurrencyValue itemAmount = itemAmountField.Value.AsCurrency();

Console.WriteLine($" Amount: '{itemAmount.Symbol}{itemAmount.Amount}', with confidence {itemAmountField.Confidence}");

}

}

}

}

}

}

if (document.Fields.TryGetValue("SubTotal", out DocumentField subTotalField))

{

if (subTotalField.FieldType == DocumentFieldType.Currency)

{

CurrencyValue subTotal = subTotalField.Value.AsCurrency();

Console.WriteLine($"Sub Total: '{subTotal.Symbol}{subTotal.Amount}', with confidence {subTotalField.Confidence}");

}

}

if (document.Fields.TryGetValue("TotalTax", out DocumentField totalTaxField))

{

if (totalTaxField.FieldType == DocumentFieldType.Currency)

{

CurrencyValue totalTax = totalTaxField.Value.AsCurrency();

Console.WriteLine($"Total Tax: '{totalTax.Symbol}{totalTax.Amount}', with confidence {totalTaxField.Confidence}");

}

}

if (document.Fields.TryGetValue("InvoiceTotal", out DocumentField invoiceTotalField))

{

if (invoiceTotalField.FieldType == DocumentFieldType.Currency)

{

CurrencyValue invoiceTotal = invoiceTotalField.Value.AsCurrency();

Console.WriteLine($"Invoice Total: '{invoiceTotal.Symbol}{invoiceTotal.Amount}', with confidence {invoiceTotalField.Confidence}");

}

}

}

Run your application

Once you add a code sample to your application, choose the green Start button next to form_recognizer_quickstart to build and run your program, or press F5.

Prebuilt model output

Here's a snippet of the expected output:

Document 0:

Vendor Name: 'CONTOSO LTD.', with confidence 0.962

Customer Name: 'MICROSOFT CORPORATION', with confidence 0.951

Item:

Description: 'Test for 23 fields', with confidence 0.899

Amount: '100', with confidence 0.902

Sub Total: '100', with confidence 0.979

To view the entire output, visit the Azure samples repository on GitHub to view the prebuilt invoice model output.
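Each extracted field in the output carries a confidence score, which you can use to decide which values to check by hand. The following sketch flags low-confidence fields using the names and scores from the snippet above. The `NeedsReview` helper and the 0.9 threshold are assumptions made for illustration, not service recommendations.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Returns the names of fields whose confidence is below the threshold.
static List<string> NeedsReview(IEnumerable<(string Name, double Confidence)> fields, double threshold)
{
    return fields.Where(f => f.Confidence < threshold).Select(f => f.Name).ToList();
}

// Field names and confidences copied from the output snippet above.
var extracted = new (string Name, double Confidence)[]
{
    ("VendorName", 0.962),
    ("CustomerName", 0.951),
    ("Description", 0.899),
    ("Amount", 0.902),
    ("SubTotal", 0.979),
};

// 0.9 is an arbitrary example threshold.
foreach (string name in NeedsReview(extracted, 0.9))
{
    Console.WriteLine($"Review manually: {name}");  // prints "Review manually: Description"
}
```

In a real application, you would build the list from each field's `Confidence` property inside the loops shown in the invoice samples and choose a threshold that suits your documents.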

Add the following code sample to your Program.cs file. Make sure you update the key and endpoint variables with values from your Azure portal Form Recognizer instance:

using Azure;

using Azure.AI.FormRecognizer.DocumentAnalysis;

//set `<your-endpoint>` and `<your-key>` variables with the values from the Azure portal to create your `AzureKeyCredential` and `DocumentAnalysisClient` instance

string endpoint = "<your-endpoint>";

string key = "<your-key>";

AzureKeyCredential credential = new AzureKeyCredential(key);

DocumentAnalysisClient client = new DocumentAnalysisClient(new Uri(endpoint), credential);

//sample invoice document

Uri invoiceUri = new Uri ("https://raw.githubusercontent.com/Azure-Samples/cognitive-services-REST-api-samples/master/curl/form-recognizer/sample-invoice.pdf");

AnalyzeDocumentOperation operation = await client.AnalyzeDocumentFromUriAsync(WaitUntil.Completed, "prebuilt-invoice", invoiceUri);

AnalyzeResult result = operation.Value;

for (int i = 0; i < result.Documents.Count; i++)

{

Console.WriteLine($"Document {i}:");

AnalyzedDocument document = result.Documents[i];

if (document.Fields.TryGetValue("VendorName", out DocumentField vendorNameField))

{

if (vendorNameField.FieldType == DocumentFieldType.String)

{

string vendorName = vendorNameField.Value.AsString();

Console.WriteLine($"Vendor Name: '{vendorName}', with confidence {vendorNameField.Confidence}");

}

}

if (document.Fields.TryGetValue("CustomerName", out DocumentField customerNameField))

{

if (customerNameField.FieldType == DocumentFieldType.String)

{

string customerName = customerNameField.Value.AsString();

Console.WriteLine($"Customer Name: '{customerName}', with confidence {customerNameField.Confidence}");

}

}

if (document.Fields.TryGetValue("Items", out DocumentField itemsField))

{

if (itemsField.FieldType == DocumentFieldType.List)

{

foreach (DocumentField itemField in itemsField.Value.AsList())

{

Console.WriteLine("Item:");

if (itemField.FieldType == DocumentFieldType.Dictionary)

{

IReadOnlyDictionary<string, DocumentField> itemFields = itemField.Value.AsDictionary();

if (itemFields.TryGetValue("Description", out DocumentField itemDescriptionField))

{

if (itemDescriptionField.FieldType == DocumentFieldType.String)

{

string itemDescription = itemDescriptionField.Value.AsString();

Console.WriteLine($" Description: '{itemDescription}', with confidence {itemDescriptionField.Confidence}");

}

}

if (itemFields.TryGetValue("Amount", out DocumentField itemAmountField))

{

if (itemAmountField.FieldType == DocumentFieldType.Currency)

{

CurrencyValue itemAmount = itemAmountField.Value.AsCurrency();

Console.WriteLine($" Amount: '{itemAmount.Symbol}{itemAmount.Amount}', with confidence {itemAmountField.Confidence}");

}

}

}

}

}

}

if (document.Fields.TryGetValue("SubTotal", out DocumentField subTotalField))

{

if (subTotalField.FieldType == DocumentFieldType.Currency)

{

CurrencyValue subTotal = subTotalField.Value.AsCurrency();

Console.WriteLine($"Sub Total: '{subTotal.Symbol}{subTotal.Amount}', with confidence {subTotalField.Confidence}");

}

}

if (document.Fields.TryGetValue("TotalTax", out DocumentField totalTaxField))

{

if (totalTaxField.FieldType == DocumentFieldType.Currency)

{

CurrencyValue totalTax = totalTaxField.Value.AsCurrency();

Console.WriteLine($"Total Tax: '{totalTax.Symbol}{totalTax.Amount}', with confidence {totalTaxField.Confidence}");

}

}

if (document.Fields.TryGetValue("InvoiceTotal", out DocumentField invoiceTotalField))

{

if (invoiceTotalField.FieldType == DocumentFieldType.Currency)

{

CurrencyValue invoiceTotal = invoiceTotalField.Value.AsCurrency();

Console.WriteLine($"Invoice Total: '{invoiceTotal.Symbol}{invoiceTotal.Amount}', with confidence {invoiceTotalField.Confidence}");

}

}

}

Run your application

Once you add a code sample to your application, choose the green Start button next to formRecognizer_quickstart to build and run your program, or press F5.

Client library | SDK reference | REST API reference | Package (Maven) | Samples | Supported REST API version


In this quickstart, use the following features to analyze and extract data and values from forms and documents:

Layout—Analyze and extract tables, lines, words, and selection marks like radio buttons and check boxes in documents, without the need to train a model.

Prebuilt Invoice—Analyze and extract common fields from specific document types using a pretrained model.

Prerequisites

Azure subscription - Create one for free.

The latest version of Visual Studio Code or your preferred IDE. See Java in Visual Studio Code.

Tip

- Visual Studio Code offers a Coding Pack for Java for Windows and macOS. The Coding Pack is a bundle of VS Code, the Java Development Kit (JDK), and a collection of suggested extensions by Microsoft. The Coding Pack can also be used to fix an existing development environment.

- If you are using VS Code and the Coding Pack For Java, install the Gradle for Java extension.

If you aren't using Visual Studio Code, make sure you have the following installed in your development environment:

A Java Development Kit (JDK) version 8 or later. For more information, see Microsoft Build of OpenJDK.

Gradle, version 6.8 or later.

An Azure AI services or Document Intelligence resource. Once you have your Azure subscription, create a single-service or multi-service Document Intelligence resource, in the Azure portal, to get your key and endpoint. You can use the free pricing tier (F0) to try the service, and upgrade later to a paid tier for production.

Tip

Create an Azure AI services resource if you plan to access multiple Azure AI services under a single endpoint/key. For Document Intelligence access only, create a Document Intelligence resource. Please note that you'll need a single-service resource if you intend to use Microsoft Entra authentication.

After your resource deploys, select Go to resource. You need the key and endpoint from the resource you create to connect your application to the Document Intelligence API. Later, you paste your key and endpoint into the code.

Set up

Create a new Gradle project

In a console window (such as cmd, PowerShell, or Bash), create a new directory for your app called doc-intel-app, and navigate to it.

In cmd or Bash:

mkdir doc-intel-app && cd doc-intel-app

In PowerShell:

mkdir doc-intel-app; cd doc-intel-app

Run the gradle init command from your working directory. This command creates essential build files for Gradle, including build.gradle.kts, which is used at runtime to create and configure your application.

gradle init --type basic

When prompted to choose a DSL, select Kotlin.

Accept the default project name (doc-intel-app) by selecting Return or Enter.

In a console window (such as cmd, PowerShell, or Bash), create a new directory for your app called form-recognize-app, and navigate to it.

In cmd or Bash:

mkdir form-recognize-app && cd form-recognize-app

In PowerShell:

mkdir form-recognize-app; cd form-recognize-app

Run the gradle init command from your working directory. This command creates essential build files for Gradle, including build.gradle.kts, which is used at runtime to create and configure your application.

gradle init --type basic

When prompted to choose a DSL, select Kotlin.

Accept the default project name (form-recognize-app) by selecting Return or Enter.

Install the client library

This quickstart uses the Gradle dependency manager. You can find the client library and information for other dependency managers on the Maven Central Repository.

Open the project's build.gradle.kts file in your IDE. Copy and paste the following code to include the client library as an implementation statement, along with the required plugins and settings.

plugins {

java

application

}

application {

mainClass.set("DocIntelligence")

}

repositories {

mavenCentral()

}

dependencies {

implementation("com.azure:azure-ai-documentintelligence:1.0.0-beta.2")

}

This quickstart uses the Gradle dependency manager. You can find the client library and information for other dependency managers on the Maven Central Repository.

Open the project's build.gradle.kts file in your IDE. Copy and paste the following code to include the client library as an implementation statement, along with the required plugins and settings.

plugins {

java

application

}

application {

mainClass.set("FormRecognizer")

}

repositories {

mavenCentral()

}

dependencies {

implementation("com.azure:azure-ai-formrecognizer:4.1.0")

}

This quickstart uses the Gradle dependency manager. You can find the client library and information for other dependency managers on the Maven Central Repository.

Open the project's build.gradle.kts file in your IDE. Copy and paste the following code to include the client library as an implementation statement, along with the required plugins and settings.

plugins {

java

application

}

application {

mainClass.set("FormRecognizer")

}

repositories {

mavenCentral()

}

dependencies {

implementation("com.azure:azure-ai-formrecognizer:4.0.0")

}

Create a Java application

To interact with the Document Intelligence service, you need to create an instance of the DocumentIntelligenceClient class. To do so, you create an AzureKeyCredential with your key from the Azure portal and a DocumentIntelligenceClient instance with the AzureKeyCredential and your Document Intelligence endpoint.

To interact with the Document Intelligence service, you need to create an instance of the DocumentAnalysisClient class. To do so, you create an AzureKeyCredential with your key from the Azure portal and a DocumentAnalysisClient instance with the AzureKeyCredential and your Document Intelligence endpoint.

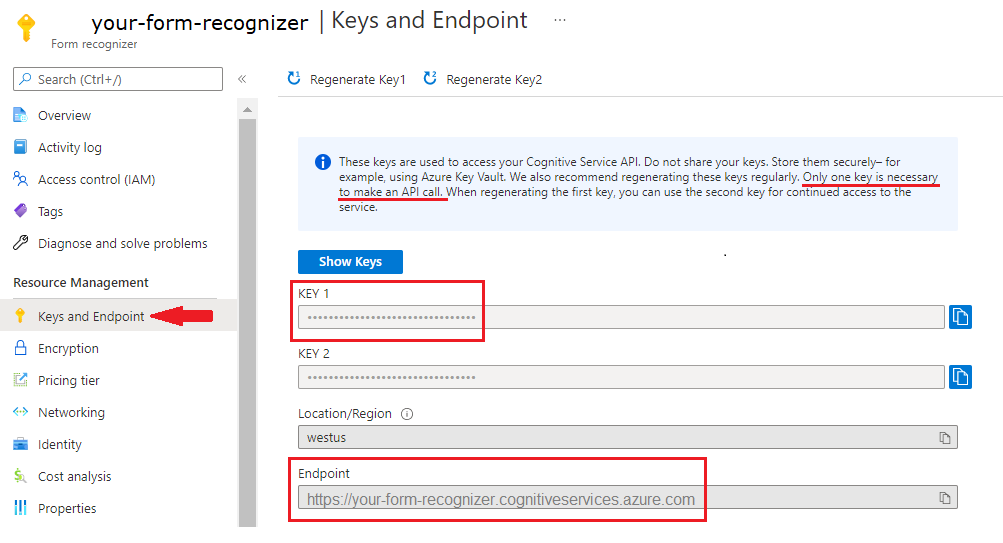

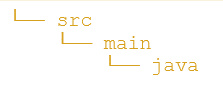

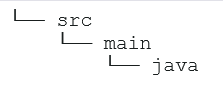

From the doc-intel-app directory, run the following command:

mkdir -p src/main/java

This command creates the src/main/java directory structure.

Navigate to the java directory and create a file named DocIntelligence.java.

Tip

- You can create a new file using PowerShell.

- Open a PowerShell window in your project directory by holding down the Shift key and right-clicking the folder.

- Type the following command New-Item DocIntelligence.java.

Open the DocIntelligence.java file. Copy and paste one of the following code samples into your application:

Navigate to the java directory and create a file named FormRecognizer.java.

Tip

- You can create a new file using PowerShell.

- Open a PowerShell window in your project directory by holding down the Shift key and right-clicking the folder.

- Type the following command New-Item FormRecognizer.java.

Open the FormRecognizer.java file. Copy and paste one of the following code samples into your application:

Important

Remember to remove the key from your code when you're done, and never post it publicly. For production, use a secure way of storing and accessing your credentials like Azure Key Vault. For more information, see Azure AI services security.
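One lightweight way to keep the key out of source code during development is to read it from environment variables. The following is a minimal sketch, not part of the official samples. The class name CredentialSettings and the variable names DOCUMENTINTELLIGENCE_ENDPOINT and DOCUMENTINTELLIGENCE_API_KEY are assumptions chosen for illustration, and for production a secret store such as Azure Key Vault remains the recommended option.

```java
import java.net.URI;
import java.util.Map;

// Hypothetical helper: loads the endpoint and key from environment variables
// so that neither value has to be pasted into the source file.
class CredentialSettings {
    final String endpoint;
    final String key;

    private CredentialSettings(String endpoint, String key) {
        this.endpoint = endpoint;
        this.key = key;
    }

    // Builds settings from a map of environment variables; pass System.getenv() in real use.
    // The variable names are assumptions for this sketch, not names the service requires.
    static CredentialSettings fromEnvironment(Map<String, String> env) {
        String endpoint = require(env, "DOCUMENTINTELLIGENCE_ENDPOINT");
        String key = require(env, "DOCUMENTINTELLIGENCE_API_KEY");
        // Fail early if the endpoint isn't an HTTPS URL.
        if (!"https".equalsIgnoreCase(URI.create(endpoint).getScheme())) {
            throw new IllegalArgumentException("Endpoint must be an https URL");
        }
        return new CredentialSettings(endpoint, key);
    }

    // Returns the trimmed value, or throws if the variable is missing or blank.
    private static String require(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalStateException("Missing environment variable: " + name);
        }
        return value.trim();
    }

    public static void main(String[] args) {
        try {
            CredentialSettings settings = fromEnvironment(System.getenv());
            System.out.println("Using endpoint " + settings.endpoint);
        } catch (IllegalStateException | IllegalArgumentException e) {
            System.out.println("Configuration problem: " + e.getMessage());
        }
    }
}
```

With a helper like this, the samples that follow could pass settings.key to AzureKeyCredential and settings.endpoint to the client builder instead of the hard-coded placeholders.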

Layout model

Extract text, selection marks, text styles, table structures, and bounding region coordinates from documents.

- For this example, you'll need a document file at a URI. You can use our sample document for this quickstart.

- To analyze a given file at a URI, you'll use the beginAnalyzeDocumentFromUrl method and pass prebuilt-layout as the model Id. The returned value is an AnalyzeResult object containing data about the submitted document.

- We've added the file URI value to the documentUrl variable in the main method.

Add the following code sample to the DocIntelligence.java file. Make sure you update the key and endpoint variables with values from your Azure portal Document Intelligence instance:

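import com.azure.ai.documentintelligence.DocumentIntelligenceClient;

import com.azure.ai.documentintelligence.DocumentIntelligenceClientBuilder;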

import com.azure.ai.documentintelligence.models.AnalyzeDocumentRequest;

import com.azure.ai.documentintelligence.models.AnalyzeResult;

import com.azure.ai.documentintelligence.models.AnalyzeResultOperation;

import com.azure.ai.documentintelligence.models.DocumentTable;

import com.azure.core.credential.AzureKeyCredential;

import com.azure.core.util.polling.SyncPoller;

import java.util.List;

public class DocIntelligence {

// set `<your-endpoint>` and `<your-key>` variables with the values from the Azure portal

private static final String endpoint = "<your-endpoint>";

private static final String key = "<your-key>";

public static void main(String[] args) {

// create your `DocumentIntelligenceClient` instance and `AzureKeyCredential` variable

DocumentIntelligenceClient client = new DocumentIntelligenceClientBuilder()

.credential(new AzureKeyCredential(key))

.endpoint(endpoint)

.buildClient();

// sample document

String documentUrl = "https://raw.githubusercontent.com/Azure-Samples/cognitive-services-REST-api-samples/master/curl/form-recognizer/sample-layout.pdf";

String modelId = "prebuilt-layout";

SyncPoller <AnalyzeResultOperation, AnalyzeResultOperation> analyzeLayoutResultPoller =

client.beginAnalyzeDocument(modelId, documentUrl);

AnalyzeResult analyzeLayoutResult = analyzeLayoutResultPoller.getFinalResult().getAnalyzeResult();

// pages

analyzeLayoutResult.getPages().forEach(documentPage -> {

System.out.printf("Page has width: %.2f and height: %.2f, measured with unit: %s%n",

documentPage.getWidth(),

documentPage.getHeight(),

documentPage.getUnit());

// lines

documentPage.getLines().forEach(documentLine ->

System.out.printf("Line '%s' is within a bounding polygon %s.%n",

documentLine.getContent(),

documentLine.getPolygon()));

// words

documentPage.getWords().forEach(documentWord ->

System.out.printf("Word '%s' has a confidence score of %.2f.%n",

documentWord.getContent(),

documentWord.getConfidence()));

// selection marks

documentPage.getSelectionMarks().forEach(documentSelectionMark ->

System.out.printf("Selection mark is '%s' and is within a bounding polygon %s with confidence %.2f.%n",

documentSelectionMark.getState().toString(),

documentSelectionMark.getPolygon(),

documentSelectionMark.getConfidence()));

});

// tables

List < DocumentTable > tables = analyzeLayoutResult.getTables();

for (int i = 0; i < tables.size(); i++) {

DocumentTable documentTable = tables.get(i);

System.out.printf("Table %d has %d rows and %d columns.%n", i, documentTable.getRowCount(),

documentTable.getColumnCount());

documentTable.getCells().forEach(documentTableCell -> {

System.out.printf("Cell '%s', has row index %d and column index %d.%n", documentTableCell.getContent(),

documentTableCell.getRowIndex(), documentTableCell.getColumnIndex());

});

System.out.println();

}

// styles

analyzeLayoutResult.getStyles().forEach(documentStyle ->

System.out.printf("Document is handwritten %s.%n", documentStyle.isHandwritten()));

}

}

Build and run the application

After you add a code sample to your application, navigate back to your main project directory—doc-intel-app.

Build your application with the build command:

gradle build

Run your application with the run command:

gradle run

Add the following code sample to the FormRecognizer.java file. Make sure you update the key and endpoint variables with values from your Azure portal Document Intelligence instance:

import com.azure.ai.formrecognizer.*;

import com.azure.ai.formrecognizer.documentanalysis.models.*;

import com.azure.ai.formrecognizer.documentanalysis.DocumentAnalysisClient;

import com.azure.ai.formrecognizer.documentanalysis.DocumentAnalysisClientBuilder;

import com.azure.core.credential.AzureKeyCredential;

import com.azure.core.util.polling.SyncPoller;

import java.io.IOException;

import java.util.List;

import java.util.Arrays;

import java.time.LocalDate;

import java.util.Map;

import java.util.stream.Collectors;

public class FormRecognizer {

// set `<your-endpoint>` and `<your-key>` variables with the values from the Azure portal

private static final String endpoint = "<your-endpoint>";

private static final String key = "<your-key>";

public static void main(String[] args) {

// create your `DocumentAnalysisClient` instance and `AzureKeyCredential` variable

DocumentAnalysisClient client = new DocumentAnalysisClientBuilder()

.credential(new AzureKeyCredential(key))

.endpoint(endpoint)

.buildClient();

// sample document

String documentUrl = "https://raw.githubusercontent.com/Azure-Samples/cognitive-services-REST-api-samples/master/curl/form-recognizer/sample-layout.pdf";

String modelId = "prebuilt-layout";

SyncPoller < OperationResult, AnalyzeResult > analyzeLayoutResultPoller =

client.beginAnalyzeDocumentFromUrl(modelId, documentUrl);

AnalyzeResult analyzeLayoutResult = analyzeLayoutResultPoller.getFinalResult();

// pages

analyzeLayoutResult.getPages().forEach(documentPage -> {

System.out.printf("Page has width: %.2f and height: %.2f, measured with unit: %s%n",

documentPage.getWidth(),

documentPage.getHeight(),

documentPage.getUnit());

// lines

documentPage.getLines().forEach(documentLine ->

System.out.printf("Line %s is within a bounding polygon %s.%n",

documentLine.getContent(),

documentLine.getBoundingPolygon().toString()));

// words

documentPage.getWords().forEach(documentWord ->

System.out.printf("Word '%s' has a confidence score of %.2f%n",

documentWord.getContent(),

documentWord.getConfidence()));

// selection marks

documentPage.getSelectionMarks().forEach(documentSelectionMark ->

System.out.printf("Selection mark is %s and is within a bounding polygon %s with confidence %.2f.%n",

documentSelectionMark.getState().toString(),

documentSelectionMark.getBoundingPolygon().toString(),

documentSelectionMark.getConfidence()));

});

// tables

List < DocumentTable > tables = analyzeLayoutResult.getTables();

for (int i = 0; i < tables.size(); i++) {

DocumentTable documentTable = tables.get(i);

System.out.printf("Table %d has %d rows and %d columns.%n", i, documentTable.getRowCount(),

documentTable.getColumnCount());

documentTable.getCells().forEach(documentTableCell -> {

System.out.printf("Cell '%s', has row index %d and column index %d.%n", documentTableCell.getContent(),

documentTableCell.getRowIndex(), documentTableCell.getColumnIndex());

});

System.out.println();

}

}

// Utility function to get the bounding polygon coordinates

private static String getBoundingCoordinates(List < Point > boundingPolygon) {

return boundingPolygon.stream().map(point -> String.format("[%.2f, %.2f]", point.getX(),

point.getY())).collect(Collectors.joining(", "));

}

}

Build and run the application

After you add a code sample to your application, navigate back to your main project directory—form-recognize-app.

Build your application with the build command:

gradle build

Run your application with the run command:

gradle run

Layout model output

Here's a snippet of the expected output:

Table 0 has 5 rows and 3 columns.

Cell 'Title of each class', has row index 0 and column index 0.

Cell 'Trading Symbol', has row index 0 and column index 1.

Cell 'Name of exchange on which registered', has row index 0 and column index 2.

Cell 'Common stock, $0.00000625 par value per share', has row index 1 and column index 0.

Cell 'MSFT', has row index 1 and column index 1.

Cell 'NASDAQ', has row index 1 and column index 2.

Cell '2.125% Notes due 2021', has row index 2 and column index 0.

Cell 'MSFT', has row index 2 and column index 1.

Cell 'NASDAQ', has row index 2 and column index 2.

Cell '3.125% Notes due 2028', has row index 3 and column index 0.

Cell 'MSFT', has row index 3 and column index 1.

Cell 'NASDAQ', has row index 3 and column index 2.

Cell '2.625% Notes due 2033', has row index 4 and column index 0.

Cell 'MSFT', has row index 4 and column index 1.

Cell 'NASDAQ', has row index 4 and column index 2.

To view the entire output, see the layout model output in the Azure samples repository on GitHub.
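Because each cell in the output carries a row index and a column index, the flat cell list can be rearranged into a grid. The following is a minimal sketch of that idea, not part of the official samples. The TableGrid class and its nested Cell type are hypothetical stand-ins, so the example runs without the client library; with the SDK, the same values would come from the getContent(), getRowIndex(), and getColumnIndex() calls shown in the samples.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: rebuilds a table grid from a flat list of cells.
class TableGrid {
    // Stand-in for a table cell: content plus zero-based row and column index.
    static final class Cell {
        final String content;
        final int rowIndex;
        final int columnIndex;

        Cell(String content, int rowIndex, int columnIndex) {
            this.content = content;
            this.rowIndex = rowIndex;
            this.columnIndex = columnIndex;
        }
    }

    // Places each cell at grid[row][column]; positions without a cell stay empty strings.
    static String[][] toGrid(int rowCount, int columnCount, List<Cell> cells) {
        String[][] grid = new String[rowCount][columnCount];
        for (String[] row : grid) {
            Arrays.fill(row, "");
        }
        for (Cell cell : cells) {
            grid[cell.rowIndex][cell.columnIndex] = cell.content;
        }
        return grid;
    }

    // Renders one text line per row, with columns separated by " | ".
    static String render(String[][] grid) {
        StringBuilder text = new StringBuilder();
        for (String[] row : grid) {
            text.append(String.join(" | ", row)).append(System.lineSeparator());
        }
        return text.toString();
    }

    public static void main(String[] args) {
        // The first two rows of the sample output above.
        List<Cell> cells = Arrays.asList(
            new Cell("Title of each class", 0, 0),
            new Cell("Trading Symbol", 0, 1),
            new Cell("Name of exchange on which registered", 0, 2),
            new Cell("Common stock, $0.00000625 par value per share", 1, 0),
            new Cell("MSFT", 1, 1),
            new Cell("NASDAQ", 1, 2));
        System.out.print(render(toGrid(2, 3, cells)));
    }
}
```

This sketch ignores cells that span several rows or columns, so treat it as a starting point for simple tables only.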

Add the following code sample to the FormRecognizer.java file. Make sure you update the key and endpoint variables with values from your Azure portal Document Intelligence instance:

import com.azure.ai.formrecognizer.documentanalysis.DocumentAnalysisClient;

import com.azure.ai.formrecognizer.documentanalysis.DocumentAnalysisClientBuilder;

import com.azure.ai.formrecognizer.documentanalysis.models.AnalyzeResult;

import com.azure.ai.formrecognizer.documentanalysis.models.OperationResult;

import com.azure.ai.formrecognizer.documentanalysis.models.DocumentTable;

import com.azure.ai.formrecognizer.documentanalysis.models.Point;

import com.azure.core.credential.AzureKeyCredential;

import com.azure.core.util.polling.SyncPoller;

import java.util.List;

import java.util.stream.Collectors;

public class FormRecognizer {

// set `<your-endpoint>` and `<your-key>` variables with the values from the Azure portal

private static final String endpoint = "<your-endpoint>";

private static final String key = "<your-key>";

public static void main(String[] args) {

// create your `DocumentAnalysisClient` instance and `AzureKeyCredential` variable

DocumentAnalysisClient client = new DocumentAnalysisClientBuilder()

.credential(new AzureKeyCredential(key))

.endpoint(endpoint)

.buildClient();

// sample document

String documentUrl = "https://raw.githubusercontent.com/Azure-Samples/cognitive-services-REST-api-samples/master/curl/form-recognizer/sample-layout.pdf";

String modelId = "prebuilt-layout";

SyncPoller < OperationResult, AnalyzeResult > analyzeLayoutPoller =

client.beginAnalyzeDocumentFromUrl(modelId, documentUrl);

AnalyzeResult analyzeLayoutResult = analyzeLayoutPoller.getFinalResult();

// pages

analyzeLayoutResult.getPages().forEach(documentPage -> {

System.out.printf("Page has width: %.2f and height: %.2f, measured with unit: %s%n",

documentPage.getWidth(),

documentPage.getHeight(),

documentPage.getUnit());

// lines

documentPage.getLines().forEach(documentLine ->

System.out.printf("Line '%s' is within a bounding polygon %s.%n",

documentLine.getContent(),

getBoundingCoordinates(documentLine.getBoundingPolygon())));

// words

documentPage.getWords().forEach(documentWord ->

System.out.printf("Word '%s' has a confidence score of %.2f.%n",

documentWord.getContent(),

documentWord.getConfidence()));

// selection marks

documentPage.getSelectionMarks().forEach(documentSelectionMark ->

System.out.printf("Selection mark is '%s' and is within a bounding polygon %s with confidence %.2f.%n",

documentSelectionMark.getSelectionMarkState().toString(),

getBoundingCoordinates(documentSelectionMark.getBoundingPolygon()),

documentSelectionMark.getConfidence()));

});

// tables

List < DocumentTable > tables = analyzeLayoutResult.getTables();

for (int i = 0; i < tables.size(); i++) {

DocumentTable documentTable = tables.get(i);

System.out.printf("Table %d has %d rows and %d columns.%n", i, documentTable.getRowCount(),

documentTable.getColumnCount());

documentTable.getCells().forEach(documentTableCell -> {

System.out.printf("Cell '%s', has row index %d and column index %d.%n", documentTableCell.getContent(),

documentTableCell.getRowIndex(), documentTableCell.getColumnIndex());

});

System.out.println();

}

// styles

analyzeLayoutResult.getStyles().forEach(documentStyle ->

System.out.printf("Document is handwritten %s.%n", documentStyle.isHandwritten()));

}

/**

* Utility function to get the bounding polygon coordinates.

*/

private static String getBoundingCoordinates(List < Point > boundingPolygon) {

return boundingPolygon.stream().map(point -> String.format("[%.2f, %.2f]", point.getX(),

point.getY())).collect(Collectors.joining(", "));

}

}

Build and run the application

After you add a code sample to your application, navigate back to your main project directory—form-recognize-app.

Build your application with the build command:

gradle build

Run your application with the run command:

gradle run

Prebuilt model

Analyze and extract common fields from specific document types using a prebuilt model. In this example, we analyze an invoice using the prebuilt-invoice model.

Tip

You aren't limited to invoices—there are several prebuilt models to choose from, each of which has its own set of supported fields. The model to use for the analyze operation depends on the type of document to be analyzed. See model data extraction.

- Analyze an invoice using the prebuilt-invoice model. You can use our sample invoice document for this quickstart.

- We've added the file URL value to the invoiceUrl variable in the main method.

- To analyze a given file at a URI, you'll use the beginAnalyzeDocumentFromUrl method (beginAnalyzeDocument in the v4.0 preview sample) and pass prebuilt-invoice as the model Id. The returned value is an AnalyzeResult object containing data about the submitted document.

- For simplicity, not all of the key-value pairs that the service returns are shown here. To see the list of all supported fields and corresponding types, see our Invoice concept page.
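Each extracted field comes back with a confidence score, so an application can decide which values to trust and which to send for review. The following is a minimal sketch of that filtering step, not part of the official samples. The ConfidenceFilter class, the ExtractedField type, and the 0.9 threshold are assumptions for illustration; with the SDK, the value and score would come from the DocumentField accessors used in the samples.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: keeps only the fields whose confidence meets a threshold.
class ConfidenceFilter {
    // Stand-in for an extracted field: its text value and confidence score.
    static final class ExtractedField {
        final String value;
        final double confidence;

        ExtractedField(String value, double confidence) {
            this.value = value;
            this.confidence = confidence;
        }
    }

    // Returns the fields with confidence >= threshold, preserving the input order.
    static Map<String, ExtractedField> keepConfident(Map<String, ExtractedField> fields, double threshold) {
        Map<String, ExtractedField> kept = new LinkedHashMap<String, ExtractedField>();
        for (Map.Entry<String, ExtractedField> entry : fields.entrySet()) {
            if (entry.getValue().confidence >= threshold) {
                kept.put(entry.getKey(), entry.getValue());
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        // Illustrative values; the field names follow the invoice model, the scores are made up.
        Map<String, ExtractedField> fields = new LinkedHashMap<String, ExtractedField>();
        fields.put("VendorName", new ExtractedField("CONTOSO LTD.", 0.962));
        fields.put("CustomerName", new ExtractedField("MICROSOFT CORPORATION", 0.951));
        fields.put("Description", new ExtractedField("Test for 23 fields", 0.899));

        // 0.9 is an arbitrary threshold for this sketch; choose one that suits your documents.
        for (Map.Entry<String, ExtractedField> entry : keepConfident(fields, 0.9).entrySet()) {
            System.out.printf("%s: %s%n", entry.getKey(), entry.getValue().value);
        }
    }
}
```

Fields that fall below the threshold are dropped here; in practice, you might route them to a manual review step instead.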

Add the following code sample to the DocIntelligence.java file. Make sure you update the key and endpoint variables with values from your Azure portal Document Intelligence instance:

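import com.azure.ai.documentintelligence.DocumentIntelligenceClient;

import com.azure.ai.documentintelligence.DocumentIntelligenceClientBuilder;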

import com.azure.ai.documentintelligence.models.AnalyzeDocumentRequest;

import com.azure.ai.documentintelligence.models.AnalyzeResult;

import com.azure.ai.documentintelligence.models.AnalyzeResultOperation;

import com.azure.ai.documentintelligence.models.Document;

import com.azure.ai.documentintelligence.models.DocumentField;

import com.azure.ai.documentintelligence.models.DocumentFieldType;

import com.azure.core.credential.AzureKeyCredential;

import com.azure.core.util.polling.SyncPoller;

import java.io.IOException;

import java.time.LocalDate;

import java.util.List;

import java.util.Map;

public class DocIntelligence {

// set `<your-endpoint>` and `<your-key>` variables with the values from the Azure portal

private static final String endpoint = "<your-endpoint>";

private static final String key = "<your-key>";

public static void main(String[] args) {

// create your `DocumentIntelligenceClient` instance and `AzureKeyCredential` variable

DocumentIntelligenceClient client = new DocumentIntelligenceClientBuilder()

.credential(new AzureKeyCredential(key))

.endpoint(endpoint)

.buildClient();

// sample document

String modelId = "prebuilt-invoice";

String invoiceUrl = "https://raw.githubusercontent.com/Azure-Samples/cognitive-services-REST-api-samples/master/curl/form-recognizer/sample-invoice.pdf";

SyncPoller < AnalyzeResultOperation, AnalyzeResultOperation > analyzeInvoicesPoller =

client.beginAnalyzeDocument(modelId, invoiceUrl);

AnalyzeResult analyzeInvoiceResult = analyzeInvoicesPoller.getFinalResult().getAnalyzeResult();

// skip the loop below when the result contains no analyzed documents

if (analyzeInvoiceResult.getDocuments() != null) {

for (int i = 0; i < analyzeInvoiceResult.getDocuments().size(); i++) {

Document analyzedInvoice = analyzeInvoiceResult.getDocuments().get(i);

Map < String, DocumentField > invoiceFields = analyzedInvoice.getFields();

System.out.printf("----------- Analyzing invoice %d -----------%n", i);

DocumentField vendorNameField = invoiceFields.get("VendorName");

if (vendorNameField != null) {

if (DocumentFieldType.STRING == vendorNameField.getType()) {

String merchantName = vendorNameField.getValueString();

System.out.printf("Vendor Name: %s, confidence: %.2f%n",

merchantName, vendorNameField.getConfidence());

}

}

DocumentField vendorAddressField = invoiceFields.get("VendorAddress");

if (vendorAddressField != null) {

if (DocumentFieldType.STRING == vendorAddressField.getType()) {

String merchantAddress = vendorAddressField.getValueString();

System.out.printf("Vendor address: %s, confidence: %.2f%n",

merchantAddress, vendorAddressField.getConfidence());

}

}

DocumentField customerNameField = invoiceFields.get("CustomerName");

if (customerNameField != null) {

if (DocumentFieldType.STRING == customerNameField.getType()) {

String customerName = customerNameField.getValueString();

System.out.printf("Customer Name: %s, confidence: %.2f%n",

customerName, customerNameField.getConfidence());

}

}

DocumentField customerAddressRecipientField = invoiceFields.get("CustomerAddressRecipient");

if (customerAddressRecipientField != null) {

if (DocumentFieldType.STRING == customerAddressRecipientField.getType()) {

String customerAddr = customerAddressRecipientField.getValueString();

System.out.printf("Customer Address Recipient: %s, confidence: %.2f%n",

customerAddr, customerAddressRecipientField.getConfidence());

}

}

DocumentField invoiceIdField = invoiceFields.get("InvoiceId");

if (invoiceIdField != null) {

if (DocumentFieldType.STRING == invoiceIdField.getType()) {

String invoiceId = invoiceIdField.getValueString();

System.out.printf("Invoice ID: %s, confidence: %.2f%n",

invoiceId, invoiceIdField.getConfidence());

}

}

DocumentField invoiceDateField = invoiceFields.get("InvoiceDate");

if (invoiceDateField != null) {

if (DocumentFieldType.DATE == invoiceDateField.getType()) {

LocalDate invoiceDate = invoiceDateField.getValueDate();

System.out.printf("Invoice Date: %s, confidence: %.2f%n",

invoiceDate, invoiceDateField.getConfidence());

}

}

DocumentField invoiceTotalField = invoiceFields.get("InvoiceTotal");

if (invoiceTotalField != null) {

if (DocumentFieldType.NUMBER == invoiceTotalField.getType()) {

Double invoiceTotal = invoiceTotalField.getValueNumber();

System.out.printf("Invoice Total: %.2f, confidence: %.2f%n",

invoiceTotal, invoiceTotalField.getConfidence());

}

}

DocumentField invoiceItemsField = invoiceFields.get("Items");

if (invoiceItemsField != null) {

System.out.printf("Invoice Items: %n");

if (DocumentFieldType.ARRAY == invoiceItemsField.getType()) {

List < DocumentField > invoiceItems = invoiceItemsField.getValueArray();

invoiceItems.stream()

.filter(invoiceItem -> DocumentFieldType.OBJECT == invoiceItem.getType())

.map(documentField -> documentField.getValueObject())

.forEach(documentFieldMap -> documentFieldMap.forEach((key, documentField) -> {

// See a full list of fields found on an invoice here:

// https://aka.ms/documentintelligence/invoicefields

if ("Description".equals(key)) {

if (DocumentFieldType.STRING == documentField.getType()) {

String name = documentField.getValueString();

System.out.printf("Description: %s, confidence: %.2f%n",

name, documentField.getConfidence());

}

}

if ("Quantity".equals(key)) {

if (DocumentFieldType.NUMBER == documentField.getType()) {

Double quantity = documentField.getValueNumber();

System.out.printf("Quantity: %f, confidence: %.2f%n",

quantity, documentField.getConfidence());

}

}

if ("UnitPrice".equals(key)) {

if (DocumentFieldType.NUMBER == documentField.getType()) {

Double unitPrice = documentField.getValueNumber();

System.out.printf("Unit Price: %f, confidence: %.2f%n",

unitPrice, documentField.getConfidence());

}

}

if ("ProductCode".equals(key)) {

if (DocumentFieldType.NUMBER == documentField.getType()) {

Double productCode = documentField.getValueNumber();

System.out.printf("Product Code: %f, confidence: %.2f%n",

productCode, documentField.getConfidence());

}

}

}));

}

}

}

}

}

}

Build and run the application

After you add a code sample to your application, navigate back to your main project directory—doc-intel-app.

Build your application with the build command:

gradle build

Run your application with the run command:

gradle run

Add the following code sample to the FormRecognizer.java file. Make sure you update the key and endpoint variables with values from your Azure portal Document Intelligence instance:

import com.azure.ai.formrecognizer.*;

import com.azure.ai.formrecognizer.documentanalysis.models.*;

import com.azure.ai.formrecognizer.documentanalysis.DocumentAnalysisClient;

import com.azure.ai.formrecognizer.documentanalysis.DocumentAnalysisClientBuilder;

import com.azure.core.credential.AzureKeyCredential;

import com.azure.core.util.polling.SyncPoller;

import java.io.IOException;

import java.util.List;

import java.util.Arrays;

import java.time.LocalDate;

import java.util.Map;

import java.util.stream.Collectors;

public class FormRecognizer {

// set `<your-endpoint>` and `<your-key>` variables with the values from the Azure portal

private static final String endpoint = "<your-endpoint>";

private static final String key = "<your-key>";

public static void main(final String[] args) throws IOException {

// create your `DocumentAnalysisClient` instance and `AzureKeyCredential` variable

DocumentAnalysisClient client = new DocumentAnalysisClientBuilder()

.credential(new AzureKeyCredential(key))

.endpoint(endpoint)

.buildClient();

// sample document

String modelId = "prebuilt-invoice";

String invoiceUrl = "https://raw.githubusercontent.com/Azure-Samples/cognitive-services-REST-api-samples/master/curl/form-recognizer/sample-invoice.pdf";

SyncPoller<OperationResult, AnalyzeResult> analyzeInvoicePoller = client.beginAnalyzeDocumentFromUrl(modelId, invoiceUrl);

AnalyzeResult analyzeInvoiceResult = analyzeInvoicePoller.getFinalResult();

for (int i = 0; i < analyzeInvoiceResult.getDocuments().size(); i++) {

AnalyzedDocument analyzedInvoice = analyzeInvoiceResult.getDocuments().get(i);

Map<String, DocumentField> invoiceFields = analyzedInvoice.getFields();

System.out.printf("----------- Analyzing invoice %d -----------%n", i);

DocumentField vendorNameField = invoiceFields.get("VendorName");

if (vendorNameField != null) {

if (DocumentFieldType.STRING == vendorNameField.getType()) {

String merchantName = vendorNameField.getValueAsString();

System.out.printf("Vendor Name: %s, confidence: %.2f%n",

merchantName, vendorNameField.getConfidence());

}

}

DocumentField vendorAddressField = invoiceFields.get("VendorAddress");

if (vendorAddressField != null) {

if (DocumentFieldType.STRING == vendorAddressField.getType()) {

String merchantAddress = vendorAddressField.getValueAsString();

System.out.printf("Vendor address: %s, confidence: %.2f%n",

merchantAddress, vendorAddressField.getConfidence());

}

}

DocumentField customerNameField = invoiceFields.get("CustomerName");

if (customerNameField != null) {

if (DocumentFieldType.STRING == customerNameField.getType()) {

String customerName = customerNameField.getValueAsString();

System.out.printf("Customer Name: %s, confidence: %.2f%n",

customerName, customerNameField.getConfidence());

}

}

DocumentField customerAddressRecipientField = invoiceFields.get("CustomerAddressRecipient");

if (customerAddressRecipientField != null) {

if (DocumentFieldType.STRING == customerAddressRecipientField.getType()) {

String customerAddr = customerAddressRecipientField.getValueAsString();

System.out.printf("Customer Address Recipient: %s, confidence: %.2f%n",

customerAddr, customerAddressRecipientField.getConfidence());

}

}

DocumentField invoiceIdField = invoiceFields.get("InvoiceId");

if (invoiceIdField != null) {

if (DocumentFieldType.STRING == invoiceIdField.getType()) {

String invoiceId = invoiceIdField.getValueAsString();

System.out.printf("Invoice ID: %s, confidence: %.2f%n",

invoiceId, invoiceIdField.getConfidence());

}

}

DocumentField invoiceDateField = invoiceFields.get("InvoiceDate");

if (invoiceDateField != null) {

if (DocumentFieldType.DATE == invoiceDateField.getType()) {

LocalDate invoiceDate = invoiceDateField.getValueAsDate();

System.out.printf("Invoice Date: %s, confidence: %.2f%n",

invoiceDate, invoiceDateField.getConfidence());

}

}

DocumentField invoiceTotalField = invoiceFields.get("InvoiceTotal");

if (invoiceTotalField != null) {

if (DocumentFieldType.DOUBLE == invoiceTotalField.getType()) {

Double invoiceTotal = invoiceTotalField.getValueAsDouble();

System.out.printf("Invoice Total: %.2f, confidence: %.2f%n",

invoiceTotal, invoiceTotalField.getConfidence());

}

}

DocumentField invoiceItemsField = invoiceFields.get("Items");

if (invoiceItemsField != null) {

System.out.printf("Invoice Items: %n");

if (DocumentFieldType.LIST == invoiceItemsField.getType()) {

List<DocumentField> invoiceItems = invoiceItemsField.getValueAsList();

invoiceItems.stream()

.filter(invoiceItem -> DocumentFieldType.MAP == invoiceItem.getType())

.map(documentField -> documentField.getValueAsMap())

.forEach(documentFieldMap -> documentFieldMap.forEach((key, documentField) -> {

// See a full list of fields found on an invoice here:

// https://aka.ms/formrecognizer/invoicefields

if ("Description".equals(key)) {

if (DocumentFieldType.STRING == documentField.getType()) {

String name = documentField.getValueAsString();

System.out.printf("Description: %s, confidence: %.2f%n",

name, documentField.getConfidence());

}

}

if ("Quantity".equals(key)) {

if (DocumentFieldType.DOUBLE == documentField.getType()) {

Double quantity = documentField.getValueAsDouble();

System.out.printf("Quantity: %f, confidence: %.2f%n",

quantity, documentField.getConfidence());

}

}

if ("UnitPrice".equals(key)) {

if (DocumentFieldType.DOUBLE == documentField.getType()) {

Double unitPrice = documentField.getValueAsDouble();

System.out.printf("Unit Price: %f, confidence: %.2f%n",

unitPrice, documentField.getConfidence());

}

}

if ("ProductCode".equals(key)) {

// ProductCode is a string field in the invoice schema, so read it as text

if (DocumentFieldType.STRING == documentField.getType()) {

String productCode = documentField.getValueAsString();

System.out.printf("Product Code: %s, confidence: %.2f%n",

productCode, documentField.getConfidence());

}

}

}));

}

}

}

}

}
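The sample above keeps the key and endpoint as string constants, which is convenient for a quickstart but easy to commit to source control by accident. As a minimal sketch of one alternative, the values could be read from environment variables. The class `DocIntelSettings`, its `require` method, and the variable names `DOCUMENT_INTELLIGENCE_ENDPOINT` and `DOCUMENT_INTELLIGENCE_KEY` are assumptions made for illustration; they are not part of the Azure SDK.

```java
import java.util.Map;

// Hypothetical helper, not part of the Azure SDK: looks up settings in a map
// (normally System.getenv()) and fails fast when one is missing or blank.
final class DocIntelSettings {
    // Assumed variable names; choose whatever fits your environment.
    static final String ENDPOINT_VAR = "DOCUMENT_INTELLIGENCE_ENDPOINT";
    static final String KEY_VAR = "DOCUMENT_INTELLIGENCE_KEY";

    static String require(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalStateException("Missing environment variable: " + name);
        }
        return value.trim();
    }

    public static void main(String[] args) {
        Map<String, String> env = System.getenv();
        try {
            String endpoint = require(env, ENDPOINT_VAR);
            String key = require(env, KEY_VAR);
            // These two strings would replace the hard-coded constants
            // passed to DocumentAnalysisClientBuilder in the sample.
            System.out.println("Endpoint: " + endpoint + " (key length " + key.length() + ")");
        } catch (IllegalStateException e) {
            System.err.println(e.getMessage());
        }
    }
}
```

Taking the map as a parameter, rather than calling `System.getenv()` inside `require`, keeps the lookup easy to exercise with test values.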

Build and run the application

After you add a code sample to your application, navigate back to your main project directory—doc-intel-app.

Build your application with the build command:

gradle build

Run your application with the run command:

gradle run

Prebuilt model output

Here's a snippet of the expected output:

----------- Analyzing invoice 0 -----------

Analyzed document has doc type invoice with confidence : 1.00

Vendor Name: CONTOSO LTD., confidence: 0.92

Vendor address: 123 456th St New York, NY, 10001, confidence: 0.91

Customer Name: MICROSOFT CORPORATION, confidence: 0.84

Customer Address Recipient: Microsoft Corp, confidence: 0.92

Invoice ID: INV-100, confidence: 0.97

Invoice Date: 2019-11-15, confidence: 0.97

To view the entire output, see the prebuilt invoice model output in the Azure samples repository on GitHub.
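Each output line comes from a `printf` call with the pattern `%s, confidence: %.2f`, so confidence values are rounded to two decimal places. The sample's `System.out.printf` calls use the JVM's default locale, which means the decimal separator can differ on machines with other regional settings. The sketch below shows the same formatting with an explicit locale. The class `ConfidenceLine` and the input values are hypothetical and exist only for illustration.

```java
import java.util.Locale;

// Illustrative helper (not part of the Azure SDK): formats one output line
// with the same pattern as the sample's printf calls, using a fixed locale.
final class ConfidenceLine {
    static String format(String label, String value, double confidence) {
        // %.2f rounds the confidence to two decimal places; Locale.ROOT keeps
        // the decimal separator a period regardless of the machine's settings.
        return String.format(Locale.ROOT, "%s: %s, confidence: %.2f", label, value, confidence);
    }

    public static void main(String[] args) {
        // Hypothetical values chosen to resemble the snippet above.
        System.out.println(format("Vendor Name", "CONTOSO LTD.", 0.917));
        System.out.println(format("Invoice ID", "INV-100", 0.97));
    }
}
```

With these inputs the first line reads `Vendor Name: CONTOSO LTD., confidence: 0.92`, because 0.917 rounds to 0.92.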

Add the following code sample to the FormRecognizer.java file. Make sure you update the key and endpoint variables with values from your Azure portal Document Intelligence instance:

import com.azure.ai.formrecognizer.*;

import com.azure.ai.formrecognizer.documentanalysis.DocumentAnalysisClient;

import com.azure.ai.formrecognizer.documentanalysis.DocumentAnalysisClientBuilder;

import com.azure.ai.formrecognizer.documentanalysis.models.AnalyzeResult;

import com.azure.ai.formrecognizer.documentanalysis.models.AnalyzedDocument;

import com.azure.ai.formrecognizer.documentanalysis.models.DocumentField;

import com.azure.ai.formrecognizer.documentanalysis.models.DocumentFieldType;

import com.azure.ai.formrecognizer.documentanalysis.models.OperationResult;

import com.azure.core.credential.AzureKeyCredential;

import com.azure.core.util.polling.SyncPoller;

import java.io.IOException;

import java.time.LocalDate;

import java.util.List;

import java.util.Map;

public class FormRecognizer {

// set `<your-endpoint>` and `<your-key>` variables with the values from the Azure portal

private static final String endpoint = "<your-endpoint>";

private static final String key = "<your-key>";

public static void main(String[] args) {

// create your `DocumentAnalysisClient` instance and `AzureKeyCredential` variable

DocumentAnalysisClient client = new DocumentAnalysisClientBuilder()

.credential(new AzureKeyCredential(key))

.endpoint(endpoint)

.buildClient();

// sample document

String modelId = "prebuilt-invoice";

String invoiceUrl = "https://raw.githubusercontent.com/Azure-Samples/cognitive-services-REST-api-samples/master/curl/form-recognizer/sample-invoice.pdf";

SyncPoller<OperationResult, AnalyzeResult> analyzeInvoicePoller = client.beginAnalyzeDocumentFromUrl(modelId, invoiceUrl);

AnalyzeResult analyzeInvoiceResult = analyzeInvoicePoller.getFinalResult();

for (int i = 0; i < analyzeInvoiceResult.getDocuments().size(); i++) {

AnalyzedDocument analyzedInvoice = analyzeInvoiceResult.getDocuments().get(i);

Map<String, DocumentField> invoiceFields = analyzedInvoice.getFields();

System.out.printf("----------- Analyzing invoice %d -----------%n", i);

DocumentField vendorNameField = invoiceFields.get("VendorName");

if (vendorNameField != null) {

if (DocumentFieldType.STRING == vendorNameField.getType()) {

String merchantName = vendorNameField.getValueAsString();

System.out.printf("Vendor Name: %s, confidence: %.2f%n",

merchantName, vendorNameField.getConfidence());

}

}

DocumentField vendorAddressField = invoiceFields.get("VendorAddress");

if (vendorAddressField != null) {

if (DocumentFieldType.STRING == vendorAddressField.getType()) {

String merchantAddress = vendorAddressField.getValueAsString();

System.out.printf("Vendor address: %s, confidence: %.2f%n",

merchantAddress, vendorAddressField.getConfidence());

}

}

DocumentField customerNameField = invoiceFields.get("CustomerName");

if (customerNameField != null) {

if (DocumentFieldType.STRING == customerNameField.getType()) {

String customerName = customerNameField.getValueAsString();

System.out.printf("Customer Name: %s, confidence: %.2f%n",

customerName, customerNameField.getConfidence());

}

}

DocumentField customerAddressRecipientField = invoiceFields.get("CustomerAddressRecipient");

if (customerAddressRecipientField != null) {

if (DocumentFieldType.STRING == customerAddressRecipientField.getType()) {

String customerAddr = customerAddressRecipientField.getValueAsString();

System.out.printf("Customer Address Recipient: %s, confidence: %.2f%n",

customerAddr, customerAddressRecipientField.getConfidence());