Enable audio effects detection (preview)

Important

Due to the Azure Media Services retirement announcement, Azure AI Video Indexer is adjusting some of its features. See Changes related to Azure Media Service (AMS) retirement to understand what this means for your Azure AI Video Indexer account, and see the Preparing for AMS retirement: VI update and migration guide.

Audio effects detection is one of the Azure AI Video Indexer AI capabilities. It detects various acoustic events and classifies them into acoustic categories (such as dog barking, crowd reactions, laughter, and more).

Some scenarios where this feature is useful:

- Companies with a large set of video archives can easily improve accessibility with audio effects detection. The feature provides more context for persons who are hard of hearing, and enhances video transcription with nonspeech effects.

- In the Media & Entertainment domain, the detection feature can improve efficiency when creating raw data for content creators. Important moments in promos and trailers (such as laughter, crowd reactions, gunshot, or explosion) can be identified by using audio effects detection.

- In the Public Safety & Justice domain, the feature can detect and classify gunshots, explosions, and glass shattering. It can be implemented in a smart-city system or in other public environments that include cameras and microphones to offer fast and accurate detection of violent incidents.

Supported audio categories

Audio effects detection can detect and classify different categories. The following table shows which categories are supported by the Standard presets (Audio Only / Video + Audio) and which are supported by the Advanced presets (Advanced Audio / Advanced Video + Audio). For more information, see pricing.

When you use Advanced indexing, the categories appear in the Insights pane of the website.

| Class | Standard indexing | Advanced indexing |
|---|---|---|
| Crowd Reactions | | ✔️ |
| Silence | ✔️ | ✔️ |
| Gunshot or explosion | | ✔️ |
| Breaking glass | | ✔️ |
| Alarm or siren | | ✔️ |
| Laughter | | ✔️ |
| Dog | | ✔️ |
| Bell ringing | | ✔️ |
| Bird | | ✔️ |
| Car | | ✔️ |
| Engine | | ✔️ |
| Crying | | ✔️ |
| Music playing | | ✔️ |
| Screaming | | ✔️ |
| Thunderstorm | | ✔️ |

Result formats

The audio effects are returned in the insights JSON. For each category, the JSON includes the category ID, the type, and a set of instances. Each instance has its own time frame and confidence score.

```json
{
  "audioEffects": [
    {
      "id": 0,
      "type": "Gunshot or explosion",
      "instances": [
        {
          "confidence": 0.649,
          "adjustedStart": "0:00:13.9",
          "adjustedEnd": "0:00:14.7",
          "start": "0:00:13.9",
          "end": "0:00:14.7"
        },
        {
          "confidence": 0.7706,
          "adjustedStart": "0:01:54.3",
          "adjustedEnd": "0:01:55",
          "start": "0:01:54.3",
          "end": "0:01:55"
        }
      ]
    },
    {
      "id": 1,
      "type": "CrowdReactions",
      "instances": [
        {
          "confidence": 0.6816,
          "adjustedStart": "0:00:47.9",
          "adjustedEnd": "0:00:52.5",
          "start": "0:00:47.9",
          "end": "0:00:52.5"
        },
        {
          "confidence": 0.7314,
          "adjustedStart": "0:04:57.67",
          "adjustedEnd": "0:05:01.57",
          "start": "0:04:57.67",
          "end": "0:05:01.57"
        }
      ]
    }
  ]
}
```
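If you consume the insights JSON programmatically, you can pull out the higher-confidence detections with a minimal Python sketch like the following. It assumes you've already extracted the object that holds `audioEffects`, which may be nested deeper in the full insights file. The sample data is abridged from the example above, with `adjustedStart`/`adjustedEnd` omitted. The 0.7 threshold and the helper names are illustrative choices, not Video Indexer defaults.

```python
import json

# Abridged from the audioEffects example above (adjustedStart/adjustedEnd omitted).
INSIGHTS_FRAGMENT = """
{"audioEffects": [
  {"id": 0, "type": "Gunshot or explosion", "instances": [
    {"confidence": 0.649, "start": "0:00:13.9", "end": "0:00:14.7"},
    {"confidence": 0.7706, "start": "0:01:54.3", "end": "0:01:55"}]},
  {"id": 1, "type": "CrowdReactions", "instances": [
    {"confidence": 0.6816, "start": "0:00:47.9", "end": "0:00:52.5"},
    {"confidence": 0.7314, "start": "0:04:57.67", "end": "0:05:01.57"}]}
]}
"""


def to_seconds(ts: str) -> float:
    """Convert an 'H:MM:SS(.fff)' timestamp, as used in the insights JSON, to seconds."""
    hours, minutes, seconds = ts.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def confident_events(insights: dict, min_confidence: float = 0.7):
    """Yield (type, start_s, end_s, confidence) for instances at or above the threshold."""
    for effect in insights.get("audioEffects", []):
        for inst in effect.get("instances", []):
            if inst["confidence"] >= min_confidence:
                yield (effect["type"], to_seconds(inst["start"]),
                       to_seconds(inst["end"]), inst["confidence"])


if __name__ == "__main__":
    insights = json.loads(INSIGHTS_FRAGMENT)
    for event in confident_events(insights):
        print(event)
```

With the sample above, only the second gunshot instance and the second crowd-reactions instance clear the 0.7 threshold.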

How to index audio effects

To include audio effects detection in the indexing process, select one of the Advanced presets under the Video + audio indexing menu.
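If you upload through the REST API instead of the website, the preset is chosen with a query parameter. The following is a minimal sketch under stated assumptions. We believe the Upload Video operation accepts an `indexingPreset` query parameter. The API host, the route, and the value `AdvancedAudio` used below are assumptions to verify against the Video Indexer API reference. The function name is hypothetical.

```python
from urllib.parse import quote, urlencode

# Assumption: public Video Indexer API host and Upload Video route.
API_ROOT = "https://api.videoindexer.ai"


def build_upload_url(location: str, account_id: str, access_token: str,
                     name: str, video_url: str, preset: str = "AdvancedAudio") -> str:
    """Build an Upload Video URL (to be sent as POST) that selects an Advanced preset."""
    query = urlencode({
        "name": name,
        "videoUrl": video_url,
        # Assumption: "AdvancedAudio" (or "Advanced" for video + audio) is the API
        # name of an Advanced preset that enables audio effects detection.
        "indexingPreset": preset,
        "accessToken": access_token,
    })
    return f"{API_ROOT}/{quote(location)}/Accounts/{quote(account_id)}/Videos?{query}"


if __name__ == "__main__":
    # Hypothetical identifiers; replace them with your own values.
    print(build_upload_url("trial", "my-account-id", "my-token",
                           "promo", "https://example.com/promo.mp4"))
```

The URL is only built here, not sent. Use any HTTP client to issue the POST request.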

Closed Caption

When audio effects are included in closed caption files, they appear in square brackets, using the following structure:

| Type | Example |
|---|---|
| SRT | 00:00:00,000 00:00:03,671 [Gunshot or explosion] |
| VTT | 00:00:00.000 00:00:03.671 [Gunshot or explosion] |
| TTML | Confidence: 0.9047 `<p begin="00:00:00.000" end="00:00:03.671">[Gunshot or explosion]</p>` |
| TXT | [Gunshot or explosion] |
| CSV | 0.9047,00:00:00.000,00:00:03.671, [Gunshot or explosion] |

Audio effects in closed caption files follow these rules:

- The Silence event type isn't added to closed captions.

- The minimum duration for an event to be shown is 700 milliseconds.
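The two caption rules above can be sketched as a simple filter. The rules come from this article. The input tuple shape `(type, start_s, end_s)` and the function names are hypothetical. The output mirrors the SRT row of the table above. It is not a complete SRT file, which would also need cue numbers and a `-->` separator between the timestamps.

```python
MIN_DURATION_MS = 700          # events shorter than this are not shown
EXCLUDED_TYPES = {"Silence"}   # Silence is never written to closed captions


def srt_timestamp(seconds: float) -> str:
    """Format seconds as HH:MM:SS,mmm (SRT uses a comma before the milliseconds)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def caption_lines(events):
    """Yield one caption line per (type, start_s, end_s) event that passes both rules."""
    for effect_type, start, end in events:
        if effect_type in EXCLUDED_TYPES:
            continue
        # Compare in whole milliseconds to avoid floating-point edge cases.
        if round((end - start) * 1000) < MIN_DURATION_MS:
            continue
        yield f"{srt_timestamp(start)} {srt_timestamp(end)} [{effect_type}]"


if __name__ == "__main__":
    sample = [("Silence", 0.0, 5.0),               # dropped: Silence
              ("Dog", 10.0, 10.5),                 # dropped: 500 ms < 700 ms
              ("Gunshot or explosion", 0.0, 3.671)]
    # Prints: 00:00:00,000 00:00:03,671 [Gunshot or explosion]
    for line in caption_lines(sample):
        print(line)
```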

Adding audio effects in closed caption files

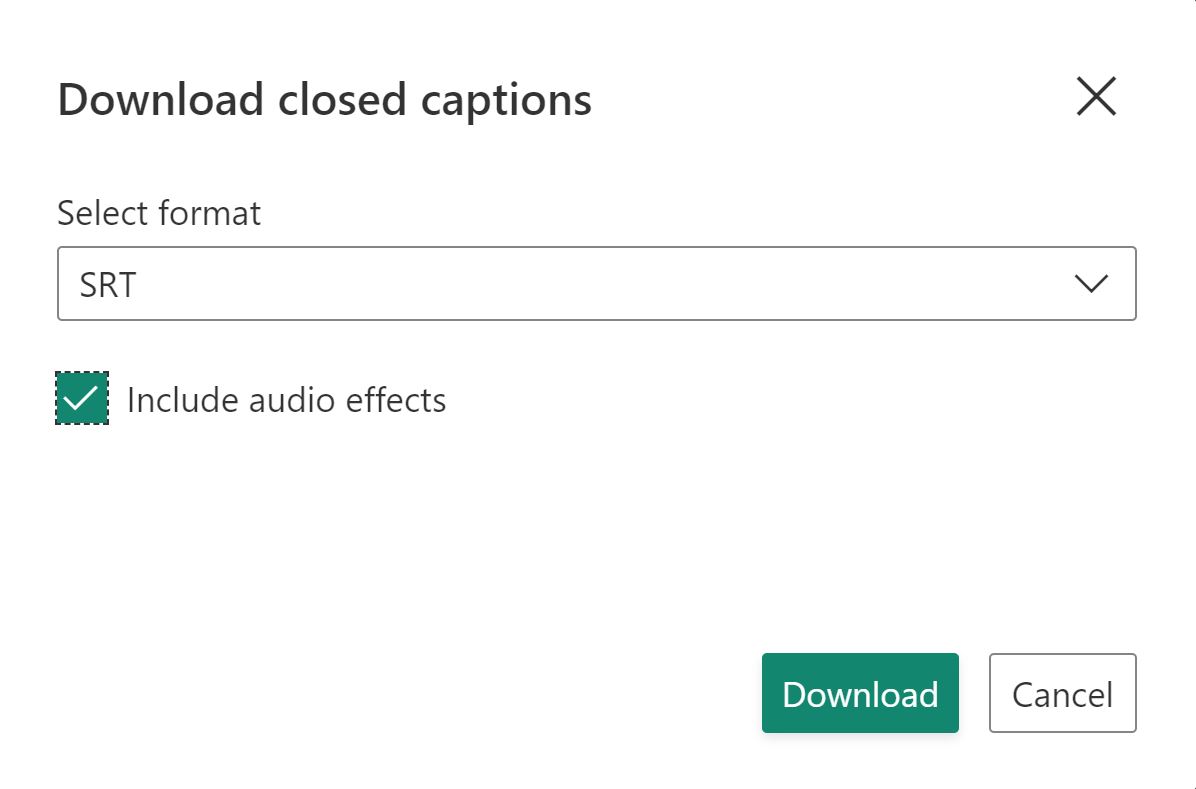

You can add audio effects to the closed caption files that Azure AI Video Indexer supports in two ways. Through the Get video captions API, set the includeAudioEffects parameter to true. Through the Azure AI Video Indexer website (videoindexer.ai), select Download -> Closed Captions -> Include Audio Effects.
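The following minimal Python sketch builds a Get video captions request URL with `includeAudioEffects` set. The API host, the route, and the accepted `format` values are assumptions to check against the Video Indexer API reference. The function name and identifiers are hypothetical.

```python
from urllib.parse import quote, urlencode

# Assumption: public Video Indexer API host and Get Video Captions route.
API_ROOT = "https://api.videoindexer.ai"


def build_captions_url(location: str, account_id: str, video_id: str,
                       access_token: str, caption_format: str = "Srt") -> str:
    """Build a Get video captions URL that asks for audio effects in the output."""
    query = urlencode({
        "format": caption_format,        # assumption: Vtt, Ttml, Srt, Txt, or Csv
        "includeAudioEffects": "true",   # lowercase string, not Python's True
        "accessToken": access_token,
    })
    path = (f"{quote(location)}/Accounts/{quote(account_id)}"
            f"/Videos/{quote(video_id)}/Captions")
    return f"{API_ROOT}/{path}?{query}"


if __name__ == "__main__":
    # Hypothetical identifiers; replace them with your own values.
    print(build_captions_url("trial", "my-account-id", "my-video-id", "my-token"))
```

The string `"true"` is passed explicitly because `urlencode` would render Python's `True` as `True`. To fetch the captions, send a GET request to the resulting URL, for example with `urllib.request.urlopen`.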

Note

When using update transcript from closed caption files or update custom language model from closed caption files, audio effects included in those files are ignored.

Limitations and assumptions

- The audio effects are detected when present in nonspeech segments only.

- The model is optimized for cases where there's no loud background music.

- Low-quality audio may affect the detection results.

- The minimum nonspeech section duration is 2 seconds.

- Music characterized by repetitive and/or linearly scanned frequencies can be mistakenly classified as Alarm or siren.

- The model is currently optimized for natural, nonsynthetic gunshot and explosion sounds.

- Door knocks and door slams can sometimes be mistakenly labeled as Gunshot or explosion.

- Prolonged shouting and sounds of human physical effort can sometimes be mistakenly detected.

- A group of people laughing can sometimes be classified as both Laughter and Crowd reactions.
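A group of people laughing can be labeled as both Laughter and Crowd reactions. When that happens, you might want to find where the two labels overlap before deciding which one to keep. The following is a minimal Python sketch of a pairwise interval intersection. It assumes you've already converted each type's instances to `(start_s, end_s)` tuples, for example with a timestamp parser. The function name is hypothetical, and the quadratic loop is fine for the handful of instances a video typically has.

```python
def overlaps(a, b):
    """Return the intersections of two lists of (start_s, end_s) intervals."""
    result = []
    for a_start, a_end in a:
        for b_start, b_end in b:
            start, end = max(a_start, b_start), min(a_end, b_end)
            if start < end:          # strictly positive overlap only
                result.append((start, end))
    return result


if __name__ == "__main__":
    laughter = [(10.0, 14.0)]
    crowd_reactions = [(12.0, 20.0), (30.0, 31.0)]
    print(overlaps(laughter, crowd_reactions))
```

In this example, Laughter spans 10 to 14 s and the first CrowdReactions instance spans 12 to 20 s, so the only shared range is 12 to 14 s.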
