Get data from Azure storage

Data ingestion is the process used to load data from one or more sources into a table in Azure Data Explorer. Once ingested, the data becomes available for query. In this article, you learn how to get data from Azure storage (ADLS Gen2 container, blob container, or individual blobs) into either a new or existing table.

Ingestion can be done as a one-time operation, or as a continuous method. Continuous ingestion can be configured via portal only.

For general information on data ingestion, see Azure Data Explorer data ingestion overview.

Prerequisites

- A Microsoft account or a Microsoft Entra user identity. An Azure subscription isn't required.

- Sign-in to the Azure Data Explorer web UI.

- An Azure Data Explorer cluster and database. Create a cluster and database.

- A storage account.

Get data

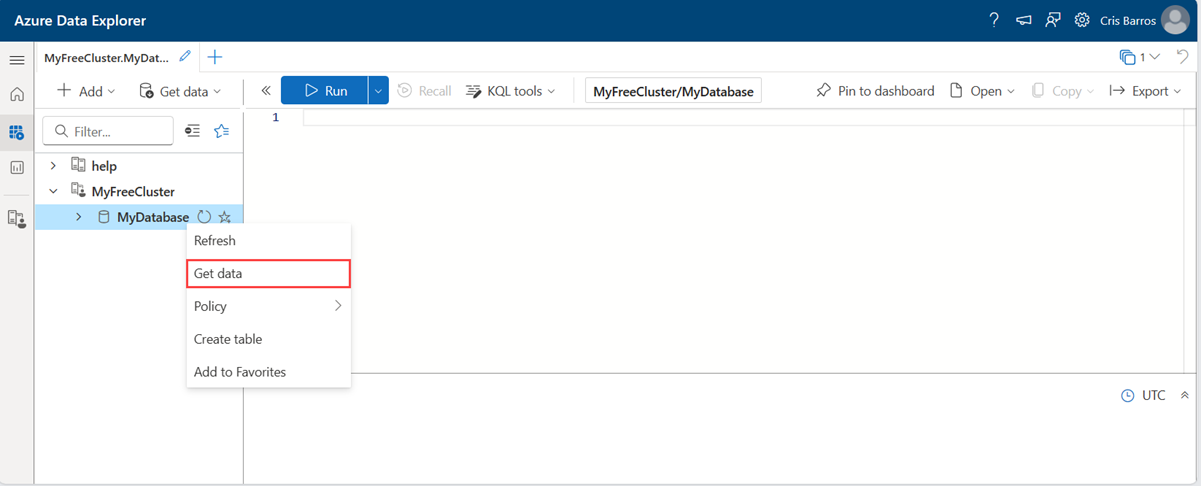

From the left menu, select Query.

Right-click on the database where you want to ingest the data. Select Get data.

Source

In the Get data window, the Source tab is selected.

Select the data source from the available list. In this example, you're ingesting data from Azure storage.

Configure

Select a target database and table. If you want to ingest data into a new table, select + New table and enter a table name.

Note

Table names can be up to 1024 characters including spaces, alphanumeric, hyphens, and underscores. Special characters aren't supported.

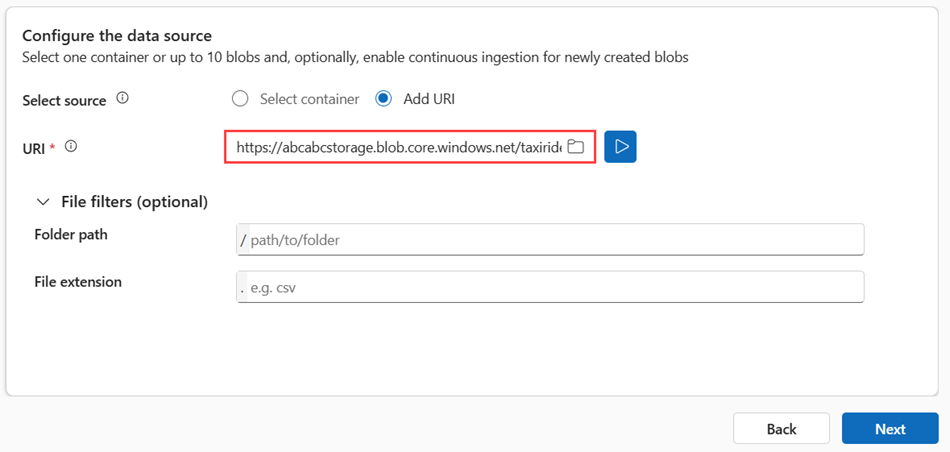

To add your source, select Select container or Add URI.

If you selected Select container, fill in the following fields:

Setting Field description Subscription The subscription ID where the storage account is located. Storage account The name that identifies your storage account. Container The storage container you want to ingest. File filters (optional) Folder path Filters data to ingest files with a specific folder path. File extension Filters data to ingest files with a specific file extension only. If you selected Add URI, paste your storage connection string for a blob container or individual files in the URI field, and then select +.

Note

- You can add up to 10 individual blobs. Each blob can be a max of 1 GB uncompressed.

- You can ingest up to 5000 blobs from a single container.

Select Next

Inspect

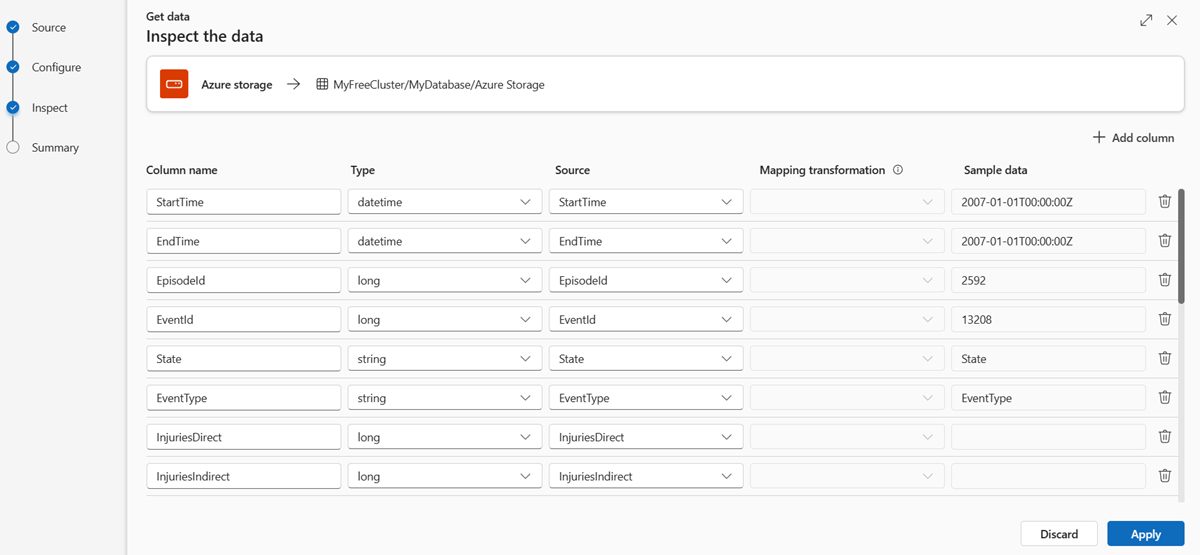

The Inspect tab opens with a preview of the data.

To complete the ingestion process, select Finish.

Optionally:

- Select Command viewer to view and copy the automatic commands generated from your inputs.

- Use the Schema definition file dropdown to change the file that the schema is inferred from.

- Change the automatically inferred data format by selecting the desired format from the dropdown. See Data formats supported by Azure Data Explorer for ingestion.

- Edit columns.

- Explore Advanced options based on data type.

Edit columns

Note

- For tabular formats (CSV, TSV, PSV), you can't map a column twice. To map to an existing column, first delete the new column.

- You can't change an existing column type. If you try to map to a column having a different format, you may end up with empty columns.

The changes you can make in a table depend on the following parameters:

- Table type is new or existing

- Mapping type is new or existing

| Table type | Mapping type | Available adjustments |

|---|---|---|

| New table | New mapping | Rename column, change data type, change data source, mapping transformation, add column, delete column |

| Existing table | New mapping | Add column (on which you can then change data type, rename, and update) |

| Existing table | Existing mapping | none |

Mapping transformations

Some data format mappings (Parquet, JSON, and Avro) support simple ingest-time transformations. To apply mapping transformations, create or update a column in the Edit columns window.

Mapping transformations can be performed on a column of type string or datetime, with the source having data type int or long. Supported mapping transformations are:

- DateTimeFromUnixSeconds

- DateTimeFromUnixMilliseconds

- DateTimeFromUnixMicroseconds

- DateTimeFromUnixNanoseconds

Advanced options based on data type

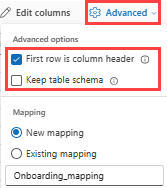

Tabular (CSV, TSV, PSV):

If you're ingesting tabular formats in an existing table, you can select Advanced > Keep current table schema. Tabular data doesn't necessarily include the column names that are used to map source data to the existing columns. When this option is checked, mapping is done by-order, and the table schema remains the same. If this option is unchecked, new columns are created for incoming data, regardless of data structure.

To use the first row as column names, select Advanced > First row is column header.

JSON:

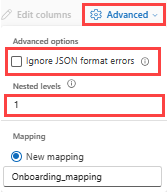

To determine column division of JSON data, select Advanced > Nested levels, from 1 to 100.

If you select Advanced > Ignore data format errors, the data is ingested in JSON format. If you leave this check box unselected, the data is ingested in multijson format.

Summary

In the Data preparation window, all three steps are marked with green check marks when data ingestion finishes successfully. You can view the commands that were used for each step, or select a card to query, visualize, or drop the ingested data.

Related content

Feedback

Coming soon: Throughout 2024 we will be phasing out GitHub Issues as the feedback mechanism for content and replacing it with a new feedback system. For more information see: https://aka.ms/ContentUserFeedback.

Submit and view feedback for