Events

Mar 31, 11 PM - Apr 2, 11 PM

The biggest Fabric, Power BI, and SQL learning event. March 31 – April 2. Use code FABINSIDER to save $400.

Register todayThis browser is no longer supported.

Upgrade to Microsoft Edge to take advantage of the latest features, security updates, and technical support.

APPLIES TO:  Azure Data Factory

Azure Data Factory  Azure Synapse Analytics

Azure Synapse Analytics

Tip

Try out Data Factory in Microsoft Fabric, an all-in-one analytics solution for enterprises. Microsoft Fabric covers everything from data movement to data science, real-time analytics, business intelligence, and reporting. Learn how to start a new trial for free!

Schema drift is the case where your sources often change metadata. Fields, columns, and, types can be added, removed, or changed on the fly. Without handling for schema drift, your data flow becomes vulnerable to upstream data source changes. Typical ETL patterns fail when incoming columns and fields change because they tend to be tied to those source names.

To protect against schema drift, it's important to have the facilities in a data flow tool to allow you, as a Data Engineer, to:

Azure Data Factory natively supports flexible schemas that change from execution to execution so that you can build generic data transformation logic without the need to recompile your data flows.

You need to make an architectural decision in your data flow to accept schema drift throughout your flow. When you do this, you can protect against schema changes from the sources. However, you'll lose early-binding of your columns and types throughout your data flow. Azure Data Factory treats schema drift flows as late-binding flows, so when you build your transformations, the drifted column names won't be available to you in the schema views throughout the flow.

This video provides an introduction to some of the complex solutions that you can build easily in Azure Data Factory or Synapse Analytics pipelines with data flow's schema drift feature. In this example, we build reusable patterns based on flexible database schemas:

Columns coming into your data flow from your source definition are defined as "drifted" when they are not present in your source projection. You can view your source projection from the projection tab in the source transformation. When you select a dataset for your source, the service will automatically take the schema from the dataset and create a projection from that dataset schema definition.

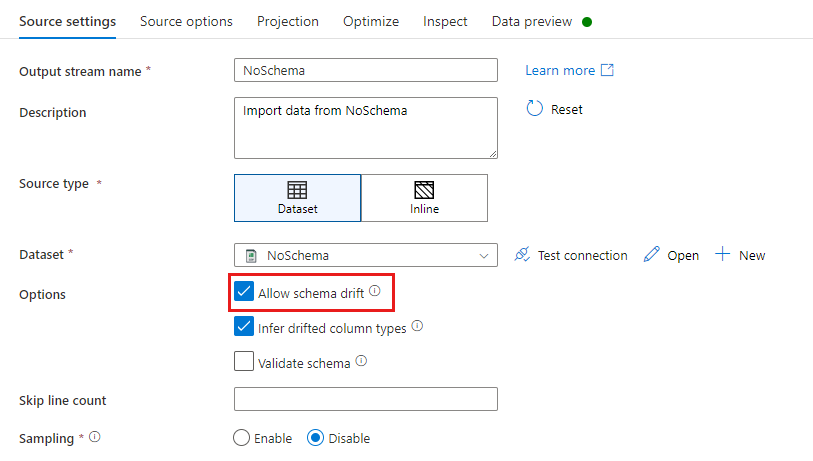

In a source transformation, schema drift is defined as reading columns that aren't defined in your dataset schema. To enable schema drift, check Allow schema drift in your source transformation.

When schema drift is enabled, all incoming fields are read from your source during execution and passed through the entire flow to the Sink. By default, all newly detected columns, known as drifted columns, arrive as a string data type. If you wish for your data flow to automatically infer data types of drifted columns, check Infer drifted column types in your source settings.

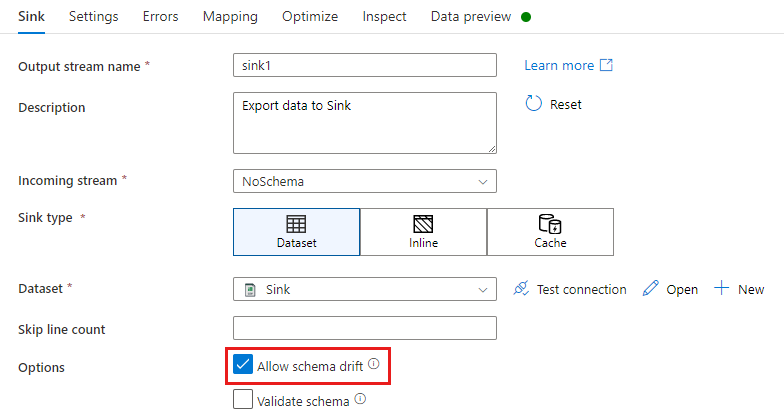

In a sink transformation, schema drift is when you write additional columns on top of what is defined in the sink data schema. To enable schema drift, check Allow schema drift in your sink transformation.

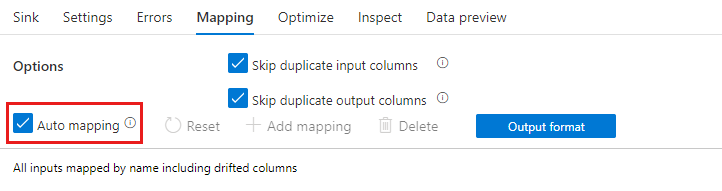

If schema drift is enabled, make sure the Auto-mapping slider in the Mapping tab is turned on. With this slider on, all incoming columns are written to your destination. Otherwise you must use rule-based mapping to write drifted columns.

When your data flow has drifted columns, you can access them in your transformations with the following methods:

byPosition and byName expressions to explicitly reference a column by name or position number.For more information on how to implement column patterns, see Column patterns in mapping data flow.

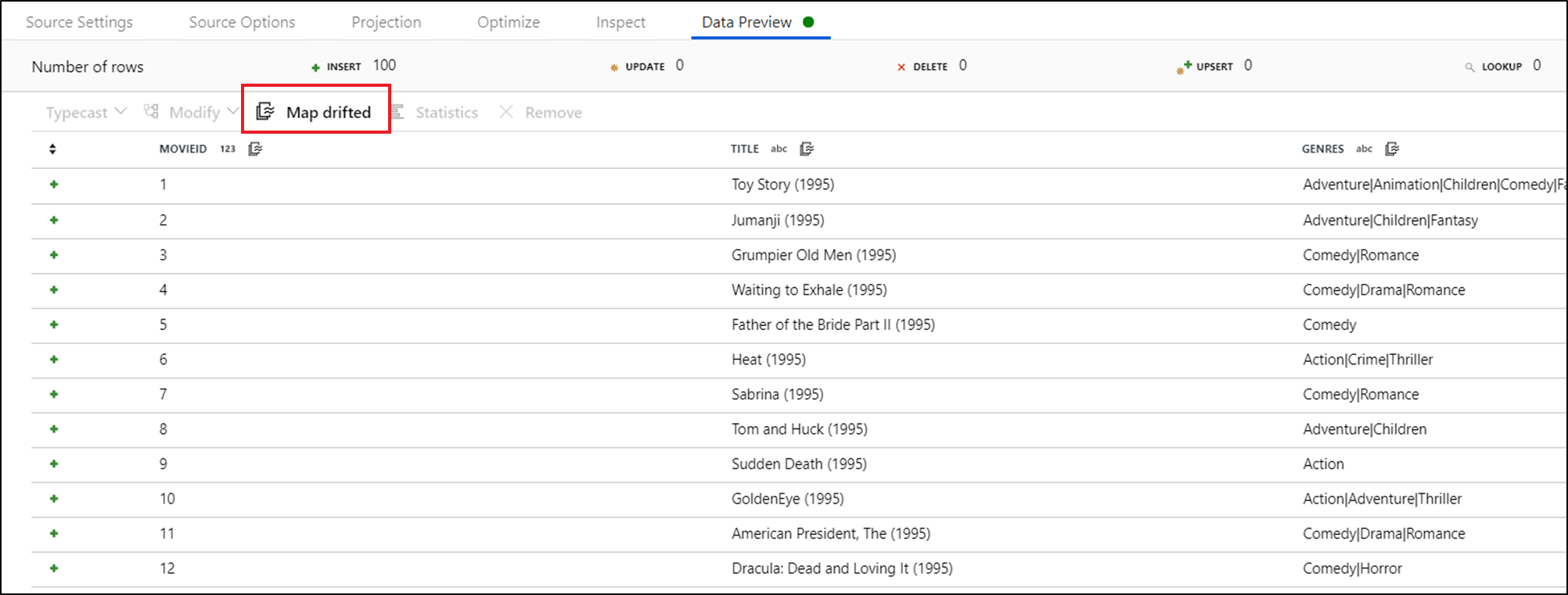

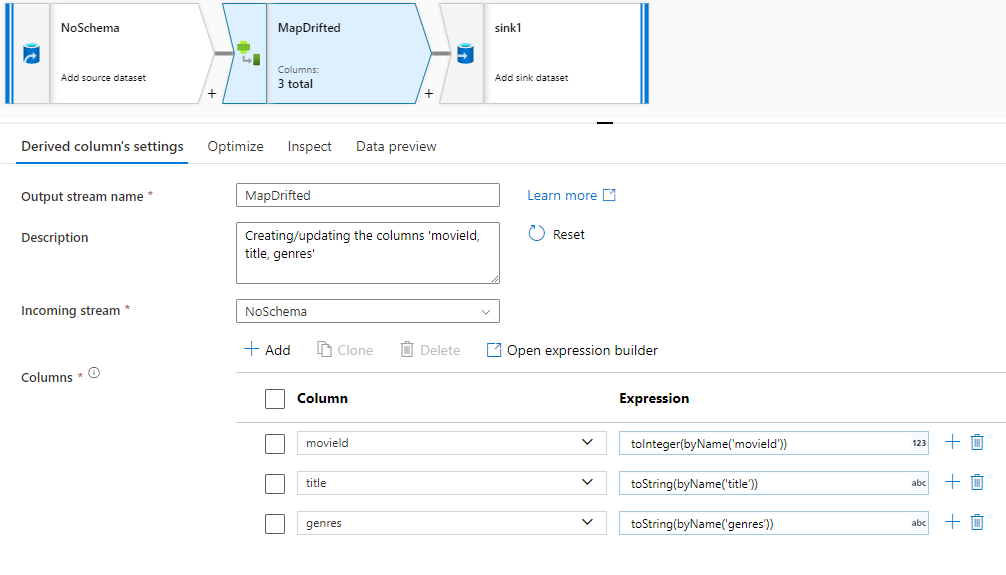

To explicitly reference drifted columns, you can quickly generate mappings for these columns via a data preview quick action. Once debug mode is on, go to the Data Preview tab and click Refresh to fetch a data preview. If data factory detects that drifted columns exist, you can click Map Drifted and generate a derived column that allows you to reference all drifted columns in schema views downstream.

In the generated Derived Column transformation, each drifted column is mapped to its detected name and data type. In the above data preview, the column 'movieId' is detected as an integer. After Map Drifted is clicked, movieId is defined in the Derived Column as toInteger(byName('movieId')) and included in schema views in downstream transformations.

In the Data Flow Expression Language, you'll find additional facilities for column patterns and schema drift including "byName" and "byPosition".

Events

Mar 31, 11 PM - Apr 2, 11 PM

The biggest Fabric, Power BI, and SQL learning event. March 31 – April 2. Use code FABINSIDER to save $400.

Register todayTraining

Module

Code-free transformation at scale with Azure Data Factory - Training

Perform code-free transformation at scale with Azure Data Factory or Azure Synapse Pipeline

Certification

Microsoft Certified: Azure Data Engineer Associate - Certifications

Demonstrate understanding of common data engineering tasks to implement and manage data engineering workloads on Microsoft Azure, using a number of Azure services.

Documentation

Data Flow activity - Azure Data Factory & Azure Synapse

How to execute data flows from inside an Azure Data Factory or Azure Synapse Analytics pipeline.

Mapping data flows - Azure Data Factory

An overview of mapping data flows in Azure Data Factory

Mapping data flow Debug Mode - Azure Data Factory & Azure Synapse

Start an interactive debug session when building data flows with Azure Data Factory or Synapse Analytics.