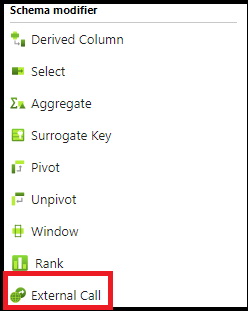

External call transformation in mapping data flows

APPLIES TO: Azure Data Factory, Azure Synapse Analytics

Tip

Try out Data Factory in Microsoft Fabric, an all-in-one analytics solution for enterprises. Microsoft Fabric covers everything from data movement to data science, real-time analytics, business intelligence, and reporting. Learn how to start a new trial for free!

Data flows are available in both Azure Data Factory and Azure Synapse Pipelines. This article applies to mapping data flows. If you're new to transformations, see the introductory article Transform data using a mapping data flow.

The external call transformation lets data engineers call external REST endpoints row by row to add custom or third-party results to their data flow streams.
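As a mental model only, the row-by-row behavior can be pictured as a map over the incoming stream. The Python sketch below is not how the service is implemented; `external_call`, `call_endpoint`, `fake_endpoint`, and the `response` column name are all hypothetical names chosen for illustration.

```python
from typing import Callable, Iterable, Iterator

Row = dict


def external_call(rows: Iterable[Row], call_endpoint: Callable[[Row], dict]) -> Iterator[Row]:
    """Yield each input row extended with the result of one endpoint call."""
    for row in rows:
        result = call_endpoint(row)         # one request per incoming row
        yield {**row, "response": result}   # original columns plus the call result


def fake_endpoint(row: Row) -> dict:
    # Stand-in for a real REST call so the sketch runs without a network.
    return {"name": f"todo-{row['id']}"}


enriched = list(external_call([{"id": "1"}, {"id": "2"}], fake_endpoint))
```

Each output row keeps its input columns and gains the endpoint's result, which is what lets downstream transformations use the external data.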

Configuration

In the external call transformation configuration panel, first pick the type of external endpoint to connect to. Next, map the incoming columns. Finally, define an output data structure for downstream transformations to consume.

Settings

Choose the inline dataset type and associated linked service. Today, only REST is supported, although SQL stored procedures and other linked service types will become available as well. See the REST source configuration for explanations of the settings properties.

Mapping

You can choose auto-mapping to pass all input columns to the endpoint. Alternatively, you can select the columns manually and rename the ones that are sent to the target endpoint.
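The difference between the two mapping modes can be sketched in Python. This is an illustration under assumptions, not the service's logic: `build_payload`, the sample row, and the renamed column names are hypothetical.

```python
def build_payload(row, mapping=None):
    """Return the columns that would be sent to the endpoint.

    mapping=None models auto-mapping: every input column is passed through.
    Otherwise mapping is {input_column: name_sent_to_endpoint}.
    """
    if mapping is None:
        return dict(row)
    return {sent_name: row[col] for col, sent_name in mapping.items()}


row = {"id": "1", "title": "Buy milk", "internal_flag": "x"}

auto_payload = build_payload(row)                                       # all columns
manual_payload = build_payload(row, {"id": "todoId", "title": "name"})  # subset, renamed
```

Manual mapping is useful when the endpoint expects different field names than the stream uses, or when some columns should not leave the data flow.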

Output

This is where you'll define the data structure for the output of the external call. You can define the structure for the body as well as choose how to store the headers and the status returned from the external call.

If you choose to store the body, headers, and status, first choose a column name for each so that they can be consumed by downstream data transformations.
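A rough Python picture of this step follows: the three parts of the response land in the row under whatever column names you chose. The function name, default column names, sample values, and the integer type used for the status are assumptions made for the sketch.

```python
def attach_response(row, body, headers, status,
                    body_col="body", headers_col="headers", status_col="status"):
    """Return a copy of the row with the response stored under the chosen column names."""
    out = dict(row)
    out[body_col] = body
    out[headers_col] = headers
    out[status_col] = status
    return out


result = attach_response(
    {"id": "1"},
    body={"name": "Buy milk"},
    headers={"Content-Type": "application/json"},
    status=200,                      # status type is assumed here
    body_col="todoBody",
    status_col="httpStatus",
)

# A downstream step can then refer to the columns by name.
succeeded = [r for r in [result] if r["httpStatus"] == 200]
```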

You can define the body data structure manually using ADF data flow syntax. Alternatively, to have ADF detect the column names and data types for the body, select "import projection" and let ADF read the schema from the external call's output. Here is an example schema definition, as produced from a weather REST API GET call:

    ({@context} as string[],
        geometry as (coordinates as string[][][],
            type as string),
        properties as (elevation as (unitCode as string,
                value as string),
            forecastGenerator as string,
            generatedAt as string,
            periods as (detailedForecast as string, endTime as string, icon as string, isDaytime as string, name as string, number as string, shortForecast as string, startTime as string, temperature as string, temperatureTrend as string, temperatureUnit as string, windDirection as string, windSpeed as string)[],
            units as string,
            updateTime as string,
            updated as string,
            validTimes as string),
        type as string)
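To make the nesting in this schema concrete, the Python sketch below walks a response of the same shape and produces one flat row per element of `properties.periods`. The sample values are invented for illustration, and `unroll_periods` is a hypothetical helper. In a data flow, this kind of unrolling would be done by a downstream transformation.

```python
# Invented sample data that follows the shape of the schema above.
body = {
    "properties": {
        "periods": [
            {"name": "Tonight", "temperature": "54", "temperatureUnit": "F"},
            {"name": "Friday", "temperature": "71", "temperatureUnit": "F"},
        ]
    }
}


def unroll_periods(body):
    """Return one flat row per element of properties.periods."""
    return [
        {
            "name": p["name"],
            # The schema types temperature as string, so cast it for numeric use.
            "temperature": int(p["temperature"]),
            "unit": p["temperatureUnit"],
        }
        for p in body["properties"]["periods"]
    ]


rows = unroll_periods(body)
```

Note that every leaf in the detected schema is a string, so numeric fields such as `temperature` need an explicit cast before arithmetic or comparisons.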

Examples

Samples including data flow script

    source(output(
            id as string
        ),
        allowSchemaDrift: true,
        validateSchema: false,
        ignoreNoFilesFound: false) ~> source1
    Filter1 call(mapColumn(
            id
        ),
        skipDuplicateMapInputs: false,
        skipDuplicateMapOutputs: false,
        output(
            headers as [string,string],
            body as (name as string)
        ),
        allowSchemaDrift: true,
        store: 'restservice',
        format: 'rest',
        timeout: 30,
        httpMethod: 'POST',
        entity: 'api/Todo/',
        requestFormat: ['type' -> 'json'],
        responseFormat: ['type' -> 'json', 'documentForm' -> 'documentPerLine']) ~> ExternalCall1
    source1 filter(toInteger(id)==1) ~> Filter1
    ExternalCall1 sink(allowSchemaDrift: true,
        validateSchema: false,
        skipDuplicateMapInputs: true,
        skipDuplicateMapOutputs: true,
        store: 'cache',
        format: 'inline',
        output: false,
        saveOrder: 1) ~> sink1
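For readers more familiar with general-purpose code, the sample above roughly corresponds to the following Python sketch: keep the rows whose `id` is 1, then build one JSON `POST` request per remaining row against the `api/Todo/` entity. `BASE_URL` is a hypothetical placeholder, because the base address would come from the REST linked service, which the script does not show. The requests are only constructed here, not sent.

```python
import json
import urllib.request

# Hypothetical base address; the script does not show the linked service URL.
BASE_URL = "https://example.com/"


def build_request(row):
    """Build one JSON POST request carrying the mapped 'id' column."""
    payload = json.dumps({"id": row["id"]}).encode("utf-8")
    return urllib.request.Request(
        BASE_URL + "api/Todo/",                         # entity: 'api/Todo/'
        data=payload,                                   # requestFormat: json
        headers={"Content-Type": "application/json"},
        method="POST",                                  # httpMethod: 'POST'
    )


rows = [{"id": "1"}, {"id": "2"}]
selected = [r for r in rows if int(r["id"]) == 1]       # mirrors filter(toInteger(id)==1)
requests_to_send = [build_request(r) for r in selected]

# Sending would look like urllib.request.urlopen(req, timeout=30),
# assuming the script's timeout value is expressed in seconds.
```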

Data flow script

    ExternalCall1 sink(allowSchemaDrift: true,
        validateSchema: false,
        skipDuplicateMapInputs: true,
        skipDuplicateMapOutputs: true,
        store: 'cache',
        format: 'inline',
        output: false,
        saveOrder: 1) ~> sink1

Related content

- Use the Flatten transformation to unroll arrays in hierarchical data, such as a JSON response body, into rows.

- Use the Derived column transformation to transform rows.

- See the REST source for more information on REST settings.
