Share information between tasks in an Azure Databricks job

You can use task values to pass arbitrary parameters between tasks in an Azure Databricks job. You pass task values using the taskValues subutility in Databricks Utilities. The taskValues subutility provides a simple API that allows tasks to output values that can be referenced in subsequent tasks, making it easier to create more expressive workflows. For example, you can communicate identifiers or metrics, such as information about the evaluation of a machine learning model, between different tasks within a job run. Each task can set and get multiple task values. Task values can be set and retrieved in Python notebooks.

Note

You can now use dynamic value references in your notebooks to reference task values set in upstream tasks. For example, to reference the value with the key name set by the task Get_user_data, use {{tasks.Get_user_data.values.name}}. Because they can be used with multiple task types, Databricks recommends using dynamic value references instead of dbutils.jobs.taskValues.get to retrieve the task value programmatically.
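A dynamic value reference is just a string of the form {{tasks.<task_name>.values.<value_name>}}. The sketch below builds such a string and places it in a notebook task's parameters. It assumes you define the job with a Jobs API 2.1 style JSON payload. The notebook path and the user_name parameter name are hypothetical. When the job runs, Databricks resolves the reference. Inside the Analyze_user_data notebook you would then read it like any other notebook parameter, for example with dbutils.widgets.get("user_name").

```python
import json


def task_value_ref(task_name: str, value_name: str) -> str:
    """Build a dynamic value reference such as {{tasks.Get_user_data.values.name}}."""
    return "{{tasks." + task_name + ".values." + value_name + "}}"


# Hypothetical task definition: Analyze_user_data depends on Get_user_data and
# receives that task's "name" value through a notebook parameter called "user_name".
analyze_task = {
    "task_key": "Analyze_user_data",
    "depends_on": [{"task_key": "Get_user_data"}],
    "notebook_task": {
        # Hypothetical workspace path; replace with your own notebook.
        "notebook_path": "/Workspace/Users/someone@example.com/Analyze_user_data",
        "base_parameters": {
            "user_name": task_value_ref("Get_user_data", "name"),
        },
    },
}

print(json.dumps(analyze_task, indent=2))
```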

Using task values

The taskValues subutility provides two commands: dbutils.jobs.taskValues.set() to set a task value and dbutils.jobs.taskValues.get() to retrieve one. Suppose you have two notebook tasks, Get_user_data and Analyze_user_data, and want to pass a user’s name and age from the Get_user_data task to the Analyze_user_data task. The following example sets the user’s name and age in the Get_user_data task:

dbutils.jobs.taskValues.set(key = "name", value = "Some User")

dbutils.jobs.taskValues.set(key = "age", value = 30)

key is the name of the task value key. This name must be unique to the task.

value is the value for this task value’s key. This command must be able to represent the value internally in JSON format. The size of the JSON representation of the value cannot exceed 48 KiB.
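Because set() must be able to store the value as JSON within 48 KiB, it can help to check a value before setting it. The helper below, check_task_value, is hypothetical and only approximates the service-side check. It assumes that the UTF-8 length of Python's json.dumps output is close to the size Databricks measures. It also rejects NaN and Infinity, which are not valid JSON; whether Databricks accepts them is not stated here.

```python
import json

MAX_TASK_VALUE_BYTES = 48 * 1024  # 48 KiB limit on the JSON representation


def check_task_value(value):
    """Hypothetical pre-check: raise ValueError if `value` cannot be a task value.

    Returns the JSON text so the caller can inspect what would be stored.
    """
    try:
        # allow_nan=False rejects NaN/Infinity, which standard JSON does not allow.
        encoded = json.dumps(value, allow_nan=False)
    except (TypeError, ValueError) as exc:
        raise ValueError(f"task value is not JSON-serializable: {exc}") from exc
    size = len(encoded.encode("utf-8"))
    if size > MAX_TASK_VALUE_BYTES:
        raise ValueError(
            f"task value is {size} bytes as JSON; the limit is {MAX_TASK_VALUE_BYTES}"
        )
    return encoded
```

In the Get_user_data notebook you might call check_task_value(30) before dbutils.jobs.taskValues.set(key = "age", value = 30). Values such as Python sets or arbitrary objects fail early with a readable message instead of failing inside the job.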

The following example then gets the values in the Analyze_user_data task:

dbutils.jobs.taskValues.get(taskKey = "Get_user_data", key = "age", default = 42, debugValue = 0)

dbutils.jobs.taskValues.get(taskKey = "Get_user_data", key = "name", default = "Jane Doe")

taskKey is the name of the job task that set the value. If the command cannot find this task, a ValueError is raised.

key is the name of the task value’s key. If the command cannot find this task value’s key, a ValueError is raised (unless default is specified).

default is an optional value that is returned if key cannot be found. default cannot be None.

debugValue is an optional value that is returned if you try to get the task value from within a notebook that is running outside of a job. This is useful during debugging, when you run your notebook manually and want it to return a value instead of raising a TypeError, which is the default behavior. debugValue cannot be None.
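To unit-test notebook logic outside Databricks, you can substitute an in-memory fake for dbutils.jobs.taskValues. The FakeTaskValues class below is hypothetical. It mimics only the get() behavior described above (ValueError for an unknown task or a missing key without default, debugValue or TypeError outside a job). It is not the Databricks implementation, and it does not enforce the rule that default and debugValue cannot be None.

```python
_MISSING = object()  # sentinel: distinguishes "argument not passed" from any real value


class FakeTaskValues:
    """Hypothetical in-memory stand-in for dbutils.jobs.taskValues (local tests only)."""

    def __init__(self, tasks, in_job=True):
        self.in_job = in_job        # False simulates running the notebook outside a job
        self.current_task = None    # task key whose values set() records
        self._store = {task: {} for task in tasks}

    def set(self, key, value):
        # Record the value under the task that is currently "running".
        self._store[self.current_task][key] = value

    def get(self, taskKey, key, default=_MISSING, debugValue=_MISSING):
        if not self.in_job:
            # Outside a job, return debugValue; without one, raise TypeError.
            if debugValue is _MISSING:
                raise TypeError("not running in a job and no debugValue was given")
            return debugValue
        if taskKey not in self._store:
            raise ValueError(f"unknown task: {taskKey!r}")
        values = self._store[taskKey]
        if key in values:
            return values[key]
        if default is not _MISSING:
            return default
        raise ValueError(f"task {taskKey!r} has no value for key {key!r}")
```

You could pass an instance of the fake to your own functions wherever they would otherwise use dbutils.jobs.taskValues, and switch between the two at the notebook boundary.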

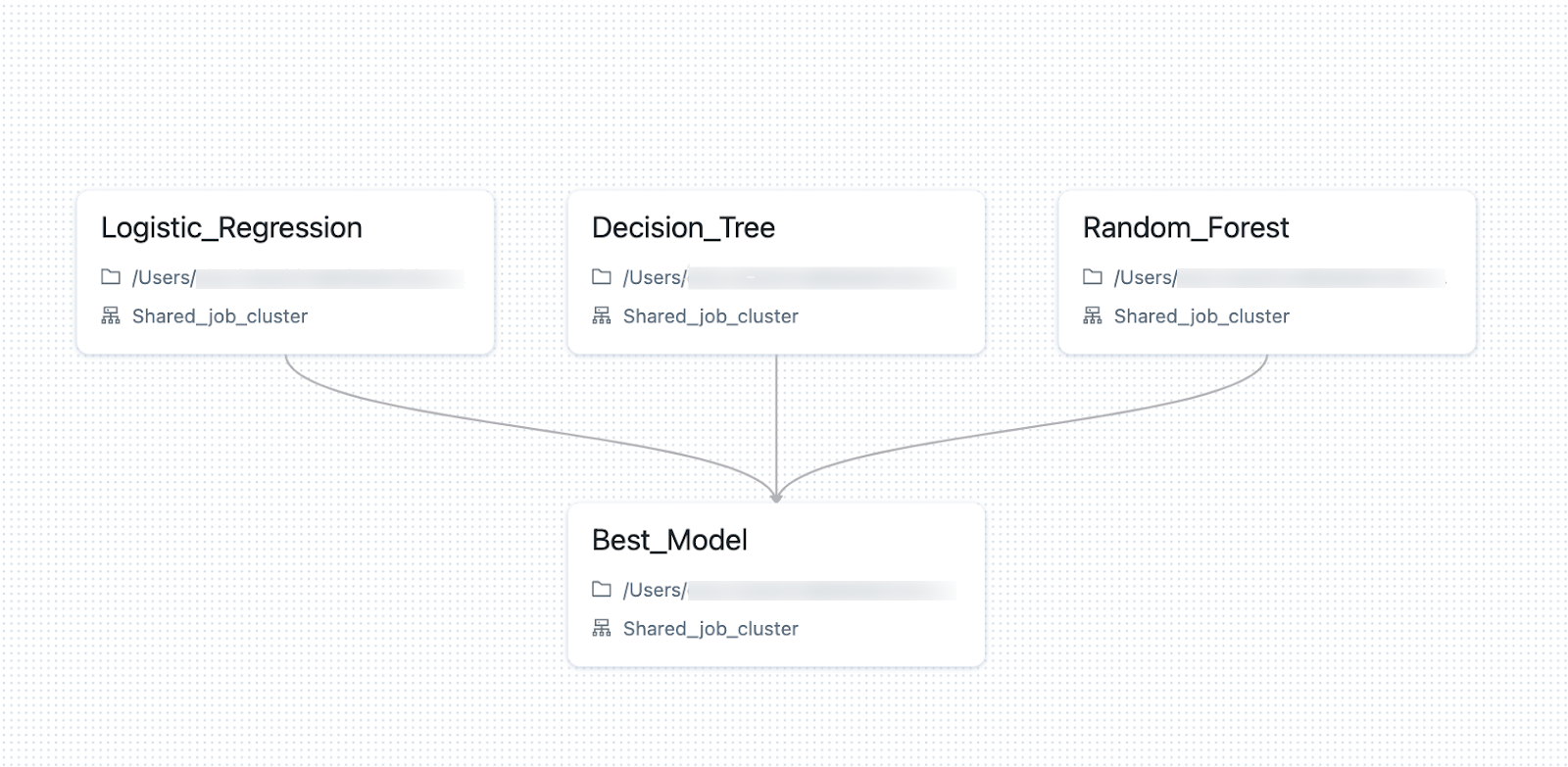

As a more complex example of sharing context between tasks, suppose that you have an application that includes several machine learning models to predict an individual’s income given various personal attributes. The models are run by three tasks named Logistic_Regression, Decision_Tree, and Random_Forest, and a fourth task, Best_Model, determines the best model to use based on the output of those three tasks.

The accuracy for each model (how well the classifier predicts income) is passed in a task value to determine the best performing algorithm. For example, the logistic regression notebook associated with the Logistic_Regression task includes the following command:

dbutils.jobs.taskValues.set(key = "model_performance", value = result)
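The article does not show how result is computed. The following minimal sketch assumes it is classification accuracy on held-out data. It uses a small hand-written helper rather than a library such as scikit-learn, and the labels are hypothetical. Casting to a built-in float keeps the value JSON-serializable even when a metric comes back as a NumPy scalar such as numpy.float32.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Hypothetical held-out labels (1 = income above a threshold) and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

# Built-in float so the task value can be represented as JSON.
result = float(accuracy(y_true, y_pred))

# In the Logistic_Regression notebook you would then publish it:
# dbutils.jobs.taskValues.set(key = "model_performance", value = result)
```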

Each model task sets a value for the model_performance key. The Best_Model task reads the value from each task and uses those values to determine the optimal model. The following example reads the value set by the Logistic_Regression task:

logistic_regression = dbutils.jobs.taskValues.get(taskKey = "Logistic_Regression", key = "model_performance")
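The Best_Model task repeats this read for all three model tasks and compares the results. The sketch below assumes that a higher model_performance value is better. select_best_model is a hypothetical helper that takes any callable mapping a task key to its score, so the selection logic can be tested without dbutils.

```python
MODEL_TASKS = ("Logistic_Regression", "Decision_Tree", "Random_Forest")


def select_best_model(get_performance, task_keys=MODEL_TASKS):
    """Return (task_key, score) for the task with the highest model_performance.

    Assumes higher scores are better; on a tie, the first task in task_keys wins.
    """
    scores = {task_key: get_performance(task_key) for task_key in task_keys}
    best_task = max(scores, key=scores.get)
    return best_task, scores[best_task]


# In the Best_Model notebook:
# best_task, best_score = select_best_model(
#     lambda task_key: dbutils.jobs.taskValues.get(taskKey = task_key, key = "model_performance")
# )
```

Passing a getter instead of calling dbutils directly keeps the comparison logic separate from Databricks, so you can check it with a plain dictionary of scores.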

View task values

To view a task value after a task runs, go to the task run history for the task. Task values are displayed in the Output panel.
