Deploy and score a machine learning model by using an online endpoint

APPLIES TO:

Azure CLI ml extension v2 (current)


Python SDK azure-ai-ml v2 (current)


In this article, you'll learn to deploy your model to an online endpoint for use in real-time inferencing. You'll begin by deploying a model on your local machine to debug any errors. Then, you'll deploy and test the model in Azure. You'll also learn to view the deployment logs and monitor the service-level agreement (SLA). By the end of this article, you'll have a scalable HTTPS/REST endpoint that you can use for real-time inference.

Online endpoints are endpoints that are used for real-time inferencing. There are two types of online endpoints: managed online endpoints and Kubernetes online endpoints. For more information on endpoints and differences between managed online endpoints and Kubernetes online endpoints, see What are Azure Machine Learning endpoints?.

Managed online endpoints help to deploy your ML models in a turnkey manner. Managed online endpoints work with powerful CPU and GPU machines in Azure in a scalable, fully managed way. Managed online endpoints take care of serving, scaling, securing, and monitoring your models, freeing you from the overhead of setting up and managing the underlying infrastructure.

The main example in this doc uses managed online endpoints for deployment. To use Kubernetes instead, see the notes in this document that are inline with the managed online endpoint discussion.

Prerequisites

APPLIES TO:  Azure CLI ml extension v2 (current)


Before following the steps in this article, make sure you have the following prerequisites:

The Azure CLI and the ml extension to the Azure CLI. For more information, see Install, set up, and use the CLI (v2).

Important

The CLI examples in this article assume that you are using the Bash (or compatible) shell. For example, from a Linux system or Windows Subsystem for Linux.

An Azure Machine Learning workspace. If you don't have one, use the steps in the Install, set up, and use the CLI (v2) to create one.

Azure role-based access controls (Azure RBAC) are used to grant access to operations in Azure Machine Learning. To perform the steps in this article, your user account must be assigned the owner or contributor role for the Azure Machine Learning workspace, or a custom role allowing Microsoft.MachineLearningServices/workspaces/onlineEndpoints/*. If you use studio to create/manage online endpoints/deployments, you will need an additional permission "Microsoft.Resources/deployments/write" from the resource group owner. For more information, see Manage access to an Azure Machine Learning workspace.

(Optional) To deploy locally, you must install Docker Engine on your local computer. We highly recommend this option, so it's easier to debug issues.

Virtual machine quota allocation for deployment

For managed online endpoints, Azure Machine Learning reserves 20% of your compute resources for performing upgrades on some VM SKUs. If you request a given number of instances for those VM SKUs in a deployment, you must have a quota for ceil(1.2 * number of instances requested for deployment) * number of cores for the VM SKU available to avoid getting an error. For example, if you request 10 instances of a Standard_DS3_v2 VM (that comes with 4 cores) in a deployment, you should have a quota for 48 cores (12 instances * 4 cores) available. This extra quota is reserved for system-initiated operations such as OS upgrades and VM recovery, and it won't incur cost unless such an operation runs. To view your usage and request quota increases, see View your usage and quotas in the Azure portal. To view your cost of running managed online endpoints, see View cost for managed online endpoint. Certain VM SKUs are exempt from the extra quota reservation. To view the full list, see Managed online endpoints SKU list.
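The quota formula above can be checked with a few lines of Python. This is only an illustrative sketch: the function name required_quota_cores is hypothetical, and it applies only to VM SKUs that are subject to the 20% reservation.

```python
def required_quota_cores(instances: int, cores_per_instance: int) -> int:
    """Cores of quota needed: ceil(1.2 * instances) * cores_per_instance."""
    if instances < 1 or cores_per_instance < 1:
        raise ValueError("instances and cores_per_instance must be positive")
    # Integer form of ceil(1.2 * instances), which avoids floating-point rounding.
    reserved_instances = (12 * instances + 9) // 10
    return reserved_instances * cores_per_instance


# 10 instances of a 4-core VM, as in the Standard_DS3_v2 example.
print(required_quota_cores(10, 4))  # 48
```

Note that the rounding matters for small deployments: a single requested instance already rounds up to two instances' worth of cores.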

Azure Machine Learning provides a shared quota pool from which all users can access quota to perform testing for a limited time. When you use the studio to deploy Llama-2, Phi, Nemotron, Mistral, Dolly and Deci-DeciLM models from the model catalog to a managed online endpoint, Azure Machine Learning allows you to access this shared quota for a short time.

For more information on how to use the shared quota for online endpoint deployment, see How to deploy foundation models using the studio.

Prepare your system

Set environment variables

If you haven't already set the defaults for the Azure CLI, save your default settings. To avoid passing in the values for your subscription, workspace, and resource group multiple times, run this code:

az account set --subscription <subscription ID>

az configure --defaults workspace=<Azure Machine Learning workspace name> group=<resource group>

Clone the examples repository

To follow along with this article, first clone the examples repository (azureml-examples). Then, run the following code to go to the repository's cli/ directory:

git clone --depth 1 https://github.com/Azure/azureml-examples

cd azureml-examples

cd cli

Tip

Use --depth 1 to clone only the latest commit to the repository, which reduces time to complete the operation.

The commands in this tutorial are in the files deploy-local-endpoint.sh and deploy-managed-online-endpoint.sh in the cli directory, and the YAML configuration files are in the endpoints/online/managed/sample/ subdirectory.

Note

The YAML configuration files for Kubernetes online endpoints are in the endpoints/online/kubernetes/ subdirectory.

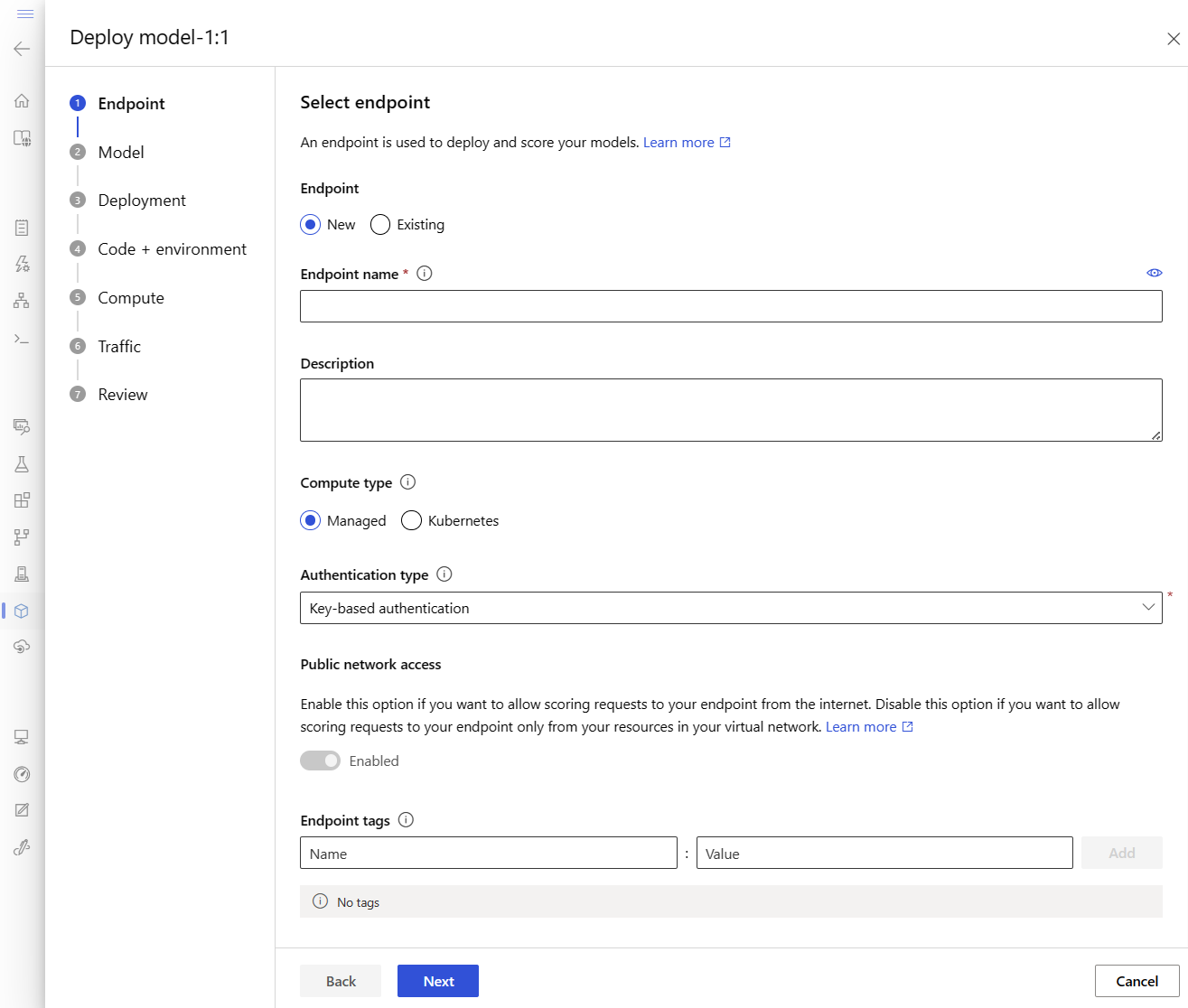

Define the endpoint

To define an endpoint, you need to specify:

- Endpoint name: The name of the endpoint. It must be unique in the Azure region. For more information on the naming rules, see endpoint limits.

- Authentication mode: The authentication method for the endpoint. Choose between key-based authentication and Azure Machine Learning token-based authentication. A key doesn't expire, but a token does expire. For more information on authenticating, see Authenticate to an online endpoint.

- Optionally, you can add a description and tags to your endpoint.

Set an endpoint name

To set your endpoint name, run the following command (replace YOUR_ENDPOINT_NAME with a unique name).

For Linux, run this command:

export ENDPOINT_NAME="<YOUR_ENDPOINT_NAME>"

Configure the endpoint

The following snippet shows the endpoints/online/managed/sample/endpoint.yml file:

$schema: https://azuremlschemas.azureedge.net/latest/managedOnlineEndpoint.schema.json

name: my-endpoint

auth_mode: key

The reference for the endpoint YAML format is described in the following table. To learn how to specify these attributes, see the online endpoint YAML reference. For information about limits related to managed endpoints, see limits for online endpoints.

| Key | Description |

|---|---|

| $schema | (Optional) The YAML schema. To see all available options in the YAML file, you can view the schema in the preceding code snippet in a browser. |

| name | The name of the endpoint. |

| auth_mode | Use key for key-based authentication. Use aml_token for Azure Machine Learning token-based authentication. To get the most recent token, use the az ml online-endpoint get-credentials command. |

Define the deployment

A deployment is a set of resources required for hosting the model that does the actual inferencing. To deploy a model, you must have:

- Model files (or the name and version of a model that's already registered in your workspace). In the example, we have a scikit-learn model that does regression.

- A scoring script, that is, code that executes the model on a given input request. The scoring script receives data submitted to a deployed web service and passes it to the model. The script then executes the model and returns its response to the client. The scoring script is specific to your model and must understand the data that the model expects as input and returns as output. In this example, we have a score.py file.

- An environment in which your model runs. The environment can be a Docker image with Conda dependencies or a Dockerfile.

- Settings to specify the instance type and scaling capacity.

The following table describes the key attributes of a deployment:

| Attribute | Description |

|---|---|

| Name | The name of the deployment. |

| Endpoint name | The name of the endpoint to create the deployment under. |

| Model | The model to use for the deployment. This value can be either a reference to an existing versioned model in the workspace or an inline model specification. |

| Code path | The path to the directory on the local development environment that contains all the Python source code for scoring the model. You can use nested directories and packages. |

| Scoring script | The relative path to the scoring file in the source code directory. This Python code must have an init() function and a run() function. The init() function will be called after the model is created or updated (you can use it to cache the model in memory, for example). The run() function is called at every invocation of the endpoint to do the actual scoring and prediction. |

| Environment | The environment to host the model and code. This value can be either a reference to an existing versioned environment in the workspace or an inline environment specification. |

| Instance type | The VM size to use for the deployment. For the list of supported sizes, see Managed online endpoints SKU list. |

| Instance count | The number of instances to use for the deployment. Base the value on the workload you expect. For high availability, we recommend that you set the value to at least 3. We reserve an extra 20% for performing upgrades. For more information, see virtual machine quota allocation for deployments. |

Warning

- The model and container image (as defined in Environment) can be referenced again at any time by the deployment when the instances behind the deployment go through security patches and/or other recovery operations. If you used a registered model or container image in Azure Container Registry for deployment and removed the model or the container image, the deployments relying on these assets can fail when reimaging happens. If you removed the model or the container image, ensure the dependent deployments are re-created or updated with alternative model or container image.

- The container registry that the environment refers to can be private only if the endpoint identity has the permission to access it via Microsoft Entra authentication and Azure RBAC. For the same reason, private Docker registries other than Azure Container Registry are not supported.

Configure a deployment

The following snippet shows the endpoints/online/managed/sample/blue-deployment.yml file, with all the required inputs to configure a deployment:

$schema: https://azuremlschemas.azureedge.net/latest/managedOnlineDeployment.schema.json

name: blue

endpoint_name: my-endpoint

model:

  path: ../../model-1/model/

code_configuration:

  code: ../../model-1/onlinescoring/

  scoring_script: score.py

environment:

  conda_file: ../../model-1/environment/conda.yaml

  image: mcr.microsoft.com/azureml/openmpi4.1.0-ubuntu20.04:latest

instance_type: Standard_DS3_v2

instance_count: 1

Note

In the blue-deployment.yml file, we've specified the following deployment attributes:

- model - In this example, we specify the model properties inline using the path. Model files are automatically uploaded and registered with an autogenerated name.

- environment - In this example, we have inline definitions that include the path. We'll use environment.docker.image for the image. The conda_file dependencies will be installed on top of the image.

During deployment, the local files such as the Python source for the scoring model, are uploaded from the development environment.

For more information about the YAML schema, see the online endpoint YAML reference.

Note

To use Kubernetes instead of managed endpoints as a compute target:

- Create and attach your Kubernetes cluster as a compute target to your Azure Machine Learning workspace by using Azure Machine Learning studio.

- Use the endpoint YAML to target Kubernetes instead of the managed endpoint YAML. You'll need to edit the YAML to change the value of target to the name of your registered compute target. You can use this deployment.yaml that has additional properties applicable to Kubernetes deployment.

All the commands that are used in this article (except the optional SLA monitoring and Azure Log Analytics integration) can be used either with managed endpoints or with Kubernetes endpoints.

Register your model and environment separately

In this example, we specify the path (where to upload files from) inline. The CLI automatically uploads the files and registers the model and environment. As a best practice for production, you should register the model and environment and specify the registered name and version separately in the YAML. Use the form model: azureml:my-model:1 or environment: azureml:my-env:1.

For registration, you can extract the YAML definitions of model and environment into separate YAML files and use the commands az ml model create and az ml environment create. To learn more about these commands, run az ml model create -h and az ml environment create -h.

For more information on registering your model as an asset, see Register your model as an asset in Machine Learning by using the CLI. For more information on creating an environment, see Manage Azure Machine Learning environments with the CLI & SDK (v2).

Use different CPU and GPU instance types and images

The preceding definition in the blue-deployment.yml file uses a general-purpose type Standard_DS3_v2 instance and a non-GPU Docker image mcr.microsoft.com/azureml/openmpi4.1.0-ubuntu20.04:latest. For GPU compute, choose a GPU compute type SKU and a GPU Docker image.

For supported general-purpose and GPU instance types, see Managed online endpoints supported VM SKUs. For a list of Azure Machine Learning CPU and GPU base images, see Azure Machine Learning base images.

Note

To use Kubernetes instead of managed endpoints as a compute target, see Introduction to Kubernetes compute target.

Identify model path with respect to AZUREML_MODEL_DIR

When deploying your model to Azure Machine Learning, you need to specify the location of the model you wish to deploy as part of your deployment configuration. In Azure Machine Learning, the path to your model is tracked with the AZUREML_MODEL_DIR environment variable. By identifying the model path with respect to AZUREML_MODEL_DIR, you can deploy one or more models that are stored locally on your machine or deploy a model that is registered in your Azure Machine Learning workspace.

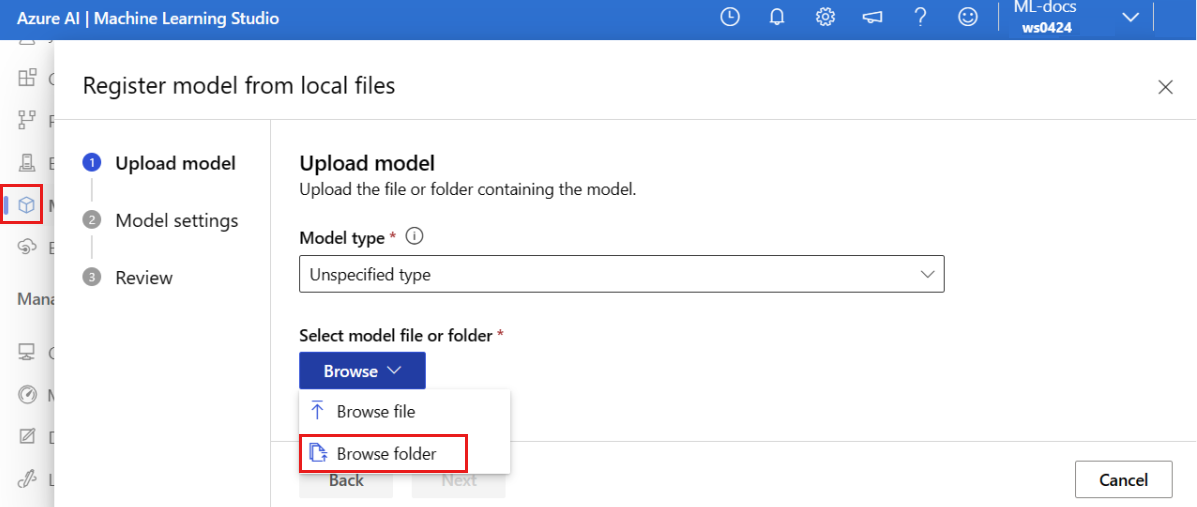

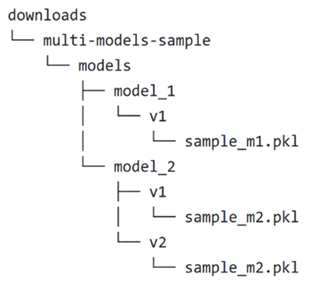

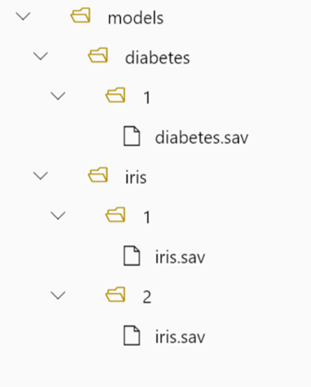

For illustration, we reference the following local folder structure for the first two cases where you deploy a single model or deploy multiple models that are stored locally:

Use a single local model in a deployment

To use a single model that you have on your local machine in a deployment, specify the path to the model in your deployment YAML. Here's an example of the deployment YAML with the path /Downloads/multi-models-sample/models/model_1/v1/sample_m1.pkl:

$schema: https://azuremlschemas.azureedge.net/latest/managedOnlineDeployment.schema.json

name: blue

endpoint_name: my-endpoint

model:

  path: /Downloads/multi-models-sample/models/model_1/v1/sample_m1.pkl

code_configuration:

  code: ../../model-1/onlinescoring/

  scoring_script: score.py

environment:

  conda_file: ../../model-1/environment/conda.yaml

  image: mcr.microsoft.com/azureml/openmpi4.1.0-ubuntu20.04:latest

instance_type: Standard_DS3_v2

instance_count: 1

After you create your deployment, the environment variable AZUREML_MODEL_DIR will point to the storage location within Azure where your model is stored. For example, /var/azureml-app/azureml-models/81b3c48bbf62360c7edbbe9b280b9025/1 will contain the model sample_m1.pkl.

Within your scoring script (score.py), you can load your model (in this example, sample_m1.pkl) in the init() function:

import os

import joblib

def init():

    global model  # keep the loaded model available to run()

    model_path = os.path.join(str(os.getenv("AZUREML_MODEL_DIR")), "sample_m1.pkl")

    model = joblib.load(model_path)
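Before deploying, it can help to exercise this init() pattern on your own machine by pointing AZUREML_MODEL_DIR at a temporary folder. The following is a minimal sketch under stated assumptions: the standard-library pickle module stands in for joblib so that the example has no third-party dependencies, and the saved dictionary is a placeholder, not a real model.

```python
import os
import pickle
import tempfile

model = None  # populated by init()


def init():
    """Load the model file that sits directly under AZUREML_MODEL_DIR."""
    global model
    model_path = os.path.join(os.environ["AZUREML_MODEL_DIR"], "sample_m1.pkl")
    with open(model_path, "rb") as f:
        model = pickle.load(f)  # stand-in for joblib.load(model_path)


# Simulate the deployed layout: a folder that contains sample_m1.pkl.
with tempfile.TemporaryDirectory() as model_dir:
    with open(os.path.join(model_dir, "sample_m1.pkl"), "wb") as f:
        pickle.dump({"name": "sample_m1"}, f)  # placeholder object, not a real model
    os.environ["AZUREML_MODEL_DIR"] = model_dir
    init()

print(model)
```

If init() raises a FileNotFoundError in a check like this, the relative path passed to os.path.join most likely doesn't match the layout of the model folder.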

Use multiple local models in a deployment

Although the Azure CLI, Python SDK, and other client tools allow you to specify only one model per deployment in the deployment definition, you can still use multiple models in a deployment by registering a model folder that contains all the models as files or subdirectories.

In the previous example folder structure, you notice that there are multiple models in the models folder. In your deployment YAML, you can specify the path to the models folder as follows:

$schema: https://azuremlschemas.azureedge.net/latest/managedOnlineDeployment.schema.json

name: blue

endpoint_name: my-endpoint

model:

  path: /Downloads/multi-models-sample/models/

code_configuration:

  code: ../../model-1/onlinescoring/

  scoring_script: score.py

environment:

  conda_file: ../../model-1/environment/conda.yaml

  image: mcr.microsoft.com/azureml/openmpi4.1.0-ubuntu20.04:latest

instance_type: Standard_DS3_v2

instance_count: 1

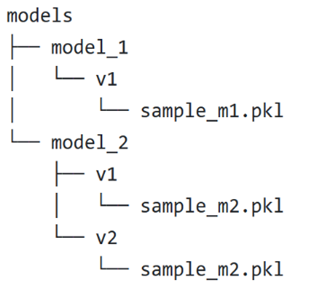

After you create your deployment, the environment variable AZUREML_MODEL_DIR will point to the storage location within Azure where your models are stored. For example, /var/azureml-app/azureml-models/81b3c48bbf62360c7edbbe9b280b9025/1 will contain the models and the file structure.

For this example, the contents of the AZUREML_MODEL_DIR folder will look like this:

Within your scoring script (score.py), you can load your models in the init() function. The following code loads the sample_m1.pkl model:

import os

import joblib

def init():

    global model  # keep the loaded model available to run()

    model_path = os.path.join(str(os.getenv("AZUREML_MODEL_DIR")), "models", "model_1", "v1", "sample_m1.pkl")

    model = joblib.load(model_path)
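When a deployment carries several models, one possible approach is to walk the model folder once in init() and keep every loaded model in a dictionary keyed by its relative path. The sketch below illustrates that idea only. The helper load_all_models, the second model file (model_2/v1/sample_m2.pkl), and the placeholder payloads are hypothetical, and pickle stands in for joblib to keep the example dependency-free.

```python
import os
import pickle
import tempfile


def load_all_models(model_root: str) -> dict:
    """Return {relative path: loaded object} for every .pkl file under model_root."""
    loaded = {}
    for folder, _subdirs, files in os.walk(model_root):
        for file_name in files:
            if file_name.endswith(".pkl"):
                full_path = os.path.join(folder, file_name)
                key = os.path.relpath(full_path, model_root).replace(os.sep, "/")
                with open(full_path, "rb") as f:
                    loaded[key] = pickle.load(f)  # stand-in for joblib.load
    return loaded


# Build a throwaway tree that mimics AZUREML_MODEL_DIR for this example.
with tempfile.TemporaryDirectory() as root:
    for rel in ("models/model_1/v1/sample_m1.pkl", "models/model_2/v1/sample_m2.pkl"):
        path = os.path.join(root, *rel.split("/"))
        os.makedirs(os.path.dirname(path), exist_ok=True)
        with open(path, "wb") as f:
            pickle.dump(rel, f)  # placeholder payload, not a real model
    models = load_all_models(root)

print(sorted(models))
```

In a real scoring script, you would call the helper with os.getenv("AZUREML_MODEL_DIR") inside init() and let run() pick a model from the dictionary.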

For an example of how to deploy multiple models to one deployment, see Deploy multiple models to one deployment (CLI example) and Deploy multiple models to one deployment (SDK example).

Tip

If you have more than 1500 files to register, consider compressing the files or subdirectories as .tar.gz when registering the models. To consume the models, you can uncompress the files or subdirectories in the init() function from the scoring script. Alternatively, when you register the models, set the azureml.unpack property to True, to automatically uncompress the files or subdirectories. In either case, uncompression happens once in the initialization stage.
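As a rough illustration of the manual option in this tip, the following sketch unpacks a .tar.gz archive by using the standard-library tarfile module. The helper name extract_archive, the archive name models.tar.gz, and the folder layout are assumptions made for the example. In a scoring script, the call would go inside init() so that extraction happens once.

```python
import os
import tarfile
import tempfile


def extract_archive(archive_path: str, target_dir: str) -> list:
    """Unpack a .tar.gz archive into target_dir and return the extracted file names."""
    os.makedirs(target_dir, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as archive:
        names = [m.name for m in archive.getmembers() if m.isfile()]
        # Use the safer "data" extraction filter where this Python version offers it.
        kwargs = {"filter": "data"} if hasattr(tarfile, "data_filter") else {}
        archive.extractall(target_dir, **kwargs)
    return sorted(names)


# Demonstration with a throwaway archive that holds one placeholder file.
with tempfile.TemporaryDirectory() as work:
    src = os.path.join(work, "src")
    os.makedirs(os.path.join(src, "model_1"))
    with open(os.path.join(src, "model_1", "sample_m1.pkl"), "wb") as f:
        f.write(b"placeholder")
    archive_path = os.path.join(work, "models.tar.gz")
    with tarfile.open(archive_path, "w:gz") as archive:
        archive.add(src, arcname="models")
    extracted = extract_archive(archive_path, os.path.join(work, "unpacked"))
    unpacked_ok = os.path.isfile(
        os.path.join(work, "unpacked", "models", "model_1", "sample_m1.pkl")
    )

print(extracted, unpacked_ok)
```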

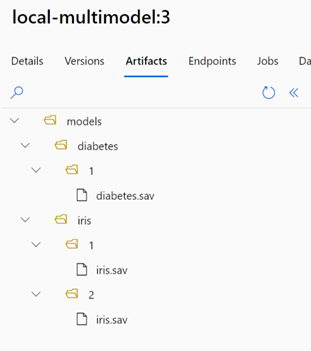

Use models registered in your Azure Machine Learning workspace in a deployment

To use one or more models, which are registered in your Azure Machine Learning workspace, in your deployment, specify the name of the registered model(s) in your deployment YAML. For example, the following deployment YAML configuration specifies the registered model name as azureml:local-multimodel:3:

$schema: https://azuremlschemas.azureedge.net/latest/managedOnlineDeployment.schema.json

name: blue

endpoint_name: my-endpoint

model: azureml:local-multimodel:3

code_configuration:

  code: ../../model-1/onlinescoring/

  scoring_script: score.py

environment:

  conda_file: ../../model-1/environment/conda.yaml

  image: mcr.microsoft.com/azureml/openmpi4.1.0-ubuntu20.04:latest

instance_type: Standard_DS3_v2

instance_count: 1

For this example, consider that local-multimodel:3 contains the following model artifacts, which can be viewed from the Models tab in the Azure Machine Learning studio:

After you create your deployment, the environment variable AZUREML_MODEL_DIR will point to the storage location within Azure where your models are stored. For example, /var/azureml-app/azureml-models/local-multimodel/3 will contain the models and the file structure. AZUREML_MODEL_DIR will point to the folder containing the root of the model artifacts.

Based on this example, the contents of the AZUREML_MODEL_DIR folder will look like this:

Within your scoring script (score.py), you can load your models in the init() function. For example, load the diabetes.sav model:

import os

import joblib

def init():

    global model  # keep the loaded model available to run()

    model_path = os.path.join(str(os.getenv("AZUREML_MODEL_DIR")), "models", "diabetes", "1", "diabetes.sav")

    model = joblib.load(model_path)

Understand the scoring script

Tip

The format of the scoring script for online endpoints is the same format that's used in the preceding version of the CLI and in the Python SDK.

As noted earlier, the scoring script specified in code_configuration.scoring_script must have an init() function and a run() function.

This example uses the score.py file: score.py

import os

import logging

import json

import numpy

import joblib


def init():

    """

    This function is called when the container is initialized/started, typically after create/update of the deployment.

    You can write the logic here to perform init operations like caching the model in memory

    """

    global model

    # AZUREML_MODEL_DIR is an environment variable created during deployment.

    # It is the path to the model folder (./azureml-models/$MODEL_NAME/$VERSION)

    # Please provide your model's folder name if there is one

    model_path = os.path.join(

        os.getenv("AZUREML_MODEL_DIR"), "model/sklearn_regression_model.pkl"

    )

    # deserialize the model file back into a sklearn model

    model = joblib.load(model_path)

    logging.info("Init complete")


def run(raw_data):

    """

    This function is called for every invocation of the endpoint to perform the actual scoring/prediction.

    In the example we extract the data from the json input and call the scikit-learn model's predict()

    method and return the result back

    """

    logging.info("model 1: request received")

    data = json.loads(raw_data)["data"]

    data = numpy.array(data)

    result = model.predict(data)

    logging.info("Request processed")

    return result.tolist()

The init() function is called when the container is initialized or started. Initialization typically occurs shortly after the deployment is created or updated. The init function is the place to write logic for global initialization operations like caching the model in memory (as we do in this example).

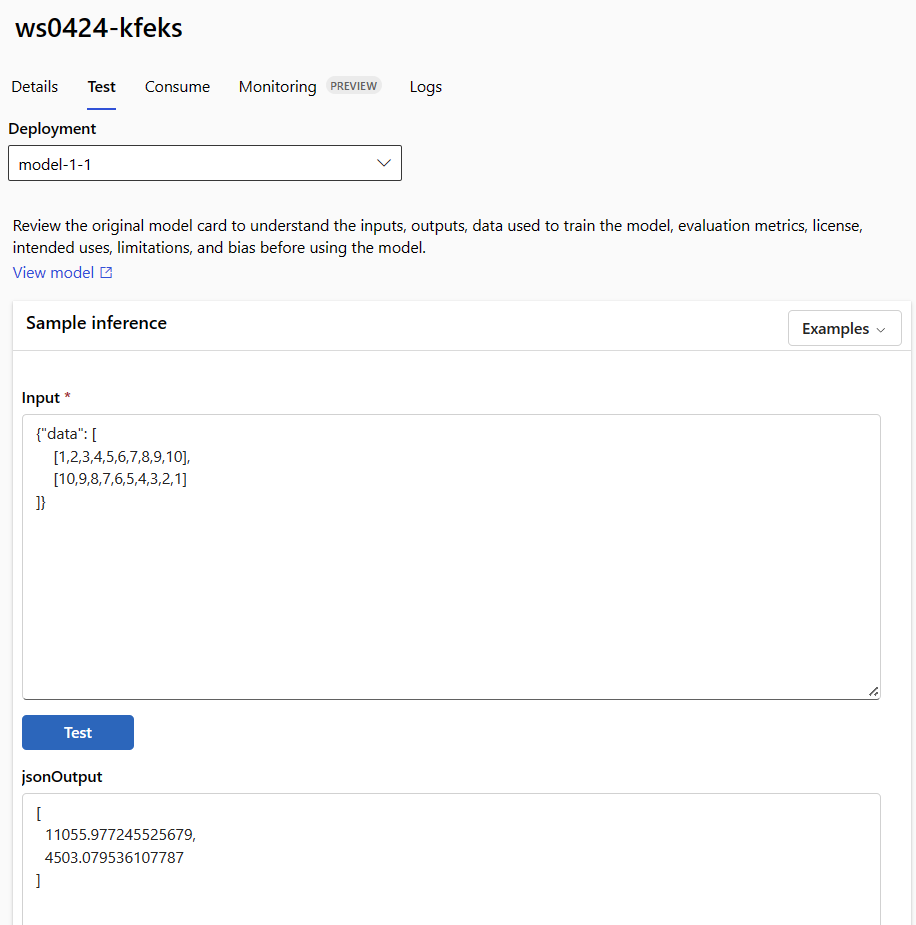

The run() function is called for every invocation of the endpoint, and it does the actual scoring and prediction. In this example, we'll extract data from a JSON input, call the scikit-learn model's predict() method, and then return the result.
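To see the init() and run() contract in isolation, you can call both functions directly with a stub in place of the real model. The sketch below is a simplified illustration, not the article's score.py: StubModel is a hypothetical stand-in for the scikit-learn model, NumPy is omitted, and the request body is made-up data in the {"data": [...]} shape that run() expects.

```python
import json


class StubModel:
    """Hypothetical stand-in for the scikit-learn model; predicts the sum of each row."""

    def predict(self, rows):
        return [sum(row) for row in rows]


model = None


def init():
    """Runs once at container start; here it caches the stub instead of loading a file."""
    global model
    model = StubModel()


def run(raw_data):
    """Runs per request: parse the JSON body, score it, return a JSON-serializable list."""
    data = json.loads(raw_data)["data"]
    return list(model.predict(data))


init()
response = run(json.dumps({"data": [[1, 2, 3], [4, 5, 6]]}))
print(response)  # [6, 15]
```

The same two calls, init() once and run() per request body, are what the inference server performs against the deployed script.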

Deploy and debug locally by using local endpoints

We highly recommend that you test-run your endpoint locally by validating and debugging your code and configuration before you deploy to Azure. Azure CLI and Python SDK support local endpoints and deployments, while Azure Machine Learning studio and ARM template don't.

To deploy locally, Docker Engine must be installed and running. Docker Engine typically starts when the computer starts. If it doesn't, you can troubleshoot Docker Engine.

Tip

You can use Azure Machine Learning inference HTTP server Python package to debug your scoring script locally without Docker Engine. Debugging with the inference server helps you to debug the scoring script before deploying to local endpoints so that you can debug without being affected by the deployment container configurations.

Note

Local endpoints have the following limitations:

- They do not support traffic rules, authentication, or probe settings.

- They support only one deployment per endpoint.

- They support local model files and environment with local conda file only. If you want to test registered models, first download them using CLI or SDK, then use path in the deployment definition to refer to the parent folder. If you want to test registered environments, check the context of the environment in Azure Machine Learning studio and prepare a local conda file to use. The example in this article demonstrates using a local model and environment with a local conda file, which supports local deployment.

For more information on debugging online endpoints locally before deploying to Azure, see Debug online endpoints locally in Visual Studio Code.

Deploy the model locally

First create an endpoint. Optionally, for a local endpoint, you can skip this step and directly create the deployment (next step), which will, in turn, create the required metadata. Deploying models locally is useful for development and testing purposes.

az ml online-endpoint create --local -n $ENDPOINT_NAME -f endpoints/online/managed/sample/endpoint.yml

Now, create a deployment named blue under the endpoint.

az ml online-deployment create --local -n blue --endpoint $ENDPOINT_NAME -f endpoints/online/managed/sample/blue-deployment.yml

The --local flag directs the CLI to deploy the endpoint in the Docker environment.

Tip

Use Visual Studio Code to test and debug your endpoints locally. For more information, see debug online endpoints locally in Visual Studio Code.

Verify the local deployment succeeded

Check the status to see whether the model was deployed without error:

az ml online-endpoint show -n $ENDPOINT_NAME --local

The output should appear similar to the following JSON. The provisioning_state is Succeeded.

{

"auth_mode": "key",

"location": "local",

"name": "docs-endpoint",

"properties": {},

"provisioning_state": "Succeeded",

"scoring_uri": "http://localhost:49158/score",

"tags": {},

"traffic": {}

}

The following table contains the possible values for provisioning_state:

| State | Description |

|---|---|

| Creating | The resource is being created. |

| Updating | The resource is being updated. |

| Deleting | The resource is being deleted. |

| Succeeded | The create/update operation was successful. |

| Failed | The create/update/delete operation has failed. |
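If you script against the CLI, you might parse the JSON that the show command returns and branch on provisioning_state. The following sketch assumes output shaped like the sample above. The helper name summarize_endpoint and the returned keys are hypothetical, and the state names come from the preceding table.

```python
import json

# States from the preceding table that mean the operation has ended.
TERMINAL_STATES = {"Succeeded", "Failed"}


def summarize_endpoint(show_output: str) -> dict:
    """Extract the fields that matter for a status check from `show` JSON output."""
    info = json.loads(show_output)
    state = info["provisioning_state"]
    return {
        "state": state,
        "ready": state == "Succeeded",
        "finished": state in TERMINAL_STATES,
        "scoring_uri": info.get("scoring_uri"),
    }


sample = (
    '{"auth_mode": "key", "location": "local", "name": "docs-endpoint", '
    '"provisioning_state": "Succeeded", "scoring_uri": "http://localhost:49158/score"}'
)
summary = summarize_endpoint(sample)
print(summary)
```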

Invoke the local endpoint to score data by using your model

Invoke the endpoint to score the model by using the convenience command invoke and passing query parameters that are stored in a JSON file:

az ml online-endpoint invoke --local --name $ENDPOINT_NAME --request-file endpoints/online/model-1/sample-request.json

If you want to use a REST client (like curl), you must have the scoring URI. To get the scoring URI, run az ml online-endpoint show --local -n $ENDPOINT_NAME. In the returned data, find the scoring_uri attribute. Sample curl based commands are available later in this doc.

Review the logs for output from the invoke operation

In the example score.py file, the run() method logs some output to the console.

You can view this output by using the get-logs command:

az ml online-deployment get-logs --local -n blue --endpoint $ENDPOINT_NAME

Deploy your online endpoint to Azure

Next, deploy your online endpoint to Azure.

Deploy to Azure

To create the endpoint in the cloud, run the following code:

az ml online-endpoint create --name $ENDPOINT_NAME -f endpoints/online/managed/sample/endpoint.yml

To create the deployment named blue under the endpoint, run the following code:

az ml online-deployment create --name blue --endpoint $ENDPOINT_NAME -f endpoints/online/managed/sample/blue-deployment.yml --all-traffic

This deployment might take up to 15 minutes, depending on whether the underlying environment or image is being built for the first time. Subsequent deployments that use the same environment will finish processing more quickly.

Tip

- If you prefer not to block your CLI console, you may add the flag --no-wait to the command. However, this will stop the interactive display of the deployment status.

Important

The --all-traffic flag in the above az ml online-deployment create allocates 100% of the endpoint traffic to the newly created blue deployment. Though this is helpful for development and testing purposes, for production, you might want to open traffic to the new deployment through an explicit command. For example, az ml online-endpoint update -n $ENDPOINT_NAME --traffic "blue=100".

Tip

- Use Troubleshooting online endpoints deployment to debug errors.

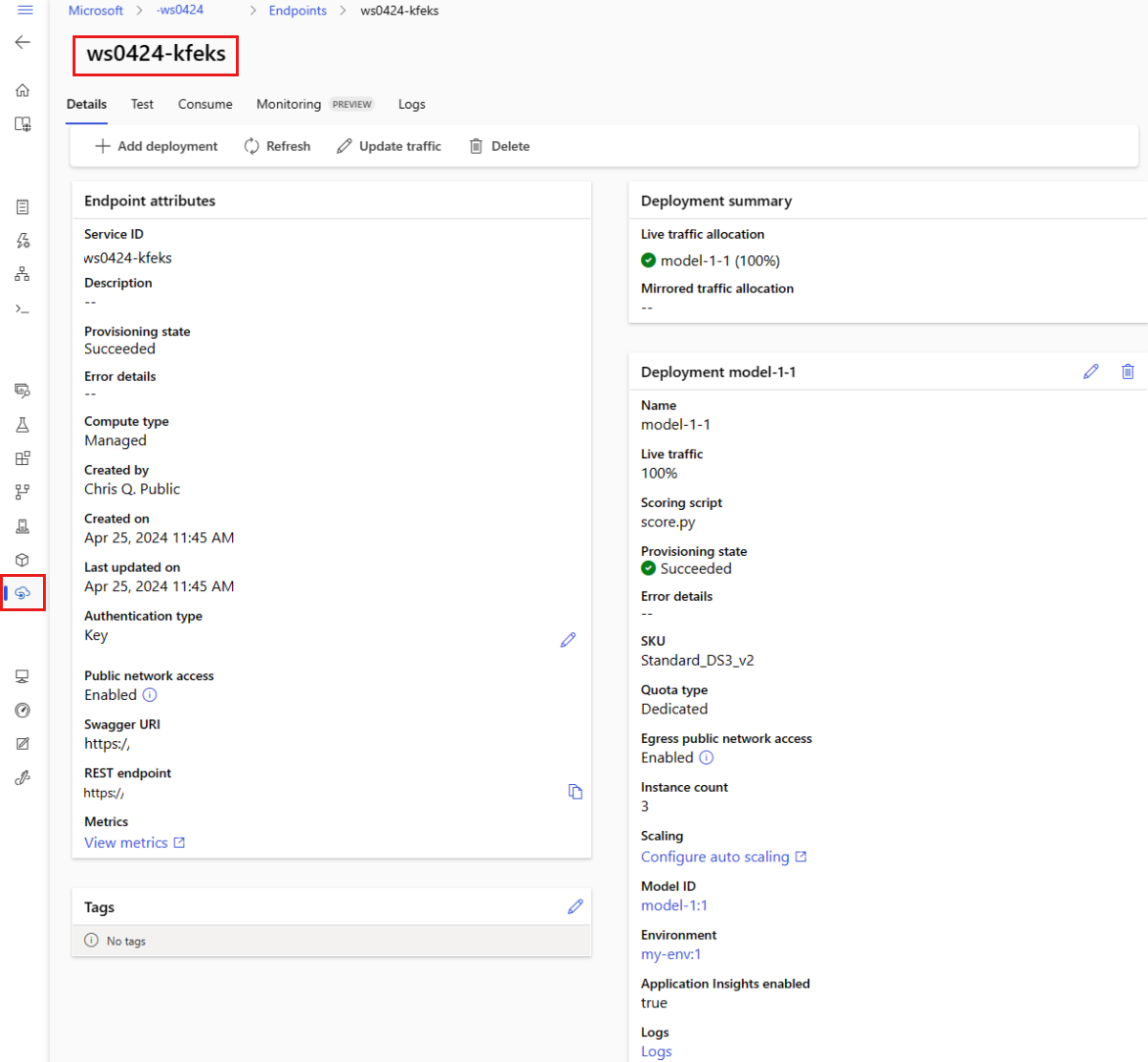

Check the status of the endpoint

The show command contains information in provisioning_state for the endpoint and deployment:

az ml online-endpoint show -n $ENDPOINT_NAME

You can list all the endpoints in the workspace in a table format by using the list command:

az ml online-endpoint list --output table

Check the status of the online deployment

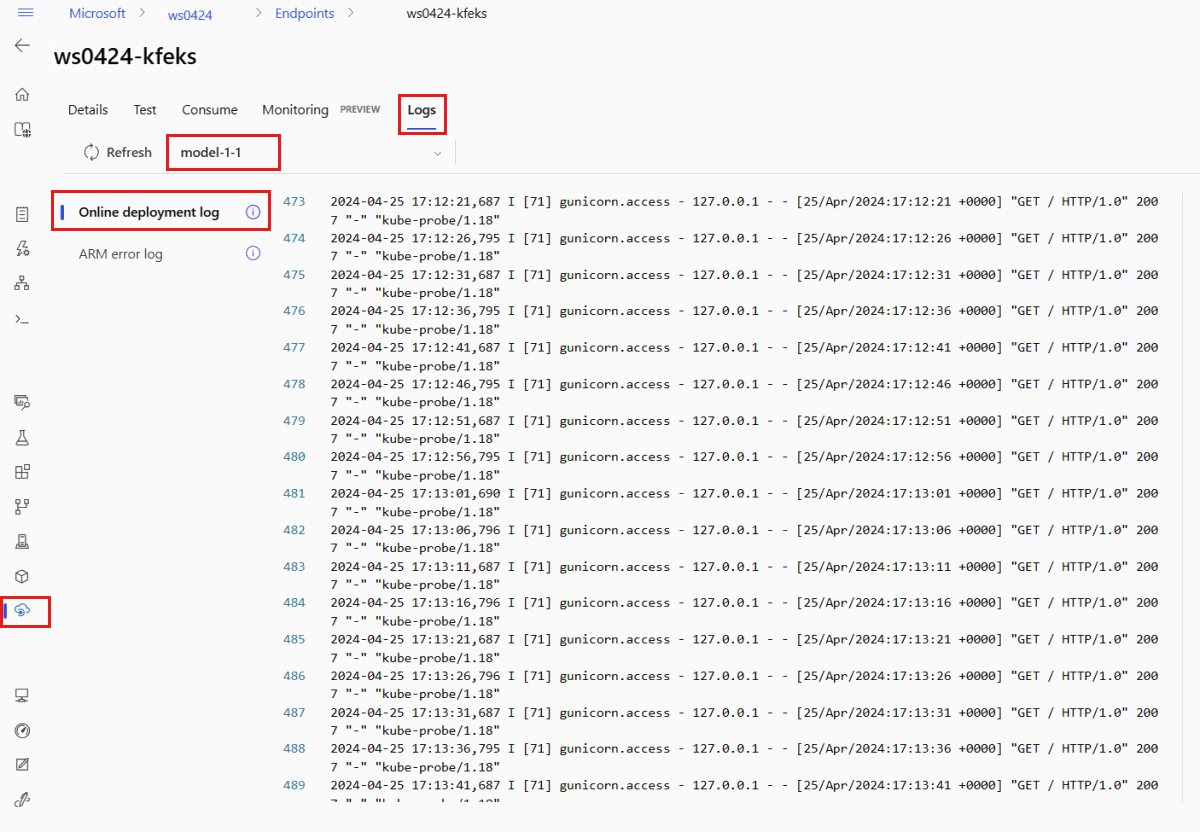

Check the logs to see whether the model was deployed without error.

To see log output from a container, use the following CLI command:

az ml online-deployment get-logs --name blue --endpoint $ENDPOINT_NAME

By default, logs are pulled from the inference server container. To see logs from the storage initializer container, add the --container storage-initializer flag. For more information on deployment logs, see Get container logs.

Invoke the endpoint to score data by using your model

You can use either the invoke command or a REST client of your choice to invoke the endpoint and score some data:

az ml online-endpoint invoke --name $ENDPOINT_NAME --request-file endpoints/online/model-1/sample-request.json

The following example shows how to get the key used to authenticate to the endpoint:

Tip

You can control which Microsoft Entra security principals can get the authentication key by assigning them to a custom role that allows Microsoft.MachineLearningServices/workspaces/onlineEndpoints/token/action and Microsoft.MachineLearningServices/workspaces/onlineEndpoints/listkeys/action. For more information, see Manage access to an Azure Machine Learning workspace.

ENDPOINT_KEY=$(az ml online-endpoint get-credentials -n $ENDPOINT_NAME -o tsv --query primaryKey)

Next, use curl to score data.

SCORING_URI=$(az ml online-endpoint show -n $ENDPOINT_NAME -o tsv --query scoring_uri)

curl --request POST "$SCORING_URI" --header "Authorization: Bearer $ENDPOINT_KEY" --header 'Content-Type: application/json' --data @endpoints/online/model-1/sample-request.json

Notice we use show and get-credentials commands to get the authentication credentials. Also notice that we're using the --query flag to filter attributes to only what we need. To learn more about --query, see Query Azure CLI command output.
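The same request can be assembled from Python with only the standard library. This is a sketch under assumptions: the helper build_scoring_request is hypothetical, and the URI, key, and payload are placeholders that you would replace with the scoring URI and key retrieved by the CLI commands above. The example builds the request without sending it.

```python
import json
import urllib.request


def build_scoring_request(scoring_uri: str, endpoint_key: str, payload: dict) -> urllib.request.Request:
    """Build a POST request with the same headers as the curl command."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        scoring_uri,
        data=body,
        headers={
            "Authorization": f"Bearer {endpoint_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values; substitute the scoring URI and key retrieved with the CLI.
request = build_scoring_request(
    "https://example.invalid/score", "<ENDPOINT_KEY>", {"data": [[1, 2, 3]]}
)
print(request.get_method(), request.get_header("Authorization"))
# To send it: urllib.request.urlopen(request).read()
```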

To see the invocation logs, run get-logs again.

For information on authenticating using a token, see Authenticate to online endpoints.

(Optional) Update the deployment

If you want to update the code, model, or environment, update the YAML file, and then run the az ml online-deployment update command.

Note

If you update instance count (to scale your deployment) along with other model settings (such as code, model, or environment) in a single update command, the scaling operation will be performed first, then the other updates will be applied. It's a good practice to perform these operations separately in a production environment.

To understand how update works:

Open the file online/model-1/onlinescoring/score.py.

Change the last line of the init() function: After logging.info("Init complete"), add logging.info("Updated successfully").

Save the file.

Run this command:

az ml online-deployment update -n blue --endpoint $ENDPOINT_NAME -f endpoints/online/managed/sample/blue-deployment.yml

Note

Updating by using YAML is declarative. That is, changes in the YAML are reflected in the underlying Azure Resource Manager resources (endpoints and deployments). A declarative approach facilitates GitOps: All changes to endpoints and deployments (even instance_count) go through the YAML.

Tip

- You can use generic update parameters, such as the --set parameter, with the CLI update command to override attributes in your YAML or to set specific attributes without passing them in the YAML file. Using --set for single attributes is especially valuable in development and test scenarios. For example, to scale up the instance_count value for the first deployment, you could use the --set instance_count=2 flag. However, because the YAML isn't updated, this technique doesn't facilitate GitOps.

- Specifying the YAML file is NOT mandatory. For example, if you wanted to test different concurrency settings for a given deployment, you can try something like az ml online-deployment update -n blue -e my-endpoint --set request_settings.max_concurrent_requests_per_instance=4 environment_variables.WORKER_COUNT=4. This will keep all existing configuration but update only the specified parameters.

Because you modified the init() function, which runs when the endpoint is created or updated, the message Updated successfully will be in the logs. Retrieve the logs by running:

az ml online-deployment get-logs --name blue --endpoint $ENDPOINT_NAME

The update command also works with local deployments. Use the same az ml online-deployment update command with the --local flag.

Note

The previous update to the deployment is an example of an in-place rolling update.

- For a managed online endpoint, the deployment is updated to the new configuration 20% of the nodes at a time. That is, if the deployment has 10 nodes, 2 nodes at a time will be updated.

- For a Kubernetes online endpoint, the system will iteratively create a new deployment instance with the new configuration and delete the old one.

- For production usage, you should consider blue-green deployment, which offers a safer alternative for updating a web service.

(Optional) Configure autoscaling

Autoscale automatically runs the right amount of resources to handle the load on your application. Managed online endpoints support autoscaling through integration with the Azure Monitor autoscale feature. To configure autoscaling, see How to autoscale online endpoints.

(Optional) Monitor SLA by using Azure Monitor

To view metrics and set alerts based on your SLA, complete the steps that are described in Monitor online endpoints.

(Optional) Integrate with Log Analytics

The get-logs command for CLI or the get_logs method for SDK provides only the last few hundred lines of logs from an automatically selected instance. However, Log Analytics provides a way to durably store and analyze logs. For more information on using logging, see Monitor online endpoints.

Delete the endpoint and the deployment

If you aren't going to use the deployment, you should delete it by running the following code (it deletes the endpoint and all the underlying deployments):

az ml online-endpoint delete --name $ENDPOINT_NAME --yes --no-wait

Related content

- Safe rollout for online endpoints

- Deploy models with REST

- How to autoscale managed online endpoints

- How to monitor managed online endpoints

- Access Azure resources from an online endpoint with a managed identity

- Troubleshoot online endpoints deployment

- Enable network isolation with managed online endpoints

- View costs for an Azure Machine Learning managed online endpoint

- Manage and increase quotas for resources with Azure Machine Learning

- Use batch endpoints for batch scoring
