Use Responsible AI scorecard (preview) in Azure Machine Learning

APPLIES TO:

Azure CLI ml extension v2 (current)

Python SDK azure-ai-ml v2 (current)

An Azure Machine Learning Responsible AI scorecard is a PDF report that's generated based on Responsible AI dashboard insights and customizations to accompany your machine learning models. You can easily configure, download, and share your PDF scorecard with your technical and non-technical stakeholders to educate them about your data and model health and compliance, and to help build trust. You can also use the scorecard in audit reviews to inform the stakeholders about the characteristics of your model.

Important

This feature is currently in public preview. This preview version is provided without a service-level agreement, and we don't recommend it for production workloads. Certain features might not be supported or might have constrained capabilities.

For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

Where to find your Responsible AI scorecard

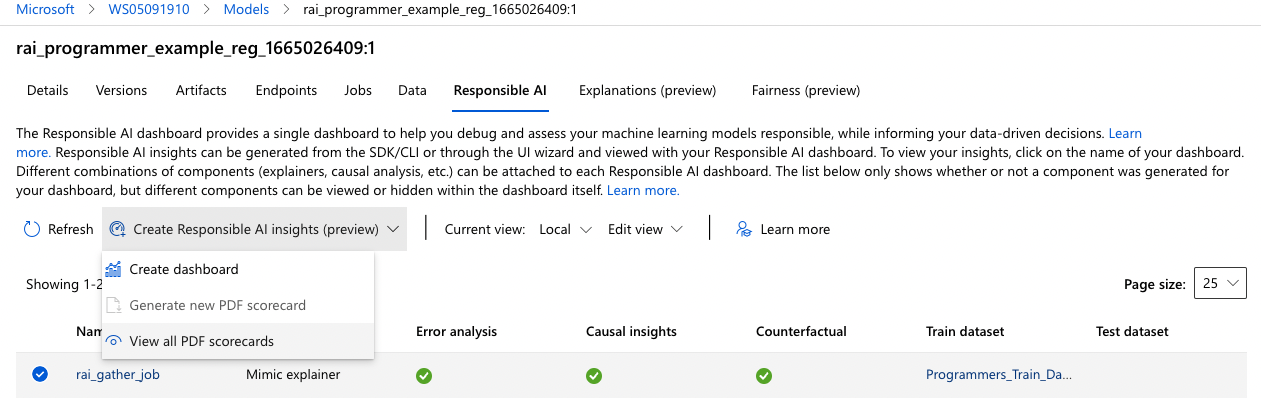

Responsible AI scorecards are linked to your Responsible AI dashboards. To view your Responsible AI scorecard, go to your model registry by selecting Models in Azure Machine Learning studio. Then select the registered model for which you've generated a Responsible AI dashboard and scorecard. After you've selected your model, select the Responsible AI tab to view a list of generated dashboards. Choose the dashboard you want to export a Responsible AI scorecard PDF for by selecting Responsible AI Insights, and then select View all PDF scorecards.

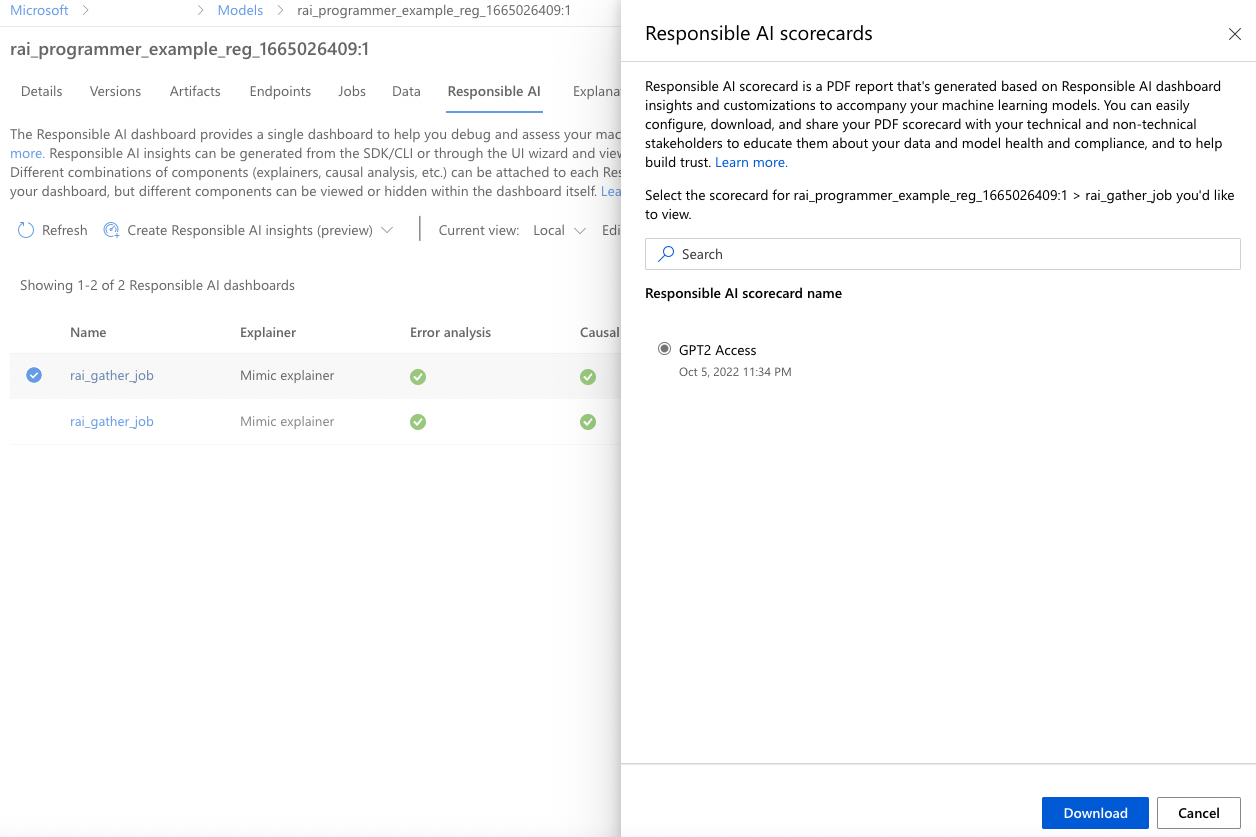

Select Responsible AI scorecard (preview) to display a list of all Responsible AI scorecards that are generated for this dashboard.

In the list, select the scorecard you want, and then select Download to save the PDF to your machine.

How to read your Responsible AI scorecard

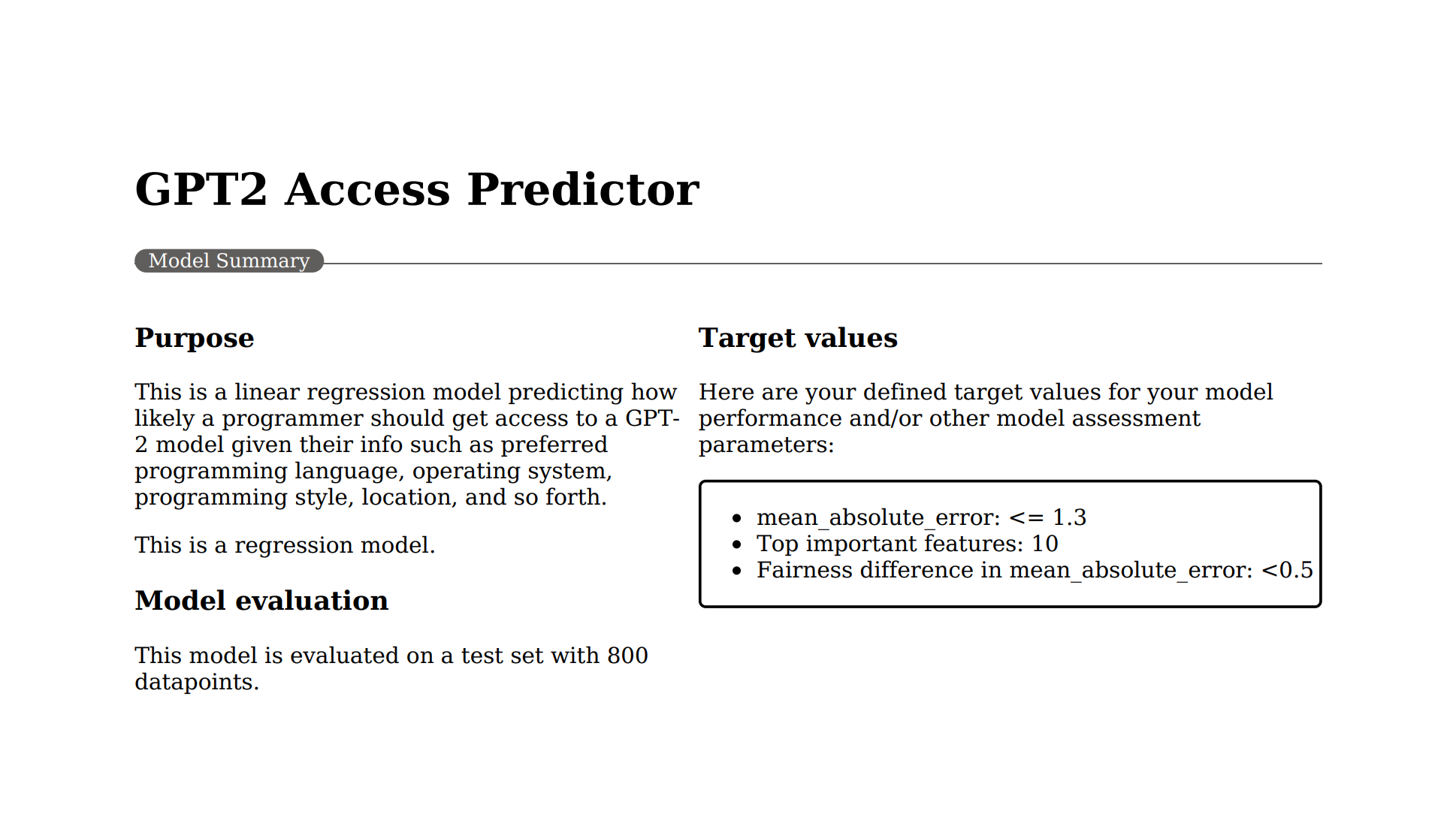

The Responsible AI scorecard is a PDF summary of key insights from your Responsible AI dashboard. The first segment of the scorecard summarizes the machine learning model and the key target values you've set, which helps your stakeholders determine whether the model is ready to be deployed:

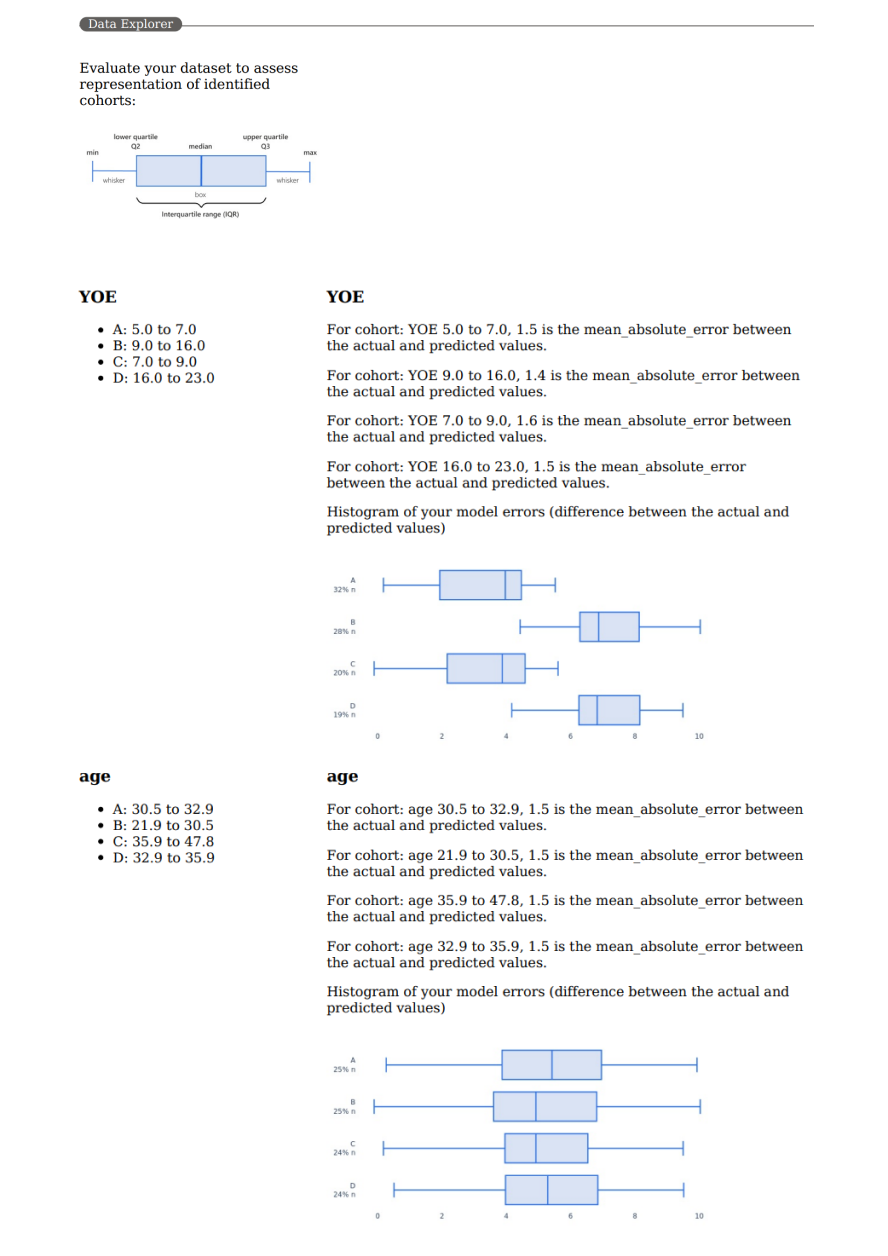

The data analysis segment shows you characteristics of your data, because any model story is incomplete without a correct understanding of your data:

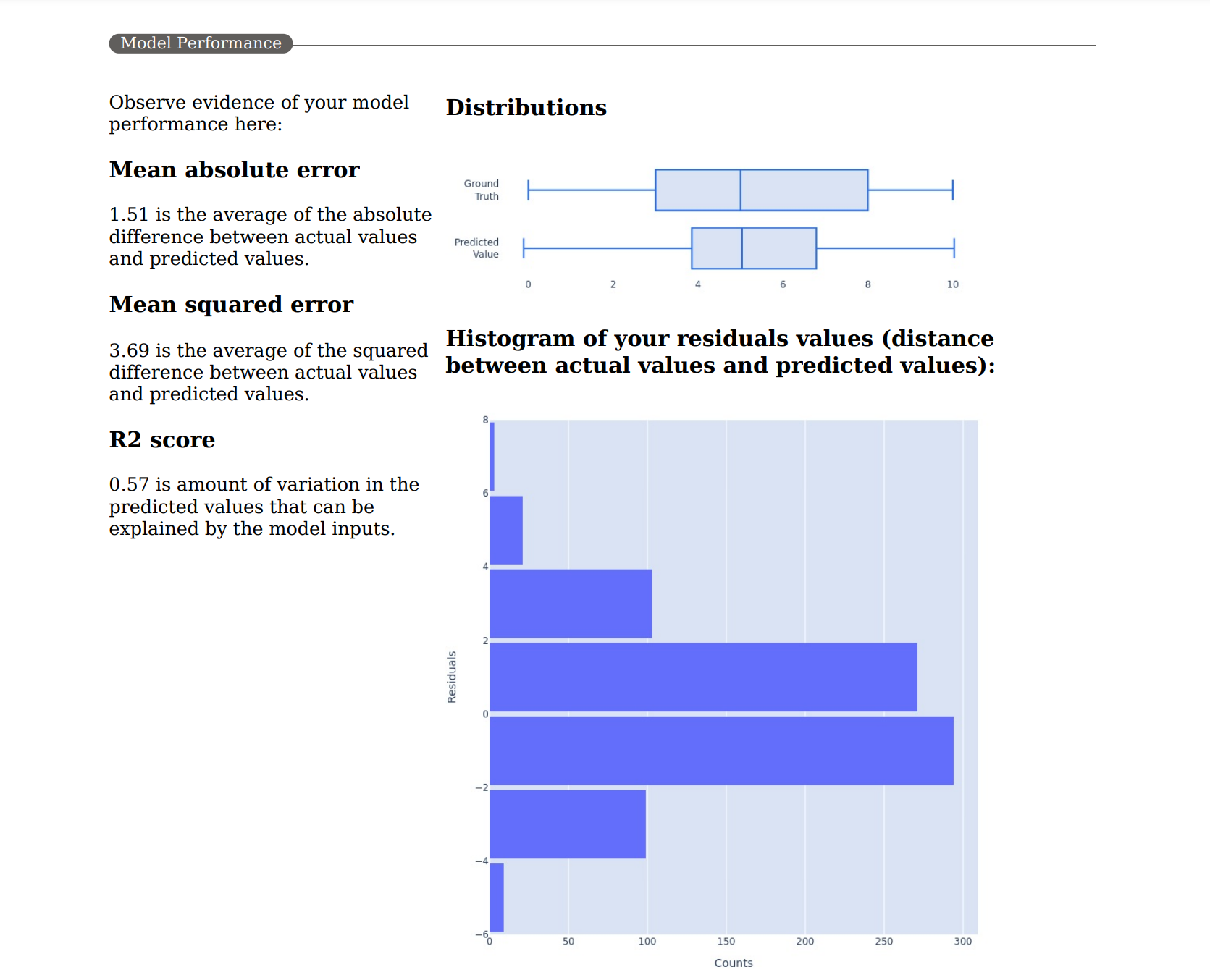
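
To make "characteristics of your data" concrete, the following minimal Python sketch computes one such view by hand: how many rows fall under each value of a feature, and what share of those rows have a positive label. It's an illustration under stated assumptions, not the scorecard's implementation; the `label_distribution` helper and the `age_group` and `approved` columns are hypothetical.

```python
from collections import Counter, defaultdict


def label_distribution(rows, feature, label):
    """Return {feature_value: (row_count, share_of_positive_labels)}.

    Hypothetical helper: `rows` is a list of dicts, `label` holds 0/1 values.
    """
    counts = Counter()
    positives = defaultdict(int)
    for row in rows:
        value = row[feature]
        counts[value] += 1
        if row[label]:
            positives[value] += 1
    return {value: (n, positives[value] / n) for value, n in counts.items()}


# Hypothetical sample data
rows = [
    {"age_group": "<30", "approved": 1},
    {"age_group": "<30", "approved": 0},
    {"age_group": "30+", "approved": 1},
    {"age_group": "30+", "approved": 1},
]

print(label_distribution(rows, "age_group", "approved"))
# {'<30': (2, 0.5), '30+': (2, 1.0)}
```

A skewed distribution like this one, where one group has a much higher positive rate, is the kind of data characteristic worth understanding before you interpret model metrics.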

The model performance segment displays your model's most important metrics, the characteristics of its predictions, and how well they satisfy your target values:

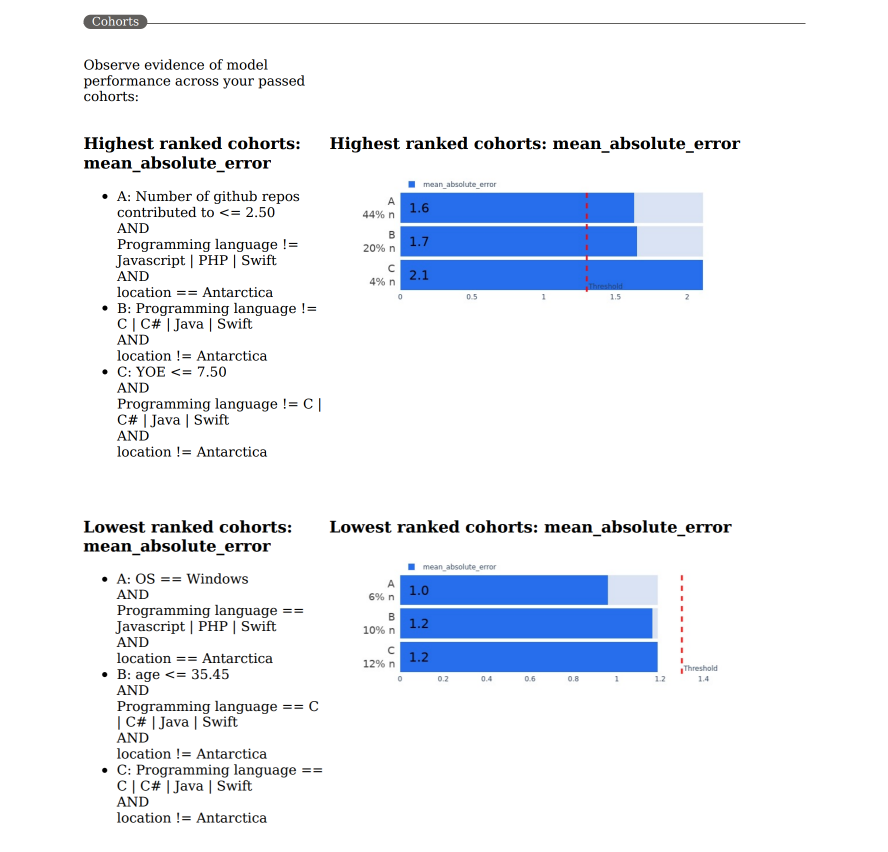
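
Whether a metric satisfies a target comes down to a simple comparison. The sketch below assumes, for illustration only, that each target is written as a comparison string such as `<=20` or `>=0.85`; that syntax, the `meets_target` helper, and the metric values are hypothetical and aren't taken from the scorecard's configuration schema.

```python
import operator

# Hypothetical target syntax: an operator followed by a number, e.g. "<=20".
# Two-character operators come first so "<=" isn't mistaken for "<".
_OPERATORS = [
    ("<=", operator.le),
    (">=", operator.ge),
    ("<", operator.lt),
    (">", operator.gt),
]


def meets_target(value, target):
    """Return True if `value` satisfies a target string such as '<=20'."""
    target = target.strip()
    for symbol, compare in _OPERATORS:
        if target.startswith(symbol):
            threshold = float(target[len(symbol):])
            return compare(value, threshold)
    raise ValueError(f"Unrecognized target: {target!r}")


# Hypothetical metric values and targets
metrics = {"mean_absolute_error": 18.4, "r2_score": 0.81}
targets = {"mean_absolute_error": "<=20", "r2_score": ">=0.85"}

for name, target in targets.items():
    status = "met" if meets_target(metrics[name], target) else "not met"
    print(f"{name} = {metrics[name]} (target {target}): {status}")
```

In this example, the error metric meets its target while the R² score doesn't, which is the kind of mixed result the performance segment lets stakeholders see at a glance.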

You can also view the top-performing and worst-performing data cohorts and subgroups, which are extracted automatically to help you find your model's blind spots:

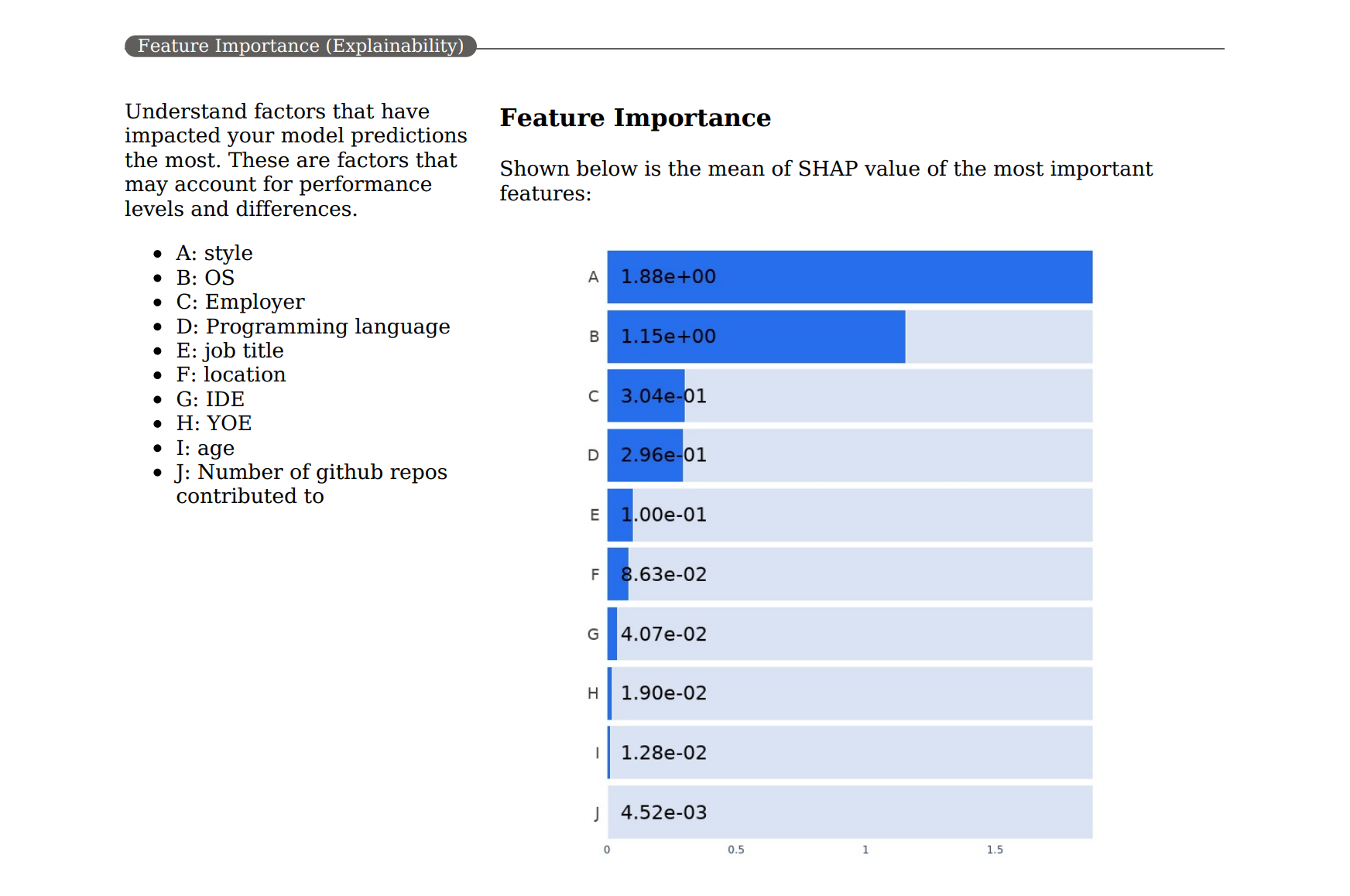
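
The scorecard extracts cohorts for you automatically. The sketch below skips that discovery step and shows only the ranking idea: given cohorts you've already defined, compute a metric (here, accuracy) per cohort and report the best and worst. The cohort names, data, and `cohort_accuracy` helper are hypothetical.

```python
from collections import defaultdict


def cohort_accuracy(y_true, y_pred, cohorts):
    """Return {cohort: accuracy} from parallel lists of labels, predictions, and cohort names."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, cohort in zip(y_true, y_pred, cohorts):
        total[cohort] += 1
        correct[cohort] += int(truth == pred)
    return {cohort: correct[cohort] / total[cohort] for cohort in total}


# Hypothetical labels, predictions, and cohort membership
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 1, 1, 0, 1]
cohorts = ["age<30", "age<30", "age<30", "age>=30",
           "age>=30", "age>=30", "age<30", "age>=30"]

acc = cohort_accuracy(y_true, y_pred, cohorts)
best = max(acc, key=acc.get)   # top-performing cohort
worst = min(acc, key=acc.get)  # worst-performing cohort (a potential blind spot)
print(acc)                     # {'age<30': 1.0, 'age>=30': 0.25}
print(best, worst)             # age<30 age>=30
```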

You can see the most important factors that affect your model's predictions, which is essential for building trust in how your model performs its task:

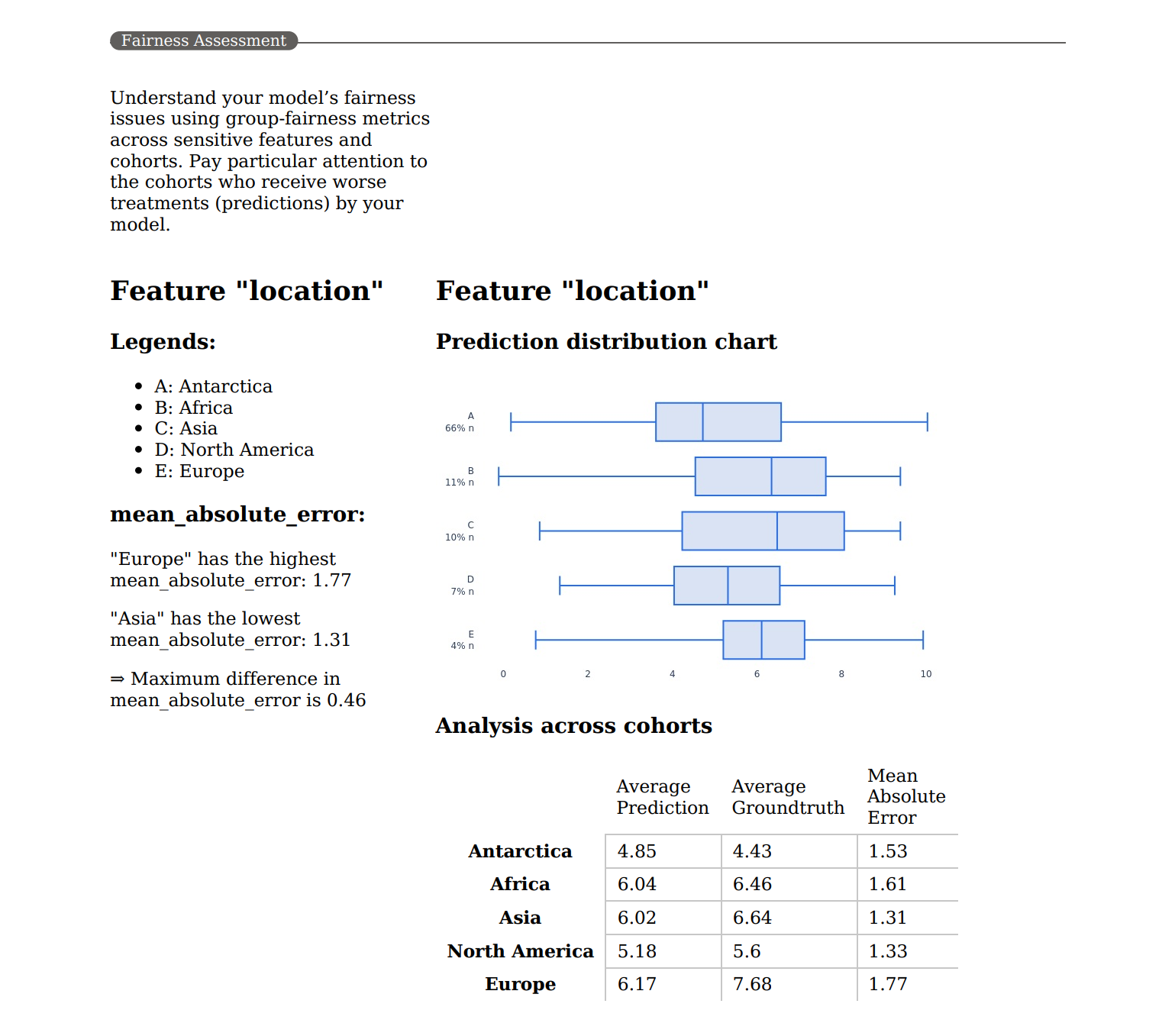
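
Per-row (local) importance values are often aggregated into a global ranking by averaging their absolute values, so that large positive and large negative contributions both count. The following sketch applies that approach to a few hypothetical rows and returns the top `n` features. It assumes a non-empty list in which every row has the same feature keys, and it isn't necessarily how the scorecard ranks features; the `top_n_features` helper is hypothetical.

```python
def top_n_features(importances, n):
    """Rank features by mean absolute importance across rows; return the top n.

    Assumes `importances` is a non-empty list of dicts that share the same keys.
    """
    features = importances[0].keys()
    mean_abs = {
        feature: sum(abs(row[feature]) for row in importances) / len(importances)
        for feature in features
    }
    # Highest mean absolute importance first
    ranked = sorted(mean_abs.items(), key=lambda item: item[1], reverse=True)
    return ranked[:n]


# Hypothetical per-row importance values for three predictions
importances = [
    {"income": 0.40, "age": -0.10, "tenure": 0.05},
    {"income": -0.20, "age": 0.30, "tenure": 0.01},
    {"income": 0.30, "age": -0.05, "tenure": -0.02},
]

for feature, score in top_n_features(importances, 2):
    print(f"{feature}: {score:.3f}")
```

Averaging absolute values keeps a feature like `income`, which pushes some predictions up and others down, from looking unimportant just because its signed contributions cancel out.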

You can also see a summary of your model's fairness insights and inspect how well your model satisfies the fairness target values you've set for your sensitive groups:

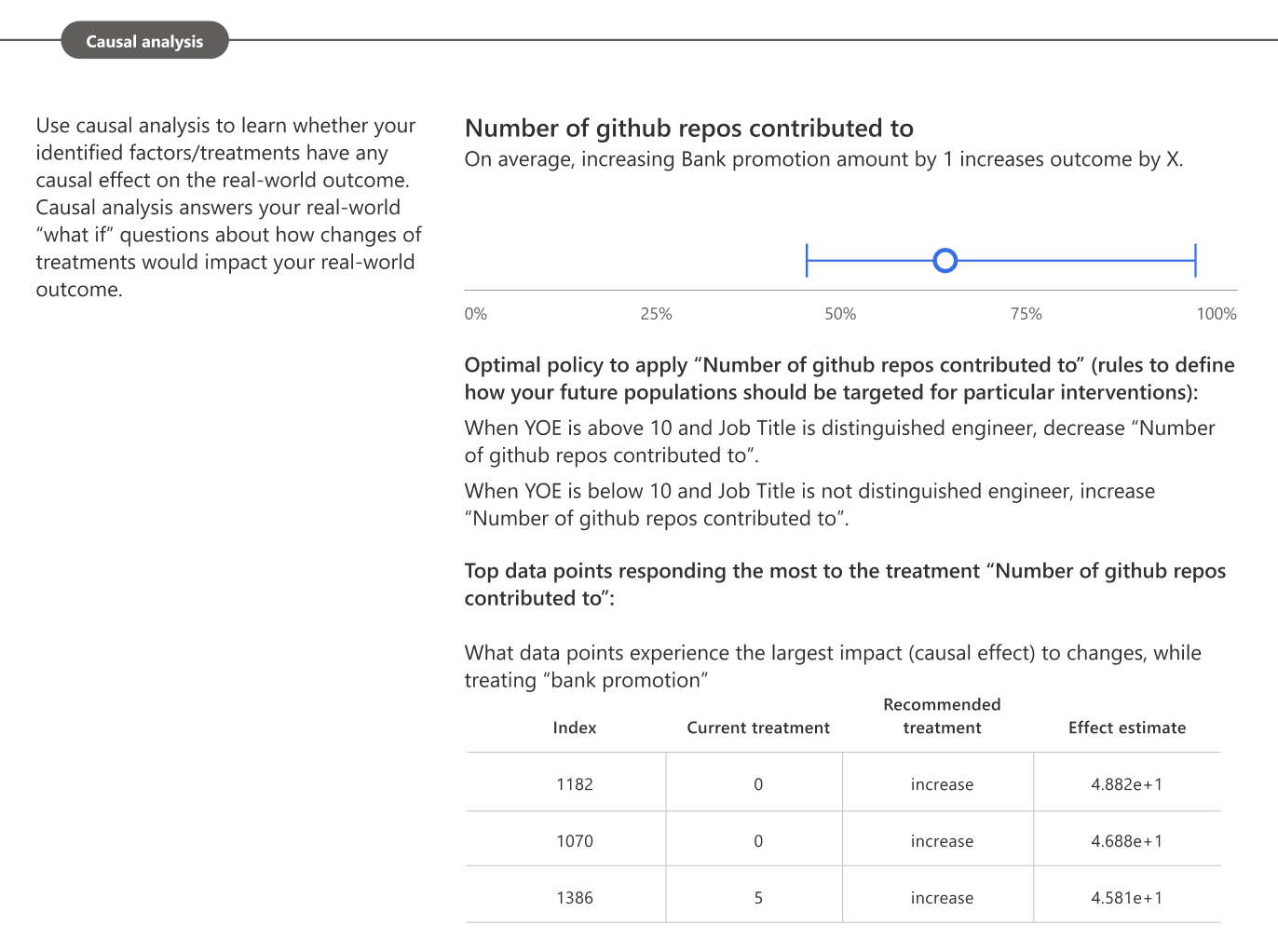
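
A fairness target is typically a bound on how much a metric varies across sensitive groups. The sketch below uses two common definitions: the difference between the best and worst group (0 means parity) and the ratio of the worst group to the best (1 means parity). The helper names, groups, data, and the `difference <= 0.2` target are hypothetical, and the scorecard's exact computation may differ.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def metric_by_group(y_true, y_pred, groups, metric):
    """Compute `metric` separately for each sensitive group."""
    result = {}
    for group in dict.fromkeys(groups):  # unique groups in first-seen order
        idx = [i for i, g in enumerate(groups) if g == group]
        result[group] = metric([y_true[i] for i in idx], [y_pred[i] for i in idx])
    return result


def disparity(by_group):
    """Return (difference, ratio) between the best and worst group."""
    high, low = max(by_group.values()), min(by_group.values())
    difference = high - low              # 0.0 means parity
    ratio = low / high if high else 1.0  # 1.0 means parity (assumes non-negative metrics)
    return difference, ratio


# Hypothetical labels, predictions, and sensitive-group membership
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 1, 1, 1, 0]
groups = ["A", "A", "A", "B", "B", "B"]

by_group = metric_by_group(y_true, y_pred, groups, accuracy)
difference, ratio = disparity(by_group)
print(by_group)
print(f"difference={difference:.2f}, ratio={ratio:.2f}")  # difference=0.67, ratio=0.33
print("meets hypothetical target (difference <= 0.2):", difference <= 0.2)
```

Here group B's accuracy trails group A's by about 0.67, so the model would fall short of a target that allows at most a 0.2 gap.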

Finally, the scorecard summarizes your dataset's causal insights, which can help you determine whether the factors or treatments you've identified have any causal effect on the real-world outcome.

Next steps

- See the how-to guide for generating a Responsible AI dashboard via CLI v2 and SDK v2 or the Azure Machine Learning studio UI.

- Learn more about the concepts and techniques behind the Responsible AI dashboard.

- View sample YAML and Python notebooks to generate a Responsible AI dashboard with YAML or Python.

- Learn more about how you can use the Responsible AI dashboard and scorecard to debug data and models and inform better decision-making in this tech community blog post.

- Learn about how the Responsible AI dashboard and scorecard were used by the UK National Health Service (NHS) in a real-life customer story.

- Explore the features of the Responsible AI dashboard through this interactive AI lab web demo.
