Filter and ingest to Azure Synapse SQL using the Stream Analytics no code editor

This article describes how to use the no code editor to create a Stream Analytics job that continuously reads data from your Event Hubs instance, filters the incoming data, and writes the results to a Synapse SQL table.

Prerequisites

- Your Azure Event Hubs resources must be publicly accessible and can't be behind a firewall or secured in an Azure Virtual Network.

- The data in your Event Hubs must be serialized in JSON, CSV, or Avro format.
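To illustrate the serialization requirement, the following minimal Python sketch serializes the same event as JSON and as CSV using only the standard library. The event and its field names (`deviceId`, `temperature`, `timestamp`) are hypothetical, UTF-8 encoding is an assumption, and Avro is omitted because it needs a third-party library.

```python
import csv
import io
import json

# Hypothetical telemetry event; the field names are illustrative only.
EVENT = {"deviceId": "sensor-01", "temperature": 27.5, "timestamp": "2024-01-15T10:00:00Z"}


def to_json(record: dict) -> bytes:
    """Serialize one event as a UTF-8 encoded JSON object."""
    return json.dumps(record).encode("utf-8")


def to_csv(records: list[dict]) -> bytes:
    """Serialize events as UTF-8 encoded CSV with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0].keys()))
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue().encode("utf-8")


if __name__ == "__main__":
    print(to_json(EVENT).decode("utf-8"))
    print(to_csv([EVENT]).decode("utf-8"))
```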

Develop a Stream Analytics job to filter and ingest data

Use the following steps to develop a Stream Analytics job that filters and ingests real-time data into a Synapse SQL table.

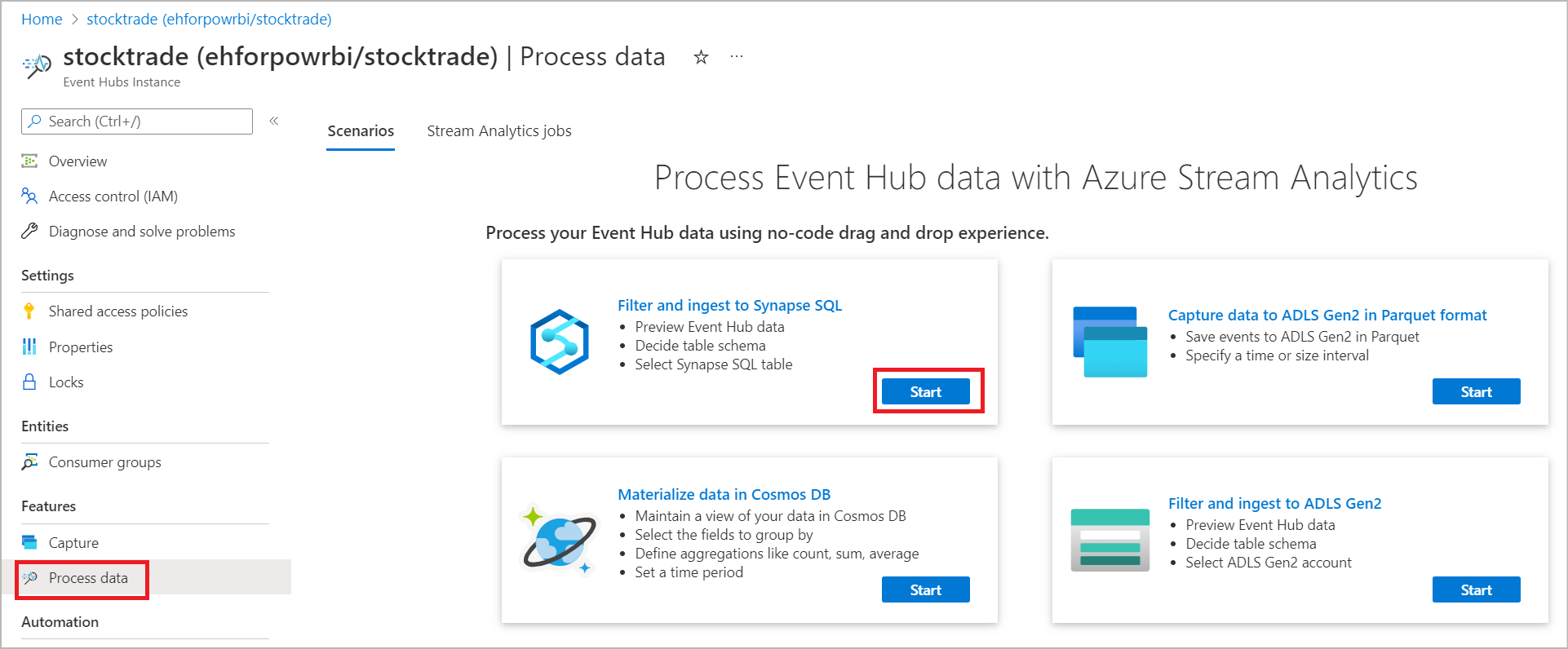

In the Azure portal, locate and select your Azure Event Hubs instance.

Select Features > Process Data, and select Start on the Filter and ingest to Synapse SQL card.

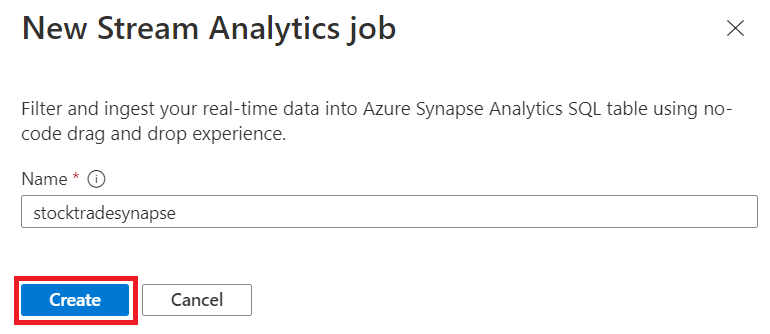

Enter a name to identify your Stream Analytics job, then select Create.

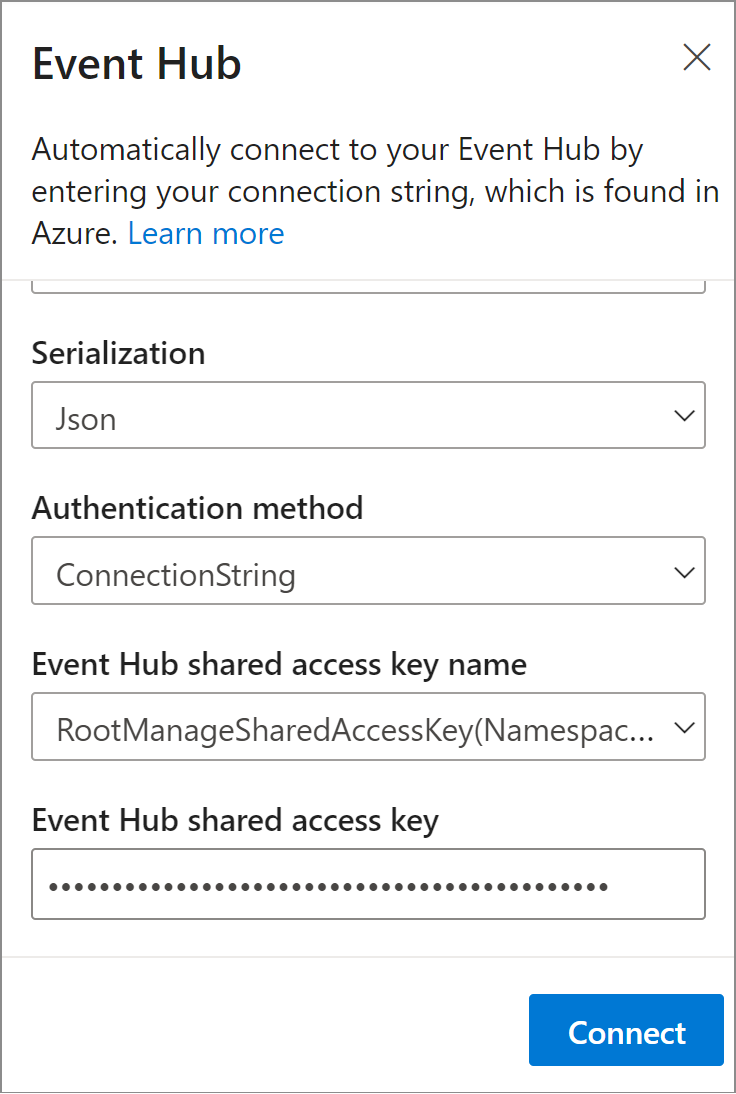

In the Event Hubs window, specify the Serialization type of your data and the Authentication method that the job uses to connect to Event Hubs. Then select Connect.

When the connection is established successfully and you have data streams flowing into your Event Hubs instance, you'll immediately see two things:

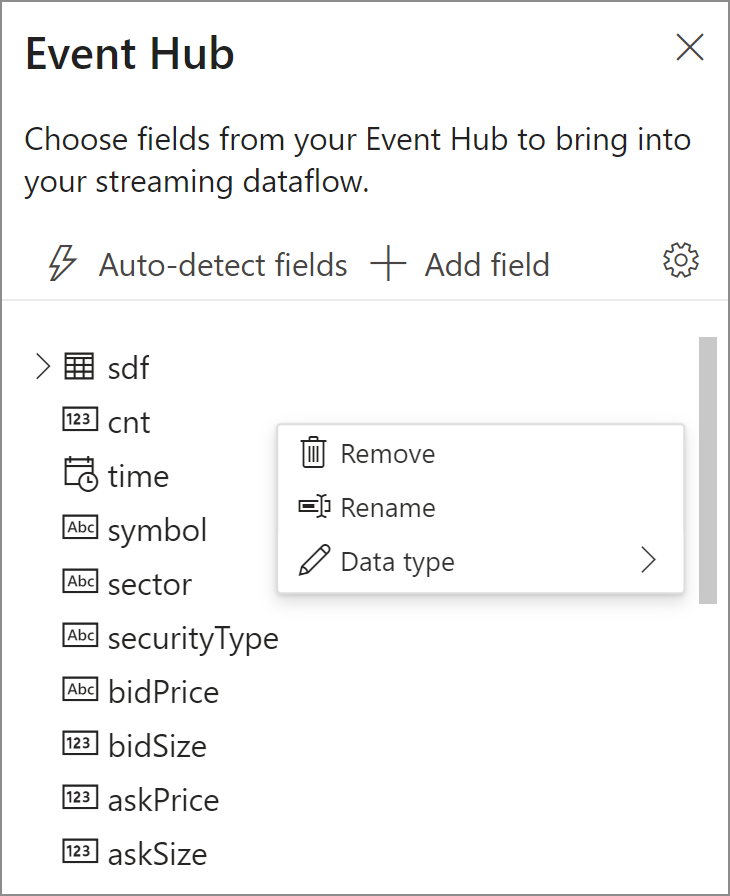

- Fields that are present in the input data. You can select Add field, or select the three-dot symbol (...) next to a field to remove, rename, or change the type of that field.

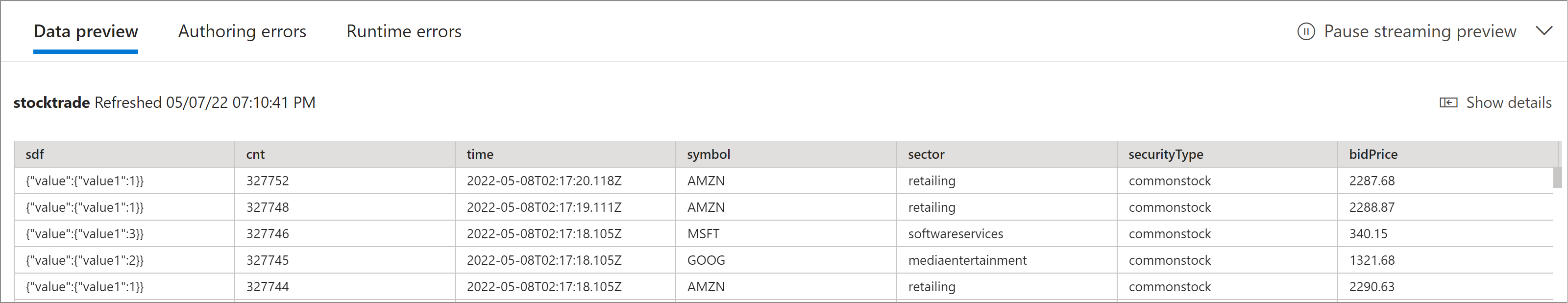

- A live sample of incoming data in the Data preview table under the diagram view. The table refreshes periodically. You can select Pause streaming preview to see a static view of the sample input data.

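The editor applies these field operations for you. To show what removing, renaming, and changing the type of a field do to a single event, here is a minimal Python sketch over a plain dictionary. The helper names and the sample event are hypothetical and aren't part of Stream Analytics.

```python
from typing import Any, Callable

Event = dict[str, Any]


def remove_field(event: Event, name: str) -> Event:
    """Return a copy of the event without the named field."""
    return {key: value for key, value in event.items() if key != name}


def rename_field(event: Event, old: str, new: str) -> Event:
    """Return a copy of the event with one field renamed, keeping field order."""
    return {(new if key == old else key): value for key, value in event.items()}


def change_type(event: Event, name: str, cast: Callable[[Any], Any]) -> Event:
    """Return a copy of the event with one field converted by `cast`."""
    updated = dict(event)
    updated[name] = cast(updated[name])
    return updated


if __name__ == "__main__":
    raw = {"deviceId": "sensor-01", "temperature": "27.5", "debug": True}
    step1 = remove_field(raw, "debug")
    step2 = rename_field(step1, "deviceId", "device_id")
    shaped = change_type(step2, "temperature", float)
    print(shaped)  # {'device_id': 'sensor-01', 'temperature': 27.5}
```

Each helper returns a new dictionary, so the original event is never modified.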

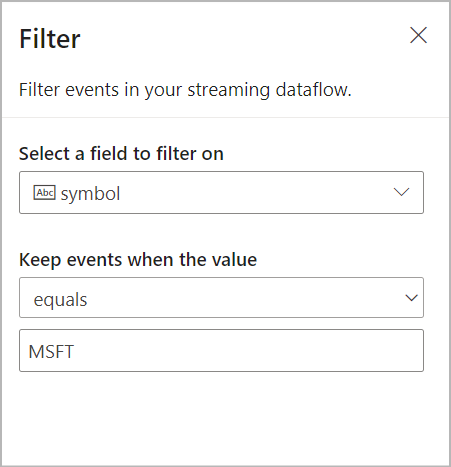

In the Filter area, select a field to filter the incoming data with a condition.
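Conceptually, the filter keeps only the events that satisfy the condition you choose, much like a WHERE clause in a query. The following minimal Python sketch models that behavior; the comparison names and sample events are hypothetical, and the editor generates the actual job logic for you.

```python
import operator
from typing import Any, Callable, Iterable, Iterator

Event = dict[str, Any]

# Hypothetical comparison names; the editor's own drop-down labels may differ.
COMPARISONS: dict[str, Callable[[Any, Any], bool]] = {
    "equals": operator.eq,
    "not equals": operator.ne,
    "greater than": operator.gt,
    "greater than or equal": operator.ge,
    "less than": operator.lt,
    "less than or equal": operator.le,
}


def filter_events(events: Iterable[Event], field: str, comparison: str, value: Any) -> Iterator[Event]:
    """Yield only the events whose `field` satisfies the comparison against `value`."""
    compare = COMPARISONS[comparison]
    for event in events:
        # In this sketch, events that lack the field are skipped instead of raising KeyError.
        if field in event and compare(event[field], value):
            yield event


if __name__ == "__main__":
    stream = [
        {"deviceId": "sensor-01", "temperature": 27.5},
        {"deviceId": "sensor-02", "temperature": 19.0},
        {"deviceId": "sensor-03"},
    ]
    # Roughly analogous to a condition such as: WHERE temperature > 25
    print(list(filter_events(stream, "temperature", "greater than", 25)))
```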

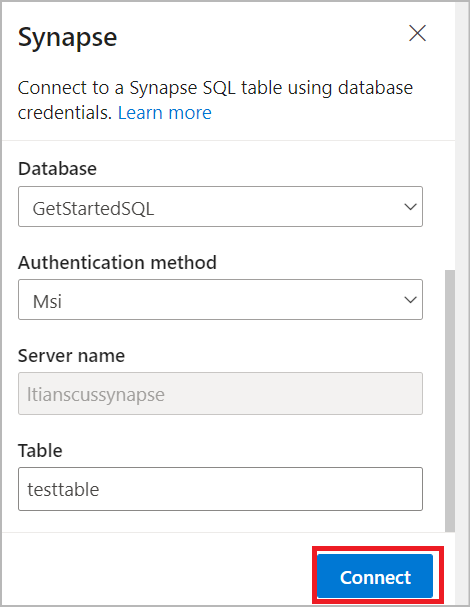

Select the Synapse SQL table to send your filtered data to:

- Select the Subscription, Database (dedicated SQL pool name), and Authentication method from the drop-down menus.

- Enter the Table name where the filtered data will be ingested, and then select Connect.

Note

The table schema must exactly match the number of fields and their types that your data preview generates.
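Because the table schema must match the data preview exactly, it can help to compare a sample row with the table definition before you connect. The following Python sketch checks one preview row against a list of (column name, SQL type) pairs. The type mapping is a hypothetical, deliberately small example, and pairing fields with columns by name is an assumption of this sketch.

```python
from typing import Any

# Hypothetical compatibility map from Python value types to SQL column types.
# Types are given without a length (nvarchar, not nvarchar(50)); adjust to your table.
COMPATIBLE_SQL_TYPES: dict[type, set[str]] = {
    str: {"nvarchar", "varchar"},
    int: {"bigint", "int"},
    float: {"float", "real"},
    bool: {"bit"},
}


def schema_mismatches(row: dict[str, Any], columns: list[tuple[str, str]]) -> list[str]:
    """List the differences between one preview row and a table's (name, type) columns."""
    problems = []
    if len(row) != len(columns):
        problems.append(f"row has {len(row)} fields but table has {len(columns)} columns")
    column_types = {name: sql_type.lower() for name, sql_type in columns}
    for name, value in row.items():
        sql_type = column_types.get(name)
        if sql_type is None:
            problems.append(f"no column named {name!r}")
        elif sql_type not in COMPATIBLE_SQL_TYPES.get(type(value), set()):
            # type() rather than isinstance() so that a bool isn't accepted as an int.
            problems.append(f"{name!r}: {type(value).__name__} value doesn't fit {sql_type}")
    return problems


if __name__ == "__main__":
    preview_row = {"device_id": "sensor-01", "temperature": 27.5}
    print(schema_mismatches(preview_row, [("device_id", "nvarchar"), ("temperature", "float")]))  # []
```

An empty list means the row and the table agree under these assumptions.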

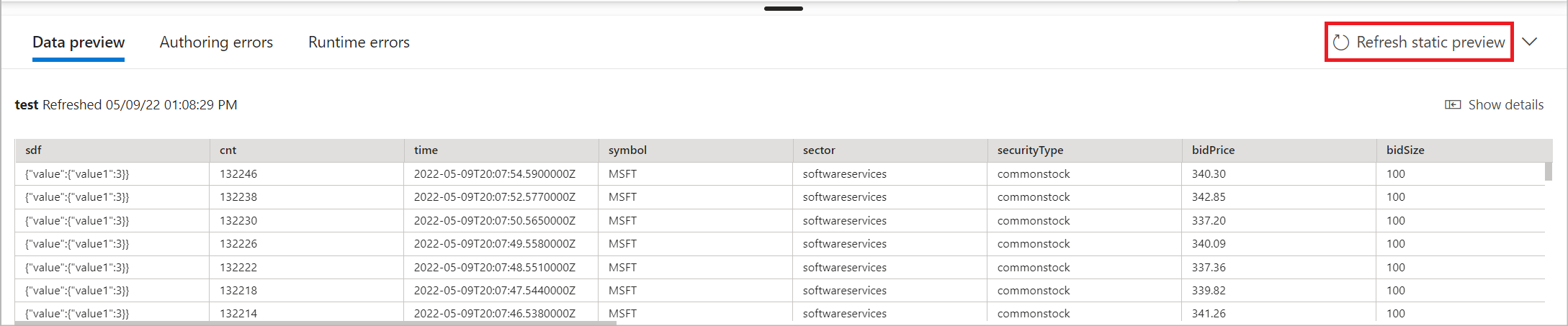

Optionally, select Get static preview/Refresh static preview to see a preview of the data that will be ingested into the selected Synapse SQL table.

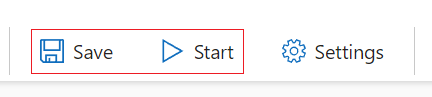

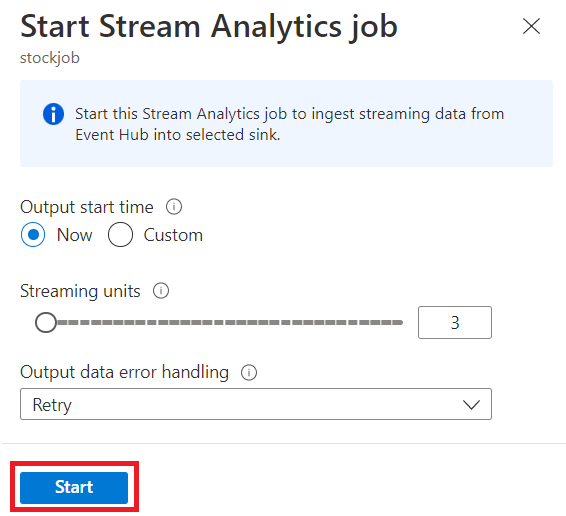

To start the job, specify:

- The number of Streaming Units (SUs) the job runs with. SUs represent the amount of compute and memory allocated to the job. We recommend that you start with three SUs and then adjust as needed.

- Output data error handling: the behavior you want when the job's output to your destination fails because of data errors. By default, the job retries until the write operation succeeds. You can also choose to drop such output events.
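The two error-handling choices differ in what happens to an event that the destination rejects. The following Python sketch models those semantics only; it isn't how Stream Analytics implements them. The `DataError` exception, the `write` callback, and the `max_attempts` parameter are hypothetical.

```python
from typing import Any, Callable, Optional

Event = dict[str, Any]


class DataError(Exception):
    """Hypothetical error for a row the destination rejects, such as a type mismatch."""


def write_with_policy(
    events: list[Event],
    write: Callable[[Event], None],
    policy: str,
    max_attempts: Optional[int] = None,
) -> tuple[list[Event], list[Event]]:
    """Write events in order under a "retry" or "drop" policy; return (written, dropped)."""
    if policy not in ("retry", "drop"):
        raise ValueError(f"unknown policy: {policy!r}")
    written: list[Event] = []
    dropped: list[Event] = []
    for event in events:
        attempts = 0
        while True:
            attempts += 1
            try:
                write(event)
                written.append(event)
                break
            except DataError:
                if policy == "drop":
                    # Drop policy: discard the failing event and continue with the next one.
                    dropped.append(event)
                    break
                # Retry policy: try the same event again. max_attempts is a sketch-only
                # safety valve; without it, an event that can never be written blocks
                # every event after it.
                if max_attempts is not None and attempts >= max_attempts:
                    raise
    return written, dropped


if __name__ == "__main__":
    def strict_write(event: Event) -> None:
        # Hypothetical destination that only accepts float temperatures.
        if not isinstance(event["temperature"], float):
            raise DataError(f"bad temperature: {event['temperature']!r}")

    sample = [{"temperature": 27.5}, {"temperature": "n/a"}, {"temperature": 19.0}]
    written, dropped = write_with_policy(sample, strict_write, "drop")
    print(len(written), len(dropped))  # 2 1
```

Because a data error usually fails the same way on every attempt, the retry policy in this sketch stalls on the bad event, while the drop policy trades that event for continued progress.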

After you select Start, the job starts running within two minutes, and its metrics open in the tab section below.

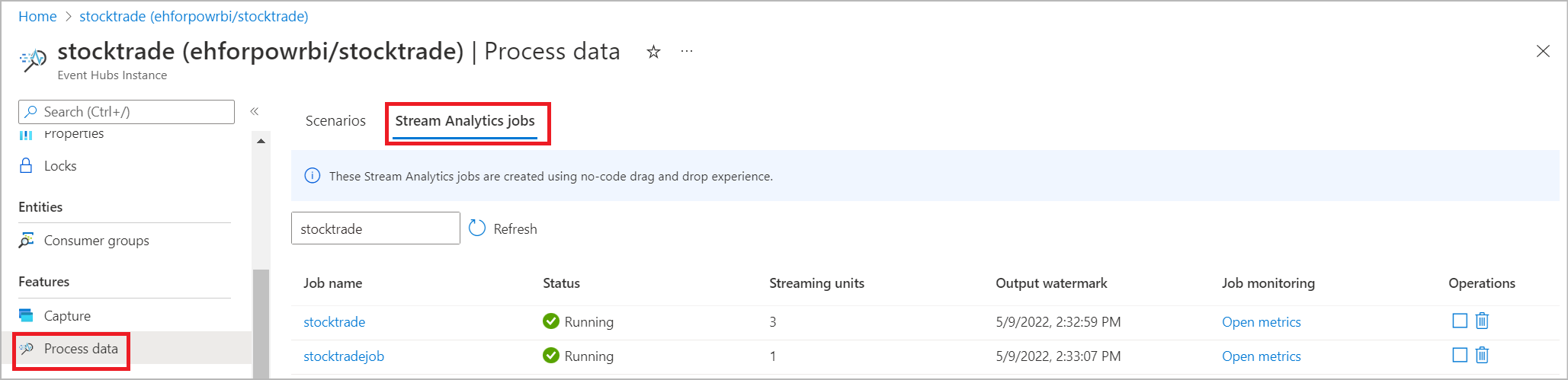

You can also see the job under the Process Data section on the Stream Analytics jobs tab. Select Open metrics to monitor the job, or stop and restart it as needed.

Next steps

Learn more about Azure Stream Analytics and how to monitor the job you've created.
