Events

Mar 31, 11 PM - Apr 2, 11 PM

The biggest Fabric, Power BI, and SQL learning event. March 31 – April 2. Use code FABINSIDER to save $400.

Register today

This article shows you how to add a Confluent Cloud Kafka source to an eventstream.

Confluent Cloud Kafka is a streaming platform, based on Apache Kafka, that provides data streaming and processing capabilities. By adding Confluent Cloud Kafka as a source to your eventstream, you can process real-time data streams before routing them to multiple destinations in Fabric.

Note

This source is not supported in the following regions of your workspace capacity: West US3, Switzerland West.

If you haven't added any source to your eventstream yet, select the Use external source tile.

If you're adding the source to an already published eventstream, switch to Edit mode, select Add source on the ribbon, and then select External sources.

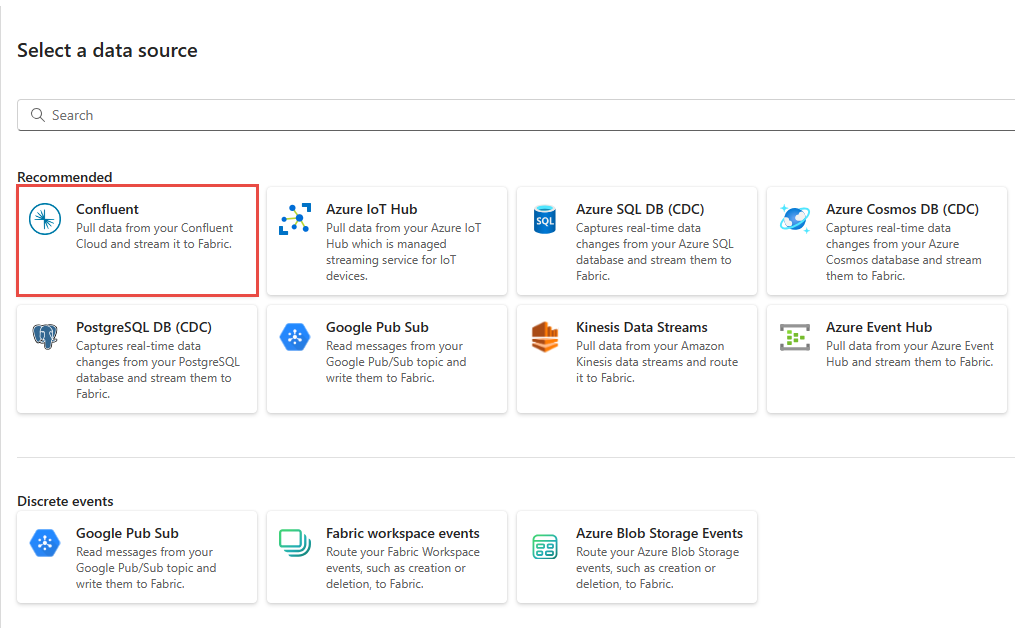

On the Select a data source page, select Confluent.

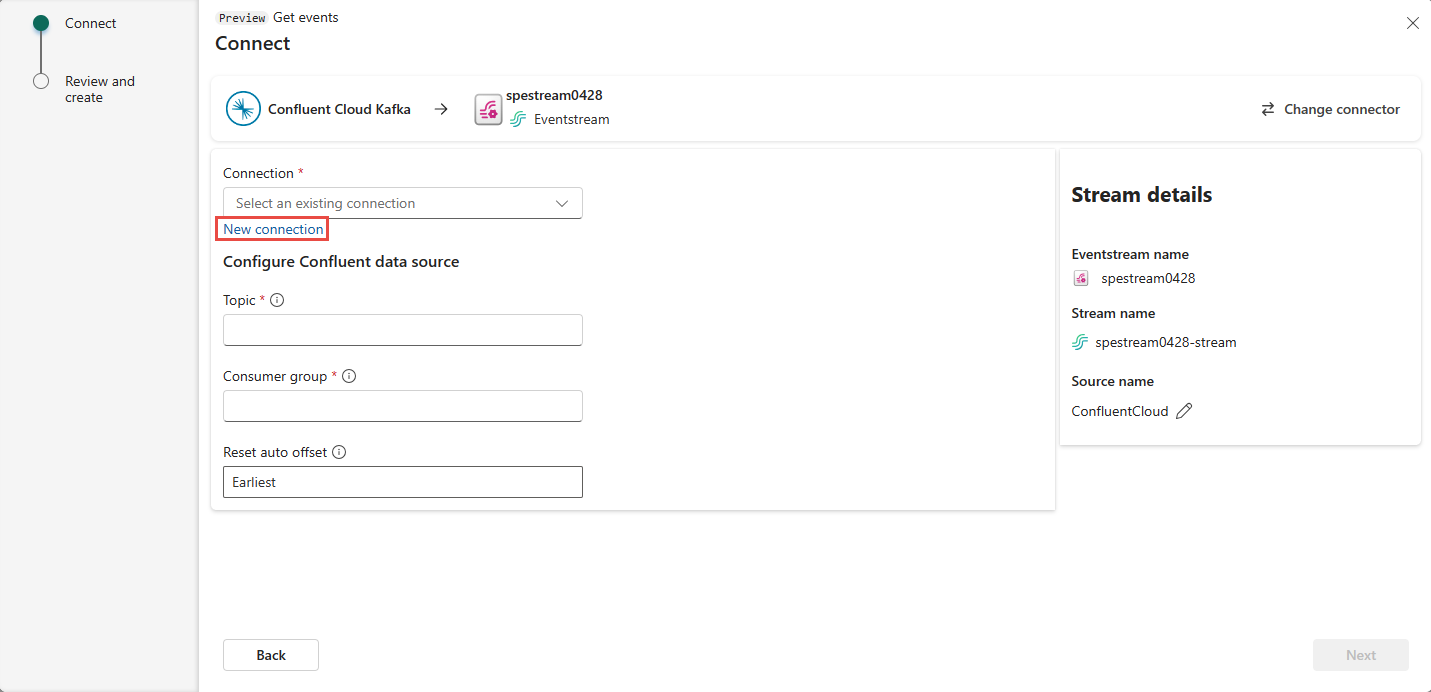

To create a connection to the Confluent Cloud Kafka source, select New connection.

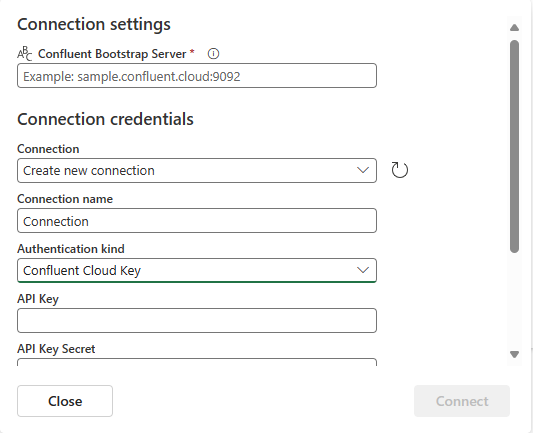

In the Connection settings section, enter the Confluent Bootstrap Server address. To find it, go to your Confluent Cloud home page, select Cluster Settings, and copy the Bootstrap server address.
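The bootstrap server value is a single host:port address. If you want to sanity-check what you copied before pasting it, a minimal Python sketch like the following splits and validates it. The helper name parse_bootstrap_server and the sample host name are hypothetical, and stripping an optional SASL_SSL:// prefix is an assumption about what might get copied along with the address.

```python
def parse_bootstrap_server(address: str) -> tuple[str, int]:
    """Split a 'host:port' bootstrap address into host and port.

    Hypothetical helper: assumes the copied value is one 'host:port' entry.
    """
    address = address.strip()
    # Assumption: drop a scheme prefix such as 'SASL_SSL://' if one was pasted.
    if "://" in address:
        address = address.split("://", 1)[1]
    # rpartition splits on the last ':' so the host keeps any earlier text.
    host, sep, port_text = address.rpartition(":")
    if not sep or not host:
        raise ValueError(f"expected 'host:port', got {address!r}")
    if not port_text.isdigit():
        raise ValueError(f"port must be numeric, got {port_text!r}")
    port = int(port_text)
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    return host, port
```

For example, parse_bootstrap_server("pkc-example.westus2.azure.confluent.cloud:9092") returns the host name and the port 9092 as separate values; a value without a port raises ValueError.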

In the Connection credentials section, if you have an existing connection to the Confluent cluster, select it from the Connection drop-down list. Otherwise, follow these steps:

Go to your cluster in Confluent Cloud.

Select API Keys on the side menu.

Select the Add key button to create a new API key.

Copy the API Key and Secret.

Paste those values into the API Key and API Key Secret fields.

Select Connect.
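The API key and secret are the same credentials any Kafka client uses to reach a Confluent Cloud cluster: the key acts as the SASL/PLAIN username and the secret as the password, over TLS. If you want to confirm the credentials work outside Fabric, the sketch below builds the standard client settings for that case. The function name is hypothetical, and this does not describe how Fabric stores or uses the connection internally.

```python
def confluent_cloud_client_config(bootstrap_server: str, api_key: str, api_secret: str) -> dict[str, str]:
    """Build a librdkafka-style config dict for a Confluent Cloud cluster.

    Hypothetical helper: Confluent Cloud clients authenticate with SASL/PLAIN
    over TLS, using the API key as username and the API secret as password.
    """
    for name, value in (("bootstrap_server", bootstrap_server),
                        ("api_key", api_key),
                        ("api_secret", api_secret)):
        if not value or not value.strip():
            raise ValueError(f"{name} must not be empty")
    return {
        "bootstrap.servers": bootstrap_server.strip(),
        "security.protocol": "SASL_SSL",  # TLS-encrypted SASL connection
        "sasl.mechanisms": "PLAIN",
        # strip() removes stray whitespace picked up when copying the values
        "sasl.username": api_key.strip(),
        "sasl.password": api_secret.strip(),
    }
```

The resulting dictionary uses librdkafka property names, so it could be passed, together with a group.id, to a client such as confluent_kafka.Consumer. Read the secret from an environment variable or a secret store rather than hard-coding it.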

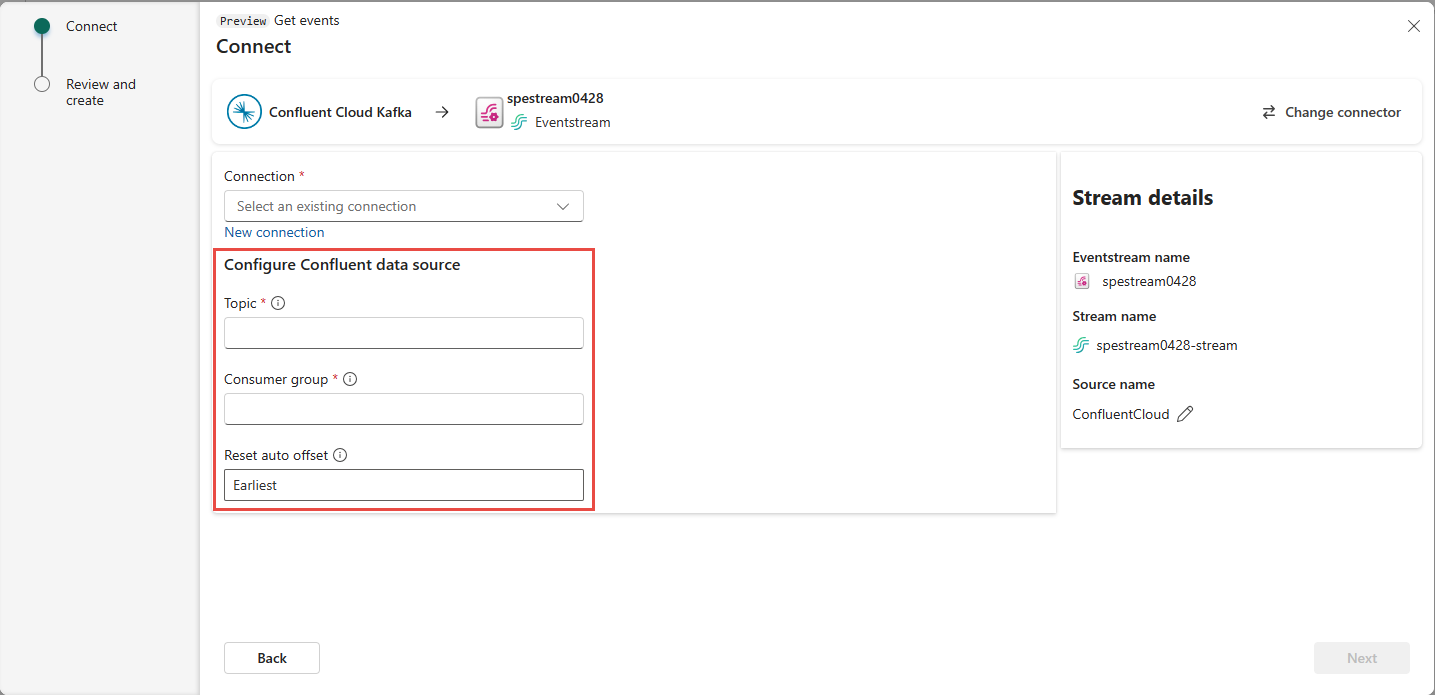

Scroll down to the Configure Confluent data source section, and enter the information to complete the configuration of the Confluent data source. For the offset setting, select one of these values:

Earliest – start from the earliest data available in your Confluent cluster.

Latest – start from the latest available data.

None – don't automatically set the offset.
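Earliest, Latest, and None match the values of Kafka's standard auto.offset.reset consumer setting. Assuming the Fabric option follows the same semantics (this article doesn't state it explicitly), the Python sketch below models which offset a consumer starts reading a partition from. All names are hypothetical, and raising LookupError for None stands in for the error a Kafka client reports when no usable offset exists.

```python
from enum import Enum
from typing import Optional


class OffsetReset(Enum):
    """Values mirroring Kafka's auto.offset.reset setting."""
    EARLIEST = "earliest"
    LATEST = "latest"
    NONE = "none"


def starting_offset(policy: OffsetReset, committed: Optional[int],
                    log_start: int, log_end: int) -> int:
    """Return the offset a consumer would start reading a partition from.

    committed: offset previously committed by the consumer group, or None.
    log_start: first offset still retained in the partition.
    log_end:   offset that the next produced record will receive.
    """
    # A committed offset inside the retained range always wins; the reset
    # policy applies only when there is none or it has fallen out of range.
    if committed is not None and log_start <= committed <= log_end:
        return committed
    if policy is OffsetReset.EARLIEST:
        return log_start
    if policy is OffsetReset.LATEST:
        return log_end
    # 'none': no offset is chosen automatically, so report an error instead.
    raise LookupError("no valid committed offset and reset policy is 'none'")
```

In this model, a valid committed offset from the consumer group always takes precedence; the reset option only decides where to start when no such offset exists or retention has already deleted it.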

Select Next. On the Review and create screen, review the summary, and then select Add.

The Confluent Cloud Kafka source is now added to your eventstream on the canvas in Edit mode. To apply the change, select Publish on the ribbon.

After you complete these steps, the Confluent Cloud Kafka source is available for visualization in Live view.

Note

The maximum number of sources and destinations for one eventstream is 11.
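Because this limit counts sources and destinations together, checking whether a planned eventstream fits is simple arithmetic. A minimal sketch with a hypothetical helper name:

```python
MAX_SOURCES_AND_DESTINATIONS = 11  # combined limit per eventstream, from the note above


def remaining_capacity(num_sources: int, num_destinations: int) -> int:
    """Return how many more sources or destinations fit in one eventstream."""
    if num_sources < 0 or num_destinations < 0:
        raise ValueError("counts must be non-negative")
    used = num_sources + num_destinations
    if used > MAX_SOURCES_AND_DESTINATIONS:
        raise ValueError(f"{used} exceeds the limit of {MAX_SOURCES_AND_DESTINATIONS}")
    return MAX_SOURCES_AND_DESTINATIONS - used
```

For example, an eventstream with one Confluent Cloud Kafka source and three destinations has room for seven more sources or destinations.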

Other connectors:
