Create and manage communication compliance policies

Important

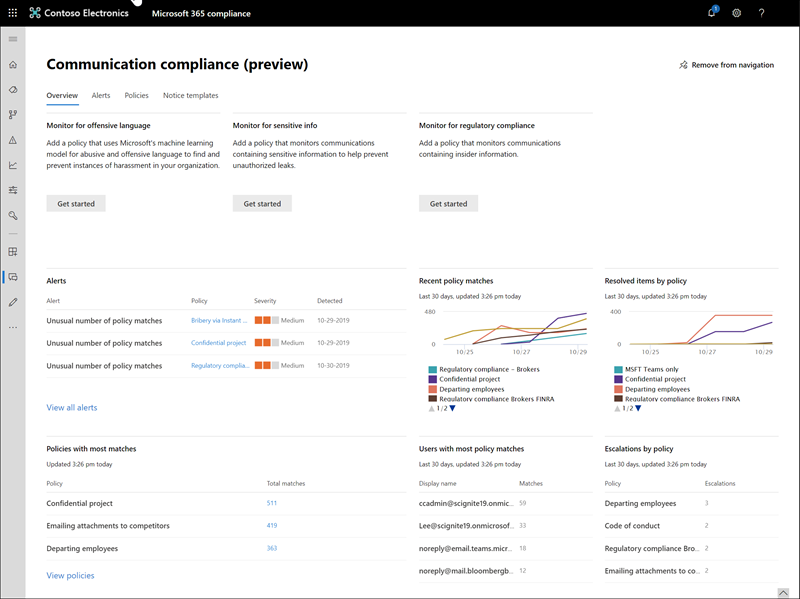

Microsoft Purview Communication Compliance provides the tools to help organizations detect regulatory compliance violations (for example, SEC or FINRA), such as sharing of sensitive or confidential information, harassing or threatening language, and sharing of adult content. The solution is built with privacy by design: usernames are pseudonymized by default, role-based access controls are built in, investigators are opted in by an admin, and audit logs are in place to ensure user-level privacy.

Tip

If you're not an E5 customer, use the 90-day Microsoft Purview solutions trial to explore how additional Purview capabilities can help your organization manage data security and compliance needs. Start now at the Microsoft Purview compliance portal trials hub. Learn details about signing up and trial terms.

Policies

Important

Using PowerShell to create and manage communication compliance policies is not supported. To create and manage these policies, you must use the policy management controls in the Communication Compliance solution.

You create communication compliance policies for Microsoft 365 organizations in the Microsoft Purview compliance portal. Communication compliance policies define which communications and users are subject to review in your organization, define which custom conditions the communications must meet, and specify who should do reviews. Users assigned the Communication Compliance Admins role can set up policies, and anyone who has this role assigned can access the Communication compliance page and global settings in Microsoft Purview. If needed, you can export the history of modifications to a policy to a .csv (comma-separated values) file that also includes the status of alerts pending review, escalated items, and resolved items. Policies can't be renamed and can be deleted when no longer needed.

Policy templates

Policy templates are predefined policy settings that you can use to quickly create policies to address common compliance scenarios. Each of these templates has differences in conditions and scope, and all templates use the same types of detection signals. You can choose from the following policy templates:

| Area | Policy template | Details |
|---|---|---|
| Copilot interactions | Detect Copilot for Microsoft 365 interactions | - Location: Copilot for Microsoft 365 <br> - Direction: Inbound, Outbound, Internal <br> - Review Percentage: 100% <br> - Conditions: No conditions are selected by default, but you must select at least one condition. |
| Inappropriate content | Detect inappropriate content (preview) | - Location: Microsoft Teams <br> - Direction: Inbound, Outbound, Internal <br> - Review Percentage: 100% <br> - Conditions: Hate, Violence, Sexual, Self-harm classifiers |
| Inappropriate text | Detect inappropriate text | - Locations: Exchange Online, Microsoft Teams, Viva Engage <br> - Direction: Inbound, Outbound, Internal <br> - Review Percentage: 100% <br> - Conditions: Threat, Discrimination, and Targeted harassment classifiers |
| Inappropriate images | Detect inappropriate images | - Locations: Exchange Online, Microsoft Teams <br> - Direction: Inbound, Outbound, Internal <br> - Review Percentage: 100% <br> - Conditions: Adult and Racy image classifiers |
| Sensitive information | Detect sensitive info types | - Locations: Exchange Online, Microsoft Teams, Viva Engage <br> - Direction: Inbound, Outbound, Internal <br> - Review Percentage: 10% <br> - Conditions: Sensitive information, out-of-the-box content patterns, and types, custom dictionary option, attachments larger than 1 MB |
| Regulatory compliance | Detect financial regulatory compliance | - Locations: Exchange Online, Microsoft Teams, Viva Engage <br> - Direction: Inbound, Outbound <br> - Review Percentage: 10% <br> - Conditions: Customer complaints, Gifts & entertainment, Money laundering, Regulatory collusion, Stock manipulation, and Unauthorized disclosure classifiers |
| Conflict of interest | Detect conflict of interest | - Locations: Exchange Online, Microsoft Teams, Viva Engage <br> - Direction: Internal <br> - Review Percentage: 100% <br> - Conditions: None |

Communications are scanned every 24 hours from the time policies are created. For example, if you create an inappropriate content policy at 11:00 AM, the policy gathers communication compliance signals daily at 11:00 AM. Editing a policy doesn't change this time. To view the date and time (in Coordinated Universal Time, UTC) of the last scan for a policy, go to the Last policy scan column on the Policy page. After you create a new policy, it may take up to 24 hours to view the first policy scan date and time.

User-reported messages policy

Note

The User-reported messages policy is implemented for your organization when you purchase a license that includes Microsoft Purview Communication Compliance. However, it can take up to 30 days after the license is purchased for this feature to become available.

As part of a layered defense to detect and remediate inappropriate messages in your organization, you can supplement communication compliance policies with user-reported messages in Microsoft Teams and Viva Engage (preview). To help foster a safe and compliant work environment, this feature empowers users in your organization to self-report inappropriate internal Teams chat messages and Viva Engage conversations, such as harassing or threatening language, sharing of adult content, and sharing of sensitive or confidential information.

Microsoft Teams

Enabled by default in the Teams admin center, the Report inappropriate content option in Teams messages allows users in your organization to submit inappropriate internal personal and group chat messages for review by communication compliance reviewers for the policy. These messages are supported by a default system policy that supports reporting messages in Teams group and private chats.

When a user submits a Teams chat message for review, the message is copied to the User-reported message policy. Reported messages initially remain visible to all chat members and there isn't any notification to chat members or the submitter that a message has been reported in channel, private, or group chats. A user can't report the same message more than once and the message remains visible to all users included in the chat session during the policy review process.

During the review process, communication compliance reviewers can perform all the standard remediation actions on the message, including removing the message from the Teams chat. Depending on how the messages are remediated, the message sender and recipients will see different notification messages in Teams chats after the review.

Important

If a user reports a message that was sent before they were added to a chat, Teams message remediation is not supported; the Teams message can't be removed from the chat.

User-reported messages from Teams chats are the only messages processed by the User-reported message policy and only the assigned reviewers for the policy can be modified. All other policy properties aren't editable. When the policy is created, the initial reviewers assigned to the policy are all members of the Communication Compliance Admins role group (if populated with at least one user) or all members of your organization's Global Admin role group. The policy creator is a randomly selected user from the Communication Compliance Admins role group (if populated with at least one user) or a randomly selected user from your organization's Global Admin role group.

Admins should immediately assign custom reviewers to this policy as appropriate for your organization. This may include reviewers such as your Compliance Officer, Risk Officer, or members of your Human Resources department.

Customize the reviewers for chat messages submitted as user-reported messages

To learn more about the Microsoft Purview portal, see Microsoft Purview portal. To learn more about the Compliance portal, see Microsoft Purview compliance portal.

- Sign in to the Microsoft Purview portal using credentials for an admin account in your Microsoft 365 organization.

- Go to the Communication Compliance solution.

- Select Policies in the left navigation.

- Select the User-reported messages policy, and then select Edit.

- On the Detect user-reported messages pane, assign reviewers for the policy. Reviewers must have mailboxes hosted on Exchange Online. When reviewers are added to a policy, they automatically receive an email message that notifies them of the assignment to the policy and provides links to information about the review process.

- Select Save.

The Report inappropriate content option is enabled by default and can be controlled via Teams messaging policies in the Teams Admin Center. Users in your organization automatically receive the global policy, unless you create and assign a custom policy. Edit the settings in the global policy or create and assign one or more custom policies to turn on or turn off the Report inappropriate content option. To learn more, see Manage messaging policies in Teams.

Important

If you're using PowerShell to turn on or turn off the End user reporting option in the Teams Admin Center, you must use Microsoft Teams cmdlets module version 4.2.0 or later.

Viva Engage

The Report Conversations option is off by default in the Viva Engage admin center. When you turn this option on, you see different options depending on whether you have a license that includes communication compliance:

Licenses that don't include communication compliance. If you don't have a license that includes communication compliance, when the option is turned on, the Viva Engage admin can specify an email address to receive reported conversations. The admin can also enter pre-submission instructions and post-submission confirmations for the user. Learn more about enabling the Report Conversations option if you don't have a license that includes communication compliance.

Licenses that do include communication compliance. If you do have a license that includes communication compliance, when the option is turned on, any reported conversations are automatically routed through communication compliance for investigation.

How upgrades and downgrades in licensing affect the reported conversations workflow

If a customer has a license that doesn't include communication compliance and upgrades to a license that does include communication compliance and has turned on the Report Conversations option, reported conversations are automatically rerouted to communication compliance. The Viva Engage admin can continue to access earlier reported conversations and can view new reported conversations if they're added as an investigator in communication compliance.

If a customer has a license that includes communication compliance and downgrades to a license that doesn't include communication compliance and has turned on the Report Conversations option, the workflow reverts to the process described in this article for customers that don't have a communication compliance license. To continue to use the feature, an admin must turn on the Report Conversations option in the Viva Engage admin center again and specify an email address to send reported conversations to.

User experience for customers that have a license that includes communication compliance

If you choose to route user-reported conversations through communication compliance, you can create pre-submission instructions for the user.

To report a conversation in Viva Engage, the user selects the ellipsis (three dots) button, and then selects Report conversation.

Note

The command name changes to Report comment, Report question, or Report answer depending on where the user is in Viva Engage or Answers in Viva.

If the report is submitted successfully, the user sees a confirmation message.

When a conversation is reported, the conversation is copied to the communication compliance User-reported message policy. The communication compliance investigator can review reported conversations in the User Reported policy inbox and can do all the standard remediation actions for the conversation.

Turn the Report Conversations feature on in the Viva Engage admin center

- Go to the Viva Engage admin center.

- On the Settings page, select Report Conversations.

- Turn the setting on.

- Under Report recipient, add an email address to route reports to. This email address is used to notify the admin in case there's any problem with the configuration.

- In the Pre-submission details or instructions for users box, enter any instructions you want to display for the user.

- In the Post-submission instructions to user box, enter any instructions (optional) that the user will see after reporting a conversation.

Policy for insider risk management integration

When users experience employment stressors, they may engage in risky activities. Workplace stress may lead to uncharacteristic or malicious behavior by some users that could surface as potentially inappropriate behavior on your organization's messaging systems. Communication compliance can provide risk signals detected in applicable messages to insider risk management risky user policies by using a dedicated Detect inappropriate text policy. This policy is automatically created (if selected as an option) during configuration of a Data leaks by risky users or Security policy violations by risky users policy in insider risk management.

When configured for an insider risk management policy, a dedicated policy named Risky user in messages - (date created) is created in communication compliance and automatically includes all organization users in the policy. This policy starts detecting risky behavior in messages by using the built-in Threat, Harassment, and Discrimination trainable classifiers and automatically sends these signals to insider risk management. If needed, this policy can be edited to update the scope of included users and the policy conditions and classifiers.

Users that send five or more messages classified as potentially risky within 24 hours are automatically brought in-scope for insider risk management policies that include this option. Once in-scope, the insider risk management policy detects potentially risky activities configured in the policy and generates alerts as applicable. It may take up to 48 hours from the time risky messages are sent until the time a user is brought in-scope in an insider risk management policy. If an alert is generated for a potentially risky activity detected by the insider risk management policy, the triggering event for the alert is identified as being sourced from the communication compliance risky activity.
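The in-scope rule described above (five or more potentially risky messages within 24 hours) can be pictured as a sliding-window count. The following Python sketch is only an illustration of that rule, not how the service implements it. The function name `first_in_scope_time` and the choice to treat the 24-hour window as inclusive are assumptions made for the example.

```python
from collections import deque
from datetime import datetime, timedelta
from typing import Iterable, Optional

THRESHOLD = 5                 # "five or more messages" from the text above
WINDOW = timedelta(hours=24)  # "within 24 hours"


def first_in_scope_time(risky_message_times: Iterable[datetime]) -> Optional[datetime]:
    """Return the send time of the message that first satisfies the rule, or None.

    Hypothetical helper: the window is treated as inclusive on both ends.
    """
    window = deque()
    for sent in sorted(risky_message_times):
        window.append(sent)
        # Drop messages that are more than 24 hours older than the newest one.
        while sent - window[0] > WINDOW:
            window.popleft()
        if len(window) >= THRESHOLD:
            return sent
    return None


start = datetime(2024, 1, 1, 9, 0)
# Five risky messages inside one 24-hour span: the rule is met by the fifth.
burst = [start + timedelta(hours=h) for h in (0, 2, 5, 11, 23)]
# Five risky messages spread over 40 hours: never five in any 24-hour span.
spread = [start + timedelta(hours=h) for h in (0, 10, 20, 30, 40)]

print(first_in_scope_time(burst))
print(first_in_scope_time(spread))
```

The returned time marks when the rule is met. As noted above, it may take up to 48 hours from the time the messages are sent before the user is actually brought in-scope.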

All users assigned to the Insider Risk Management Investigators role group are automatically assigned as reviewers in the dedicated communication compliance policy. If insider risk management investigators need to review the associated risky user alert directly on the communication compliance alerts page (linked from the insider risk management alert details), they must be manually added to the Communication Compliance Investigators role group.

Before integrating communication compliance with insider risk management, you should also consider the following guidance when detecting messages containing potentially inappropriate text:

- For organizations without an existing Detect inappropriate text policy. The new Risky user in messages - (date created) policy is automatically created by the insider risk management policy wizard. In most cases, no further actions are needed.

- For organizations with an existing Detect inappropriate text policy. The new Risky user in messages - (date created) policy is automatically created by the insider risk management policy wizard. Although you'll have two communication compliance policies for potentially inappropriate text in messages, investigators won't see duplicate alerts for the same activity. Insider risk management investigators only see alerts for the dedicated integration policy and communication compliance investigators only see the alerts for the existing policy. If needed, you can edit the dedicated policy to change the in-scope users or individual policy conditions as applicable.

Policy health (preview)

The policy health status gives you insights into potential issues or optimizations for your communication compliance policies. At the top of the Policies page, you'll see a summary that lists the total number of policy warnings and recommendations, as well as the total number of healthy policies. If a specific policy has a warning or recommendation(s), a link to the warning or recommendation(s) is listed in the Policy health column. When you select the link, a details pane opens on the right side of the screen with the Policy Health tab selected, which makes it easy to quickly review the warning or recommendation and take action on it.

The Policy Health tab has two parts. The upper part of the tab shows general information about the policy: whether the policy is active, whether it's updated, and general tips. The lower part of the tab shows the specific warning or recommendation(s). If you're a member of the Communication Compliance or Communication Compliance Admins group, you can take action directly from the warning or recommendation. If you're a member of the Communication Compliance Analysts or Communication Compliance Investigators group, you can see the warning or recommendation so you can prompt your admin to take action.

- Warnings: If you don't take action on a warning, the policy will stop working. For the policy health preview, there's one warning, related to a policy's storage limit size.

- Recommendations: Recommendations provide suggestions for optimizing policies. If you ignore a policy recommendation, the policy will continue to work. If you're a member of the Communication Compliance or Communication Compliance Admins group, you can act on a recommendation as long as the policy is active and isn't updating. If you're a member of the Communication Compliance Analysts or Communication Compliance Investigators group, you can see the recommendation and prompt your admin to take action. For the preview, there are two recommendations:

  - Potentially reduce false positives by filtering out bulk emails: This recommendation prompts you to turn on the Filter email blasts setting. This helps to reduce false positives by excluding bulk emails, such as newsletters, and spam, phishing, and malware, from getting flagged by communication compliance policies if the policy conditions are matched.

  - Reduce user scoping blind spots: This recommendation prompts you to reduce blind spots by turning on the Show insights and recommendations for users who match this policy's conditions but weren't included in the policy checkbox (used in conjunction with the Selected users policy option).

- Healthy policies: If there are no warnings or recommendations for a specific policy, the policy is considered healthy.

Reduce user scoping blind spots

You can reduce user-scoping related blind spots by turning on the Show insights and recommendations for users who match this policy's conditions but weren't included in the policy checkbox. When this checkbox is turned on, you'll see insights and recommendations for users who are sending messages that match the policy condition but aren't included in the scope of the policy. In this case, communication compliance recommends that you include those users in the scope of the policy so that you can review the messages sent by those users and take actions. You can select the specific users that you want to add or you can extend the policy to all users.

Insights for these users are aggregated; you can't see the messages sent by them until you add them to the scope of the policy.

If an admin doesn't act on a recommendation, the recommendation will continue to recur. To turn off recommendations for users outside the scope of the policy, turn off the checkbox.

Pause a policy

After you've created a communication compliance policy, you can temporarily pause it if needed. Pausing a policy is useful for testing or troubleshooting policy matches, or for optimizing policy conditions. Unlike deleting a policy, pausing preserves existing policy alerts and messages for ongoing investigations and reviews. Pausing a policy prevents inspection and alert generation for all user message conditions defined in the policy for the time the policy is paused. To pause or restart a policy, users must be members of the Communication Compliance Admins role group.

To pause a policy, navigate to the Policy page, select a policy, and then select Pause policy from the actions toolbar. On the Pause policy pane, confirm you'd like to pause the policy by selecting Pause. In some cases, it may take up to 24 hours for a policy to be paused. Once the policy is paused, alerts for messages matching the policy aren't created. However, messages associated with alerts that were created prior to pausing the policy remain available for investigation, review, and remediation.

The policy status for paused policies may indicate several states:

- Active: The policy is active.

- Paused: The policy is fully paused.

- Pausing: The policy is in the process of being paused.

- Resuming: The policy is in the process of being resumed.

- Error in resuming: An error has been encountered when resuming the policy. For the error stack trace, hover your mouse over the Error in resuming status in the Status column on the Policy page.

- Error in pausing: An error has been encountered when pausing the policy. For the error stack trace, hover your mouse over the Error in pausing status in the Status column on the Policy page.

To resume a policy, navigate to the Policy page, select a policy, and then select Resume policy from the actions toolbar. On the Resume policy pane, confirm you'd like to resume the policy by selecting Resume. In some cases, it may take up to 24 hours for a policy to be resumed. Once the policy is resumed, alerts for messages matching the policy will be created and will be available for investigation, review, and remediation.

Copy a policy

For organizations with existing communication compliance policies, creating a new policy from an existing policy can be helpful in some scenarios. Copying a policy creates an exact duplicate of an existing policy, including all in-scope users, all assigned reviewers, and all policy conditions. Some scenarios may include:

- Policy storage limit reached: Active communication compliance policies have message storage limits. When the storage limit for a policy is reached, the policy is automatically deactivated. Organizations that need to continue to detect, capture, and act on inappropriate messages covered by the deactivated policy can quickly create a new policy with an identical configuration.

- Detect and review inappropriate messages for different groups of users: Some organizations may prefer to create multiple policies with the same configuration but include different in-scope users and different reviewers for each policy.

- Similar policies with small changes: For policies with complex configurations or conditions, it may save time to create a new policy from a similar policy.

To copy a policy, users must be members of the Communication Compliance or Communication Compliance Admins role group. After a new policy is created from an existing policy, it may take up to 24 hours to view messages that match the new policy configuration.

To copy a policy and create a new policy, complete the following steps:

- Select the policy you want to copy.

- Select the Copy policy button on the command bar, or select Copy policy from the action menu for the policy.

- In the Copy policy pane, you can accept the default name for the policy in the Policy name field or rename the policy. The policy name for the new policy can't be the same as an existing active or deactivated policy. Complete the Description field as needed.

- If you don't need further customization of the policy, select Copy policy to complete the process. If you need to update the configuration of the new policy, select Customize policy. This starts the policy wizard to help you update and customize the new policy.

Mark a policy as a favorite

After you've created a communication compliance policy, you can mark the policy as a favorite. Once a policy has been identified as a favorite, you can filter favorite policies to appear at the top of the policies list. By marking a policy as a favorite, you can also easily sort policies by favorites.

To mark a policy as a favorite, you have the following options:

- Mark as favorite: Enables you to mark selected policies as favorites, so you can easily find the policies that you're most interested in rather than having to search for them.

- Sort favorites: Sorts the policies by favorites, so your favorite policies appear at the top of the list.

- Customize columns: Choose to list the favorites that you want to see. You can also choose to sort favorite policies in ascending or descending order.

To sort policies by groups:

- All policies: This is the default view, displaying all the policies in the list.

- Only favorites: Groups policies by favorites at the top of the list.

Policy activity detection

Communications are scanned every hour from the time policies are created. For example, if you create an inappropriate content policy at 11:00 AM, the policy gathers communication compliance signals every hour starting from when the policy was created. Editing a policy doesn't change this time. To view the date and time (in Coordinated Universal Time, UTC) of the last scan for a policy, go to the Last policy scan column on the Policy page. After you create a new policy, it may take up to an hour to view the first policy scan date and time.

The following table outlines the time to detection for supported content types:

| Content type | Time to detection |
|---|---|
| Email body content | 1 hour |
| Teams body content | 1 hour |
| Viva Engage body content | 1 hour |
| Viva Engage attachment | Up to 24 hours |
| Microsoft Copilot for Microsoft 365 body content (prompts and responses) | 1 hour |
| Email OCR | 24 hours |
| Teams OCR | 24 hours |
| Email attachment | 24 hours |
| Teams attachment | 24 hours |
| Teams modern attachment | 24 hours |
| Teams metadata | 1 hour |
| Email metadata | 1 hour |
| Teams shared channels | 24 hours |
| Teams transcripts | 1 hour |
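When you plan review workflows, it can help to turn the table above into a simple lookup. The following Python sketch does that. It treats every value in the table as an upper bound, which is an assumption, and the names `TIME_TO_DETECTION_HOURS` and `latest_expected_detection` are hypothetical, not part of any Microsoft API.

```python
from datetime import datetime, timedelta

# Hours copied from the table above; treated here as upper bounds (assumption).
TIME_TO_DETECTION_HOURS = {
    "Email body content": 1,
    "Teams body content": 1,
    "Viva Engage body content": 1,
    "Viva Engage attachment": 24,
    "Microsoft Copilot for Microsoft 365 body content": 1,
    "Email OCR": 24,
    "Teams OCR": 24,
    "Email attachment": 24,
    "Teams attachment": 24,
    "Teams modern attachment": 24,
    "Teams metadata": 1,
    "Email metadata": 1,
    "Teams shared channels": 24,
    "Teams transcripts": 1,
}


def latest_expected_detection(content_type: str, sent_at: datetime) -> datetime:
    """Return the latest time by which the content should be detected.

    Hypothetical helper; raises KeyError for content types not in the table.
    """
    return sent_at + timedelta(hours=TIME_TO_DETECTION_HOURS[content_type])


sent = datetime(2024, 1, 1, 8, 0)
print(latest_expected_detection("Teams body content", sent))
print(latest_expected_detection("Email OCR", sent))
```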

For existing policies created before July 31, 2022, it may take up to 24 hours to detect messages and review alerts that match these policies. To reduce the latency for these policies, copy the existing policy and create a new policy from the copy. If you don't need to retain any data from the older policy, it can be paused or deleted.

To identify an older policy, review the Last policy scan column on the Policy page. Older policies display a full date for the scan, while policies created after July 31, 2022 display 1 hour ago for the scan. Another option to reduce latency is to wait until February 28, 2023 for your existing policies to be automatically migrated to the new detection criteria.

Storage limit notification

Each communication compliance policy has a storage limit size of 100 GB or 1 million messages, whichever is reached first. As the policy approaches these limits, notification emails are automatically sent to users assigned to the Communication Compliance or Communication Compliance Admins role groups. Notification messages are sent when the storage size or message count reaches 80, 90, and 95 percent of the limit. When the policy limit is reached, the policy is automatically deactivated, and the policy stops processing messages for alerts.
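The limit and notification thresholds above can be expressed as a small calculation, which may be useful if you track policy growth yourself. The following Python sketch is a rough illustration only. It assumes binary gigabytes for the 100 GB limit, and the names `policy_usage_percent` and `storage_status` are hypothetical. The service sends the notifications itself; nothing here calls a Microsoft API.

```python
from typing import Optional, Tuple

LIMIT_BYTES = 100 * 1024**3   # 100 GB; binary gigabytes are an assumption
LIMIT_MESSAGES = 1_000_000    # 1 million messages
NOTIFY_AT = (80, 90, 95)      # notification thresholds, in percent of the limit


def policy_usage_percent(storage_bytes: int, message_count: int) -> float:
    """Usage against whichever limit is closer to being reached."""
    return 100 * max(storage_bytes / LIMIT_BYTES, message_count / LIMIT_MESSAGES)


def storage_status(storage_bytes: int, message_count: int) -> Tuple[Optional[int], bool]:
    """Return (highest notification threshold crossed or None, limit reached?)."""
    usage = policy_usage_percent(storage_bytes, message_count)
    crossed = [t for t in NOTIFY_AT if usage >= t]
    return (crossed[-1] if crossed else None, usage >= 100)


# 10 GB stored but 850,000 messages: the message count drives the result.
print(storage_status(10 * 1024**3, 850_000))
# Message limit reached: the policy would be deactivated.
print(storage_status(0, 1_000_000))
```

Taking the maximum of the two ratios mirrors the "whichever is reached first" wording: the policy is as full as its fuller dimension.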

Important

If a policy is deactivated due to reaching the storage and message limits, be sure to evaluate how to manage the deactivated policy. If you delete the policy, all messages, associated attachments, and message alerts will be permanently deleted. If you need to maintain these items for future use, don't delete the deactivated policy.

To manage policies approaching the storage and message limits, consider making a copy of the policy to maintain coverage continuity or take the following actions to help minimize current policy storage size and message counts:

- Consider reducing the number of users assigned to the policy. Removing users from the policy or creating different policies for different groups of users can help slow the growth of policy size and total messages.

- Examine the policy for excessive false positive alerts. Consider adding exceptions or changes to the policy conditions to ignore common false positive alerts.

- If a policy has reached the storage or message limits and has been deactivated, make a copy of the policy to continue to detect and take action for the same conditions and users.

Policy settings

Users

You can select All users, define specific users in a communication compliance policy, or select an adaptive scope.

- All users: Selecting All users applies the policy to all users and all groups that any user is included in as a member.

- Select users: Defining specific users applies the policy to the defined users and any groups the defined users are included in as a member. If you choose the Selected users option, the Show insights and recommendations for users who match this policy's conditions but weren't included in the policy checkbox automatically appears. Leave this checkbox selected if you want to receive recommendations when users outside of the selected users match the policy conditions. This setting is not available if you choose the All users option or the Select adaptive scopes option.

- Select adaptive scope: An adaptive scope uses a query that you specify to define the membership of users or groups. If you decide to create an adaptive policy, you must create one or more adaptive scopes before you create your policy, and then select them when you choose this option. The scopes that you can select depend on the scope types that you add. For example, if you only added a scope type of User, you will be able to select Groups. Learn more about the advantages of using an adaptive scope.

Direction

By default, the Direction is condition is displayed and can't be removed. Communication direction settings in a policy are chosen individually or together:

- Inbound: Detects communications sent to scoped users from external and internal senders, including other scoped users in the policy.

- Outbound: Detects communications sent from scoped users to external and internal recipients, including other scoped users in the policy.

- Internal: Detects communications between the scoped users or groups in the policy.
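To make the three definitions concrete, the following Python sketch classifies a single message by direction. It is a literal reading of the definitions above, not the service's matching logic. The function name `matching_directions` is hypothetical, the addresses are made-up examples, and group membership is ignored for simplicity.

```python
from typing import Iterable, Set


def matching_directions(sender: str, recipients: Iterable[str], scoped_users: Set[str]) -> Set[str]:
    """Return the direction settings a message would match (illustrative only)."""
    recipient_set = set(recipients)
    sender_scoped = sender in scoped_users
    any_recipient_scoped = bool(recipient_set & scoped_users)

    directions = set()
    if any_recipient_scoped:
        # Sent to a scoped user, from an external or internal sender.
        directions.add("Inbound")
    if sender_scoped:
        # Sent from a scoped user, to external or internal recipients.
        directions.add("Outbound")
    if sender_scoped and any_recipient_scoped:
        # Exchanged between scoped users.
        directions.add("Internal")
    return directions


scoped = {"alice@contoso.com", "bob@contoso.com"}
print(matching_directions("eve@fabrikam.com", ["alice@contoso.com"], scoped))
print(matching_directions("alice@contoso.com", ["eve@fabrikam.com"], scoped))
print(sorted(matching_directions("alice@contoso.com", ["bob@contoso.com"], scoped)))
```

Under this reading, a message between two scoped users matches all three settings, because the Inbound and Outbound definitions both include other scoped users in the policy.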

Sensitive information types

You have the option of including sensitive information types as part of your communication compliance policy. Sensitive information types are either predefined or custom data types that can help identify and protect credit card numbers, bank account numbers, passport numbers, and more. As part of Microsoft Purview Data Loss Prevention (see Learn about Microsoft Purview Data Loss Prevention), the sensitive information configuration can use patterns, character proximity, confidence levels, and even custom data types to help identify and flag content that may be sensitive. The default sensitive information types are:

- Financial

- Medical and health

- Privacy

- Custom information type

Important

Sensitive info types have two different ways of defining the max unique instance count parameters. To learn more, see Create custom sensitive information types.

The communication compliance solution supports default sensitive information types and bundled named-entity sensitive information types, which are collections of sensitive information types. To learn more about sensitive information details and the patterns included in the default types, see Sensitive information type entity definitions. For information on supported bundled named-entity sensitive information types, see the following articles:

Custom keyword dictionaries

Configure custom keyword dictionaries (or lexicons) to provide simple management of keywords specific to your organization or industry. Keyword dictionaries support up to 100 KB of terms (post-compression) in the dictionary and support any language. The tenant limit is also 100 KB after compression. If needed, you can apply multiple custom keyword dictionaries to a single policy or have a single keyword dictionary per policy. These dictionaries are assigned in a communication compliance policy and can be sourced from a file (such as a .CSV or .TXT list), or from a list you can Import. Use custom dictionaries when you need to support terms or languages specific to your organization and policies.

Trainable classifiers

Communication compliance policies using trainable classifiers inspect and evaluate messages that meet a minimum word count requirement, depending upon the language of the content. For a complete list of supported languages, word count requirements, and file types for these classifiers, see Trainable classifier definitions.

To identify and take action on messages containing inappropriate language content that doesn't meet the word count requirement, you can create a sensitive information type or custom keyword dictionary for communication compliance policies detecting this type of content.

| Trainable classifier | Description |
|---|---|
| Corporate sabotage | Detects messages that may mention acts to damage or destroy corporate assets or property. This classifier can help customers manage regulatory compliance obligations such as NERC Critical Infrastructure Protection standards or state-by-state regulations like Chapter 9.05 RCW in Washington state. |
| Customer complaints | Detects messages that may suggest customer complaints made on your organization's products or services, as required by law for regulated industries. This classifier can help customers manage regulatory compliance obligations such as FINRA Rule 4530, FINRA 4513, FINRA 2111, Consumer Financial Protection Bureau, Code of Federal Regulations Title 21: Food and Drugs, and the Federal Trade Commission Act. |
| Discrimination | Detects potentially explicit discriminatory language and is particularly sensitive to discriminatory language against the African American/Black communities when compared to other communities. |
| Gifts & entertainment | Detects messages that may suggest exchanging gifts or entertainment in return for service, which violates regulations related to bribery. This classifier can help customers manage regulatory compliance obligations such as the Foreign Corrupt Practices Act (FCPA), UK Bribery Act, and FINRA Rule 2320. |
| Harassment | Detects potentially offensive content in multiple languages that targets people regarding race, color, religion, or national origin. |
| Money laundering | Detects signs that may suggest money laundering or engagement in acts to conceal or disguise the origin or destination of proceeds. This classifier can help customers manage regulatory compliance obligations such as the Bank Secrecy Act, the USA Patriot Act, FINRA Rule 3310, and the Anti-Money Laundering Act of 2020. |
| Profanity | Detects potentially profane content in multiple languages that would likely offend most people. |
| Regulatory collusion | Detects messages that may violate regulatory anti-collusion requirements such as an attempted concealment of sensitive information. This classifier can help customers manage regulatory compliance obligations such as the Sherman Antitrust Act, Securities Act of 1933, Securities Exchange Act of 1934, Investment Advisers Act of 1940, Federal Trade Commission Act, and the Robinson-Patman Act. |
| Stock manipulation | Detects signs of possible stock manipulation, such as recommendations to buy, sell, or hold stocks that may suggest an attempt to manipulate the stock price. This classifier can help customers manage regulatory compliance obligations such as the Securities Exchange Act of 1934, FINRA Rule 2372, and FINRA Rule 5270. |
| Threat | Detects potentially threatening content in multiple languages aimed at committing violence or physical harm to a person or property. |
| Unauthorized disclosure | Detects sharing of information containing content that is explicitly designated as confidential or internal to unauthorized individuals. This classifier can help customers manage regulatory compliance obligations such as FINRA Rule 2010 and SEC Rule 10b-5. |

Important

Trainable classifiers may detect a large volume of bulk sender/newsletter content due to a known issue. You can mitigate the detection of large volumes of bulk sender/newsletter content by selecting the Filter email blasts check box when you create the policy. You can also edit an existing policy to turn on this feature.

Content safety classifiers for Teams based on large language models

Communication compliance also includes a set of Azure AI classifiers (preview) for Teams that run on large language models (LLMs) and are highly accurate. When any of these classifiers detects a message containing three or more words and the severity value is 4 or higher, a Severity column is added to the Alerts dashboard to make it easier to prioritize alerts. Investigators can also sort and filter on the Severity column.

Note

The Severity column is only shown if the policy match is from one of the classifiers listed in the table below. For all other classifiers, the column is empty.

The following table describes the classifiers (preview) available at this time.

| Classifier | Description |
|---|---|
| Hate (Teams only) | Hate refers to any content that attacks or uses pejorative or discriminatory language with reference to a person or identity group on the basis of certain differentiating attributes of these groups, including but not limited to race, ethnicity, nationality, gender identity and expression, sexual orientation, religion, immigration status, ability status, personal appearance, and body size. |
| Sexual (Teams only) | Sexual describes language related to anatomical organs and genitals, romantic relationships, acts portrayed in erotic or affectionate terms, pregnancy, physical sexual acts, including those portrayed as an assault or a forced sexual violent act against one’s will, prostitution, pornography, and abuse. |
| Violence (Teams only) | Violence describes language related to physical actions intended to hurt, injure, damage, or kill someone or something; describes weapons, guns, and related entities, such as manufacturers, associations, legislation, and so on. |
| Self-harm (Teams only) | Self-harm describes language related to physical actions intended to purposely hurt, injure, or damage one’s body, or kill oneself. |

Learn more about Azure AI Content Safety

Limitations

- Only Teams locations are supported.

- Only messages containing three or more words are evaluated. Attachments and OCR images are not evaluated.

- Teams meeting transcripts are not evaluated.

- The maximum supported characters per message is 10,000.

- Learn about supported languages
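The limits above can be summarized as a simple pre-check. The following Python sketch is a rough model under stated assumptions: the helper names are hypothetical, words are counted by splitting on whitespace, and messages over the character limit are treated as not evaluated. The service's actual tokenization and handling of oversized messages aren't described here.

```python
MIN_WORDS = 3            # only messages containing three or more words are evaluated
MAX_CHARS = 10_000       # maximum supported characters per message
SEVERITY_COLUMN_MIN = 4  # Severity column appears for values of 4 or higher


def is_evaluated(message: str, location: str) -> bool:
    """Return True if a message would be a candidate for these classifiers (assumed model)."""
    if location != "teams":
        return False  # only Teams locations are supported
    if len(message) > MAX_CHARS:
        return False  # assumption: oversized messages are skipped
    return len(message.split()) >= MIN_WORDS


def shows_severity(severity: int) -> bool:
    """Return True if the severity value is high enough to surface the Severity column."""
    return severity >= SEVERITY_COLUMN_MIN
```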

Optical character recognition (OCR)

Note

Microsoft Purview includes OCR (preview) settings for Microsoft Purview Insider Risk Management, Microsoft Purview Data Loss Prevention, Microsoft Purview Data Lifecycle Management, and autolabeling. You can use the OCR (preview) settings to provide image-scanning capabilities for those solutions and technologies. Communication compliance has its own built-in OCR scanning functionality as described in this section and doesn’t support the OCR (preview) settings at this time.

Configure built-in or custom communication compliance policies to scan and identify printed or handwritten text from images that may be inappropriate in your organization. Integrated Azure Cognitive Services and optical scanning support for identifying text in images help analysts and investigators detect and act on instances where inappropriate conduct may be missed in communications that are primarily nontextual.

You can enable optical character recognition (OCR) in new policies created from templates, in custom policies, or in existing policies that you update to expand support for processing embedded images and attachments. When enabled in a policy created from a policy template, automatic scanning is supported for embedded or attached images in email and Microsoft Teams chat messages. For images embedded in document files, OCR scanning isn't supported. For custom policies, one or more conditional settings associated with keywords, trainable classifiers, or sensitive info types must be configured in the policy to enable the selection of OCR scanning.

Images from 100 KB to 4 MB in the following image formats are scanned and processed:

- .jpg/.jpeg (joint photographic experts group)

- .png (portable network graphics)

- .bmp (bitmap)

- .tiff (tag image file format)

- .pdf (portable document format)

Note

Scanning and extraction for embedded and attached .pdf images is currently supported only for email messages.
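The size and format rules above can be expressed as a quick eligibility check. This is a minimal sketch, assuming that 1 KB is 1,024 bytes and that both size bounds are inclusive; the function name `is_ocr_candidate` and the `source` values are hypothetical and don't correspond to a product API.

```python
from pathlib import Path

MIN_BYTES = 100 * 1024        # 100 KB (assumes 1 KB = 1,024 bytes)
MAX_BYTES = 4 * 1024 * 1024   # 4 MB
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp", ".tiff", ".pdf"}


def is_ocr_candidate(filename: str, size_bytes: int, source: str) -> bool:
    """Return True if an image meets the documented format and size rules."""
    extension = Path(filename).suffix.lower()
    if extension not in IMAGE_EXTENSIONS:
        return False
    if extension == ".pdf" and source != "email":
        return False  # .pdf images are supported only for email messages
    return MIN_BYTES <= size_bytes <= MAX_BYTES
```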

When reviewing pending policy matches for policies with OCR enabled, images identified and matched to policy conditions are displayed as child items for associated alerts. You can view the original image to evaluate the identified text in context with the original message. It may take up to 48 hours for detected images to be available with alerts.

Conditional settings

The conditions you choose for the policy apply to communications from both email and third-party sources in your organization (such as Instant Bloomberg).

Tip

To save time, you can test certain conditions before creating your policy.

The following table explains more about each condition.

| Condition | How to use this condition |
|---|---|
| Content matches any of these classifiers | Apply the policy when any trainable classifiers are included or excluded in a message. Some classifiers are predefined in your organization, and custom classifiers must be configured separately before they're available for this condition. Only one trainable classifier can be defined as a condition in a policy. For more information about configuring trainable classifiers, see Learn about trainable classifiers. |
| Content contains any of these sensitive info types | Apply the policy when any sensitive information types are included or excluded in a message. Some sensitive information types are predefined in your tenant, and custom sensitive information types can be configured separately or as part of the condition assignment process. Each sensitive information type you choose is applied separately, and only one of these sensitive information types must apply for the policy to apply to the message. For more information about custom sensitive information types, see Learn about sensitive information types. |
| Message is received from any of these domains / Message is not received from any of these domains | Apply the policy to include or exclude specific domains in received messages. Use the following syntax when entering conditional text: enter each domain and separate multiple domains with a comma; don't include spaces between items separated by a comma; remove all leading and trailing spaces. Each domain entered is applied separately; only one domain must apply for the policy to apply to the message. If you want to use Message is received from any of these domains to look for messages from specific domains, combine it with another condition, such as Message contains any of these words or Content matches any of these classifiers, or you might get unexpected results. If you want to scan all emails but exclude messages from a specific domain that don't need review (newsletters, announcements, and so on), configure a Message is not received from any of these domains condition that excludes the domain (example: 'contoso.com,wingtiptoys.com'). |
| Message is sent to any of these domains / Message is not sent to any of these domains | Apply the policy to include or exclude specific domains in sent messages. Use the following syntax when entering conditional text: enter each domain and separate multiple domains with a comma; don't include spaces between items separated by a comma; remove all leading and trailing spaces. Each domain is applied separately; only one domain must apply for the policy to apply to the message. If you want to exclude all emails sent to two specific domains, configure the Message is not sent to any of these domains condition with the two domains (example: 'contoso.com,wingtiptoys.com'). |
| Message is received from any of these external email addresses / Message is not received from any of these external email addresses | Apply the policy to include or exclude messages received or not received from specific external email addresses (example: someone@outlook.com). Use this condition to detect only messages that come from outside the organization (messages that cross the firewall). Use the following syntax when entering email addresses: enter each email address and separate multiple email addresses with a comma; don't include spaces between email addresses separated by a comma; remove all leading and trailing spaces; remove any single or double quotation marks. |
| Message is sent to any of these external email addresses / Message is not sent to any of these external email addresses | Apply the policy to include or exclude messages sent or not sent to specific external email addresses (example: someone@outlook.com). Use this condition to detect only messages that are sent outside the organization (messages that cross the firewall). Use the following syntax when entering email addresses: enter each email address and separate multiple email addresses with a comma; don't include spaces between email addresses separated by a comma; remove all leading and trailing spaces; remove any single or double quotation marks. |
| Message is classified with any of these labels / Message is not classified with any of these labels | Apply the policy when certain retention labels are included or excluded in a message. Retention labels must be configured separately, and configured labels are chosen as part of this condition. Each label you choose is applied separately (only one of these labels must apply for the policy to apply to the message). For more information about retention labels, see Learn about retention policies and retention labels. |
| Message contains any of these words / Message contains none of these words | Apply the policy when certain words or phrases are included or excluded in a message. Use the following syntax when entering conditional text: remove all leading and trailing spaces; add quotation marks before and after each keyword or key phrase; separate each keyword or key phrase with a comma; don't include spaces between items separated by a comma. Example: "banker","insider trading","confidential 123". Each word or phrase you enter is applied separately (only one word must apply for the policy to apply to the message). For more information about entering words or phrases, see the next section, Matching words and phrases to emails or attachments. |
| Attachment contains any of these words / Attachment contains none of these words | Apply the policy when certain words or phrases are included or excluded in a message attachment (such as a Word document). Use the following syntax when entering conditional text: remove all leading and trailing spaces; add quotation marks before and after each keyword or key phrase; separate each keyword or key phrase with a comma; don't include spaces between items separated by a comma. Example: "banker","insider trading","confidential 123". Each word or phrase you enter is applied separately (only one word must apply for the policy to apply to the attachment). For more information about entering words or phrases, see the next section, Matching words and phrases to emails or attachments. |
| Attachment is any of these file types / Attachment is none of these file types | To bring communications into scope that include or exclude specific types of attachments, enter the file extensions (such as .exe or .pdf). If you want to include or exclude multiple file extensions, enter file types separated by a comma (example: .exe,.pdf,.zip). Don't include spaces between items separated by a comma. Only one attachment extension must match for the policy to apply. |
| Message size is larger than / Message size is not larger than | To review messages based on a certain size, use these conditions to specify the maximum or minimum size a message can be before it's subject to review. For example, if you specify Message size is larger than > 1.0 MB, all messages that are 1.01 MB and larger are subject to review. You can choose bytes, kilobytes, megabytes, or gigabytes for this condition. |
| Attachment is larger than / Attachment is not larger than | To review messages based on the size of their attachments, specify the maximum or minimum size an attachment can be before the message and its attachments are subject to review. For example, if you specify Attachment is larger than > 2.0 MB, all messages with attachments 2.01 MB and over are subject to review. You can choose bytes, kilobytes, megabytes, or gigabytes for this condition. |

Important

If a condition includes a list, don't include spaces between list items. For example, enter "bias,harassment" instead of "bias, harassment".
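When condition lists are prepared outside the portal (for example, in a spreadsheet), a small helper can normalize them to the syntax described above before you paste them in. The following Python sketch is only a convenience illustration; the function names are hypothetical and nothing here calls a Microsoft Purview API.

```python
def format_keyword_list(terms):
    """Quote each keyword or phrase and join with commas, with no spaces between items."""
    cleaned = [term.strip().strip("\"'").strip() for term in terms]
    return ",".join(f'"{term}"' for term in cleaned if term)


def format_plain_list(items):
    """Join domains, email addresses, or file extensions with commas and no spaces."""
    cleaned = [item.strip().strip("\"'").strip() for item in items]
    return ",".join(item for item in cleaned if item)
```

For example, `format_plain_list([" contoso.com", "wingtiptoys.com "])` produces `contoso.com,wingtiptoys.com`, which matches the domain syntax used in the table.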

Matching words and phrases to emails or attachments

Each word you enter and separate with a comma is applied separately (only one word must apply for the policy condition to apply to the email or attachment). For example, let's use the condition Message contains any of these words with the keywords "banker" and "confidential" and the phrase "insider trading", separated by commas ("banker","confidential","insider trading"). The policy applies to any message that includes the word "banker", the word "confidential", or the phrase "insider trading". Only one of these words or phrases must occur for this policy condition to apply. Words in the message or attachment must exactly match what you enter.

Important

When importing a custom dictionary file, each word or phrase must be separated with a carriage return and on a separate line. For example:

banker

confidential

insider trading

To scan both email messages and attachments for the same keywords, create a custom keyword dictionary for the terms you wish to scan in messages. This policy configuration identifies defined keywords that appear in either the email message OR in the email attachment. Using the standard conditional policy settings (Message contains any of these words and Attachment contains any of these words) to identify terms in messages and in attachments requires the terms to be present in BOTH the message and the attachment.
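The difference between the two configurations comes down to OR versus AND. The following Python sketch models that difference only; the helper names are hypothetical, and whole-word, case-insensitive matching is an assumption made for the example rather than a statement about how the service matches text.

```python
import re


def contains_any(text, terms):
    """Return True if any term appears in the text as a whole word or phrase."""
    return any(
        re.search(rf"\b{re.escape(term)}\b", text, re.IGNORECASE) for term in terms
    )


def dictionary_match(message, attachment, terms):
    # Custom keyword dictionary: a term in the message OR the attachment is enough.
    return contains_any(message, terms) or contains_any(attachment, terms)


def separate_conditions_match(message, attachment, terms):
    # "Message contains..." plus "Attachment contains...": BOTH conditions must be met.
    return contains_any(message, terms) and contains_any(attachment, terms)
```

With the terms `["banker", "insider trading"]`, a message that mentions "banker" with an unrelated attachment matches under the dictionary model but not under the two separate conditions.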

Enter multiple conditions

If you enter multiple conditions, Microsoft 365 uses all the conditions together to determine when to apply the communication compliance policy to communication items. When you set up multiple conditions, all conditions must be met for the policy to apply, unless you enter an exception. For example, you need a policy that applies if a message contains the word "trade" and is larger than 2 MB. However, if the message also contains the words "Approved by Contoso financial team", the policy shouldn't apply. In this example, the three conditions would be defined as follows:

- Message contains any of these words, with the keyword "trade"

- Message size is larger than, with the value 2 MB

- Message contains none of these words, with the keywords "Approved by Contoso financial team"
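The three conditions above combine as a logical AND, with the "contains none of these words" condition acting as the exception. A minimal Python sketch of that logic follows; the function names, the whole-word and case-insensitive matching, and the interpretation of 2 MB as 2 × 1,024 × 1,024 bytes are assumptions for illustration only.

```python
import re

TWO_MB = 2 * 1024 * 1024  # assumes 1 MB = 1,024 * 1,024 bytes


def has_phrase(text, phrase):
    """Return True if the word or phrase appears in the text as a whole-word match."""
    return re.search(rf"\b{re.escape(phrase)}\b", text, re.IGNORECASE) is not None


def policy_applies(body, size_bytes):
    """All three conditions must hold for the policy to apply to the message."""
    return (
        has_phrase(body, "trade")                                       # contains any of these words
        and size_bytes > TWO_MB                                         # size is larger than 2 MB
        and not has_phrase(body, "Approved by Contoso financial team")  # contains none of these words
    )
```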

Review percentage

If you want to reduce the amount of content to review, you can specify a percentage of all the communications governed by a communication compliance policy. A real-time, random sample of content is selected from the total percentage of content that matches chosen policy conditions. If you want reviewers to review all items, you can configure 100% in a communication compliance policy.
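One way to picture percentage-based review is as an independent random draw for each matching item. The sketch below is an assumed model, not the service's documented sampling method; `select_for_review` is a hypothetical name.

```python
import random


def select_for_review(review_percentage, rng=random):
    """Decide whether one matching item is sampled for review (assumed model)."""
    if not 0 <= review_percentage <= 100:
        raise ValueError("review_percentage must be between 0 and 100")
    # rng.random() is in [0.0, 1.0), so 100 always selects and 0 never selects.
    return rng.random() * 100 < review_percentage
```

With a setting of 100, every matching item is selected; with a setting of 10, roughly one in ten matching items is selected over a large volume of matches.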

Filter email blasts

Use the Filter email blasts setting to exclude messages sent from email blast services. Messages that match the conditions you specify won't generate alerts. This includes bulk email, such as newsletters, and spam, phishing, and malware. When this option is selected, you can view a report that lists the bulk email senders that were filtered out. Reports are retained for 60 days.

Note

The list of senders is filtered before the content is analyzed so there might be senders that don't match the content conditions (there might be extra senders in the report).

This setting is on by default for new policies. If the Filter email blasts setting is off for existing policies, a recommendation is generated to turn the setting on.

Alert policies

After you configure a policy, a corresponding alert policy is automatically created and alerts are generated for messages that match conditions defined in the policy. It may take up to 24 hours after creating a policy to start receiving alerts from activity indicators. By default, all policy match alert triggers are assigned a severity level of medium in the associated alert policy. Alerts are generated for a communication compliance policy once the aggregation trigger threshold level is met in the associated alert policy. A single email notification is sent once every 24 hours for any alerts, regardless of the number of individual messages that match policy conditions. For example, Contoso has an inappropriate content policy enabled, and on January 1 there were 100 policy matches that generated six alerts. A single email notification for the six alerts is sent at the end of January 1.

For communication compliance policies, the following alert policy values are configured by default:

| Alert policy trigger | Default value |
|---|---|
| Aggregation | Simple aggregation |
| Threshold | Default: 4 activities; Minimum: 3 activities; Maximum: 2,147,483,647 activities |
| Window | Default: 60 minutes; Minimum: 60 minutes; Maximum: 10,000 minutes |

Note

The alert policy threshold trigger setting for activities supports a minimum value of 3 for communication compliance policies.
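To build intuition for how a threshold and window interact, the following Python sketch counts alerts over a series of policy-match timestamps. It is a simplified model under loud assumptions: an alert fires when the number of matches inside the window reaches the threshold, and the count then resets. The actual aggregation behavior of alert policies isn't specified here, and `count_alerts` is a hypothetical helper. The range checks use the minimum and maximum values from the table above.

```python
from datetime import datetime, timedelta

MIN_THRESHOLD, MAX_THRESHOLD = 3, 2_147_483_647   # activities
MIN_WINDOW, MAX_WINDOW = 60, 10_000               # minutes


def count_alerts(match_times, threshold=4, window_minutes=60):
    """Count alerts for policy matches under an assumed threshold-and-reset model."""
    if not MIN_THRESHOLD <= threshold <= MAX_THRESHOLD:
        raise ValueError("threshold is outside the supported range")
    if not MIN_WINDOW <= window_minutes <= MAX_WINDOW:
        raise ValueError("window is outside the supported range")

    window = timedelta(minutes=window_minutes)
    alerts = 0
    recent = []
    for moment in sorted(match_times):
        # Keep only matches that still fall inside the window ending at this match.
        recent = [earlier for earlier in recent if moment - earlier < window]
        recent.append(moment)
        if len(recent) >= threshold:
            alerts += 1
            recent = []  # assumption: the count starts over after an alert
    return alerts
```

With the default values, four matches spaced 10 minutes apart produce one alert in this model, while four matches spaced 30 minutes apart produce none because no 60-minute window contains four of them.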

You can change the default settings for triggers on number of activities, period for the activities, and for specific users in alert policies on the Alert policies page in Microsoft Purview.

Change the severity level for an alert policy

To learn more about the Microsoft Purview portal, see Microsoft Purview portal. To learn more about the Compliance portal, see Microsoft Purview compliance portal.

- Sign in to the Microsoft Purview portal using credentials for an admin account in your Microsoft 365 organization.

- Go to the Communication Compliance solution.

- Select Policies in the left navigation.

- Select Office 365 alert on the Policies page to open the Alert policies page.

- Select the checkbox for the communication compliance policy you want to update, and then select Edit policy.

- On the Description tab, select the Severity dropdown to configure the policy alert level.

- Select Save to apply the new severity level to the policy.

- Select Close.
