Events

31 Mar, 23 - 02 Apr, 23

The biggest Fabric, Power BI, and SQL learning event. March 31 – April 2. Use code FABINSIDER to save $400.


Microsoft Fabric notebooks support seamless interaction with Lakehouse data using Pandas, the most popular Python library for data exploration and processing. Within a notebook, you can quickly read data from, and write data back to, your Lakehouse resources in various file formats. This guide provides code samples to help you get started in your own notebook.

Get a Microsoft Fabric subscription. Or, sign up for a free Microsoft Fabric trial.

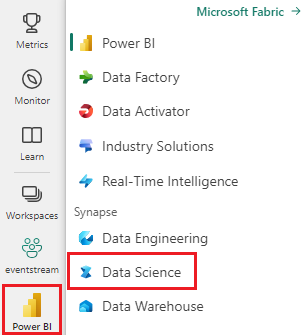

Sign in to Microsoft Fabric.

Use the experience switcher on the bottom left side of your home page to switch to Fabric.

Once you attach a Lakehouse to your Microsoft Fabric notebook, you can explore stored data without leaving the page and read it into your notebook in a few steps. Selecting any Lakehouse file surfaces options to "Load data" into a Spark or a Pandas DataFrame. You can also copy the file's full ABFS path or a friendly relative path.

Selecting one of the "Load data" prompts generates a code cell that loads the file into a DataFrame in your notebook.

For reference, this command shows how to convert a Spark DataFrame into a Pandas DataFrame:

# Replace "spark_df" with the name of your own Spark DataFrame

pandas_df = spark_df.toPandas()

Note

Modifying the version of a specific package could potentially break other packages that depend on it. For instance, downgrading azure-storage-blob might cause problems with Pandas and various other libraries that rely on Pandas, including mssparkutils, fsspec_wrapper, and notebookutils.

The documentation for each Fabric runtime lists its preinstalled packages and their versions.

These code samples show the Pandas operations for reading and writing various file formats.

Note

You must replace the file paths in these code samples. Pandas supports both relative paths, as shown here, and full ABFS paths. You can copy either type of path from the interface, as described in the previous step.
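As a minimal sketch of how the relative paths in these samples are put together, the helper below joins a Lakehouse mount point, the Files area, an optional subfolder, and a file name. The function name `lakehouse_file_path` and its parameters are hypothetical and are not part of Fabric or Pandas. The default mount point `/lakehouse/default` is the path for the notebook's default Lakehouse that appears in the Excel sample in this guide.

```python
import posixpath


def lakehouse_file_path(filename, subfolder="", mount="/lakehouse/default"):
    """Build a relative Lakehouse file path.

    Hypothetical helper for illustration only: `mount` is assumed to be the
    mount point of the Lakehouse attached to the notebook.
    """
    # posixpath always joins with forward slashes, regardless of the local OS
    parts = [mount, "Files"]
    if subfolder:
        # Strip stray slashes so the subfolder does not reset the joined path
        parts.append(subfolder.strip("/"))
    parts.append(filename)
    return posixpath.join(*parts)


csv_path = lakehouse_file_path("FILENAME.csv")
nested_path = lakehouse_file_path("FILENAME.xlsx", subfolder="reports/2024")
print(csv_path)
print(nested_path)
```

The resulting string can be passed directly to the Pandas read and write functions shown in the samples. A full ABFS path copied from the interface can be used in the same place.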

import pandas as pd

# Read a CSV file from your Lakehouse into a Pandas DataFrame

# Replace LAKEHOUSE_PATH and FILENAME with your own values

df = pd.read_csv("/LAKEHOUSE_PATH/Files/FILENAME.csv")

display(df)

import pandas as pd

# Write a Pandas DataFrame into a CSV file in your Lakehouse

# Replace LAKEHOUSE_PATH and FILENAME with your own values

df.to_csv("/LAKEHOUSE_PATH/Files/FILENAME.csv")
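The CSV read and write samples can be combined into a round trip. The sketch below assumes a temporary local directory as a stand-in for `/LAKEHOUSE_PATH/Files` so that it runs outside Fabric, and the sample data is invented for illustration. It also passes `index=False`, because `to_csv` writes the row index as an extra column by default, which then comes back as an unnamed column on read.

```python
import os
import tempfile

import pandas as pd

# Assumption: a temporary directory stands in for "/LAKEHOUSE_PATH/Files"
# so the sketch runs anywhere; in Fabric, use your own Lakehouse path.
files_dir = tempfile.mkdtemp()
csv_path = os.path.join(files_dir, "FILENAME.csv")

# Invented sample data for illustration
df = pd.DataFrame({"product": ["apple", "pear"], "units": [3, 5]})

# index=False omits the row index, so no extra unnamed column appears on read
df.to_csv(csv_path, index=False)

# Read the file back and inspect the columns
round_trip = pd.read_csv(csv_path)
print(list(round_trip.columns))
```

If you need to keep the index, an alternative is to leave it in the file and pass `index_col=0` to `pd.read_csv`.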

import pandas as pd

# Read a Parquet file from your Lakehouse into a Pandas DataFrame

# Replace LAKEHOUSE_PATH and FILENAME with your own values

df = pd.read_parquet("/LAKEHOUSE_PATH/Files/FILENAME.parquet")

display(df)

import pandas as pd

# Write a Pandas DataFrame into a Parquet file in your Lakehouse

# Replace LAKEHOUSE_PATH and FILENAME with your own values

df.to_parquet("/LAKEHOUSE_PATH/Files/FILENAME.parquet")

import pandas as pd

# Read an Excel file from your Lakehouse into a Pandas DataFrame

# Replace LAKEHOUSE_PATH and FILENAME with your own values. If the file is in a subfolder, add the folder path after Files/

# If you use the default lakehouse attached to the notebook, use this line instead: df = pd.read_excel("/lakehouse/default/Files/FILENAME.xlsx")

df = pd.read_excel("/LAKEHOUSE_PATH/Files/FILENAME.xlsx")

display(df)

import pandas as pd

# Write a Pandas DataFrame into an Excel file in your Lakehouse

# Replace LAKEHOUSE_PATH and FILENAME with your own values

df.to_excel("/LAKEHOUSE_PATH/Files/FILENAME.xlsx")

import pandas as pd

# Read a JSON file from your Lakehouse into a Pandas DataFrame

# Replace LAKEHOUSE_PATH and FILENAME with your own values

df = pd.read_json("/LAKEHOUSE_PATH/Files/FILENAME.json")

display(df)

import pandas as pd

# Write a Pandas DataFrame into a JSON file in your Lakehouse

# Replace LAKEHOUSE_PATH and FILENAME with your own values

df.to_json("/LAKEHOUSE_PATH/Files/FILENAME.json")
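The layout of a JSON file depends on the options passed to `to_json`, and the reader needs matching options. As one possible sketch, the example below writes and reads the JSON Lines layout (one object per line) using the `orient` and `lines` parameters. The temporary directory is an assumed stand-in for `/LAKEHOUSE_PATH/Files`, and the sample data is invented for illustration.

```python
import os
import tempfile

import pandas as pd

# Assumption: a temporary directory stands in for "/LAKEHOUSE_PATH/Files"
files_dir = tempfile.mkdtemp()
json_path = os.path.join(files_dir, "FILENAME.json")

# Invented sample data for illustration
df = pd.DataFrame({"product": ["apple", "pear"], "units": [3, 5]})

# orient="records" with lines=True writes one JSON object per line
df.to_json(json_path, orient="records", lines=True)

# Pass lines=True on read as well so Pandas parses one object per line
json_df = pd.read_json(json_path, orient="records", lines=True)
print(json_df)
```

The default layout written by `df.to_json(path)` without these options is also readable with a plain `pd.read_json(path)`, as in the samples above.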
