An accessibility case study - Reading List. Part 5: Live Regions

This post discusses accessibility considerations around the use of Live Regions in the Reading List app available in the Windows 8.1 Preview.

Typically, assistive technology tools such as screen readers will report information relating to the visuals shown on the screen near the point where the customer is working. That point may be the element which has keyboard focus or the element which the customer has specifically navigated to through some means provided by the screen reader. For example, a customer using Narrator can tab through UI and hear details about the element with keyboard focus, and then press CapsLock+LeftArrow to hear details about an element which was bypassed because it does not receive keyboard focus.

However, sometimes screen visuals change to present important information to the sighted customer, and that information might appear at some point on the screen which is far from where the customer’s working. And sometimes it can be critically important that the customer using a screen reader is notified of this change.

This can be achieved through use of the “Live Region”. When text changes in a Live Region, a UI Automation (UIA) LiveRegionChanged event is automatically raised, and a screen reader can react to that event however it thinks is appropriate.

Implementing the use of Live Regions in the Reading List app turned out to be far more interesting for the team than we originally expected. The fundamentals of Live Regions are pretty straightforward. If you have some element text, and you want to have a LiveRegionChanged event raised when the text changes on the element, all you need to do is set its “aria-live” value to either “assertive” or “polite”. You’d set “assertive” when you’d like the screen reader to immediately announce the change, and you’d set “polite” if you’d like the screen reader to finish what it happens to be in the middle of saying, before it announces the change.

So for app development in general, I’d definitely start with the simple approach of assigning the “aria-live” value to elements where you want your customer to be notified of text changes in those elements.
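As a minimal sketch of that simple approach, the helper below sets “aria-live” on an element. The function name and the element it’s applied to are hypothetical, and the only thing the helper relies on is the standard setAttribute() method.

```javascript
// Hypothetical helper: marks an existing text element as a Live Region.
// "politeness" is limited here to the two values discussed above.
function markAsLiveRegion(element, politeness) {
  if (politeness !== "assertive" && politeness !== "polite") {
    throw new Error("politeness must be 'assertive' or 'polite'");
  }
  element.setAttribute("aria-live", politeness);
  return element;
}

// Possible usage in an app (the element id is an assumption):
//   const status = document.getElementById("statusText");
//   markAsLiveRegion(status, "polite");
//   status.textContent = "Item deleted"; // the text change is what gets reported
```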

Alternatively, instead of assigning an “aria-live” value explicitly, you could assign a role of “alert” or “log”, as those roles automatically lead to the creation of assertive or polite Live Regions respectively. (These aren’t the only related roles, as mentioned at https://msdn.microsoft.com/en-us/library/windows/apps/hh452704.aspx).
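The role-based alternative might look something like the following sketch. The helper name is hypothetical, and the lookup table only covers the two roles mentioned above.

```javascript
// The aria-live value implied by each of the two roles discussed above.
const IMPLIED_ARIA_LIVE = new Map([
  ["alert", "assertive"],
  ["log", "polite"],
]);

// Hypothetical helper: assigns the role and returns the implied politeness,
// so calling code can see which kind of Live Region it has created.
function setLiveRole(element, role) {
  if (!IMPLIED_ARIA_LIVE.has(role)) {
    throw new Error("unsupported role: " + role);
  }
  element.setAttribute("role", role);
  return IMPLIED_ARIA_LIVE.get(role);
}
```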

For the Reading List app, the team found that for a couple of reasons this straightforward approach was not sufficient, and so we worked to address this.

Challenge #1 – LiveRegionChanged events not resulting in Narrator announcements

Sometimes text would change on an element that we’d marked as a Live Region, and the Narrator screen reader did not announce the updated text. When this happened, I would always point the AccEvent SDK tool at the app’s UI, to see if any LiveRegionChanged events were being raised at all. If no such event is being raised, then Narrator can’t know of the text change and couldn’t possibly announce anything to the customer.

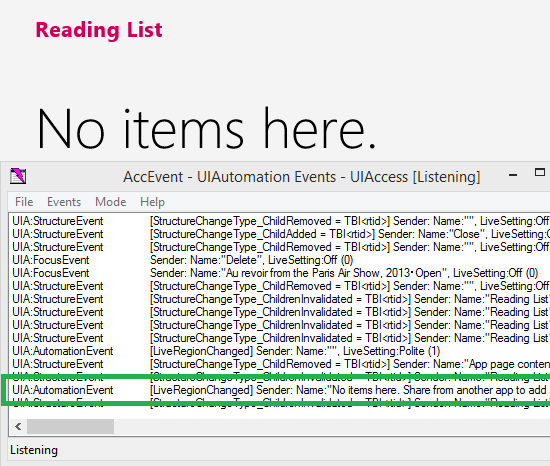

When I looked at the events being raised by the app, I did find that LiveRegionChanged events were being raised. But I also noticed that around the same time, many other events could be raised by the app’s UI due to lots of visuals changing. For example, if I delete the last item in my list and present something like a “No items here” message, the HTML DOM has a great deal of structure changes going on, and probably a focus change as well. A screen reader may receive some subset of all those events, some before the LiveRegionChanged event and some after. So it seemed that it was possible for the LiveRegionChanged events raised by the Reading List UI to get trumped by other events being raised around the same time. That is, Narrator might prepare to announce something in response to the LiveRegionChanged event, but then before it has a chance to speak, some other event is received which it immediately needs to react to.

In order to reduce the likelihood of this happening, we added a short delay before setting the text on the Live Region element. This means that the LiveRegionChanged event would get raised after the many other events were raised, and so would reach a screen reader after those events. If the LiveRegionChanged event arrives after all other events that the screen reader might have to react to, then the customer will end up hearing the details of the LiveRegionChanged event.
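A rough sketch of the delay idea is shown below. This isn’t the Reading List code: the function name is made up, the length of the delay is left to the caller because I’m not quoting a specific value here, and the optional “schedule” parameter only exists so that the timer can be swapped out when experimenting.

```javascript
// Hypothetical helper: waits before changing the Live Region's text, so the
// text change happens after the other UI changes made around the same time.
function setLiveRegionTextAfterDelay(
  region,
  text,
  delayMs,
  schedule = (callback, ms) => setTimeout(callback, ms)
) {
  return schedule(() => {
    region.textContent = text;
  }, delayMs);
}

// Possible usage (the element and the 500ms value are assumptions):
//   setLiveRegionTextAfterDelay(emptyListMessage, "No items here", 500);
```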

Figure 1: The AccEvent SDK tool showing a LiveRegionChanged event arriving after most other events generated around the same time.

Overall this approach improved the experience for the customer. The reliability of the announcements that we wanted to be heard improved considerably. It’s possible that other apps would not need to do the work to delay having the LiveRegionChanged events raised. Perhaps the particular layout and behavior of the Reading List UI affected how often LiveRegionChanged events did not result in announcements being made by Narrator. So app developers would want to go with the straightforward approach first, and only consider delaying the raising of the LiveRegionChanged events if that seems potentially beneficial to the customer.

Challenge #2 – Live Regions announcing text that’s not shown on the screen

Typically Live Regions relate to visuals that are changing on the screen. The Reading List team also considered whether Live Regions could be leveraged to have announcements made where the text being announced was not visible on the screen.

The scenario here related to search results. When the customer performs a search, keyboard focus is left in the SearchBox after the query is complete. Throughout the search itself, Narrator will by default only announce information relating to the SearchBox. Outside of the SearchBox, visually the text string “Results for <the search query>” appears, and the relevant subset of list items is shown. A customer with sight can get a feel for the results based on the number of items that are shown on the screen. But there’s no text shown there which would be useful to a customer using Narrator.

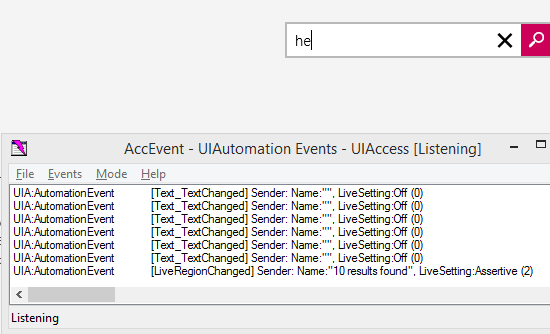

So the Reading List team felt it would be useful to have a count of search results announced, despite the fact that that information isn’t shown visually. In order to achieve that, we created a text element and made it a Live Region, but styled it such that it had no visuals shown on the screen. It was styled to be zero-size and transparent. When the app knows how many search results are found following a search, it sets the appropriate text on this invisible text element, and Narrator goes on to announce the count.
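To give a feel for what an invisible Live Region could look like, here’s a minimal sketch. The helper name is hypothetical, and the exact style properties are my assumption of one way to get “zero-size and transparent”; the app’s real styling isn’t reproduced here. The document is passed in as a parameter rather than taken from a global.

```javascript
// Hypothetical helper: creates a text element that is a Live Region but
// shows nothing on the screen. It's deliberately not hidden with
// display:none, because it still has to exist for the event to be raised.
function createInvisibleLiveRegion(doc, politeness) {
  const region = doc.createElement("div");
  region.setAttribute("aria-live", politeness);
  region.style.width = "0";
  region.style.height = "0";
  region.style.overflow = "hidden"; // assumption: stops text spilling out of the zero-size box
  region.style.opacity = "0";       // transparent
  return region;
}

// Possible usage in an app:
//   const countRegion = createInvisibleLiveRegion(document, "assertive");
//   document.body.appendChild(countRegion);
```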

And having done that, we also extended it to use the delayed announcement approach that I mentioned earlier. There was so much UI changing as the list of search result items was appearing that we didn’t want the LiveRegionChanged event with the count of results to be pushed aside by other events. So we let the search results list UI settle down for a moment before raising the LiveRegionChanged event with the count of search results.

And to illustrate the effect of doing this, this is what I just experienced on my own Windows 8.1 Preview device:

- I’m using Narrator at the Home view of my list of items. I’ve just been told about the item with focus, followed by “3 of 47”.

- I press the ‘b’ key.

- I hear “b. SearchBox, search, enter to submit query, escape to clear text, editing b. 24 results found”. The bulk of that text relates to focus being moved to the SearchBox.

- I press the ‘a’ key.

- I hear “a. 3 results found”.

- I press the ‘r’ key.

- I hear “r. 0 results found”.

- I press the backspace key.

- I hear “a. 3 results found”.

So the count of search results is announced as we wanted it to be, despite the fact that that text isn’t shown visually on the screen. We made the Live Region “assertive” in order to have a screen reader announce the result as soon as it received the LiveRegionChanged event.
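The search scenario could be sketched along the following lines. Everything named here is hypothetical. In particular, cancelling a pending announcement when a newer count arrives is my own assumption about how to avoid announcing out-of-date counts, and the wording only covers the “N results found” strings quoted above.

```javascript
// Builds the announcement text. Only the plural form appears in the
// walkthrough above, so no special case is made for a single result.
function formatResultCount(count) {
  return count + " results found";
}

// Hypothetical factory: returns a function that announces a result count on
// the given Live Region after a delay. A newer count replaces any count
// still waiting to be announced (an assumption, not described in the post).
function createSearchCountAnnouncer(
  region,
  delayMs,
  timers = {
    set: (callback, ms) => setTimeout(callback, ms),
    clear: (id) => clearTimeout(id),
  }
) {
  let pending = null;
  return function announceCount(count) {
    if (pending !== null) {
      timers.clear(pending);
    }
    pending = timers.set(() => {
      pending = null;
      region.textContent = formatResultCount(count);
    }, delayMs);
  };
}
```

With this shape, the app would call the returned function each time a query completes, passing in the number of items found.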

Figure 2: The AccEvent SDK tool showing the LiveRegionChanged event relating to the announcement of the count of search results.

Note that Figure 2 also shows that many UIA TextChanged events are being raised when a search is performed and the app’s UI presents the result. By delaying the raising of the LiveRegionChanged event, that event will arrive at the screen reader after those TextChanged events have arrived.

The issues

Overall the action taken by the Reading List team to delay the raising of the LiveRegionChanged events and to use an invisible Live Region significantly improved the experience for our customers using Narrator. However, there are some drawbacks with the action taken.

The use of the delay in raising of the LiveRegionChanged events is not 100% reliable. Sometimes the announcements are still not heard, and that’s likely due to the timing of other events being raised due to the restructuring or to the changing of visibility of various areas of the UI.

An invisible Live Region has to exist in the UIA tree of elements in order for the LiveRegionChanged event to be raised. Given that the element exists in the UIA tree of elements, the customer using Narrator can navigate to it explicitly. If a customer were to navigate to the element, its existence alone would be distracting, but worse, if it contained stale text, (such as an old search result,) then that would be very confusing. For Reading List, we cleared old text on the invisible Live Region wherever practical in order to reduce the likelihood of that confusion arising. As it happens, clearing the text was not as straightforward as we’d hoped, due to the use of the delay in raising the LiveRegionChanged event. (That is, we didn’t want to clear the text from the element before a screen reader had actually accessed that text in order to make an announcement.)
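One way the clearing of stale text might be approached is sketched below. This is an illustration rather than a description of what Reading List does: the helper name is hypothetical, and the second delay is simply a guess at how long a screen reader needs to pick up the text. It’s worth checking with a tool like AccEvent what events, if any, the clearing itself leads to.

```javascript
// Hypothetical helper: sets the announcement text after one delay, then
// clears it after a second delay so stale text isn't left on the element.
function announceThenClear(region, text, announceDelayMs, clearDelayMs, schedule) {
  schedule(() => {
    region.textContent = text;
    schedule(() => {
      // Only clear if a newer announcement hasn't replaced the text.
      if (region.textContent === text) {
        region.textContent = "";
      }
    }, clearDelayMs);
  }, announceDelayMs);
}

// Possible usage (both delay values are assumptions):
//   announceThenClear(countRegion, "3 results found", 500, 5000,
//                     (callback, ms) => setTimeout(callback, ms));
```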

By the way, whenever an “invisible” text element exists, it’s important to check how it appears visually on the screen when a high contrast theme is active. By default, some system colour might be applied to the text. If so, it’s necessary to explicitly set a style to make it invisible when a high contrast theme is active.

Despite these drawbacks, we were pleased to have been able to add support in the common case for announcements of the count of search results.

The end result

Given that the approach of delaying the raising of the LiveRegionChanged event on the invisible Live Region had been so helpful to us for announcing the count of search results, we decided to use the same approach for all announcements for Live Regions in the app. We ended up with one invisible “assertive” Live Region, and one invisible “polite” Live Region. Which of these two Live Regions we used in a given situation depended on the scenario. No other elements in the app were marked as Live Regions.
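The overall shape of that design, with two shared Live Regions and a single delayed way of using them, could be sketched like this. The names are hypothetical and the delay value is left to the caller.

```javascript
// Hypothetical factory: all announcements in the app go through one
// function, which picks the shared "assertive" or "polite" Live Region
// and sets the text on it after a delay.
function createAnnouncer(
  assertiveRegion,
  politeRegion,
  delayMs,
  schedule = (callback, ms) => setTimeout(callback, ms)
) {
  return function announce(text, politeness) {
    if (politeness !== "assertive" && politeness !== "polite") {
      throw new Error("politeness must be 'assertive' or 'polite'");
    }
    const region = politeness === "assertive" ? assertiveRegion : politeRegion;
    schedule(() => {
      region.textContent = text;
    }, delayMs);
  };
}

// Possible usage, with strings taken from the scenarios in this post:
//   announce("3 results found", "assertive");
//   announce("Showing Add Back button on selected item", "polite");
```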

In addition to announcing the count of search results, we used the Live Regions for two other situations.

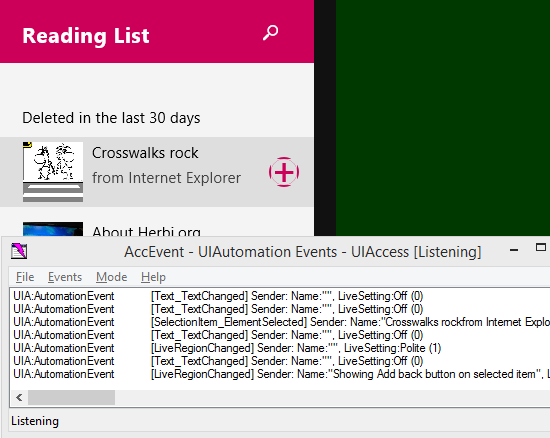

Whenever an item in the vertically laid-out list is selected, we show a button on it. By default, Narrator will announce the details of the item that’s been selected, but not the appearance of the button on the item. When our customers are getting familiar with the Reading List app, there’s no reason for them to expect a transient button to appear on a selected item, so we wanted Narrator to let them know. In order to do this, when the item’s selected we use an invisible Live Region to announce, (in the case of the Recently Deleted view,) “Showing Add Back button on selected item”. We request that this text will be announced after the completion of other speech relating to the selected item by using the “polite” Live Region for this. This means that as the customer gets familiar with the app, they’ll learn of the existence of the transient buttons on the selected items. Once they’re familiar with the app, they may well not wait to hear the full Live Region speech. Instead they’ll select the item, quickly move to the button and invoke it.

Figure 3: The AccEvent SDK tool showing the LiveRegionChanged event relating to the announcement of “Showing Add Back button on selected item”.

The other situation where we leverage the Live Regions relates to showing UI when the list contains no items at all. If the list is empty, we need to make sure the customer using Narrator is made aware of this. (In the Windows 8.1 Preview, when the list is empty, keyboard focus doesn’t move to an element which when announced by Narrator would make the customer aware of the empty list.) So whenever we present the UI relating to an empty list, (whether this is on startup and no items exist, or the customer has just deleted the last item in their list), we leverage our Live Regions as we do elsewhere.

Summary

The first consideration around Live Regions is whether they’re appropriate to use. If the customer is frequently interrupted by unimportant changes happening at some distance from the point where they’re working, this soon becomes an irritating distraction. Sometimes however, reporting that distant change might make the difference between an app being usable and not being usable.

The Reading List team felt it was appropriate to incorporate Live Regions into the app for specific scenarios. We first tried the simplest approach of setting the “aria-live” value on existing text elements. For some apps, doing this alone would result in the necessary experience for the customer. In our case, we found that we needed to take additional action to delay the raising of the LiveRegionChanged events, and also to allow some announcements that had no visual equivalent shown on the screen. Both these additional steps had some drawbacks, but we felt that the overall experience was much improved by the addition of the Live Regions into the app in this way.

An accessibility case study - Reading List. Part 1: Introduction

An accessibility case study - Reading List. Part 2: Colours and Contrast

An accessibility case study - Reading List. Part 3: Keyboard accessibility

An accessibility case study - Reading List. Part 4: Programmatic accessibility

An accessibility case study - Reading List. Part 5: Live Regions

An accessibility case study - Reading List. Part 6: Using Narrator with touch input only