Fail to pull images from Azure Container Registry to Azure Kubernetes Service cluster

When you're using Microsoft Azure Container Registry together with Azure Kubernetes Service (AKS), an authentication mechanism must be established. You can set up the AKS to Container Registry integration by using a few simple Azure CLI or Azure PowerShell commands. This integration assigns the AcrPull role for the kubelet identity that's associated with the AKS cluster to pull images from a container registry.

In some cases, trying to pull images from a container registry to an AKS cluster fails. This article provides guidance for troubleshooting the most common errors that you encounter when you pull images from a container registry to an AKS cluster.

Before you begin

This article assumes that you have an existing AKS cluster and an existing container registry. See the following quick starts:

If you need an AKS cluster, deploy one by using the Azure CLI or the Azure portal.

If you need an Azure Container Registry (ACR), create one by using the Azure CLI or the Azure portal.

You also need Azure CLI version 2.0.59 or a later version to be installed and configured. Run az version to determine the version. If you have to install or upgrade, see Install Azure CLI.

Symptoms and initial troubleshooting

The Kubernetes pod's STATUS is ImagePullBackOff or ErrImagePull. To get detailed error information, run the following command and check Events from the output.

kubectl describe pod <podname> -n <namespace>

We recommend that you start troubleshooting by checking the container registry's health and checking whether the container registry is accessible from the AKS cluster.

To check the container registry's health, run the following command:

az acr check-health --name <myregistry> --ignore-errors --yes

If a problem is detected, it provides an error code and description. For more information about the errors and possible solutions, see Health check error reference.

Note

If you get Helm-related or Notary-related errors, it doesn't mean that an issue is affecting Container Registry or AKS. It indicates only that Helm or Notary isn't installed, or that Azure CLI isn't compatible with the currently installed version of Helm or Notary.

To validate whether the container registry is accessible from the AKS cluster, run the following az aks check-acr command:

az aks check-acr --resource-group <MyResourceGroup> --name <MyManagedCluster> --acr <myacr>.azurecr.io

The following sections help you troubleshoot the most common errors that are displayed in Events in the output of the kubectl describe pod command.
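As a rough aid for navigating the sections that follow, the sketch below maps a "Failed to pull image" event message to the matching cause. The function name classify_pull_error is hypothetical, and the matching relies only on the error strings quoted in this article, so treat it as a starting point rather than a complete classifier.

```shell
# Hypothetical helper: print the cause in this article that matches an event message.
# The "no match for platform" pattern is tested before "not found" because
# the Cause 5 message also ends with "not found".
classify_pull_error() {
  case "$1" in
    *"401 Unauthorized"*)                  echo "Cause 1: 401 Unauthorized" ;;
    *"no match for platform in manifest"*) echo "Cause 5: No match for platform in manifest" ;;
    *"403 Forbidden"*)                     echo "Cause 3: 403 Forbidden" ;;
    *":443: i/o timeout"*)                 echo "Cause 4: 443 timeout" ;;
    *"not found"*)                         echo "Cause 2: Image not found" ;;
    *)                                     echo "Unknown" ;;
  esac
}

# Example usage (copy the message from the Events section of kubectl describe pod):
# classify_pull_error 'failed to fetch anonymous token: unexpected status: 403 Forbidden'
```

The order of the patterns matters only where one message contains another cause's keyword, which is why the platform check comes early.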

Cause 1: 401 Unauthorized error

An AKS cluster requires an identity. This identity can be either a managed identity or a service principal. If the AKS cluster uses a managed identity, the kubelet identity is used to authenticate with ACR. If the AKS cluster uses a service principal, the service principal itself is used to authenticate with ACR. Whichever identity is used, it must be authorized to pull images from the container registry. Otherwise, you may get the following "401 Unauthorized" error:

Failed to pull image "<acrname>.azurecr.io/<repository:tag>": [rpc error: code = Unknown desc = failed to pull and unpack image "<acrname>.azurecr.io/<repository:tag>": failed to resolve reference "<acrname>.azurecr.io/<repository:tag>": failed to authorize: failed to fetch oauth token: unexpected status: 401 Unauthorized

Several solutions can help you resolve this error, subject to the following constraints:

Solutions 2, 3, and 5 are applicable only to AKS clusters that use a service principal.

Solutions 1, 2, 3, and 4 are applicable for the Azure method of creating the role assignment at Container Registry level for AKS's identity.

Solutions 5 and 6 are applicable for the Kubernetes method of pulling images by using a Kubernetes image pull secret.

Solution 1: Make sure AcrPull role assignment is created for identity

The integration between AKS and Container Registry creates an AcrPull role assignment at container registry level for the AKS cluster's kubelet identity. Make sure that the role assignment is created.

To check whether the AcrPull role assignment is created, use one of the following methods:

Run the following command:

az role assignment list --scope /subscriptions/<subscriptionID>/resourceGroups/<resourcegroupname>/providers/Microsoft.ContainerRegistry/registries/<acrname> -o table

Check in the Azure portal by selecting Azure Container Registry > Access control (IAM) > Role assignments. For more information, see List Azure role assignments using the Azure portal.

Besides the AcrPull role, some built-in roles and custom roles can also contain the "Microsoft.ContainerRegistry/registries/pull/read" action. Check those roles if you've got any of them.

If the AcrPull role assignment isn't created, create it by configuring Container Registry integration for the AKS cluster with the following command:

az aks update -n <myAKSCluster> -g <myResourceGroup> --attach-acr <acr-resource-id>
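The role assignment check in this solution can be scripted along the following lines. This is a minimal sketch: acr_scope and has_acrpull are hypothetical helper names, and the commented usage assumes that the roleDefinitionName property is present in the output of az role assignment list.

```shell
# Hypothetical helper: build the registry resource ID that is passed to --scope.
acr_scope() {
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.ContainerRegistry/registries/%s\n' \
    "$1" "$2" "$3"
}

# Hypothetical helper: succeed if stdin (one role name per line) contains AcrPull.
has_acrpull() {
  grep -qx 'AcrPull'
}

# Example usage (requires a signed-in Azure CLI):
# az role assignment list --scope "$(acr_scope <subscriptionID> <resourcegroupname> <acrname>)" \
#     --query "[].roleDefinitionName" -o tsv | has_acrpull && echo "AcrPull is assigned"
```

A match on the role name alone doesn't confirm which identity holds the role, so compare the assignee with the cluster's identity as well.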

Solution 2: Make sure service principal isn't expired

Make sure that the secret of the service principal that's associated with the AKS cluster isn't expired. To check the expiration date of your service principal, run the following commands:

SP_ID=$(az aks show --resource-group <myResourceGroup> --name <myAKSCluster> \
    --query servicePrincipalProfile.clientId -o tsv)

az ad sp credential list --id "$SP_ID" --query "[].endDate" -o tsv

For more information, see Check the expiration date of your service principal.

If the secret is expired, update the credentials for the AKS cluster.
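To turn the returned date into a yes-or-no answer, a small comparison helper can be used. The sketch below assumes that the value printed by the credential list command is an ISO 8601 timestamp that begins with YYYY-MM-DD. The function name is_expired is hypothetical, and the comparison has day granularity only.

```shell
# Hypothetical helper: succeed if the credential end date is before today (UTC).
#   $1 = end date, for example 2025-03-01T00:00:00Z (only the first 10 characters are used)
#   $2 = optional reference day in YYYY-MM-DD form (defaults to the current UTC date)
is_expired() {
  end_day=$(printf '%s' "$1" | cut -c1-10 | tr -d '-')
  ref_day=$(printf '%s' "${2:-$(date -u +%Y-%m-%d)}" | tr -d '-')
  # Both values are now integers such as 20250301, so a numeric comparison is enough.
  [ "$end_day" -lt "$ref_day" ]
}

# Example usage:
# if is_expired "<end-date-from-the-command-output>"; then echo "Secret is expired"; fi
```

A credential that expires today is reported as still valid, so check the exact time if the date matches the current day.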

Solution 3: Make sure AcrPull role is assigned to correct service principal

In some cases, for example, when the service principal of the AKS cluster is replaced with a new one, the container registry role assignment still refers to the old service principal. To make sure that the container registry role assignment refers to the correct service principal, follow these steps:

To check the service principal that's used by the AKS cluster, run the following command:

az aks show --resource-group <myResourceGroup> --name <myAKSCluster> --query servicePrincipalProfile.clientId --output tsv

To check the service principal that's referenced by the container registry role assignment, run the following command:

az role assignment list --scope /subscriptions/<subscriptionID>/resourceGroups/<resourcegroupname>/providers/Microsoft.ContainerRegistry/registries/<acrname> -o table

Compare the two service principals. If they don't match, integrate the AKS cluster with the container registry again.

Solution 4: Make sure the kubelet identity is referenced in the AKS VMSS

When a managed identity is used for authentication with the ACR, the managed identity is known as the kubelet identity. By default, the kubelet identity is assigned at the AKS VMSS level. If the kubelet identity is removed from the AKS VMSS, the AKS nodes can't pull images from the ACR.

To find the kubelet identity of your AKS cluster, run the following command:

az aks show --resource-group <MyResourceGroup> --name <MyManagedCluster> --query identityProfile.kubeletidentity

Then, you can list the identities of the AKS VMSS by opening the VMSS from the node resource group and selecting Identity > User assigned in the Azure portal or by running the following command:

az vmss identity show --resource-group <NodeResourceGroup> --name <AksVmssName>

If the kubelet identity of your AKS cluster isn't assigned to the AKS VMSS, assign it back.
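The comparison between the kubelet identity and the identities on the scale set can be sketched as follows. The function name identity_assigned is hypothetical. The commented usage assumes that the kubelet identity exposes a resourceId property and that the scale set has at least one user-assigned identity, so that the keys() query returns a list. Resource IDs are compared without regard to case because Azure doesn't always return them with consistent casing.

```shell
# Hypothetical helper: succeed if the resource ID in $1 matches one of the
# resource IDs read from stdin (one per line), ignoring case.
identity_assigned() {
  grep -qixF -- "$1"
}

# Example usage (requires a signed-in Azure CLI):
# kubelet_id=$(az aks show --resource-group <MyResourceGroup> --name <MyManagedCluster> \
#     --query identityProfile.kubeletidentity.resourceId -o tsv)
# az vmss identity show --resource-group <NodeResourceGroup> --name <AksVmssName> \
#     --query "keys(userAssignedIdentities)" -o tsv | identity_assigned "$kubelet_id" \
#     || echo "Kubelet identity is missing from the VMSS"
```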

Note

Modifying the AKS VMSS using the IaaS APIs or from the Azure portal isn't supported, and no AKS operation can remove the kubelet identity from the AKS VMSS. This means that something unexpected removed it, for example, a manual removal performed by a team member. To prevent such removal or modification, you can consider using the NRGLockdown feature.

Because modifications to the AKS VMSS aren't supported, they don't propagate at the AKS level. To reassign the kubelet identity to the AKS VMSS, a reconciliation operation is needed. To do this, run the following command:

az aks update --resource-group <MyResourceGroup> --name <MyManagedCluster>

Solution 5: Make sure the service principal is correct and the secret is valid

If you pull an image by using an image pull secret, and that Kubernetes secret was created by using the values of a service principal, make sure that the associated service principal is correct and the secret is still valid. Follow these steps:

Run the following kubectl get and base64 command to see the values of the Kubernetes secret:

kubectl get secret <secret-name> --output="jsonpath={.data.\.dockerconfigjson}" | base64 --decode

Check the expiration date by running the following az ad sp credential list command. The username is the service principal value.

az ad sp credential list --id "<username>" --query "[].endDate" --output tsv

If necessary, reset the secret of that service principal by running the following az ad sp credential reset command:

az ad sp credential reset --name "$SP_ID" --query password --output tsv

Update or re-create the Kubernetes secret accordingly.
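The decoded secret usually also contains an auth field, which is the Base64 encoding of the username and password joined by a colon. As a minimal sketch, the hypothetical helper auth_username below extracts the username from such a value so that it can be compared with the service principal that you expect.

```shell
# Hypothetical helper: print the username stored in a dockerconfigjson "auth" value.
# The value is the Base64 encoding of "<username>:<password>".
auth_username() {
  printf '%s' "$1" | base64 --decode | cut -d: -f1
}

# Example usage (copy the auth value from the decoded secret):
# auth_username '<auth-value>'
```

Avoid pasting real auth values into shared terminals or logs because the same value also contains the password.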

Solution 6: Make sure the Kubernetes secret has the correct values of the container registry admin account

If you pull an image by using an image pull secret, and that Kubernetes secret was created by using values of container registry admin account, make sure that the values in the Kubernetes secret are the same as the values of the container registry admin account. Follow these steps:

Run the following kubectl get and base64 command to see the values of the Kubernetes secret:

kubectl get secret <secret-name> --output="jsonpath={.data.\.dockerconfigjson}" | base64 --decode

In the Azure portal, search for and select Container registries.

In the list of container registries, select your container registry.

In the navigation pane for the container registry, select Access keys.

In the Access keys page for the container registry, compare the container registry values with the values in the Kubernetes secret.

If the values don't match, update or re-create the Kubernetes secret accordingly.

Note

If a Regenerate password operation occurred, an operation that's named "Regenerate Container Registry Login Credentials" will be displayed in the Activity log page of the container registry. The Activity log has a 90-day retention period.

Cause 2: Image not found error

Failed to pull image "<acrname>.azurecr.io/<repository:tag>": [rpc error: code = NotFound desc = failed to pull and unpack image "<acrname>.azurecr.io/<repository:tag>": failed to resolve reference "<acrname>.azurecr.io/<repository:tag>": <acrname>.azurecr.io/<repository:tag>: not found

Solution: Make sure image name is correct

If you see this error, make sure that the image name is fully correct. Check the registry name, the registry login server, the repository name, and the tag. A common mistake is that the login server is specified as "azureacr.io" instead of "azurecr.io".

If the image name isn't fully correct, the 401 Unauthorized error may also occur because AKS always tries anonymous pull regardless of whether the container registry has enabled anonymous pull access.
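A quick format check can catch the login server typo before a deployment. The sketch below uses a hypothetical helper named is_acr_image and assumes the public Azure cloud suffix azurecr.io. It validates only the login server part of the reference, not the repository or the tag.

```shell
# Hypothetical helper: succeed if the image reference starts with a login server
# of the form <registry>.azurecr.io (public Azure cloud assumed).
is_acr_image() {
  server="${1%%/*}"   # text before the first "/"
  printf '%s\n' "$server" | grep -Eq '^[A-Za-z0-9-]+\.azurecr\.io$'
}

# Example usage:
# is_acr_image 'myregistry.azureacr.io/app:v1' || echo "Check the login server name"
```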

Cause 3: 403 Forbidden error

Failed to pull image "<acrname>.azurecr.io/<repository:tag>": rpc error: code = Unknown desc = failed to pull and unpack image "<acrname>.azurecr.io/<repository:tag>": failed to resolve reference "<acrname>.azurecr.io/<repository:tag>": failed to authorize: failed to fetch anonymous token: unexpected status: 403 Forbidden

Solution 1: Make sure AKS virtual network link is set in the container registry's Private DNS zone

If the network interface of the container registry's private endpoint and the AKS cluster are in different virtual networks, make sure that the virtual network link for the AKS cluster's virtual network is set in the Private DNS zone of the container registry. (That zone is named "privatelink.azurecr.io" by default.) If the virtual network link isn't in the Private DNS zone of the container registry, add it by using one of the following ways:

In the Azure portal, select the private DNS zone "privatelink.azurecr.io", select Virtual network links > Add under the Settings panel, and then select a name and the virtual network of the AKS cluster. Select OK.

Note

It's optional to select the "Enable auto registration" feature.

Create a virtual network link to the specified Private DNS zone by using Azure CLI.
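For the Azure CLI route, the call might look like the following sketch. The wrapper name link_aks_vnet_to_acr_zone and its three parameters are hypothetical. The sketch assumes that the zone uses the default name "privatelink.azurecr.io" and leaves auto registration turned off.

```shell
# Hypothetical wrapper: link the AKS virtual network to the registry's Private DNS zone.
#   $1 = resource group that contains the Private DNS zone
#   $2 = name to give the new virtual network link
#   $3 = name or resource ID of the AKS cluster's virtual network
link_aks_vnet_to_acr_zone() {
  az network private-dns link vnet create \
    --resource-group "$1" \
    --zone-name "privatelink.azurecr.io" \
    --name "$2" \
    --virtual-network "$3" \
    --registration-enabled false
}

# Example usage (requires a signed-in Azure CLI):
# link_aks_vnet_to_acr_zone <zone-resource-group> <link-name> <aks-vnet-resource-id>
```

If the virtual network is in a different resource group from the zone, pass its full resource ID instead of its name.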

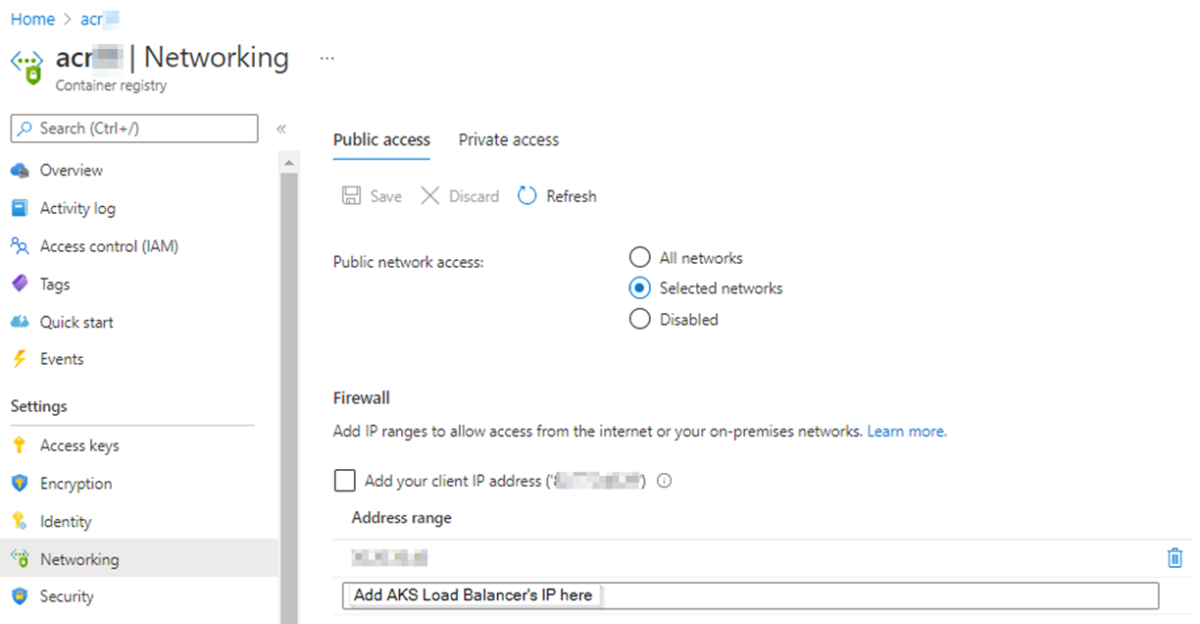

Solution 2: Add AKS Load Balancer's public IP address to allowed IP address range of the container registry

If the AKS cluster connects publicly to the container registry (NOT through a private link or an endpoint) and the public network access of the container registry is limited to selected networks, add AKS Load Balancer's public IP address to the allowed IP address range of the container registry:

Verify that the public network access is limited to selected networks.

In the Azure portal, navigate to the container registry. Under Settings, select Networking. On the Public access tab, check whether Public network access is set to Selected networks or Disabled.

Obtain the AKS Load Balancer's public IP address by using one of the following ways:

In the Azure portal, navigate to the AKS cluster. Under Settings, select Properties, select one of the virtual machine scale sets in the infrastructure resource group, and check the public IP address of the AKS Load Balancer.

Run the following command:

az network public-ip show --resource-group <infrastructure-resource-group> --name <public-IP-name> --query ipAddress -o tsv

Allow access from the AKS Load Balancer's public IP address by using one of the following ways:

Run the az acr network-rule add command as follows:

az acr network-rule add --name <acrname> --ip-address <AKS-load-balancer-public-IP-address>

For more information, see Add network rule to registry.

In the Azure portal, navigate to the container registry. Under Settings, select Networking. On the Public access tab, under Firewall, add the AKS Load Balancer's public IP address to Address range and then select Save. For more information, see Access from selected public network - portal.

Note

If Public network access is set to Disabled, switch it to Selected networks first.

Cause 4: 443 timeout error

Failed to pull image "<acrname>.azurecr.io/<repository:tag>": rpc error: code = Unknown desc = failed to pull and unpack image "<acrname>.azurecr.io/<repository:tag>": failed to resolve reference "<acrname>.azurecr.io/<repository:tag>": failed to do request: Head "https://<acrname>.azurecr.io/v2/<repository>/manifests/v1": dial tcp <acrprivateipaddress>:443: i/o timeout

Note

The "443 timeout" error occurs only when you connect privately to a container registry by using Azure Private Link.

Solution 1: Make sure virtual network peering is used

If the network interface of the container registry's private endpoint and the AKS cluster are in different virtual networks, make sure that virtual network peering is used for both virtual networks. You can check virtual network peering by running the Azure CLI command az network vnet peering list --resource-group <MyResourceGroup> --vnet-name <MyVirtualNetwork> --output table or in the Azure portal by selecting the VNETs > Peerings under the Settings panel. For more information about listing all peerings of a specified virtual network, see az network vnet peering list.

If virtual network peering is used for both virtual networks, make sure that the status is "Connected". If the status is "Disconnected", delete the peering from both virtual networks, and then re-create it. If the status is "Connected", see the troubleshooting guide: The peering status is "Connected".

For further troubleshooting, connect to one of the AKS nodes or pods, and then test the connectivity with the container registry at TCP level by using the Telnet or Netcat utility. Check the IP address with the nslookup <acrname>.azurecr.io command, and then run the telnet <ip-address-of-the-container-registry> 443 command.

For more information about connecting to AKS nodes, see Connect with SSH to Azure Kubernetes Service (AKS) cluster nodes for maintenance or troubleshooting.
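When you check the address that nslookup returns, it can help to confirm that the name resolves to a private address, because a public address suggests that the node isn't using the private endpoint at all. The sketch below uses a hypothetical helper named is_private_ipv4 and assumes that the virtual network uses RFC 1918 address space, which isn't guaranteed for every deployment.

```shell
# Hypothetical helper: succeed if the IPv4 address is in an RFC 1918 private range
# (10.0.0.0/8, 172.16.0.0/12, or 192.168.0.0/16).
is_private_ipv4() {
  case "$1" in
    10.*|192.168.*)                        return 0 ;;
    172.1[6-9].*|172.2[0-9].*|172.3[01].*) return 0 ;;
    *)                                     return 1 ;;
  esac
}

# Example usage:
# is_private_ipv4 '<ip-address-of-the-container-registry>' || echo "Registry resolves to a public address"
```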

Solution 2: Use Azure Firewall Service

If the network interface of the container registry's private endpoint and the AKS cluster are in different virtual networks, in addition to virtual network peering, you may use Azure Firewall Service to set up a Hub-spoke network topology in Azure. When you set up the firewall rule, you need to use network rules to explicitly allow the outbound connection to the container registry private endpoint IP addresses.

Cause 5: No match for platform in manifest

The host operating system (node OS) is incompatible with the image that's used for the pod or container. For example, if you schedule a pod to run a Linux container on a Windows node, or a Windows container on a Linux node, the following error occurs:

Failed to pull image "<acrname>.azurecr.io/<repository:tag>":
[
  rpc error:
  code = NotFound
  desc = failed to pull and unpack image "<acrname>.azurecr.io/<repository:tag>": no match for platform in manifest: not found,
]

This error can occur for an image that's pulled from any source, as long as the image is incompatible with the host OS. The error isn't limited to images that are pulled from the container registry.

Solution: Configure the nodeSelector field correctly in your pod or deployment

Specify the correct nodeSelector field in the configuration settings of your pod or deployment. The correct value for this field's kubernetes.io/os setting ensures that the pod will be scheduled on the correct type of node. The following table shows how to set the kubernetes.io/os setting in YAML:

| Container type | YAML setting |
|---|---|
| Linux container | "kubernetes.io/os": linux |
| Windows container | "kubernetes.io/os": windows |

For example, the following YAML code describes a pod that needs to be scheduled on a Linux node:

apiVersion: v1
kind: Pod
metadata:
  name: aspnetapp
  labels:
    app: aspnetapp
spec:
  containers:
  - image: "mcr.microsoft.com/dotnet/core/samples:aspnetapp"
    name: aspnetapp-image
    ports:
    - containerPort: 80
      protocol: TCP
  nodeSelector:
    "kubernetes.io/os": linux

More information

If the troubleshooting guidance in this article doesn't help you resolve the issue, here are some other things to consider:

Check the network security groups and route tables associated with subnets, if you've got any of those items.

If a virtual appliance like a firewall controls the traffic between subnets, check the firewall and its access rules.

Third-party information disclaimer

The third-party products that this article discusses are manufactured by companies that are independent of Microsoft. Microsoft makes no warranty, implied or otherwise, about the performance or reliability of these products.

Contact us for help

If you have questions or need help, create a support request, or ask Azure community support. You can also submit product feedback to Azure feedback community.
